
Can AI predict patient outcomes based on genomic data?

21 March 2025

Introduction to AI and Genomics

In today’s healthcare landscape, artificial intelligence (AI) has emerged as a transformative tool that can process vast volumes of complex data, offering solutions from early disease detection to personalized treatment strategies. One of the most promising domains is the prediction of patient outcomes using genomic data. By leveraging machine learning algorithms and advanced computational infrastructures, AI can uncover patterns and correlations in genomic datasets that can inform prognosis, predict treatment response, and even guide the design of novel therapies. This integration of AI and genomics is not only theoretically appealing but is already showing real-world promise through various applications and studies.

Basics of Artificial Intelligence

Artificial intelligence refers to computer systems designed to perform tasks usually associated with human intelligence, such as recognizing patterns and making predictions. Most AI applied to biomedical data is machine learning, which spans supervised and unsupervised learning techniques. Specific methods include support vector machines, random forests, evolutionary algorithms, and neural networks; deep learning refers to neural networks with many layers. In the biomedical context, these methods have advanced from simple regression and classification models to deep learning systems that can handle multi-dimensional data. For instance, convolutional neural networks (CNNs) have been used in medical imaging diagnostics and, increasingly, in genomic data analyses to predict outcomes by learning intricate patterns that traditional statistical methods might miss.

By learning from labeled examples, AI models can recognize subtle patterns, such as gene expression levels or mutation signatures, that correlate with disease progression. In genomics, where a single whole genome comprises billions of data points, AI provides the computational capacity needed to identify clinically relevant markers and patterns that could enable personalized prognosis and therapeutic decision-making.

Overview of Genomic Data

Genomic data refer to the complex information encoded within an individual’s DNA and RNA. This includes not only the entire sequence of nucleotides in the genome but also epigenetic markers, gene expression profiles, and mutation patterns that contribute to disease phenotypes. Modern next-generation sequencing (NGS) technologies generate massive amounts of such data, making it possible to capture the genetic blueprint underlying patient-specific disease processes.

The nature of genomic data is inherently high-dimensional and heterogeneous. It can include point mutations, structural variants, copy number alterations, and methylation status, among others, which together provide a holistic picture of how genetic alterations drive disease and influence treatment responses. Combining genomic data with other clinical parameters—such as imaging data, electronic health records, and pathology reports—further enriches the dataset and increases the potential for deep insight through AI-enabled analysis. This comprehensive view of a patient’s molecular profile holds immense potential for predicting outcomes ranging from survival probabilities to the likelihood of response to particular therapeutic regimens.

AI Techniques in Genomic Data Analysis

The application of AI to genomic data analysis has evolved rapidly in the past decade. By employing algorithms that process and integrate disparate data types, AI systems have, in a growing number of studies, predicted patient outcomes with clinically useful accuracy. The techniques used range from standard machine learning methods to newer deep learning architectures designed for the high-dimensional feature spaces typical of genomic datasets.

Common AI Algorithms

A wide range of AI algorithms have been employed to analyze genomic data. Some of the most common techniques include:

- Supervised Learning Algorithms: These include support vector machines (SVM), random forests, and ensemble methods that have been utilized to pinpoint gene signatures associated with disease outcomes. By training on labelled datasets (e.g., genomes from patients with known outcomes), these algorithms can learn the relationship between genomic features and prognosis.

- Deep Learning Architectures: Using neural networks, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), deep learning methods can uncover complex nonlinear relationships within genomic data. These methods have performed strongly in tasks such as variant calling and in predicting treatment responses from integrated multi-omics data.

- Unsupervised Learning Techniques: Clustering methods such as self-organizing maps, along with dimensionality-reduction techniques such as principal component analysis (PCA), help discover intrinsic structure in unlabeled data; for example, grouping tumors by their mutation profiles can reveal new subtypes. Such discoveries can lead to the identification of novel biomarkers correlated with patient outcomes.

- Hybrid Models: In some settings, AI algorithms combine supervised and unsupervised techniques, or integrate statistical models with machine learning, to manage the heterogeneity and high dimensionality of genomic data. For example, integrating gene regulatory network analyses with pattern recognition algorithms helps in reconstructing the pathways that drive disease progression.
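As a minimal illustration of the supervised setting described above, the sketch below fits a logistic regression (a simple supervised classifier standing in for the SVMs and random forests named in the list) to a synthetic cohort of binary mutation indicators. The gene names, the cohort, and the hyperparameters are all hypothetical; real studies would use an established library and far larger, properly validated datasets.

```python
import math
import random

def sigmoid(z: float) -> float:
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def predict_proba(w, b, x):
    """Predicted probability of a poor outcome for one patient's feature vector."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

def train_logistic(X, y, lr=0.1, epochs=500, l2=0.01):
    """Fit weights and bias by batch gradient descent on L2-regularized log loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi  # gradient of log loss w.r.t. the logit
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        for j in range(d):
            w[j] -= lr * (grad_w[j] / n + l2 * w[j])
        b -= lr * grad_b / n
    return w, b

# Hypothetical synthetic cohort: columns flag mutations in GENE_A, GENE_B, GENE_C;
# label 1 means a poor outcome. By construction only GENE_A affects the outcome.
random.seed(0)
X, y = [], []
for _ in range(200):
    row = [random.randint(0, 1) for _ in range(3)]
    p_poor = 0.9 if row[0] == 1 else 0.1
    X.append(row)
    y.append(1 if random.random() < p_poor else 0)

w, b = train_logistic(X, y)
print("weights:", [round(v, 2) for v in w], "bias:", round(b, 2))
print("P(poor | GENE_A mutated) =", round(predict_proba(w, b, [1, 0, 0]), 2))
```

On this toy data the learned weight for GENE_A should dominate, mirroring how a supervised model links a genomic feature to prognosis; with thousands of genes and few patients, regularization and validation become essential.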

These algorithms not only offer predictions regarding patient outcomes but also provide insights into the underlying biological mechanisms, thereby bridging the gap between computational predictions and biological interpretability.
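PCA, listed above among the unsupervised techniques, can be sketched in a few lines. The example below is a minimal sketch, assuming a tiny hypothetical expression matrix (rows are tumors, columns are genes); it approximates the first principal component by power iteration on the covariance matrix and projects each tumor onto it. Production analyses would use a linear-algebra library and examine several components.

```python
import math

def center_columns(X):
    """Subtract each column (gene) mean so the data are centered at the origin."""
    d = len(X[0])
    means = [sum(row[j] for row in X) / len(X) for j in range(d)]
    return [[row[j] - means[j] for j in range(d)] for row in X]

def covariance_matrix(Xc):
    """Sample covariance of centered data (genes x genes)."""
    n, d = len(Xc), len(Xc[0])
    return [[sum(r[i] * r[j] for r in Xc) / (n - 1) for j in range(d)] for i in range(d)]

def first_principal_component(X, iters=200):
    """Approximate the top eigenvector of the covariance matrix by power iteration
    and return it together with each sample's score (projection) on it."""
    Xc = center_columns(X)
    C = covariance_matrix(Xc)
    d = len(C)
    v = [1.0] * d  # starting guess; assumed not orthogonal to the top eigenvector
    for _ in range(iters):
        w = [sum(C[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    scores = [sum(r[j] * v[j] for j in range(d)) for r in Xc]
    return v, scores

# Hypothetical expression matrix: 6 tumors x 3 genes. The first three tumors
# overexpress genes 0 and 1; gene 2 is roughly constant.
X = [[5.0, 4.8, 1.0], [5.2, 5.1, 1.1], [4.9, 5.0, 0.9],
     [1.0, 1.2, 1.0], [1.1, 0.9, 1.1], [0.8, 1.0, 0.9]]
component, scores = first_principal_component(X)
print("loadings:", [round(c, 3) for c in component])
print("scores:", [round(s, 2) for s in scores])
```

The scores separate the two synthetic groups by sign. The sign of a principal component is arbitrary, so only the separation, not which group comes out positive, carries meaning.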

Data Processing and Integration

One of the key strengths of AI lies in its ability to process and integrate heterogeneous datasets. When applied to genomic data, AI systems must handle data preprocessing, normalization, and integration of diverse datasets such as gene expression profiles, mutation catalogs, and epigenomic modifications.

- Data Preprocessing: Genomic datasets often require rigorous cleaning, normalization, and dimensionality reduction to remove noise and focus on the most relevant features. AI pipelines can automate many of these steps, making downstream analyses more reproducible and efficient.

- Multimodal Data Integration: To improve the predictive performance, AI systems frequently combine genomic data with other clinical parameters. For example, digital pathology images, electronic health record (EHR) data, and genomic sequence data can be integrated to provide a more comprehensive view of the patient’s disease state. This multimodal integration is crucial because it empowers AI models to discern interactions between clinical features and molecular alterations.

- Feature Extraction and Representation Learning: Deep learning models can learn hierarchical features directly from raw or minimally processed data, reducing the need for manual feature engineering. In the genomic context, model inputs range from engineered summaries such as mutation burden to gene expression levels and epigenetic modifications, and the learned representations are then used to build predictive models of patient outcomes.

- Knowledge Graphs and Ontologies: To enhance explanatory power, some systems integrate genomic data with curated databases and ontologies, constructing knowledge graphs that relate genetic variants to clinical outcomes. This aids not only in prediction but also in providing explainable insights into how a specific genetic alteration might be driving disease progression.

By processing large quantities of genomic data and combining them with other clinical variables, these AI techniques facilitate the discovery of previously unnoticed associations that are critical for predicting patient outcomes.
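The preprocessing steps described above (normalization and dimensionality reduction) can be sketched as follows. This is a minimal example, assuming a small hypothetical matrix of raw expression counts (rows are patients, columns are genes); it applies a log2(x + 1) transform, keeps the most variable genes as a crude form of feature selection, and standardizes them. In a real pipeline these statistics should be estimated on the training data only, so that information from the test set does not leak into the model.

```python
import math
import statistics

def log_transform(counts):
    """log2(x + 1) transform, a common step for skewed expression counts."""
    return [[math.log2(v + 1.0) for v in row] for row in counts]

def top_variance_genes(X, k):
    """Return indices of the k genes (columns) with the highest sample variance."""
    d = len(X[0])
    variances = [statistics.variance([row[j] for row in X]) for j in range(d)]
    return sorted(range(d), key=lambda j: variances[j], reverse=True)[:k]

def zscore_columns(X, cols):
    """Keep only the selected columns, standardized to mean 0 and standard deviation 1."""
    out = [[] for _ in X]
    for j in cols:
        col = [row[j] for row in X]
        mu = statistics.mean(col)
        sd = statistics.stdev(col) or 1.0  # guard against constant columns
        for i, v in enumerate(col):
            out[i].append((v - mu) / sd)
    return out

counts = [[0, 100, 5], [3, 400, 5], [1, 50, 6], [7, 900, 5]]  # hypothetical: 4 patients x 3 genes
logged = log_transform(counts)
keep = top_variance_genes(logged, k=2)
Z = zscore_columns(logged, keep)
print("kept genes:", keep)
```

Here the nearly constant third gene is discarded. Variance filtering is only one of many feature-selection strategies and ignores the outcome entirely.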

Applications in Predicting Patient Outcomes

The integration of AI with genomic data has given rise to several applications aimed at predicting patient outcomes. Clinical decision-making is increasingly enriched by AI models that use genomic signatures to forecast treatment responses, disease progression, survival probabilities, and adverse events. These applications span various stages of disease management—from early detection and prognostication to personalized therapy selection.

Case Studies and Examples

A growing body of studies, along with a number of patent filings, describes AI systems that predict patient outcomes from genomic data:

- Cancer Prognosis and Genomic Prediction: A number of studies have reported AI models that analyze the genomic sequences of cancer patients to predict life expectancy and treatment outcomes. Patent filings, for instance, describe AI methods that analyze genome sequences and cellular alterations to estimate patient survival probabilities, combining genomic markers with clinical data to predict prognosis. These systems use deep learning algorithms that analyze genetic variants and molecular patterns to produce actionable prognostic information.

- Multimodal Predictions: Work from research groups has shown that integrating genomic data with other diagnostic modalities (e.g., histology, imaging, and clinical records) leads to more comprehensive predictive models. A notable study combined genomic sequencing and pathology image analysis to yield a deep learning-based algorithm that predicts patient outcomes more accurately than single-source data models, demonstrating improvements in prognostic accuracy across multiple types of cancer. This kind of integration supports the view that while genomics alone provides significant insights, the combination with other modalities further enhances prediction quality.

- Predictive Models for Treatment Response: Patented systems describe methods for predicting survival under a given treatment using enriched, subject-specific datasets that incorporate mutation profiles across cancer types. One example is a system that not only predicts whether a patient is likely to respond favorably to a particular chemotherapy regimen but also checks whether the rationale behind that prediction is consistent with oncology guidelines, combining prediction with guideline validation. This approach highlights the practical utility of AI in personalized medicine.

- Genomic Signature Identification in Complex Diseases: In disorders other than cancer, AI techniques have been applied to large genomic datasets to identify signatures that are predictive of disease progression and outcome. For example, studies have applied AI methods to stratify patients with complex diseases such as cardiovascular disorders or neurodegenerative conditions based on their genomic profiles, linking specific genetic variants to estimated risk levels and treatment outcomes. In these applications, AI not only serves as a prediction tool but also informs the understanding of underlying disease mechanisms.

Each of these case studies illustrates that by harnessing the power of AI to analyze high-dimensional genomic data, clinicians and researchers can predict patient outcomes with increasing precision, support decision-making, and ultimately improve patient care.

Performance Metrics and Evaluation

Defining the success of AI-driven predictive models requires robust performance metrics and thorough evaluation protocols. In the context of genomic-based predictions:

- Area Under the Receiver Operating Characteristic Curve (AUROC): This is one of the most common metrics for evaluating a model's discrimination, that is, how well it ranks patients who experience an outcome above those who do not. An AUROC of 0.5 corresponds to random ranking and 1.0 to perfect separation. Many studies report AUROC values above 0.8 when predicting outcomes such as mortality or disease progression, which is generally regarded as good discrimination. For instance, one model achieved an AUROC of 0.935 when predicting patient survival from genomic data.

- Precision, Recall, and F1-Score: These threshold-dependent metrics describe the kinds of errors a model makes. High precision (positive predictive value) means the model's positive predictions are reliable, while high recall (sensitivity) means the model detects most actual cases. The F1-score, the harmonic mean of precision and recall, summarizes the balance between them, which matters in clinical applications where both false positives and false negatives can have serious consequences.

- Calibration Plots and Bland-Altman Analyses: Calibration plots compare predicted probabilities with observed outcome frequencies, showing whether a model overestimates or underestimates the true probability of an outcome. Bland-Altman analyses serve a related purpose for continuous predictions, plotting the differences between predicted and measured values against their means to reveal systematic bias. Such evaluation is critical when using AI to inform therapy decisions.

- Clinical Impact Metrics: Beyond statistical performance, models are increasingly evaluated using metrics that relate directly to patient outcomes, such as improvement in overall survival, reduction in adverse event rates, and better resource allocation (e.g., ICU admissions). Prospective studies and, ideally, randomized controlled trials, the gold standard for clinical evidence, are increasingly emphasized for evaluating the real-world impact of AI predictions on patient care.
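To make the AUROC and precision/recall definitions above concrete, the sketch below computes AUROC directly from its probabilistic interpretation (the chance that a randomly chosen positive case is scored above a randomly chosen negative one) and computes precision, recall, and F1 at a fixed decision threshold. The labels, scores, and the 0.5 threshold are hypothetical; the pairwise loop is quadratic in cohort size and is meant for illustration rather than large datasets.

```python
def auroc(y_true, scores):
    """AUROC as the fraction of positive-negative pairs ranked correctly
    (ties count one half), i.e. the normalized Mann-Whitney U statistic."""
    pos = [s for s, y in zip(scores, y_true) if y == 1]
    neg = [s for s, y in zip(scores, y_true) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def precision_recall_f1(y_true, scores, threshold=0.5):
    """Threshold the scores, then count true positives, false positives, false negatives."""
    tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= threshold)
    fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= threshold)
    fn = sum(1 for y, s in zip(y_true, scores) if y == 1 and s < threshold)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

y_true = [1, 1, 1, 0, 0, 0]               # hypothetical outcomes (1 = event)
scores = [0.9, 0.8, 0.4, 0.6, 0.2, 0.1]   # hypothetical model risk scores
print(auroc(y_true, scores))               # 8 of 9 positive-negative pairs ranked correctly
print(precision_recall_f1(y_true, scores))
```

AUROC does not depend on any threshold, whereas precision, recall, and F1 change as the threshold moves, which is why clinical studies usually report both kinds of metric.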

The rigorous evaluation of AI models, integrating both technical performance and clinical outcome measures, is central to validating the use of such systems in healthcare. The performance metrics not only showcase the predictive power of the AI system but also guide improvements and calibrations needed for accurate real-world deployment.
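The calibration plots described in the metrics list above are built from a binned comparison of predicted and observed event rates. The sketch below is a minimal version, assuming equal-width probability bins and hypothetical predictions; the single summary number it computes, often called the expected calibration error, is one common choice among several and is sensitive to the number of bins.

```python
def calibration_table(y_true, probs, n_bins=5):
    """Group predictions into equal-width probability bins and compare the mean
    predicted probability with the observed event rate in each non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for y, p in zip(y_true, probs):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes into the last bin
        bins[idx].append((y, p))
    table = []
    for idx, members in enumerate(bins):
        if not members:
            continue
        mean_pred = sum(p for _, p in members) / len(members)
        observed = sum(y for y, _ in members) / len(members)
        table.append((idx, len(members), mean_pred, observed))
    return table

def expected_calibration_error(table, total):
    """Bin-size-weighted mean absolute gap between predicted and observed rates."""
    return sum(n * abs(pred - obs) for _, n, pred, obs in table) / total

probs = [0.1, 0.1, 0.9, 0.9]   # hypothetical predicted risks
y_true = [0, 0, 1, 1]          # hypothetical observed outcomes
table = calibration_table(y_true, probs)
ece = expected_calibration_error(table, len(probs))
for idx, n, pred, obs in table:
    print(f"bin {idx}: n={n}, mean predicted={pred:.2f}, observed={obs:.2f}")
```

Plotting the mean predicted value against the observed rate for each bin gives the calibration plot; points on the diagonal indicate good calibration.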

Ethical and Privacy Considerations

As AI becomes increasingly involved in predicting patient outcomes from genomic data, it raises several ethical and privacy issues. These concerns are particularly acute in genomics because genetic information is uniquely sensitive and permanent. Protecting patient privacy, addressing algorithmic bias, and ensuring transparency are all critical to maintaining trust in AI applications.

Data Privacy Issues

Genomic data is inherently personal, and protecting patient privacy when using such data in AI models is paramount. Privacy regulations such as HIPAA in the United States and the GDPR in Europe underscore the need for stringent safeguards on personal data. AI systems that use genomic data should combine encryption and secure storage, which reduce the risk of breaches, with de-identification or anonymization, which limits the harm if data are exposed.

Moreover, patient data should be de-identified during preprocessing so that individual identities are not exposed when datasets are combined or analyzed. De-identification is harder for genomic data than for most other records: a genome is intrinsically identifying, and linkage to public or genealogical databases can re-identify individuals even after names are removed. This potential for misuse or unintended disclosure poses distinct ethical challenges that demand careful handling by AI developers and data custodians.
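One common building block for de-identification is pseudonymization: direct identifiers are removed and the patient ID is replaced with a keyed hash so that records can still be linked. The sketch below uses Python's standard `hmac` module; the record fields, the variant string, and the list of identifiers to drop are hypothetical. Pseudonymization is not anonymization: the genomic content itself remains identifying, and under the GDPR pseudonymized data are still personal data.

```python
import hashlib
import hmac
import secrets

def pseudonymize(patient_id: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).
    The same ID and key always give the same token, so records stay linkable,
    but the token cannot feasibly be reversed or recomputed without the key."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()

def strip_direct_identifiers(record: dict, key: bytes,
                             drop=("name", "date_of_birth", "address")) -> dict:
    """Return a copy of the record without direct identifiers, keyed by a pseudonym."""
    cleaned = {k: v for k, v in record.items() if k not in drop and k != "patient_id"}
    cleaned["pseudo_id"] = pseudonymize(record["patient_id"], key)
    return cleaned

# In practice the key would be held by a data custodian, separate from the research data.
key = secrets.token_bytes(32)
record = {"patient_id": "MRN-001", "name": "Jane Doe", "date_of_birth": "1970-01-01",
          "variants": ["GENE_A:c.123A>G"]}  # hypothetical record
safe = strip_direct_identifiers(record, key)
print(sorted(safe))
```

A keyed hash is used rather than a plain hash because patient IDs come from a small, guessable space; without the secret key, an attacker cannot simply hash candidate IDs and match them.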

Ethical Implications in AI Predictions

Beyond privacy, the ethical implications of using AI to predict patient outcomes are wide-ranging:

- Algorithmic Transparency and Explainability: One of the most discussed ethical issues is the “black box” nature of many AI algorithms. Physicians and patients may be reluctant to rely on AI outputs if they cannot understand the basis of the predictions. Although techniques like explainable AI (XAI) are emerging to address these concerns, current implementations often do not convey the underlying rationale in a clear and interpretable manner. This lack of transparency can undermine trust when clinicians are unable to verify that the predictions are biologically plausible or clinically sound.

- Bias and Fairness: AI systems are only as good as the data on which they are trained. If genomic datasets are non-representative or biased towards certain populations, the predictions generated may inadvertently reinforce disparities in healthcare outcomes. It is essential that ethical guidelines incorporate measures to detect and mitigate bias in AI models. This includes ensuring diverse datasets, routine auditing for skewed outputs, and aligning algorithmic decisions with equitable clinical practices.

- Autonomy and Informed Consent: Using genomic data for predictive analytics raises questions about patient consent. Patients must be fully informed about how their genomic data are being used, especially when such data influence decisions that affect their clinical outcomes. Ethical frameworks must balance the need for data-driven insights with respect for individual autonomy and the right to privacy.

- Liability and Accountability: When AI predictions directly inform patient care, the question of who is responsible for errors or adverse outcomes becomes complex. Liability might be shared among AI developers, healthcare providers, and institutions. Without clear guidelines, the integration of AI in clinical decision-making may inadvertently expose patients to harm without consistent accountability measures in place.

By addressing these privacy and ethical considerations thoroughly, developers and clinicians can ensure that the deployment of AI-driven genomic prediction tools is both responsible and aligned with the fundamental principles of medical ethics.
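The routine auditing for skewed outputs mentioned under Bias and Fairness can start with something as simple as comparing a performance metric across patient subgroups. The sketch below, assuming hypothetical group labels and predictions, computes sensitivity per group and the largest gap between groups. Sensitivity is only one of several fairness-relevant metrics, and which gap matters depends on the clinical use.

```python
from collections import defaultdict

def subgroup_sensitivity(y_true, y_pred, groups):
    """Sensitivity (recall) per subgroup: of the true positive cases in each group,
    what fraction did the model flag?"""
    tp = defaultdict(int)
    pos = defaultdict(int)
    for y, yhat, g in zip(y_true, y_pred, groups):
        if y == 1:
            pos[g] += 1
            if yhat == 1:
                tp[g] += 1
    return {g: tp[g] / pos[g] for g in pos}

def max_gap(metric_by_group):
    """Difference between the best- and worst-served groups."""
    values = list(metric_by_group.values())
    return max(values) - min(values)

# Hypothetical audit data: two ancestry groups, "A" and "B", five patients each.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 1, 0, 1, 0, 0, 1, 1]
groups = ["A"] * 5 + ["B"] * 5
sens = subgroup_sensitivity(y_true, y_pred, groups)
print(sens, "gap:", max_gap(sens))
```

In this toy audit the model misses half of the true cases in group B but none in group A, the kind of disparity that would prompt a review of training data representativeness.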

Challenges and Future Directions

While AI has shown great promise in predicting patient outcomes using genomic data, several challenges remain that must be overcome for these methods to be widely integrated into clinical practice. Looking forward, research and development in this domain continue to address technical, ethical, and practical barriers.

Technical Challenges

1. High-Dimensional Data and Complexity:

Genomic datasets are enormous and complex, comprising billions of data points that describe genetic variants, expression profiles, methylation patterns, and more. Managing this “big data” effectively requires substantial computational infrastructure and algorithms that can process large volumes of data without sacrificing accuracy. Because the number of measured features (for example, tens of thousands of genes) usually far exceeds the number of patients, models can easily fit noise in the training set. Overfitting, noise, and high dimensionality therefore remain persistent challenges that call for careful model selection, robust feature selection, and dimensionality reduction techniques.
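Overfitting in this many-features, few-patients setting is usually detected with cross-validation. The sketch below is a minimal, library-free k-fold loop, assuming hypothetical `fit` and `score` callables supplied by the caller (a trivial majority-class baseline is included only to make it runnable). The key design choice is that any feature selection runs inside `fit`, because selecting genes on the full dataset before splitting produces optimistically biased estimates.

```python
import random

def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and split them into k folds of near-equal size."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def cross_validate(X, y, k, fit, score, seed=0):
    """Run k-fold cross-validation. `fit(X_train, y_train)` must return a model and
    perform ALL data-dependent preprocessing (e.g. feature selection) internally,
    so that held-out patients never influence training."""
    folds = k_fold_indices(len(X), k, seed)
    results = []
    for i, test_idx in enumerate(folds):
        train_idx = [j for m, f in enumerate(folds) if m != i for j in f]
        model = fit([X[j] for j in train_idx], [y[j] for j in train_idx])
        results.append(score(model, [X[j] for j in test_idx], [y[j] for j in test_idx]))
    return results

def fit_majority(X_train, y_train):
    """Hypothetical baseline model: always predict the most common training label."""
    return max(set(y_train), key=y_train.count)

def accuracy(model, X_test, y_test):
    """Accuracy of the constant-label baseline on a held-out fold."""
    return sum(1 for t in y_test if t == model) / len(y_test)

folds = k_fold_indices(10, 5)
print(cross_validate([[i] for i in range(10)], [0] * 10, 5, fit_majority, accuracy))
```

A large gap between training accuracy and the cross-validated scores is the usual warning sign that a model has memorized noise rather than learned a generalizable genomic signal.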

2. Integration of Multimodal Data:

One of the promises of personalized medicine is integrating genomic data with clinical records, imaging studies, and other biomarkers. However, merging datasets from such different sources poses significant technical challenges due to differences in data format, scale, and quality. Efficient data integration requires developing bespoke pipelines and novel algorithms that can harmonize these diverse sources, thereby improving the predictive performance of AI models.
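One simple way to combine modalities is early fusion, in which each patient's features from different sources are concatenated into a single vector. The sketch below assumes hypothetical inputs (a fixed-length genomic feature list that may be missing, plus a dictionary of clinical variables) and illustrates two of the harmonization issues named above: consistent column order and explicit handling of a missing modality. In practice, features on different scales would also need normalization, and intermediate or late fusion architectures are common alternatives.

```python
from typing import Dict, List, Optional

def fuse_patient(genomic: Optional[List[float]], clinical: Dict[str, float],
                 genomic_dim: int, clinical_keys: List[str]) -> List[float]:
    """Early fusion: concatenate genomic and clinical features into one vector.

    A missing genomic profile is zero-filled and flagged with an indicator feature,
    so a model can distinguish 'not measured' from 'measured as zero'. Clinical
    fields are assumed complete in this sketch (a missing key raises KeyError).
    """
    if genomic is None:
        genomic_part, missing_flag = [0.0] * genomic_dim, 1.0
    else:
        if len(genomic) != genomic_dim:
            raise ValueError(f"expected {genomic_dim} genomic features, got {len(genomic)}")
        genomic_part, missing_flag = [float(v) for v in genomic], 0.0
    # A fixed key order keeps columns aligned across patients from different sources.
    clinical_part = [float(clinical[key]) for key in clinical_keys]
    return genomic_part + clinical_part + [missing_flag]

KEYS = ["age", "stage"]  # hypothetical clinical variables
with_genome = fuse_patient([0.5, -1.2], {"stage": 3, "age": 64}, 2, KEYS)
without_genome = fuse_patient(None, {"age": 50, "stage": 1}, 2, KEYS)
print(with_genome)
print(without_genome)
```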

3. Explainability and Model Transparency:

Despite advances in deep learning, many AI models remain “black boxes” that produce predictions without clear indications of which features contributed to the outcome. This lack of interpretability is particularly problematic when decisions are made on the basis of genomic data, where understanding causality is critical for clinical acceptance. Techniques for explainable AI are developing, but there is often a trade-off between performance and interpretability that must be managed.
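Permutation importance is one widely used, model-agnostic explainability technique: it measures how much a model's performance degrades when one feature's values are shuffled across patients. The sketch below assumes a hypothetical `model` function and a toy dataset. It indicates which inputs the model relies on, not whether those inputs are causal, which is exactly the gap between interpretability and causality noted above; correlated features can also share or mask importance.

```python
import random
from typing import Callable, List, Sequence

def accuracy_of(predict, X, y):
    """Fraction of patients whose outcome the model predicts correctly."""
    return sum(1 for xi, yi in zip(X, y) if predict(xi) == yi) / len(y)

def permutation_importance(predict: Callable[[Sequence[float]], int],
                           X: List[List[float]], y: List[int],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Importance of feature j = mean drop in accuracy when column j is shuffled
    across patients, which breaks its link to the outcome but keeps its distribution."""
    rng = random.Random(seed)
    baseline = accuracy_of(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy_of(predict, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical model that uses only feature 0 (e.g. a mutation flag) and ignores feature 1.
def model(x):
    return 1 if x[0] > 0.5 else 0

X = [[0, 1], [1, 0], [0, 0], [1, 1], [0, 1], [1, 0], [0, 0], [1, 1]]
y = [model(row) for row in X]  # toy labels the model fits perfectly
imp = permutation_importance(model, X, y)
print(imp)
```

Shuffling the ignored feature leaves accuracy unchanged, so its importance is zero, while shuffling the feature the model depends on lowers accuracy.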

4. Scalability and Real-World Deployment:

Translating AI models from controlled research environments to real-world clinical settings can uncover new technical deficits—such as reduced performance when confronted with heterogeneous data or unexpected outliers. Robust measures of model calibration, rigorous external validation, and prospective trials are required to ensure that the performance metrics indicated in research settings hold true in practical applications.

5. Data Quality and Annotation:

The quality of genomic data is often limited by technical variability in sequencing platforms and the inherent noise in biological systems. Many datasets suffer from missing or inconsistent data, and obtaining high-quality annotations is resource-intensive. Improving the quality of these datasets through standardization and robust data curation techniques is essential for training reliable AI models.
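For the missing or inconsistent values mentioned above, a common baseline is to drop features that are missing too often and impute the rest. The sketch below assumes a hypothetical matrix in which `None` marks a failed measurement, and a 20% missingness threshold chosen purely for illustration. Median imputation is simple but ignores why values are missing, and, as with other preprocessing, the medians should be computed from the training data only.

```python
import statistics
from typing import List, Optional

def filter_and_impute(X: List[List[Optional[float]]], max_missing_frac: float = 0.2):
    """Drop features (columns) with too many missing values, then fill the remaining
    gaps with that column's median. Returns (kept_column_indices, filled_matrix)."""
    n, d = len(X), len(X[0])
    kept = []
    medians = {}
    for j in range(d):
        observed = [row[j] for row in X if row[j] is not None]
        if observed and (n - len(observed)) / n <= max_missing_frac:
            kept.append(j)
            medians[j] = statistics.median(observed)
    filled = [[row[j] if row[j] is not None else medians[j] for j in kept] for row in X]
    return kept, filled

# Hypothetical matrix: 5 samples x 3 features; None marks a failed measurement.
X = [[1.0, None, 3.0],
     [2.0, None, None],
     [3.0, 5.0, 4.0],
     [None, None, 5.0],
     [5.0, 1.0, 6.0]]
kept, filled = filter_and_impute(X)
print(kept)
print(filled)
```

The second feature, missing in three of five samples, is dropped; the remaining gaps are filled with column medians.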

Future Research Directions

1. Enhanced Multimodal Modeling:

Future research should focus on refining models that integrate genomic data with other clinical inputs. This could involve the development of hybrid machine learning pipelines that seamlessly combine genomic, imaging, and clinical data to provide richer, more nuanced predictions of patient outcomes. Such integrated models could lead to a more holistic understanding of disease processes, ultimately facilitating more personalized treatment strategies.

2. Explainable AI and Causal Inference:

Further work is required to develop AI systems that provide not only high accuracy but also clear explanations of the reasoning behind predictions. This will be essential in clinical settings, where the inability to interpret a model’s output can impede trust and adoption. Integrating causal inference techniques with current predictive models may allow AI systems to suggest not only what is likely to happen but also why—a step forward in merging transparency with predictive power.

3. Large-Scale Prospective Studies and Clinical Trials:

To move AI models from the lab to the clinic, future research must incorporate real-world clinical trials that rigorously test model performance in diverse patient populations. Such studies should use external datasets collected prospectively from multiple institutions, incorporating robust metrics and ensuring that the predictive models generalize across settings. This will also help address issues of bias and validation that are critical for widespread clinical adoption.

4. Addressing Ethical and Legal Challenges:

Research into ethical AI should continue alongside technical development. Designing systems that ensure transparency, fairness, privacy, and accountability is essential. Future studies should aim to develop standardized protocols and guidelines for the use of genomic data in AI systems, ensuring that patient rights are safeguarded even as these technologies become more central to clinical practice. Collaborations between computer scientists, ethicists, and clinicians will be pivotal in formalizing regulatory and ethical guidelines.

5. Improved Data Curation and Standardization:

One critical area for future research is the enhancement of genomic data quality. Efforts should be directed toward creating well-annotated, centralized databases that can serve as training grounds for high-performance AI models. The development of automated data curation tools, coupled with standardized protocols for data collection and annotation, will greatly improve the robustness of AI predictions in genomic medicine.

6. Economic and Implementation Considerations:

Beyond technical refinement, future directions in AI-powered genomic prediction must address the economic viability and scalability of these solutions. Research should look into the cost-effectiveness of deploying AI systems in healthcare settings and evaluate how these systems can be seamlessly integrated into existing clinical workflows. This involves optimizing computational resources, ensuring scalability, and building user-friendly platforms that can be easily adopted by clinicians.

Conclusion

In summary, AI has demonstrated significant potential in predicting patient outcomes based on genomic data, weaving together intricate markers from a patient’s genetic blueprint with other clinical parameters to forecast survival probabilities, treatment responses, and disease progression. The integration of high-dimensional genomic data with advanced AI algorithms—ranging from traditional machine learning methods to state-of-the-art deep learning architectures—offers a promising avenue for personalized medicine. As discussed, the application of AI in genomics encompasses robust data processing and integration techniques, detailed evaluation of predictive performance using metrics such as AUROC, and the incorporation of multimodal data sources to enhance outcome prediction.

Ethical and privacy issues, including data protection, algorithmic transparency, bias mitigation, and accountability, must be addressed to ensure that these predictions are reliable and acceptable in clinical practice. Furthermore, technical challenges such as handling high-dimensional data, integrating multimodal inputs, and ensuring model explainability remain active areas for research. Future research directions include enhanced multimodal modeling, better methods for explainability, rigorous external validation through prospective studies and clinical trials, and careful consideration of data quality and economic factors.

Overall, the main benefit of AI in genomics lies in its ability to process vast amounts of data and provide clinically actionable insights. AI systems are increasingly capable of predicting patient outcomes by analyzing genomic signatures and integrating them with other patient data, which can support timely, evidence-based interventions. More broadly, the continued evolution of AI methods, applied ethically, promises to transform patient care through more personalized, efficient, and equitable treatment. Thus, while significant challenges remain, the outlook for AI in predicting patient outcomes from genomic data is promising, pointing toward a new era of precision medicine.

By bridging the gap between cutting-edge computational methods and real-world clinical needs, AI-driven genomic prediction has the potential to revolutionize healthcare—improving diagnostic accuracy, optimizing treatment plans, and ultimately enhancing patient outcomes on a global scale. Continued research, multidisciplinary collaboration, and rigorous ethical oversight are essential to fully realize the promise of this exciting field.

In today’s healthcare landscape, artificial intelligence (AI) has emerged as a transformative tool that can process vast volumes of complex data, offering solutions from early disease detection to personalized treatment strategies. One of the most promising domains is the prediction of patient outcomes using genomic data. By leveraging machine learning algorithms and advanced computational infrastructures, AI can uncover patterns and correlations in genomic datasets that can inform prognosis, predict treatment response, and even guide the design of novel therapies. This integration of AI and genomics is not only theoretically appealing but is already showing real-world promise through various applications and studies.

Basics of Artificial Intelligence

Artificial intelligence refers to the simulation of human intelligence in machines that are designed to think and act like humans. At its core, AI employs various algorithms—including supervised and unsupervised learning techniques, deep learning networks, support vector machines, random forests, evolutionary algorithms, and neural networks—to understand complex datasets and make predictions. In the biomedical context, these algorithms have advanced from simple regression and classification models to multifaceted deep learning systems that can handle multi-dimensional data. For instance, convolutional neural networks (CNNs) have been used in medical imaging diagnostics and, increasingly, in genomic data analyses to predict outcomes by learning intricate patterns that traditional statistical methods might miss.

As AI systems learn from annotated examples, they become proficient at recognizing subtle patterns—such as gene expression levels or mutation signatures—that correlate with disease progression. In the realm of genomics, where data dimensions can reach billions of datapoints for whole-genome analyses, AI provides the computational horsepower necessary to identify clinically relevant markers and patterns that could enable personalized prognostication and therapeutic decision-making.

Overview of Genomic Data

Genomic data refer to the complex information encoded within an individual’s DNA and RNA. This includes not only the entire sequence of nucleotides in the genome but also epigenetic markers, gene expression profiles, and mutation patterns that contribute to disease phenotypes. Modern next-generation sequencing (NGS) technologies generate massive amounts of such data, making it possible to capture the genetic blueprint underlying patient-specific disease processes.

The nature of genomic data is inherently high-dimensional and heterogeneous. It can include point mutations, structural variants, copy number alterations, and methylation status, among others, which together provide a holistic picture of how genetic alterations drive disease and influence treatment responses. Combining genomic data with other clinical parameters—such as imaging data, electronic health records, and pathology reports—further enriches the dataset and increases the potential for deep insight through AI-enabled analysis. This comprehensive view of a patient’s molecular profile holds immense potential for predicting outcomes ranging from survival probabilities to the likelihood of response to particular therapeutic regimens.

AI Techniques in Genomic Data Analysis

The application of AI to genomic data analysis has evolved rapidly in the past decade. By employing advanced algorithms to process and integrate disparate data types, AI systems are now capable of predicting patient outcomes with impressive accuracy. The techniques used involve both standard machine learning methods and novel deep learning architectures that can make sense of the high-dimensional space inherent in genomic datasets.

Common AI Algorithms

A wide range of AI algorithms have been employed to analyze genomic data. Some of the most common techniques include:

- Supervised Learning Algorithms: These include support vector machines (SVM), random forests, and ensemble methods that have been utilized to pinpoint gene signatures associated with disease outcomes. By training on labelled datasets (e.g., genomes from patients with known outcomes), these algorithms can learn the relationship between genomic features and prognosis.

- Deep Learning Architectures: Using neural networks, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), deep learning methods can uncover complex nonlinear relationships within genomic data. These methods have demonstrated superior performance in tasks such as variant calling and predicting treatment responses by integrating multi-omics data.

- Unsupervised Learning Techniques: Clustering methods like self-organizing maps and principal component analysis (PCA) help in discovering intrinsic structures in the data, allowing the classification of tumors into new subtypes based on their mutation profiles. These discoveries often lead to the identification of novel biomarkers correlated with patient outcomes.

- Hybrid Models: In some settings, AI algorithms combine supervised and unsupervised techniques, or integrate statistical models with machine learning, to manage the heterogeneity and high dimensionality of genomic data. For example, integrating gene regulatory network analyses with pattern recognition algorithms helps in reconstructing the pathways that drive disease progression.

These algorithms not only offer predictions regarding patient outcomes but also provide insights into the underlying biological mechanisms, thereby bridging the gap between computational predictions and biological interpretability.

Data Processing and Integration

One of the key strengths of AI lies in its ability to process and integrate heterogeneous datasets. When applied to genomic data, AI systems must handle data preprocessing, normalization, and integration of diverse datasets such as gene expression profiles, mutation catalogs, and epigenomic modifications.

- Data Preprocessing: Genomic datasets often require rigorous cleaning, normalization, and dimensionality reduction to remove noise and focus on the most relevant features. AI pipelines automate many of these steps, helping make downstream analyses both robust and efficient.

- Multimodal Data Integration: To improve predictive performance, AI systems frequently combine genomic data with other clinical information. For example, digital pathology images, electronic health record (EHR) data, and genomic sequence data can be integrated to give a more comprehensive view of the patient’s disease state. This integration matters because it lets AI models capture interactions between clinical features and molecular alterations.

- Feature Extraction and Representation Learning: Deep learning models can learn hierarchical features directly from raw data, reducing the need for manual feature engineering. In the genomic context, relevant features may include mutation burden, gene expression levels, or epigenetic modifications, which are then used to build predictive models of patient outcomes.

- Knowledge Graphs and Ontologies: To enhance explanatory power, some systems integrate genomic data with curated databases and ontologies, constructing knowledge graphs that relate genetic variants to clinical outcomes. This aids not only in prediction but also in providing explainable insights into how a specific genetic alteration might be driving disease progression.

By processing large quantities of genomic data and combining them with other clinical variables, these AI techniques facilitate the discovery of previously unnoticed associations that are critical for predicting patient outcomes.
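The preprocessing and integration steps above can be sketched as follows, assuming raw expression counts for a few genes plus a couple of numeric clinical variables per patient. The sketch applies a log2(x + 1) transform, z-scores each feature across the cohort, and concatenates the genomic and clinical vectors per patient (often called early fusion). Patient IDs, genes, and clinical fields are hypothetical, and the normalization statistics are computed over whatever cohort is passed in; in a train/test setting they should come from the training data only.

```python
import math
import statistics
from typing import Dict, List


def log_transform(counts: List[float]) -> List[float]:
    # log2(x + 1) compresses the long right tail typical of expression counts.
    return [math.log2(c + 1.0) for c in counts]


def zscore_columns(rows: List[List[float]]) -> List[List[float]]:
    """Standardize each column (feature) to mean 0 and population SD 1."""
    cols = list(zip(*rows))
    means = [statistics.fmean(c) for c in cols]
    sds = [statistics.pstdev(c) for c in cols]
    # Constant columns carry no information; map them to 0 instead of dividing by 0.
    return [
        [(v - m) / s if s > 0 else 0.0 for v, m, s in zip(row, means, sds)]
        for row in rows
    ]


def early_fusion(
    expression: Dict[str, List[float]], clinical: Dict[str, List[float]]
) -> Dict[str, List[float]]:
    """Normalize each modality separately, then concatenate the vectors per patient."""
    ids = sorted(set(expression) & set(clinical))  # patients present in both sources
    expr = zscore_columns([log_transform(expression[i]) for i in ids])
    clin = zscore_columns([clinical[i] for i in ids])
    return {i: e + c for i, e, c in zip(ids, expr, clin)}


if __name__ == "__main__":
    # Hypothetical raw counts for two genes, and (age, smoker flag) as clinical data.
    expression = {"P1": [120.0, 5.0], "P2": [30.0, 50.0], "P3": [75.0, 20.0]}
    clinical = {"P1": [64.0, 1.0], "P2": [51.0, 0.0], "P3": [58.0, 1.0]}
    for pid, vec in early_fusion(expression, clinical).items():
        print(pid, [round(v, 2) for v in vec])
```

Normalizing each modality before concatenation keeps features measured on very different scales (counts versus years of age) from dominating one another. Early fusion is the simplest strategy; an alternative is late fusion, in which separate per-modality models are trained and their outputs combined.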

Applications in Predicting Patient Outcomes

The integration of AI with genomic data has given rise to several applications aimed at predicting patient outcomes. Clinical decision-making is increasingly enriched by AI models that use genomic signatures to forecast treatment responses, disease progression, survival probabilities, and adverse events. These applications span various stages of disease management—from early detection and prognostication to personalized therapy selection.

Case Studies and Examples

Evidence from numerous studies and patents supports the notion that AI can predict patient outcomes based on genomic data:

- Cancer Prognosis and Genomic Prediction: A number of studies have demonstrated that AI models can analyze the genomic sequences of cancer patients to predict life expectancy and treatment outcomes. For instance, systems described in patents have proposed AI methods that analyze genome sequences and cell biology alterations to determine patient survival probabilities, thereby integrating genomic markers with clinical data to predict prognosis. These systems leverage deep learning algorithms that analyze genetic variants and molecular patterns to provide actionable prognostic information.

- Multimodal Predictions: Work from research groups has shown that integrating genomic data with other diagnostic modalities (e.g., histology, imaging, and clinical records) leads to more comprehensive predictive models. A notable study combined genomic sequencing and pathology image analysis to yield a deep learning-based algorithm that predicts patient outcomes more accurately than single-source data models, demonstrating improvements in prognostic accuracy across multiple types of cancer. This kind of integration supports the view that while genomics alone provides significant insights, the combination with other modalities further enhances prediction quality.

- Predictive Models for Treatment Response: Patented systems describe methods for predicting survival prospects under treatment using enriched, subject-specific datasets that incorporate mutation profiles across cancer types. One example not only predicts whether a patient is likely to respond favorably to a particular chemotherapy regimen but also checks whether the reasoning behind that prediction is consistent with oncology guidelines, combining prediction with guideline validation. This approach highlights the practical utility of AI in personalized medicine.

- Genomic Signature Identification in Complex Diseases: In disorders other than cancer, AI techniques have been applied to large genomic datasets to identify signatures that are predictive of disease progression and outcome. For example, studies have applied AI methods to stratify patients with complex diseases such as cardiovascular disorders or neurodegenerative conditions based on their genomic profiles, linking specific genetic variants to estimated risk levels and treatment outcomes. In these applications, AI not only serves as a prediction tool but also informs the understanding of underlying disease mechanisms.

Each of these case studies illustrates that by harnessing the power of AI to analyze high-dimensional genomic data, clinicians and researchers can predict patient outcomes with increasing precision, support decision-making, and ultimately improve patient care.

Performance Metrics and Evaluation

Defining the success of AI-driven predictive models requires robust performance metrics and thorough evaluation protocols. In the context of genomic-based predictions:

- Area Under the Receiver Operating Characteristic Curve (AUROC): This is one of the most common metrics for evaluating a model’s discrimination, that is, its ability to separate patients who experience an outcome from those who do not. Several studies report AUROC values above 0.8 when predicting outcomes such as mortality or disease progression, which is generally regarded as good discrimination. For instance, one model achieved an AUROC of 0.935 when predicting patient survival from genomic data.

- Precision, Recall, and F1-Score: These metrics describe performance at a chosen decision threshold. High precision (positive predictive value) means the model’s positive predictions are reliable, while high recall (sensitivity) means the model detects most actual cases. The F1-score, the harmonic mean of precision and recall, balances the two, which matters in clinical applications where both false positives and false negatives can have serious consequences.

- Calibration Plots and Bland-Altman Analyses: Calibration plots check whether predicted probabilities match observed outcome frequencies, showing whether a model systematically overestimates or underestimates the true outcome probability. Bland-Altman analyses play a related role for continuous predictions, plotting the difference between predicted and observed values against their mean to expose systematic bias. Such evaluation is critical when AI is used to inform therapy decisions.

- Clinical Impact Metrics: Beyond statistical performance, models are increasingly evaluated on measures tied directly to patient care, such as improvement in overall survival, reduction in adverse event rates, and better resource allocation (e.g., ICU admissions). Randomized controlled trials, and prospective studies more broadly, are increasingly emphasized as the strongest evidence of an AI model’s real-world impact on patient care.

The rigorous evaluation of AI models, integrating both technical performance and clinical outcome measures, is central to validating the use of such systems in healthcare. The performance metrics not only showcase the predictive power of the AI system but also guide improvements and calibrations needed for accurate real-world deployment.
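The statistical metrics described above can be computed without any machine learning library. This sketch assumes binary outcome labels and predicted probabilities from some model. It computes AUROC through its rank interpretation (the probability that a randomly chosen positive case is scored higher than a randomly chosen negative one, with ties counted as half), precision, recall, and F1 at a threshold, and the per-bin summaries behind a calibration plot. The 0.5 threshold, the five equal-width bins, and the example data are illustrative assumptions, not recommendations.

```python
from typing import List, Sequence, Tuple


def auroc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUROC as P(score_pos > score_neg), ties counted as 0.5 (O(n_pos * n_neg))."""
    pos = [s for s, y in zip(scores, y_true) if y == 1]
    neg = [s for s, y in zip(scores, y_true) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def precision_recall_f1(
    y_true: Sequence[int], scores: Sequence[float], threshold: float = 0.5
) -> Tuple[float, float, float]:
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for p, y in zip(preds, y_true) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(preds, y_true) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(preds, y_true) if p == 0 and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0  # positive predictive value
    recall = tp / (tp + fn) if tp + fn else 0.0  # sensitivity
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def calibration_bins(
    y_true: Sequence[int], scores: Sequence[float], n_bins: int = 5
) -> List[Tuple[float, float, int]]:
    """(mean predicted probability, observed event rate, count) for each non-empty bin."""
    bins: List[List[Tuple[float, int]]] = [[] for _ in range(n_bins)]
    for s, y in zip(scores, y_true):
        idx = min(int(s * n_bins), n_bins - 1)  # a score of exactly 1.0 goes in the last bin
        bins[idx].append((s, y))
    out = []
    for b in bins:
        if b:
            mean_pred = sum(s for s, _ in b) / len(b)
            obs_rate = sum(y for _, y in b) / len(b)
            out.append((mean_pred, obs_rate, len(b)))
    return out


if __name__ == "__main__":
    # Hypothetical outcomes and model probabilities for eight patients.
    y = [1, 0, 1, 1, 0, 0, 1, 0]
    p = [0.92, 0.35, 0.71, 0.48, 0.12, 0.55, 0.83, 0.20]
    print("AUROC:", round(auroc(y, p), 3))
    print("precision, recall, F1:", precision_recall_f1(y, p))
    print("calibration bins:", calibration_bins(y, p))
```

Plotting the first element of each calibration tuple against the second gives a calibration plot; points on the diagonal indicate well-calibrated probabilities. The pairwise AUROC loop is quadratic, which is fine for illustration but not for very large cohorts.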

Ethical and Privacy Considerations

As AI is increasingly used to predict patient outcomes from genomic data, it raises several ethical and privacy issues. These concerns are particularly acute in genomics because genetic information is uniquely sensitive and permanent. Protecting patient privacy, addressing algorithmic bias, and ensuring transparency are all critical to maintaining trust in AI applications.

Data Privacy Issues

Genomic data is inherently personal, and protecting patient privacy when using such data in AI models is paramount. Privacy regulations such as HIPAA in the United States and GDPR in Europe underscore the need for stringent safeguards on personal data. AI systems that use genomic data must incorporate encryption, secure storage, and anonymization techniques to prevent data breaches and to limit the harm if one occurs.

Moreover, de-identifying patient data during preprocessing is critical, so that individual identities are not compromised even when datasets are combined or analyzed. Because genomic information is publicly accessible in some contexts, the potential for misuse or unintentional disclosure poses distinct ethical challenges that demand careful handling by AI developers and data custodians.
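One common building block for de-identification is keyed pseudonymization: direct identifiers are dropped and the patient ID is replaced with an HMAC, so records from different datasets can still be linked by whoever holds the secret key, while the pseudonym cannot be reversed or recomputed without it. The sketch below assumes records arrive as dictionaries; the field names and the identifier list are hypothetical. Pseudonymization alone does not make genomic data anonymous, since the genotype itself can identify a person.

```python
import hashlib
import hmac
from typing import Any, Dict

# Hypothetical set of direct identifiers to strip before analysis.
DIRECT_IDENTIFIERS = {"name", "date_of_birth", "address", "phone", "mrn"}


def pseudonymize(record: Dict[str, Any], secret_key: bytes) -> Dict[str, Any]:
    """Drop direct identifiers and replace patient_id with an HMAC-SHA256 pseudonym."""
    clean = {
        k: v for k, v in record.items()
        if k not in DIRECT_IDENTIFIERS and k != "patient_id"
    }
    # Same key + same patient_id -> same pseudonym, so datasets can still be joined.
    digest = hmac.new(secret_key, str(record["patient_id"]).encode("utf-8"), hashlib.sha256)
    clean["pseudo_id"] = digest.hexdigest()
    return clean


if __name__ == "__main__":
    key = b"placeholder-key"  # in practice, load from a secrets manager; never hard-code
    rec = {"patient_id": "12345", "name": "Jane Doe", "mrn": "A-991",
           "tp53_status": "mutated", "age_group": "40-49"}
    print(pseudonymize(rec, key))
```

A keyed HMAC is used rather than a plain hash because patient IDs often come from a small, guessable space; an unkeyed hash of such IDs could be reversed by simply hashing every candidate.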

Ethical Implications in AI Predictions

Beyond privacy, the ethical implications of using AI to predict patient outcomes are wide-ranging:

- Algorithmic Transparency and Explainability: One of the most discussed ethical issues is the “black box” nature of many AI algorithms. Physicians and patients may be reluctant to rely on AI outputs if they cannot understand the basis of the predictions. Although techniques like explainable AI (XAI) are emerging to address these concerns, current implementations often do not convey the underlying rationale in a clear and interpretable manner. This lack of transparency can undermine trust when clinicians are unable to verify that the predictions are biologically plausible or clinically sound.

- Bias and Fairness: AI systems are only as good as the data on which they are trained. If genomic datasets are non-representative or biased towards certain populations, the predictions generated may inadvertently reinforce disparities in healthcare outcomes. It is essential that ethical guidelines incorporate measures to detect and mitigate bias in AI models. This includes ensuring diverse datasets, routine auditing for skewed outputs, and aligning algorithmic decisions with equitable clinical practices.

- Autonomy and Informed Consent: Using genomic data for predictive analytics raises questions about patient consent. Patients must be fully informed about how their genomic data are being used, especially when such data influence decisions that affect their clinical outcomes. Ethical frameworks must balance the need for data-driven insights with respect for individual autonomy and the right to privacy.

- Liability and Accountability: When AI predictions directly inform patient care, the question of who is responsible for errors or adverse outcomes becomes complex. Liability might be shared among AI developers, healthcare providers, and institutions. Without clear guidelines, the integration of AI in clinical decision-making may inadvertently expose patients to harm without consistent accountability measures in place.
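The "routine auditing for skewed outputs" mentioned under Bias and Fairness can start as simply as comparing error rates across patient subgroups. This sketch assumes each prediction is tagged with a hypothetical subgroup label (for example, an ancestry group) and reports per-group sensitivity (true positive rate) along with the largest gap between groups. Which groups, metrics, and tolerances matter is a clinical and ethical decision that the code does not make.

```python
from collections import defaultdict
from typing import Dict, Sequence, Tuple


def sensitivity_by_group(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[str]
) -> Dict[str, float]:
    """True positive rate per subgroup; groups with no positive cases are omitted."""
    tp: Dict[str, int] = defaultdict(int)
    pos: Dict[str, int] = defaultdict(int)
    for y, p, g in zip(y_true, y_pred, groups):
        if y == 1:
            pos[g] += 1
            tp[g] += int(p == 1)
    return {g: tp[g] / pos[g] for g in pos}


def max_gap(rates: Dict[str, float]) -> Tuple[float, str, str]:
    """Largest difference between any two groups' rates, with the best and worst groups."""
    lo = min(rates, key=rates.get)
    hi = max(rates, key=rates.get)
    return rates[hi] - rates[lo], hi, lo


if __name__ == "__main__":
    # Hypothetical labels, binary predictions, and subgroup tags.
    y_true = [1, 1, 1, 0, 1, 1, 1, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 0, 1]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    rates = sensitivity_by_group(y_true, y_pred, groups)
    print(rates, max_gap(rates))
```

A large sensitivity gap means the model misses true cases more often in one group, which is exactly the kind of disparity the audit is meant to surface before deployment.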

By addressing these privacy and ethical considerations thoroughly, developers and clinicians can ensure that the deployment of AI-driven genomic prediction tools is both responsible and aligned with the fundamental principles of medical ethics.

Challenges and Future Directions

While AI has shown great promise in predicting patient outcomes using genomic data, several challenges remain that must be overcome for these methods to be widely integrated into clinical practice. Looking forward, research and development in this domain continue to address technical, ethical, and practical barriers.

Technical Challenges

1. High-Dimensional Data and Complexity:

Genomic datasets are enormous and complex, comprising billions of data points that describe genetic variants, expression profiles, methylation patterns, and more. Effectively managing this “big data” requires state-of-the-art computational infrastructures and innovative algorithms capable of processing large volumes of data without compromising on accuracy. Overfitting, noise, and high dimensionality remain persistent technical challenges that necessitate careful model selection, robust feature selection, and the integration of advanced dimensionality reduction techniques.

2. Integration of Multimodal Data:

One of the promises of personalized medicine is integrating genomic data with clinical records, imaging studies, and other biomarkers. However, merging datasets from such different sources poses significant technical challenges due to differences in data format, scale, and quality. Efficient data integration requires developing bespoke pipelines and novel algorithms that can harmonize these diverse sources, thereby improving the predictive performance of AI models.

3. Explainability and Model Transparency:

Despite advances in deep learning, many AI models remain “black boxes” that produce predictions without clear indications of which features contributed to the outcome. This lack of interpretability is particularly problematic when decisions are made on the basis of genomic data, where understanding causality is critical for clinical acceptance. Techniques for explainable AI are developing, but there is often a trade-off between performance and interpretability that must be managed.

4. Scalability and Real-World Deployment:

Translating AI models from controlled research environments to real-world clinical settings can expose new weaknesses, such as reduced performance on heterogeneous data or unexpected outliers. Robust assessment of model calibration, rigorous external validation, and prospective trials are required to confirm that performance measured in research settings holds in practice.

5. Data Quality and Annotation:

The quality of genomic data is often limited by technical variability in sequencing platforms and the inherent noise in biological systems. Many datasets suffer from missing or inconsistent data, and obtaining high-quality annotations is resource-intensive. Improving the quality of these datasets through standardization and robust data curation techniques is essential for training reliable AI models.
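Several of these challenges meet in the preprocessing pipeline. A common safeguard against overfitting and optimistic performance estimates is to learn every data-dependent step from the training cohort only, then apply the frozen parameters unchanged to validation or external data. The sketch below assumes missing values are encoded as None and combines median imputation with a filter that keeps the k highest-variance features; the class name, k, and the data are hypothetical, and variance filtering is only one of many feature-selection strategies.

```python
import statistics
from typing import List, Optional

Matrix = List[List[Optional[float]]]


class TrainOnlyPreprocessor:
    """Median imputation plus top-k variance feature selection, fitted on training data only."""

    def __init__(self, k: int) -> None:
        self.k = k
        self.medians: List[float] = []
        self.keep: List[int] = []

    def fit(self, X_train: Matrix) -> "TrainOnlyPreprocessor":
        self.medians = []
        for col in zip(*X_train):
            observed = [v for v in col if v is not None]
            # Median of the observed training values; 0.0 if a column is entirely missing.
            self.medians.append(statistics.median(observed) if observed else 0.0)
        imputed = self._impute(X_train)
        variances = [statistics.pvariance(c) for c in zip(*imputed)]
        # Indices of the k highest-variance features, kept in original column order.
        ranked = sorted(range(len(variances)), key=lambda j: variances[j], reverse=True)
        self.keep = sorted(ranked[: self.k])
        return self

    def _impute(self, X: Matrix) -> List[List[float]]:
        return [[m if v is None else v for v, m in zip(row, self.medians)] for row in X]

    def transform(self, X: Matrix) -> List[List[float]]:
        # Reuses the training medians and feature indices; nothing is re-estimated here.
        return [[row[j] for j in self.keep] for row in self._impute(X)]


if __name__ == "__main__":
    # Hypothetical training cohort (3 features) and one external-cohort patient.
    train = [[1.0, 10.0, 5.0], [2.0, None, 5.0], [3.0, 30.0, 5.0]]
    pre = TrainOnlyPreprocessor(k=2).fit(train)
    print(pre.transform([[None, 99.0, 0.0]]))
```

Fitting the imputation and selection steps on the full dataset before splitting would let information from the evaluation patients leak into training, which tends to inflate reported metrics such as AUROC.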

Future Research Directions

1. Enhanced Multimodal Modeling:

Future research should focus on refining models that integrate genomic data with other clinical inputs. This could involve the development of hybrid machine learning pipelines that seamlessly combine genomic, imaging, and clinical data to provide richer, more nuanced predictions of patient outcomes. Such integrated models could lead to a more holistic understanding of disease processes, ultimately facilitating more personalized treatment strategies.

2. Explainable AI and Causal Inference:

Further work is required to develop AI systems that provide not only high accuracy but also clear explanations of the reasoning behind predictions. This will be essential in clinical settings, where the inability to interpret a model’s output can impede trust and adoption. Integrating causal inference techniques with current predictive models may allow AI systems to suggest not only what is likely to happen but also why—a step forward in merging transparency with predictive power.

3. Large-Scale Prospective Studies and Clinical Trials:

To move AI models from the lab to the clinic, future research must incorporate real-world clinical trials that rigorously test model performance in diverse patient populations. Such studies should use external datasets collected prospectively from multiple institutions, incorporating robust metrics and ensuring that the predictive models generalize across settings. This will also help address issues of bias and validation that are critical for widespread clinical adoption.

4. Addressing Ethical and Legal Challenges:

Research into ethical AI should continue alongside technical development. Designing systems that ensure transparency, fairness, privacy, and accountability is essential. Future studies should aim to develop standardized protocols and guidelines for the use of genomic data in AI systems, ensuring that patient rights are safeguarded even as these technologies become more central to clinical practice. Collaborations between computer scientists, ethicists, and clinicians will be pivotal in formalizing regulatory and ethical guidelines.

5. Improved Data Curation and Standardization:

One critical area for future research is the enhancement of genomic data quality. Efforts should be directed toward creating well-annotated, centralized databases that can serve as training grounds for high-performance AI models. The development of automated data curation tools, coupled with standardized protocols for data collection and annotation, will greatly improve the robustness of AI predictions in genomic medicine.

6. Economic and Implementation Considerations:

Beyond technical refinement, future directions in AI-powered genomic prediction must address the economic viability and scalability of these solutions. Research should look into the cost-effectiveness of deploying AI systems in healthcare settings and evaluate how these systems can be seamlessly integrated into existing clinical workflows. This involves optimizing computational resources, ensuring scalability, and building user-friendly platforms that can be easily adopted by clinicians.
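Item 2 above calls for explanations of what drives a prediction. One widely used, model-agnostic starting point is permutation importance: shuffle one feature at a time across patients and measure how much a performance metric drops. This is a minimal sketch assuming any model exposed as a function from a feature vector to a probability; the accuracy metric, 0.5 threshold, and repeat count are illustrative choices. It reveals what the model relies on, not what causes the outcome, which is why causal inference appears as a separate direction.

```python
import random
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]  # maps a feature vector to a probability


def accuracy(model: Model, X: Sequence[Sequence[float]], y: Sequence[int]) -> float:
    return sum(int((model(x) >= 0.5) == bool(t)) for x, t in zip(X, y)) / len(y)


def permutation_importance(
    model: Model,
    X: Sequence[Sequence[float]],
    y: Sequence[int],
    n_repeats: int = 20,
    seed: int = 0,
) -> List[float]:
    """Mean drop in accuracy when each feature column is shuffled across patients."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            col = [row[j] for row in X]
            rng.shuffle(col)
            # Copy each row with only feature j replaced by its shuffled value.
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, col)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


if __name__ == "__main__":
    # Hypothetical model that only looks at the first feature.
    def model(x: Sequence[float]) -> float:
        return 1.0 if x[0] > 0 else 0.0

    X = [[1.0, 5.0], [2.0, -3.0], [-1.0, 4.0], [-2.0, -6.0], [3.0, 0.0], [-3.0, 1.0]]
    y = [1, 1, 0, 0, 1, 0]
    print(permutation_importance(model, X, y))
```

Shuffling breaks the link between one feature and the outcome while keeping its distribution intact. With strongly correlated features, such as co-expressed genes, the importance can be split between them or understated, so results should be read with care.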

Conclusion

In summary, AI has demonstrated significant potential in predicting patient outcomes based on genomic data, weaving together intricate markers from a patient’s genetic blueprint with other clinical parameters to forecast survival probabilities, treatment responses, and disease progression. The integration of high-dimensional genomic data with advanced AI algorithms—ranging from traditional machine learning methods to state-of-the-art deep learning architectures—offers a promising avenue for personalized medicine. As discussed, the application of AI in genomics encompasses robust data processing and integration techniques, detailed evaluation of predictive performance using metrics such as AUROC, and the incorporation of multimodal data sources to enhance outcome prediction.

Ethical and privacy issues, including data protection, algorithmic transparency, bias mitigation, and accountability, must be addressed to ensure that these predictions are reliable and acceptable in clinical practice. Furthermore, technical challenges such as handling high-dimensional data, integrating multimodal inputs, and ensuring model explainability remain active areas for research. Future research directions include enhanced multimodal modeling, better methods for explainability, rigorous external validation through prospective studies and clinical trials, and careful consideration of data quality and economic factors.

Overall, the broad benefit of AI in genomics lies in its ability to process vast amounts of data and provide clinically actionable insights. More specifically, AI systems are increasingly capable of predicting patient outcomes by analyzing genomic signatures and integrating them with other patient data, which can support timely, evidence-based interventions. More broadly, the continued evolution of AI methods and their ethical application in healthcare could transform patient care, leading to more personalized, efficient, and equitable treatment strategies. Thus, while significant challenges remain, the future of AI in predicting patient outcomes from genomic data looks promising and could usher in a new era of precision medicine.

By bridging the gap between cutting-edge computational methods and real-world clinical needs, AI-driven genomic prediction has the potential to revolutionize healthcare—improving diagnostic accuracy, optimizing treatment plans, and ultimately enhancing patient outcomes on a global scale. Continued research, multidisciplinary collaboration, and rigorous ethical oversight are essential to fully realize the promise of this exciting field.

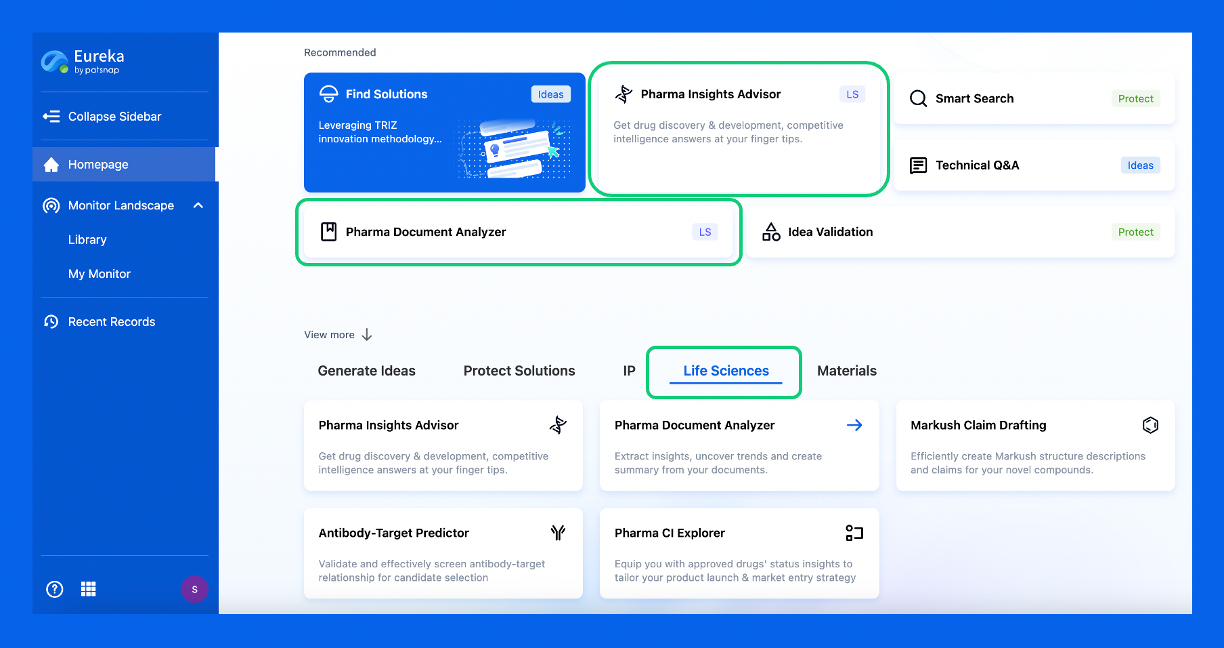

Discover Eureka LS: AI Agents Built for Biopharma Efficiency

Stop wasting time on biopharma busywork. Meet Eureka LS - your AI agent squad for drug discovery.

▶ See how 50+ research teams saved 300+ hours/month

From reducing screening time to simplifying Markush drafting, our AI Agents are ready to deliver immediate value. Explore Eureka LS today and unlock powerful capabilities that help you innovate with confidence.

AI Agents Built for Biopharma Breakthroughs

Accelerate discovery. Empower decisions. Transform outcomes.

Get started for free today!

Accelerate Strategic R&D decision making with Synapse, PatSnap’s AI-powered Connected Innovation Intelligence Platform Built for Life Sciences Professionals.

Start your data trial now!

Synapse data is also accessible to external entities via APIs or data packages. Empower better decisions with the latest in pharmaceutical intelligence.