How can AI assist in the identification of biomarkers for personalized therapies?

21 March 2025

Introduction to Biomarkers and Personalized Therapies

Definition of Biomarkers

Biomarkers are objectively measurable indicators of a biological state or condition, often related to disease processes or therapeutic responses. Many are measured in biological samples such as blood, tissue, or other body fluids; others are derived from imaging or from patterns in omics data. They can be molecules (e.g., proteins, nucleic acids, metabolites), imaging features, or composite omics signatures, and they are used to indicate normal or pathogenic processes and to monitor responses to treatment. Their identification is crucial for diagnosing diseases, predicting outcomes, and monitoring therapeutic efficacy. In the context of personalized therapies, biomarkers are not merely diagnostic tools; they are integral to tailoring medical interventions to the unique molecular, genetic, and phenotypic characteristics of an individual patient.

Importance of Personalized Therapies

Personalized therapies represent a paradigm shift in medicine: treatment plans are designed around individual patient profiles rather than a one-size-fits-all approach. This approach is particularly important in complex diseases such as cancer, where inter-patient heterogeneity demands therapy that is both patient-specific and adaptable as the disease progresses. Personalized therapies can help optimize therapeutic efficacy, minimize adverse effects, and reduce wasted healthcare spending by selecting the most promising treatment for a given individual. Biomarkers form the backbone of this approach: by stratifying patients according to their disease characteristics and likely response to treatment, they allow clinicians to make informed decisions that improve overall outcomes.

Role of AI in Biomarker Discovery

AI Technologies Used in Biomarker Identification

Artificial intelligence (AI) is transforming biomarker discovery by processing and interpreting volumes of heterogeneous biomedical data that are impractical to analyze manually. It draws on subfields such as machine learning (ML), deep learning (DL), and natural language processing (NLP) to uncover patterns in genomic, proteomic, imaging, and electronic health record (EHR) datasets. For instance, deep learning algorithms have been used to predict peptide measurements from amino acid sequences and to integrate multi-omics data for the discovery of candidate biomarkers. NLP allows relevant information to be extracted from unstructured clinical notes and the scientific literature, which can surface potential biomarkers that are not captured in structured databases. Convolutional neural networks (CNNs) have been applied to medical images and even to flow cytometry data, identifying subtle features and patterns that can serve as biomarkers of disease progression or therapeutic response. Reinforcement learning has also been explored as a way to search multi-dimensional biomolecular data for predictive biomarkers, with the model iteratively refining its selection strategy based on feedback.
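To make the NLP step more concrete, here is a minimal sketch of dictionary-based extraction of biomarker mentions from a free-text clinical note, with a crude negation check loosely inspired by the NegEx approach. The lexicon, the negation cues, and the function name `extract_biomarker_mentions` are illustrative assumptions; production systems typically rely on curated vocabularies and trained named-entity recognition models rather than a hand-written list.

```python
import re

# Hypothetical lexicon for illustration only; a real pipeline would use a
# curated vocabulary and a trained named-entity recognition model.
BIOMARKER_LEXICON = {"EGFR", "KRAS", "HER2", "PD-L1", "BRCA1"}

# Simplified negation cues loosely inspired by NegEx (not the full algorithm).
NEGATION_RE = re.compile(r"\b(?:no|negative for|without|absence of)\b", re.IGNORECASE)


def extract_biomarker_mentions(note: str) -> list[tuple[str, bool]]:
    """Return (biomarker, negated) pairs found in a free-text clinical note."""
    mentions = []
    # Split into rough sentences so a negation cue cannot reach across sentences.
    for sentence in re.split(r"[.;\n]", note):
        for marker in sorted(BIOMARKER_LEXICON):
            # Word-boundary match; re.escape protects the hyphen in "PD-L1".
            match = re.search(rf"\b{re.escape(marker)}\b", sentence, re.IGNORECASE)
            if match is None:
                continue
            # Crude scope rule: negated if any cue precedes the mention in this sentence.
            negated = NEGATION_RE.search(sentence[: match.start()]) is not None
            mentions.append((marker, negated))
    return mentions


if __name__ == "__main__":
    note = "EGFR exon 19 deletion detected. Negative for KRAS mutation; PD-L1 expression 60%."
    print(extract_biomarker_mentions(note))
```

Because negation scope is approximated as "any cue earlier in the same sentence", a note such as "Negative for KRAS, EGFR mutation detected" would wrongly mark EGFR as negated; handling scope properly is one reason trained models are preferred in practice.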

Advantages over Traditional Methods

Traditional biomarker discovery has relied heavily on manually driven experiments and isolated, one-marker-at-a-time statistical tests, which limits sensitivity, specificity, and scalability. AI-driven approaches address these limits by enabling high-throughput screening and the integration of large, multi-dimensional datasets. A key advantage is the ability to detect subtle, non-linear patterns and interactions between variables that conventional statistical methods can miss. For instance, machine learning algorithms can combine genotypic, proteomic, and metabolomic measurements to identify candidate biomarkers, whose predictive power can then be tested across independent patient cohorts. AI models can also be retrained as new data become available, progressively improving the accuracy of biomarker identification. Combined with the ability to integrate diverse data types, from imaging to genetic sequences, this can shorten the time from discovery to clinical implementation, provided the resulting models are validated rigorously enough to be reproducible. Finally, AI's computational scale makes it practical to evaluate combinations of markers, yielding multidimensional biomarker panels that can reflect the underlying disease biology better than single-marker approaches.
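The point that multi-marker methods can capture interactions missed by single-marker tests can be illustrated with a deliberately tiny synthetic example. Below, the outcome depends on an XOR-style interaction between two hypothetical binary markers: neither marker alone does better than chance, while a two-marker lookup table (equivalent to a depth-2 decision tree on binary inputs) classifies every sample correctly. The data and function names are invented for illustration, and accuracy is measured on the training data only, which would overstate performance in a real study.

```python
from collections import Counter
from itertools import product


def best_single_marker_accuracy(samples, labels):
    """Best accuracy obtainable by predicting from any one binary marker alone."""
    best = 0.0
    for j in range(len(samples[0])):
        for positive_value in (0, 1):
            preds = [1 if s[j] == positive_value else 0 for s in samples]
            acc = sum(p == y for p, y in zip(preds, labels)) / len(labels)
            best = max(best, acc)
    return best


def fit_panel(samples, labels):
    """Map each observed marker combination to its majority label (a lookup-table panel)."""
    votes = {}
    for s, y in zip(samples, labels):
        votes.setdefault(tuple(s), Counter())[y] += 1
    return {combo: counts.most_common(1)[0][0] for combo, counts in votes.items()}


def panel_accuracy(table, samples, labels):
    """Fraction of samples whose panel prediction matches the label."""
    preds = [table[tuple(s)] for s in samples]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


if __name__ == "__main__":
    # Hypothetical data: 25 patients per combination of two binary markers,
    # with the outcome present only when exactly one marker is positive (XOR).
    samples = [combo for combo in product((0, 1), repeat=2) for _ in range(25)]
    labels = [a ^ b for a, b in samples]
    table = fit_panel(samples, labels)
    print(best_single_marker_accuracy(samples, labels))  # 0.5
    print(panel_accuracy(table, samples, labels))        # 1.0
```

Real interactions are rarely this clean, which is why flexible learners (tree ensembles, neural networks) are used to search for them, and why the resulting panels must be checked on independent cohorts.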

Applications and Case Studies

Successful AI Applications in Biomarker Discovery

In recent years, numerous studies have demonstrated the successful application of AI to biomarker discovery. For example, automated deep learning frameworks have been developed to process genomic and proteomic datasets, leading to novel biomarkers that predict patient outcomes in complex diseases such as cancer. One compelling case is the integration of histological imaging with genomic data to detect cancer-specific biomarkers, improving diagnostic accuracy and guiding the selection of targeted therapies. CNNs have also been used for automated analysis of radiographic images, identifying imaging features that correlate with specific molecular tumor signatures. Such applications can improve the sensitivity and specificity of biomarker detection and make assessments more consistent than human interpretation, which is subject to inter-observer variability. Platforms such as PandaOmics integrate multi-omics data and use machine learning to prioritize putative targets and biomarkers for personalized therapies, illustrating how AI can accelerate drug discovery while simultaneously identifying relevant biomarkers.
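Since sensitivity and specificity are the usual yardsticks for biomarker detection, a minimal sketch of how they are computed for a continuous biomarker score at a chosen cutoff may help, together with the threshold-free area under the ROC curve (AUC), computed here from its pairwise (Mann-Whitney) interpretation. The scores, labels, and the 0.5 cutoff are hypothetical.

```python
def sensitivity_specificity(scores, labels, threshold):
    """Sensitivity and specificity when 'positive' means score >= threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


def roc_auc(scores, labels):
    """AUC = probability that a random case outscores a random control (ties count 0.5)."""
    cases = [s for s, y in zip(scores, labels) if y == 1]
    controls = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for c in cases:
        for k in controls:
            wins += 1.0 if c > k else (0.5 if c == k else 0.0)
    return wins / (len(cases) * len(controls))


if __name__ == "__main__":
    # Hypothetical biomarker scores for 4 cases (label 1) and 4 controls (label 0).
    scores = [0.9, 0.8, 0.7, 0.4, 0.6, 0.3, 0.2, 0.1]
    labels = [1, 1, 1, 1, 0, 0, 0, 0]
    print(sensitivity_specificity(scores, labels, threshold=0.5))  # (0.75, 0.75)
    print(roc_auc(scores, labels))                                  # 0.9375
```

Moving the cutoff trades sensitivity against specificity, whereas AUC summarizes discrimination over all cutoffs; the pairwise loop is quadratic and is meant for clarity, not for large cohorts.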

Case Studies in Personalized Therapies

Several case studies underline the role of AI in shaping personalized therapeutic strategies. In oncology, AI algorithms have been applied to large datasets from The Cancer Genome Atlas (TCGA), leading to integrated biomarker signatures that combine genetic mutations, gene expression profiles, and imaging data. These signatures have been used to stratify patients and predict responses to targeted therapies in various types of cancer. For instance, a multi-modal AI system that merges data from radiological scans and genomic analyses has predicted patient outcomes and guided treatment plans with higher prognostic accuracy than methods relying on a single data modality. AI-driven digital biomarker platforms, which draw on wearables and portable biosensors, are being used to monitor disease activity and predict the onset of adverse clinical events in real time, supporting the development of personalized intervention strategies. In precision medicine initiatives targeting cardiovascular diseases, AI-based bioinformatics platforms integrate clinical parameters with genetic and proteomic data to assess individual risk profiles and recommend tailored therapeutic strategies. Together, these case studies show the potential of AI to move biomarker discovery from an isolated research step to an integrated component of personalized therapeutic regimens.
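The case studies do not specify how multi-modal systems combine their inputs. One simple, commonly used strategy is late fusion: each modality (for example, imaging and genomics) gets its own model, and the predicted probabilities are combined afterwards. The sketch below averages per-modality predictions in log-odds space using fixed, hypothetical weights; in practice such weights would usually be learned on held-out data, and other designs fuse modalities earlier, at the feature level.

```python
import math


def logit(p: float) -> float:
    """Log-odds of a probability strictly between 0 and 1."""
    return math.log(p / (1.0 - p))


def late_fusion(probs_by_modality: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-modality probabilities in log-odds space.

    Only modalities present in `probs_by_modality` contribute, so a patient
    missing one data type still receives a fused score.
    """
    total_weight = sum(weights[m] for m in probs_by_modality)
    z = sum(weights[m] * logit(p) for m, p in probs_by_modality.items()) / total_weight
    return 1.0 / (1.0 + math.exp(-z))


if __name__ == "__main__":
    # Hypothetical weights and per-modality outputs (e.g., a radiology model and a genomic model).
    weights = {"imaging": 2.0, "genomics": 1.0}
    print(late_fusion({"imaging": 0.8, "genomics": 0.2}, weights))
    print(late_fusion({"genomics": 0.7}, weights))  # imaging unavailable for this patient
```

Normalizing by the weights of the modalities actually present lets the function degrade gracefully when a patient lacks one data type, which is common in clinical cohorts.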

Challenges and Ethical Considerations

Technical Challenges

Despite the promising capabilities of AI in biomarker discovery, several technical challenges still hinder its full clinical integration. One of the foremost issues is the quality and heterogeneity of biomedical data: high-throughput datasets often suffer from noise, missing values, and batch effects, which undermine the robustness of AI models unless they are properly handled during preprocessing. The “black-box” nature of many deep learning models also poses interpretability challenges, and clinicians may be hesitant to trust AI-derived biomarkers without a clear explanation of how predictions are made. Reliable validation across diverse patient populations remains a significant hurdle, especially because existing annotated datasets are often small or skewed toward certain demographics. The computational infrastructure needed to store and process enormous volumes of multi-modal data is non-trivial. Maintaining data integrity during integration and ensuring that models perform consistently across institutions with different equipment and protocols require continuous attention. Finally, advances in explainable AI (XAI) are needed to improve transparency and support clinical acceptance by allowing researchers and clinicians to understand the logic behind AI decisions.
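As a minimal sketch of two of the preprocessing concerns raised here (missing values and batch effects), the function below handles one feature at a time: it fills missing measurements with the median of their own batch and then z-scores values within each batch. This is a deliberately simple stand-in, not an established correction method such as ComBat, and the function name and toy data are hypothetical.

```python
from statistics import mean, median, pstdev


def impute_and_standardize_by_batch(values, batches):
    """Per-feature preprocessing: fill missing values (None) with the batch median,
    then z-score within each batch to remove batch-level shifts in location and scale.

    Assumes every batch has at least one observed value (median() raises otherwise).
    """
    out = [0.0] * len(values)
    for batch in set(batches):
        idx = [i for i, b in enumerate(batches) if b == batch]
        fill = median(values[i] for i in idx if values[i] is not None)
        filled = [fill if values[i] is None else values[i] for i in idx]
        mu, sd = mean(filled), pstdev(filled)
        for i, v in zip(idx, filled):
            # A constant batch carries no information after centering; map it to 0.
            out[i] = (v - mu) / sd if sd > 0 else 0.0
    return out


if __name__ == "__main__":
    # Hypothetical protein measurements: batch B reads about 10 units higher than batch A.
    values = [1.0, 2.0, None, 3.0, 11.0, 12.0, 13.0, None]
    batches = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(impute_and_standardize_by_batch(values, batches))
```

Per-batch standardization is only safe when batches have similar biological composition; if most cases were run in one batch and most controls in another, this step would erase the very signal a biomarker study is looking for.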

Ethical and Privacy Concerns

The deployment of AI in healthcare, particularly in processes as critical as biomarker discovery, raises a host of ethical and privacy issues. AI systems rely on vast amounts of patient data, which creates data-security risks and the potential for privacy breaches. Obtaining patient consent and protecting sensitive medical information while still allowing data to be used for AI training and validation is a delicate balancing act. Biases in the data, whether demographic, socio-economic, or geographical, can lead to inequitable AI performance, potentially exacerbating health disparities rather than mitigating them. Legal frameworks and regulatory guidelines for AI in healthcare are still in their infancy, and the lack of standardized protocols for monitoring AI systems further complicates matters. Accountability is another ethical concern: if an AI system misidentifies a biomarker and this leads to ineffective treatment, determining liability is difficult. Finally, there is the broader question of human judgment versus machine decision-making; clinicians need to retain oversight and the ability to intervene so that AI recommendations serve as complementary tools rather than unquestioned determinants of clinical action.
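One practical safeguard against inequitable performance is a subgroup audit: computing a model's sensitivity separately for each demographic group and checking the gap between groups, which corresponds to the "equal opportunity" fairness criterion. The sketch below assumes binary predictions and labels; the function names, group labels, and data are hypothetical.

```python
from collections import defaultdict


def sensitivity_by_group(predictions, labels, groups):
    """Per-group sensitivity (true-positive rate) of a binary biomarker-based classifier."""
    tp = defaultdict(int)
    pos = defaultdict(int)
    for pred, y, g in zip(predictions, labels, groups):
        if y == 1:
            pos[g] += 1
            tp[g] += int(pred == 1)
    # Groups with no positive cases are omitted, since sensitivity is undefined for them.
    return {g: tp[g] / pos[g] for g in pos}


def max_sensitivity_gap(predictions, labels, groups):
    """Largest sensitivity difference between any two groups (an equal-opportunity check)."""
    rates = sensitivity_by_group(predictions, labels, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical audit data for two demographic groups, "a" and "b".
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    labels = [1, 1, 1, 1, 1, 1, 1, 0]
    groups = ["a"] * 4 + ["b"] * 4
    print(sensitivity_by_group(preds, labels, groups))
    print(max_sensitivity_gap(preds, labels, groups))
```

A large gap does not by itself identify the cause (under-representation, label quality, or genuine biological differences), but it flags where a biomarker model needs closer review before clinical use.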

Future Directions

Emerging AI Technologies

The field of AI in biomarker discovery is evolving rapidly, with emerging technologies promising to further improve the precision of personalized therapies. Advances in explainable AI (XAI) are expected to give greater insight into how AI algorithms reach their conclusions, increasing trust and facilitating clinical translation. Generative models, such as variational autoencoders and generative adversarial networks, also hold significant promise for augmenting biomarker discovery pipelines. These models can synthesize high-dimensional biological data and may help simulate disease progression or therapeutic responses, which could in turn accelerate the identification of novel biomarkers. Another promising direction is the combination of optical nanosensors with AI, which has the potential to transform multi-omics profiling: such sensors can detect subtle molecular interactions in real time, and analyzing their output with advanced AI algorithms could lead to highly specific disease biomarkers. Finally, the convergence of wearable IoT devices and AI-powered digital biomarker platforms should enable continuous, real-time health monitoring, capturing dynamic biological changes that could serve as early indicators for personalized intervention.
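As an illustration of how continuous wearable data might yield early-warning signals, the sketch below compares each new reading with the wearer's own trailing baseline and flags large deviations. The window length, z-score threshold, function name, and resting heart-rate values are hypothetical choices for illustration, not parameters from any particular platform.

```python
from collections import deque
from statistics import mean, pstdev


def flag_deviations(readings, window=7, z_threshold=3.0):
    """Flag readings that deviate from the trailing personal baseline
    (the previous `window` readings) by more than `z_threshold` standard deviations."""
    baseline = deque(maxlen=window)
    flags = []
    for value in readings:
        if len(baseline) < window:
            flags.append(False)  # not enough history to define a baseline yet
        else:
            history = list(baseline)
            mu, sd = mean(history), pstdev(history)
            # A perfectly flat baseline (sd == 0) is treated as non-informative here.
            flags.append(sd > 0 and abs(value - mu) / sd > z_threshold)
        # Flagged readings still enter the baseline, so a sustained shift is gradually absorbed.
        baseline.append(value)
    return flags


if __name__ == "__main__":
    # Hypothetical daily resting heart rate (beats per minute) with one abrupt spike.
    resting_hr = [60, 62, 61, 59, 60, 61, 62, 61, 75, 60]
    print(flag_deviations(resting_hr))
```

Using a personal rather than a population baseline is what makes the signal individualized; a deployed system would also need to decide deliberately whether, and how quickly, a sustained change should be absorbed into the baseline.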

Potential Impact on Personalized Medicine

Integrating AI-driven biomarker discovery into clinical workflows has the potential to substantially reshape personalized medicine. By identifying comprehensive biomarker panels that reflect the interplay of genetic, proteomic, and environmental factors, AI can guide the customization of therapies to an unprecedented degree. In oncology, for example, AI algorithms can integrate tumor genomics, radiomics, and patient clinical history not only to diagnose cancer but also to predict its behavior and response to treatment. This multi-dimensional approach supports therapeutic regimens that are both effective and minimally invasive. Similarly, in cardiovascular disease, AI can integrate biomarkers derived from imaging, EHR data, and wearable sensors to generate personalized risk profiles and guide the management of chronic conditions. Because AI models can be updated as new data become available, patient stratification criteria can be refined continually, improving treatment selection over time. These advances are likely to make healthcare more cost-effective by reducing trial-and-error in therapy selection and by preventing adverse outcomes through early detection and intervention. As regulatory frameworks evolve to accommodate AI in healthcare, routine clinical use of AI-derived biomarkers becomes increasingly attainable, paving the way for individualized treatment strategies that improve patient outcomes and enhance the overall sustainability of healthcare.

Conclusion

In summary, artificial intelligence is emerging as a transformative tool for identifying biomarkers for personalized therapies. Biomarkers are central to tailoring treatment, and AI leverages vast datasets across genomics, proteomics, imaging, and clinical records to uncover subtle and complex patterns that traditional methods may overlook. Techniques including machine learning, deep learning, and natural language processing facilitate the integration of heterogeneous data, leading to highly predictive biomarker panels that can guide tailored therapeutic interventions. Successful applications and case studies in oncology, cardiovascular disease, and other domains illustrate how AI can improve diagnostic accuracy, better predict treatment responses, and enable the individualization of therapy. Considerable challenges remain, however. Technical issues such as data quality, model interpretability, and computational integration, together with ethical concerns about privacy, bias, and accountability, must be addressed before the potential of AI in this domain can be fully realized. Looking ahead, emerging technologies such as generative models, explainable AI, and IoT-enabled biosensing are poised to advance the field further, with the potential to transform personalized medicine on a global scale. By making biomarker discovery more efficient, accurate, and practical, AI is set to play a pivotal role in the future of individualized therapy, with the prospect of improved patient outcomes and a more sustainable healthcare system.

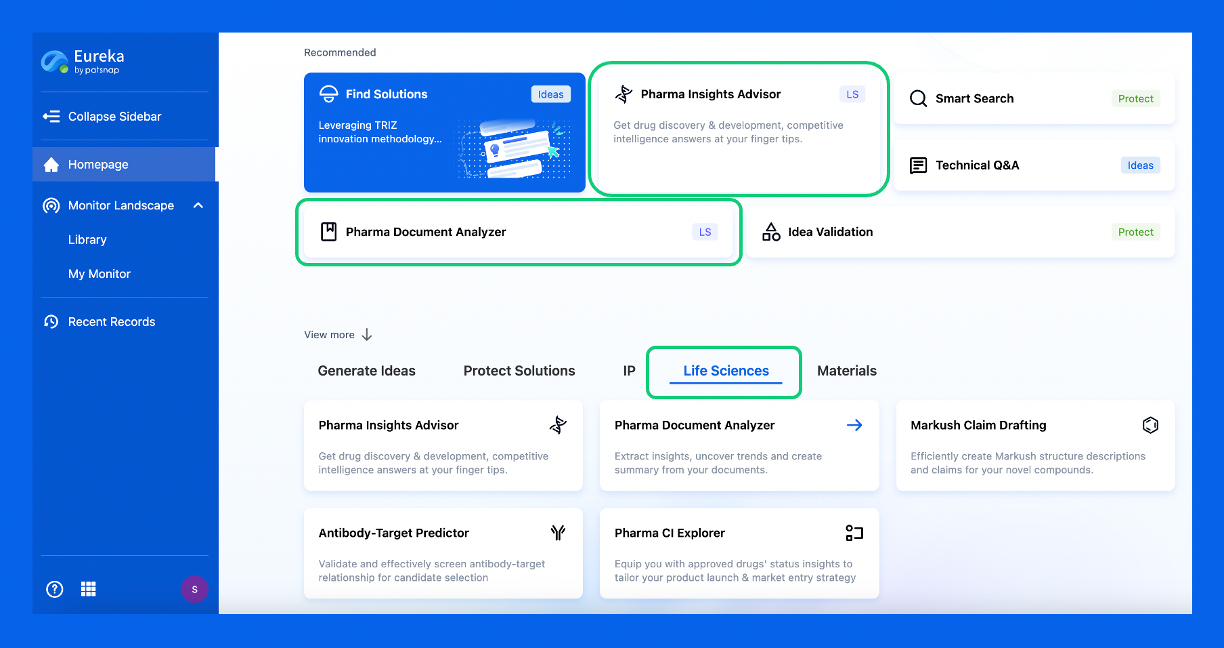

Discover Eureka LS: AI Agents Built for Biopharma Efficiency

Stop wasting time on biopharma busywork. Meet Eureka LS - your AI agent squad for drug discovery.

▶ See how 50+ research teams saved 300+ hours/month

From reducing screening time to simplifying Markush drafting, our AI Agents are ready to deliver immediate value. Explore Eureka LS today and unlock powerful capabilities that help you innovate with confidence.

AI Agents Built for Biopharma Breakthroughs

Accelerate discovery. Empower decisions. Transform outcomes.

Get started for free today!

Accelerate Strategic R&D decision making with Synapse, PatSnap’s AI-powered Connected Innovation Intelligence Platform Built for Life Sciences Professionals.

Start your data trial now!

Synapse data is also accessible to external entities via APIs or data packages. Empower better decisions with the latest in pharmaceutical intelligence.