
How can AI predict the efficacy of drug candidates during the early stages of development?

21 March 2025

Introduction to AI in Drug Development

In recent years, artificial intelligence (AI) has become a cornerstone of modern drug discovery, improving the speed, efficiency, and accuracy with which new therapeutic candidates are identified and developed. AI’s predictive capacity is especially valuable in the early stages of drug development, where it can help forecast the efficacy of drug candidates before costly preclinical and clinical studies begin. By applying machine learning (ML) and deep learning (DL) algorithms to large, multi-dimensional datasets, researchers can simulate, predict, and optimize drug–target interactions and pharmacokinetic/pharmacodynamic properties with growing precision.

Role of AI in Modern Drug Discovery

AI-driven decision-making is now at the forefront of drug discovery. AI is used to mine large biological, chemical, and clinical datasets for patterns that traditional computational methods might miss, and AI systems have been applied to tasks ranging from virtual screening of chemical libraries, binding-affinity prediction, and safety/toxicity assessment to clinical trial design. Their power derives from the ability to learn complex, non-linear relationships across diverse datasets, which enables earlier prediction of drug efficacy. For example, AI can model how a candidate interacts with target proteins at the molecular level, or how it might behave in complex biological systems, even where experimental data for that specific candidate are scarce. AI-driven platforms also often integrate complementary techniques such as natural language processing (NLP) to extract insights from scientific literature and patient records, helping bridge gaps between experimental results and clinical outcomes.

Overview of Drug Development Stages

The development of any new drug can be broadly divided into several key phases: discovery, preclinical development, clinical trials, and regulatory approval. The early stages—discovery and preclinical development—are characterized by identifying therapeutic targets, designing drug candidates, and assessing their basic pharmacological profiles. In these phases, the primary challenges include predicting which chemical entities will exhibit both efficacy and safety, optimizing lead compounds, and efficiently screening large libraries of molecules. AI’s ability to integrate expression data, biochemical assay results, and structural information is particularly useful during these initial stages, where it helps to prioritize candidates that are more likely to be effective in vivo. By focusing on translating in vitro and in silico predictions into viable candidates for further development, AI serves as a vital tool in reducing the financial burden and risk associated with the later stages of drug development.

AI Techniques for Predicting Drug Efficacy

AI approaches for drug efficacy prediction are multifaceted, combining traditional machine learning models with deep learning architectures. These methods let researchers estimate in silico how a potential drug is likely to perform before committing to expensive experimental validation.

Machine Learning Models

Machine learning techniques, which include algorithms such as support vector machines (SVM), random forests (RF), Bayesian networks, and ensemble methods, have been invaluable in predicting drug efficacy. These models operate by identifying patterns in historical and experimental datasets:

- Pattern Recognition and QSAR Modeling: ML models are widely used in quantitative structure–activity relationship (QSAR) modeling to predict the biological activity of compounds from their chemical structure and physicochemical properties. By training on large datasets of compounds with known activity profiles, these models can estimate the likely activity of new molecules, provided those molecules fall within the chemical space (the applicability domain) covered by the training data. For instance, regression models or classification algorithms can be used to predict which small molecules will have strong target affinity and suitable pharmacokinetic properties.

- Integrative Data Analytics: ML models combine chemical, biological, and genomic information to generate a more holistic understanding of drug efficacy. These systems often integrate datasets from public repositories like PubChem, ChEMBL, and DrugBank with experimental preclinical data. Through feature selection and dimensionality reduction, ML models highlight crucial molecular descriptors that correlate with efficacy, reducing noise and improving predictive performance.

- Ensemble and Hybrid Models: In many cases, combining several ML algorithms, each tuned to specific aspects of the dataset, has led to more robust predictions. Ensemble methods, which aggregate predictions from multiple models, have shown improved accuracy in forecasting drug efficacy. Boosting combines many weak learners sequentially into a strong overall predictor, while stacking trains a meta-model on the outputs of several base models; both tend to give more reliable results, particularly with heterogeneous data types.
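To make the QSAR idea concrete, here is a minimal sketch of a similarity-based (k-nearest-neighbour) activity predictor, one of many possible QSAR learners. It assumes each compound's fingerprint has already been computed elsewhere and is stored as a set of "on" bit indices, and it uses Tanimoto similarity to find neighbours. The fingerprints, pIC50 values, and function names (`tanimoto`, `knn_predict`) are hypothetical toy choices, not real compounds or a specific library's API.

```python
from typing import FrozenSet, List, Tuple

# A binary fingerprint represented by the indices of its "on" bits.
Fingerprint = FrozenSet[int]


def tanimoto(a: Fingerprint, b: Fingerprint) -> float:
    """Tanimoto (Jaccard) similarity: shared bits / bits set in either fingerprint."""
    if not a and not b:
        return 1.0
    shared = len(a & b)
    return shared / (len(a) + len(b) - shared)


def knn_predict(
    query: Fingerprint,
    training: List[Tuple[Fingerprint, float]],
    k: int = 3,
) -> float:
    """Similarity-weighted mean activity of the k training compounds most similar to `query`."""
    neighbours = sorted(
        ((tanimoto(query, fp), activity) for fp, activity in training),
        key=lambda pair: pair[0],
        reverse=True,
    )[:k]
    total_similarity = sum(sim for sim, _ in neighbours)
    if total_similarity == 0:
        # No structural overlap with any neighbour: the query is outside the
        # chemical space the training data cover, so no prediction is made.
        raise ValueError("query shares no fingerprint bits with its neighbours")
    return sum(sim * act for sim, act in neighbours) / total_similarity


# Hypothetical toy training set: (fingerprint bits, measured pIC50).
TRAINING = [
    (frozenset({1, 4, 7, 9}), 7.2),
    (frozenset({1, 4, 7, 12}), 6.9),
    (frozenset({2, 5, 8, 11}), 4.8),
    (frozenset({2, 5, 8, 13}), 5.1),
]

if __name__ == "__main__":
    query = frozenset({1, 4, 7, 10})
    print(f"predicted pIC50: {knn_predict(query, TRAINING, k=2):.2f}")
```

Because the query shares most of its bits with the two high-activity compounds, its prediction lands between their values. Refusing to predict when similarity is zero is a crude stand-in for a proper applicability-domain check.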

Deep Learning Approaches

Deep learning, a subfield of machine learning, employs multi-layered neural networks capable of learning hierarchical representations from raw data. DL models are becoming increasingly popular for drug efficacy prediction because of their ability to model complex, non-linear relationships inherent in biological systems:

- Neural Network Architectures: Convolutional neural networks (CNNs), recurrent neural networks (RNNs), and graph neural networks (GNNs) are among the most common DL architectures used in drug discovery. CNNs are particularly useful for image-based data and spatial (for example, grid-based 3D) representations of chemical structures, while RNNs suit sequential data such as SMILES strings, protein sequences, and patient time-series data. GNNs operate directly on molecular graphs, with atoms as nodes and bonds as edges, and learn how structural features translate into drug efficacy.

- De Novo Drug Design and Virtual Screening: Deep learning models contribute not only to efficacy prediction but also to the de novo generation of drug candidates. For example, variational autoencoders and generative adversarial networks (GANs) have been used to generate novel molecular structures with optimized properties. These models learn latent representations of chemical space and propose molecules predicted to meet specified efficacy-related criteria, streamlining virtual screening. This allows efficacy to be estimated before any synthesis or in vitro screening is performed.

- Simulation and Molecular Docking Prediction: DL algorithms have been integrated with traditional molecular docking simulations. Trained on structural data from resources such as the Protein Data Bank (PDB), they help predict binding affinities between drugs and their target proteins. This integration can make classical docking faster and, in some cases, more accurate at predicting drug–target interactions, and may help flag conformational changes that influence efficacy.

- Time-Series Predictions for Efficacy: Some DL models are specifically tailored to predict dynamic responses over time, such as changes in biomarker levels in response to a drug candidate. These models can be trained on longitudinal data from cell-based assays, predicting how a candidate’s efficacy evolves during the treatment period. This helps in identifying candidates that not only have initial efficacy but also maintain therapeutic benefits during prolonged exposure.
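The central operation behind the GNNs mentioned above is message passing. Each atom updates its feature vector by aggregating its bonded neighbours' features, and a permutation-invariant readout (here a sum) turns the atom features into one molecule-level vector that a downstream layer could map to an efficacy score. The sketch below is a single hand-rolled layer in plain Python. The weights, the three-atom graph, and all function names are hypothetical; real models learn their weights and are usually built with dedicated deep-learning libraries.

```python
from typing import Dict, List

Vector = List[float]


def relu(x: float) -> float:
    return x if x > 0 else 0.0


def mat_vec(w: List[Vector], v: Vector) -> Vector:
    """Multiply a weight matrix (given as a list of rows) by a vector."""
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def message_passing_layer(
    features: Dict[int, Vector],
    adjacency: Dict[int, List[int]],
    w_self: List[Vector],
    w_neigh: List[Vector],
) -> Dict[int, Vector]:
    """One GNN layer: h_v' = ReLU(W_self h_v + W_neigh * sum of neighbour features h_u)."""
    updated: Dict[int, Vector] = {}
    for atom, h in features.items():
        # Sum-aggregate the feature vectors of bonded neighbours (the "messages").
        agg = [0.0] * len(h)
        for nbr in adjacency[atom]:
            agg = [a + b for a, b in zip(agg, features[nbr])]
        self_part = mat_vec(w_self, h)
        neigh_part = mat_vec(w_neigh, agg)
        updated[atom] = [relu(s + n) for s, n in zip(self_part, neigh_part)]
    return updated


def readout(features: Dict[int, Vector]) -> Vector:
    """Sum pooling: the molecule embedding does not depend on how atoms are numbered."""
    dim = len(next(iter(features.values())))
    return [sum(h[i] for h in features.values()) for i in range(dim)]


# Hypothetical 3-atom chain (0-1-2) with 2-dimensional atom features.
FEATURES = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
ADJACENCY = {0: [1], 1: [0, 2], 2: [1]}
W_SELF = [[1.0, 0.0], [0.0, 1.0]]
W_NEIGH = [[0.5, 0.0], [0.0, 0.5]]

if __name__ == "__main__":
    h = message_passing_layer(FEATURES, ADJACENCY, W_SELF, W_NEIGH)
    print(readout(h))
```

Sum pooling is chosen here because renumbering the atoms of the same molecule must not change its prediction. Stacking several such layers lets information travel across multiple bonds.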

Data Requirements and Integration

AI models rely heavily on the quality, volume, and integration of data. Accurate prediction of drug candidate efficacy is directly tied to how well these models are trained using diverse and comprehensive datasets.

Types of Data Used

The quality of AI predictions in drug development depends on the integration of multiple types of data:

- Chemical Structure Data: Detailed molecular representations, including 2D and 3D structures, molecular fingerprints, and physicochemical descriptors, form the backbone of many efficacy prediction models. These data allow AI systems to assess chemical properties and infer potential biological activity.

- Biological and Genomic Data: Information on target proteins, genetic pathways, and cellular biomarkers is critical. Data from high-throughput sequencing, gene expression profiling, proteomics, and other omics technologies provide insights into the biological context in which a drug candidate operates. Differentiated neuronal cultures, for instance, have been used to predict the efficacy profiles of antisense oligonucleotides in high-throughput screening assays, demonstrating the utility of biological data in efficacy prediction.

- Pharmacokinetic and Toxicological Data: To predict efficacy accurately, AI models also integrate data on absorption, distribution, metabolism, excretion, and toxicity (ADMET). Early-stage prediction models analyze these properties to help identify candidates that not only bind their targets effectively but also reach therapeutic concentrations at the site of action without causing adverse effects.

- Clinical and Real-World Data: Even during early development, historical clinical data and real-world data (RWD) play a role. Although such data become more prominent in later stages, incorporating them early helps refine predictive models. AI systems use data from previous clinical trials and electronic health records (EHRs) to simulate potential clinical outcomes and identify patient subpopulations likely to respond to specific therapies.

- Imaging and Phenotypic Data: Advanced AI models also leverage imaging data from cell-based assays and digital biomarkers. These data types are valuable in predicting how a drug candidate influences cellular morphology and function, thereby serving as surrogate markers for efficacy.
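As a simple example of how ADMET-related descriptors can triage candidates before any efficacy model is run, the sketch below applies Lipinski's rule of five. This is a widely used heuristic for oral drug-likeness, and it is swapped in here for illustration because the text above does not name a specific filter. The sketch assumes the four descriptors were already computed by a cheminformatics toolkit. The candidate names, descriptor values, and helper names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Descriptors:
    name: str
    mol_weight: float  # molecular weight, Da
    logp: float        # calculated octanol-water partition coefficient
    h_donors: int      # hydrogen-bond donors
    h_acceptors: int   # hydrogen-bond acceptors


def rule_of_five_violations(d: Descriptors) -> int:
    """Count how many of Lipinski's four thresholds a compound exceeds."""
    return sum([
        d.mol_weight > 500,
        d.logp > 5,
        d.h_donors > 5,
        d.h_acceptors > 10,
    ])


def passes_rule_of_five(d: Descriptors, max_violations: int = 1) -> bool:
    """The heuristic flags poor oral absorption as more likely with two or more violations."""
    return rule_of_five_violations(d) <= max_violations


def triage(candidates: List[Descriptors]) -> List[str]:
    """Names of candidates that survive the filter, in input order."""
    return [c.name for c in candidates if passes_rule_of_five(c)]


# Hypothetical candidates.
CANDIDATES = [
    Descriptors("cand-A", 342.4, 2.1, 2, 5),
    Descriptors("cand-B", 612.7, 5.8, 3, 9),  # two violations: MW and logP
    Descriptors("cand-C", 518.0, 4.2, 1, 8),  # one violation: MW
]

if __name__ == "__main__":
    print(triage(CANDIDATES))
```

Passing such a filter says nothing about efficacy. It is only a coarse prefilter that keeps later, more expensive models focused on compounds with a plausible pharmacokinetic profile.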

Data Integration Challenges

While the potential for AI-driven efficacy prediction is enormous, several challenges must be addressed:

- Heterogeneity and Quality of Data: Data sourced from different experiments, labs, or clinical settings often vary in quality, format, and resolution. Standardization and normalization are essential to ensure that the integrated data does not introduce biases in the models.

- Volume and Dimensionality: The vast amount of data involved, from high-throughput screening to multi-omics datasets, creates a significant challenge in terms of computational resources and data management. Advanced big data strategies, including distributed computing and cloud-based infrastructures, are required to process such datasets efficiently.

- Handling Missing Data: Often, datasets in drug discovery suffer from missing values, partly due to the inherent variability in experimental conditions. AI models must incorporate mechanisms like data imputation or semi-supervised learning to handle incomplete datasets while maintaining robust predictions.

- Data Integration Across Disciplines: Integrating chemical, biological, pharmacokinetic, and clinical data requires sophisticated algorithms that can reconcile differences in data structure and scale. Multimodal learning techniques, which combine various data types within a unified framework, are increasingly being developed to meet this challenge.

- Interpretability and Explainability: A critical challenge for AI models, especially deep learning models, is explainability: understanding how a model arrived at a given prediction. This matters greatly in drug development, where regulators and researchers need to be able to trust AI predictions. Research into explainable AI (XAI) methods, such as feature-attribution techniques, aims to make model outputs more interpretable for human experts.
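Two of the integration steps listed above, handling missing values and putting features measured on different scales onto a common footing, can be sketched in a few lines. The example below uses column-mean imputation followed by z-score standardization. Both are simple baseline choices assumed here for illustration; more sophisticated imputation or semi-supervised approaches may be preferable in practice. The two-column feature matrix and helper names are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Row = List[Optional[float]]


def column_stats(rows: List[Row]) -> List[Tuple[float, float]]:
    """Mean and population standard deviation of each column, ignoring missing (None) values."""
    stats = []
    for col in range(len(rows[0])):
        observed = [r[col] for r in rows if r[col] is not None]
        if not observed:
            raise ValueError(f"column {col} has no observed values")
        mean = sum(observed) / len(observed)
        var = sum((x - mean) ** 2 for x in observed) / len(observed)
        stats.append((mean, math.sqrt(var)))
    return stats


def impute_and_standardize(
    rows: List[Row], stats: List[Tuple[float, float]]
) -> List[List[float]]:
    """Replace missing values with the column mean, then z-score each column."""
    out = []
    for r in rows:
        new_row = []
        for value, (mean, std) in zip(r, stats):
            filled = mean if value is None else value
            # A constant column carries no information; map it to 0 instead of dividing by 0.
            new_row.append(0.0 if std == 0 else (filled - mean) / std)
        out.append(new_row)
    return out


# Hypothetical features from two sources on different scales:
# column 0 = assay readout (% inhibition), column 1 = target expression (log2 TPM).
TRAIN = [
    [60.0, 5.0],
    [None, 7.0],
    [20.0, None],
    [40.0, 6.0],
]

if __name__ == "__main__":
    stats = column_stats(TRAIN)
    for row in impute_and_standardize(TRAIN, stats):
        print([round(x, 3) for x in row])
```

The statistics should be computed on the training set only and then reused unchanged for validation and test data. Recomputing them on held-out data leaks information and inflates apparent performance.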

Case Studies and Applications

Practical applications and case studies of AI in early drug development have produced promising results in predicting drug efficacy, but they have also exposed limitations that must be overcome.

Successful Applications of AI in Drug Efficacy Prediction

Several examples illustrate how AI has been used to predict the efficacy profiles of drug candidates:

- High-Throughput Screening Using Standardized Neural Cultures: Patents describe methods where differentiated neural cell cultures from primate species are used in in vitro assays to screen antisense oligonucleotides. These methods rely on dissociation, reseeding, and robust cell culture formats that are amenable to high-throughput screening. AI systems integrated into these screening assays help predict which candidates have the highest efficacy based on detailed cellular responses and screening data.

- Computational Prediction of Pharmacokinetics and Toxicity: AI models have been employed to predict ADMET properties effectively at an early stage. By simulating drug interactions in computational models based on historical data, these systems forecast not only the efficacy but also anticipate potential adverse effects. Advanced deep learning approaches have enabled pharmaceutical researchers to prioritize compounds that show a favorable balance of efficacy and safety, reducing the need for extensive animal testing and early-phase clinical experiments.

- Virtual Screening and De Novo Design: Deep learning models that integrate generative adversarial networks (GANs) and reinforcement learning have been used to generate novel molecular structures with predicted efficacy against specific biological targets. For instance, AI models have been demonstrated to perform virtual screening on millions of compounds and then propose candidate molecules with optimized binding affinities and predicted in vivo performance. These techniques have advanced the field of de novo drug design and have already shown promising results in early-stage drug discovery pipelines.

- Predictive Modeling Using Multi-Omics Data: AI approaches that incorporate genomics, transcriptomics, and proteomics data have been used to predict the sensitivity of cancer cells to specific drug candidates. By building models that interpret cellular response patterns to anti-cancer agents, researchers can forecast which candidates are likely to be efficacious in specific patient subpopulations. This work contributes to precision oncology, where more reliable efficacy predictions support personalized therapeutic strategies.

- Real-World Data Integration for Early Predictions: Although clinical data are used most extensively in later stages, early-stage AI models also draw on preclinical and pilot clinical trial data to simulate drug efficacy. Public databases of historical efficacy outcomes and compound screening results also support the training of these models, helping to ground their predictions in real-world evidence.
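Virtual-screening results like those described above are commonly judged by how strongly a model concentrates known actives at the top of its ranking. The following is a minimal sketch of one such metric, the enrichment factor at a chosen fraction of the ranked library. The scores and activity labels are hypothetical. In practice, enrichment is usually reported alongside other metrics such as ROC AUC.

```python
from typing import List, Tuple


def enrichment_factor(scored: List[Tuple[float, bool]], fraction: float) -> float:
    """EF = (active rate among the top-ranked fraction) / (active rate in the whole library).

    `scored` holds (predicted score, is_active) pairs; higher scores rank first.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    total_actives = sum(1 for _, active in scored if active)
    if total_actives == 0:
        raise ValueError("library contains no actives")
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    # Size of the selected top slice; at least one compound.
    n_top = max(1, round(fraction * len(ranked)))
    top_actives = sum(1 for _, active in ranked[:n_top] if active)
    return (top_actives / n_top) / (total_actives / len(ranked))


# Hypothetical screen of 10 compounds, 2 of which are truly active.
LIBRARY = [
    (0.91, True), (0.85, False), (0.80, True), (0.40, False), (0.35, False),
    (0.30, False), (0.22, False), (0.15, False), (0.10, False), (0.05, False),
]

if __name__ == "__main__":
    for frac in (0.2, 0.3, 1.0):
        print(f"EF@{frac:.0%}: {enrichment_factor(LIBRARY, frac):.2f}")
```

An enrichment factor of 1 means the model does no better than random selection. The maximum achievable value depends on the fraction chosen and the proportion of actives in the library.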

Limitations and Challenges

Despite these successes, several limitations persist in using AI to predict drug efficacy during early development:

- Data Quality and Completeness: Incomplete or noisy data remain a significant hurdle. Even though AI models can work with large volumes of data, the heterogeneity and quality of the input often affect prediction accuracy. In cases where data is sparse, such as for novel targets or rare diseases, the predictive performance may suffer.

- Model Interpretability and Trust: One of the recurring challenges in AI drug discovery is understanding and explaining how models arrive at a prediction. Regulatory agencies and clinical researchers require transparent models, but many DL approaches remain “black boxes,” limiting their broader acceptance in decision-making.

- Generalizability Across Different Drug Classes: AI models trained on specific datasets may not generalize well to all chemical classes or therapeutic areas. For example, models effective for predicting efficacy in oncology might not translate directly to antiviral therapies. This calls for continuous retraining and adaptation of models as new data become available.

- Integration of Multi-Modal Data: Successfully integrating chemical, biological, and clinical data into a single predictive framework remains complex. While multi-modal learning frameworks show promise, ensuring that the diverse datasets interact harmoniously without losing critical information is still a work in progress.

- Computational Resource Demands: Many advanced DL techniques require significant computational power, particularly when processing terabytes of data. The performance of these models is directly tied to the infrastructure available, which can be a limiting factor for smaller research institutions.
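Model-agnostic explanation methods offer one partial response to the interpretability concern above. The sketch below implements permutation feature importance. It shuffles one input feature at a time and measures how much the model's error grows. The "model" is a hypothetical fixed linear scorer standing in for any trained predictor, and the data are synthetic. A larger error increase indicates that the model relies more heavily on that feature, not that the feature is causally relevant to efficacy.

```python
import random
from typing import Callable, List

Model = Callable[[List[float]], float]


def mean_squared_error(model: Model, X: List[List[float]], y: List[float]) -> float:
    return sum((model(x) - t) ** 2 for x, t in zip(X, y)) / len(y)


def permutation_importance(
    model: Model,
    X: List[List[float]],
    y: List[float],
    n_repeats: int = 20,
    seed: int = 0,
) -> List[float]:
    """Average increase in MSE when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild the rows with only column j permuted.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mean_squared_error(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def toy_model(x: List[float]) -> float:
    """Hypothetical predictor: strong reliance on feature 0, weak on feature 1, none on feature 2."""
    return 2.0 * x[0] + 0.1 * x[1]


if __name__ == "__main__":
    data_rng = random.Random(42)
    X = [[data_rng.gauss(0, 1) for _ in range(3)] for _ in range(200)]
    y = [toy_model(x) for x in X]  # noise-free targets keep the illustration clear
    print([round(v, 3) for v in permutation_importance(toy_model, X, y)])
```

Because the method only calls the model, it works equally well for random forests and deep networks. It can be misleading, however, when features are strongly correlated, as many molecular descriptors are.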

Future Directions and Innovations

The evolving landscape of AI in early-stage drug development suggests both exciting prospects and areas where further innovation is needed. As AI methodologies continue to mature, several emerging trends and potential advancements promise to further enhance the prediction of drug candidate efficacy.

Emerging Technologies

- Explainable AI (XAI): One of the most critical future directions is the development of explainable AI systems. XAI aims to make model decision processes transparent, allowing researchers to see which features influenced a prediction. This is crucial in drug development, where trust in AI predictions can determine whether a candidate drug advances. As XAI research matures, regulatory acceptance of AI-driven decisions will likely improve, paving the way for broader use of these models in preclinical and clinical decision-making.

- Advanced Graph Neural Networks (GNNs): The use of GNNs to predict drug efficacy, particularly by modeling complex drug–target networks, is gaining traction. GNNs can capture the topological and interaction properties of molecular graphs, which can yield more precise predictions of binding affinities and efficacy outcomes. New architectures and training techniques are expected to improve the performance and scalability of these networks, allowing more comprehensive virtual screening.

- Integration of Real-Time Data Streams: As digital healthcare continues to evolve, AI models will increasingly integrate real-time data from wearable devices, electronic health records, and other digital biomarkers. These continuous data streams can inform models of dynamic drug responses, thereby predicting not only initial efficacy but also the durability of a drug candidate’s therapeutic effects. Such systems could continuously update predictions as new data emerges, transitioning from static models to dynamic, adaptive prediction frameworks.

- Simulation and Virtual Clinical Trials: Simulation models that combine AI with traditional pharmacokinetic/pharmacodynamic (PK/PD) modeling offer the prospect of virtual clinical trials. These systems mimic human responses to a drug candidate in silico, allowing researchers to test multiple dosing strategies and predict efficacy outcomes before real-world trials are conducted. This approach can save time and money and reduce risk by identifying potential failures earlier in the development process.
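The PK/PD models that such virtual trials build on can be quite small. The sketch below couples a one-compartment oral-absorption PK model (the Bateman equation) with an Emax pharmacodynamic model, then compares predicted peak effects for several candidate doses. All parameter values and helper names are hypothetical placeholders, not estimates for any real compound. A real virtual trial would also add between-patient variability and repeated dosing.

```python
import math
from dataclasses import dataclass


@dataclass
class PKPDParams:
    """Hypothetical parameters for illustration only."""
    f: float = 0.8        # oral bioavailability (fraction absorbed)
    ka: float = 1.2       # first-order absorption rate constant, 1/h
    ke: float = 0.2       # first-order elimination rate constant, 1/h (must differ from ka)
    v: float = 40.0       # apparent volume of distribution, L
    e_max: float = 100.0  # maximal effect, % inhibition
    ec50: float = 1.0     # concentration giving half-maximal effect, mg/L


def concentration(t_h: float, dose_mg: float, p: PKPDParams) -> float:
    """Bateman equation: plasma concentration (mg/L) t_h hours after a single oral dose."""
    scale = p.f * dose_mg * p.ka / (p.v * (p.ka - p.ke))
    return scale * (math.exp(-p.ke * t_h) - math.exp(-p.ka * t_h))


def effect(conc: float, p: PKPDParams) -> float:
    """Emax model: effect approaches e_max hyperbolically as concentration rises."""
    return p.e_max * conc / (p.ec50 + conc)


def peak_effect(dose_mg: float, p: PKPDParams, hours: float = 24.0, step_h: float = 0.1) -> float:
    """Simulate on a regular time grid and return the highest predicted effect."""
    n_steps = round(hours / step_h)
    return max(effect(concentration(i * step_h, dose_mg, p), p) for i in range(n_steps + 1))


if __name__ == "__main__":
    params = PKPDParams()
    for dose in (25.0, 50.0, 100.0, 200.0):
        print(f"{dose:>6.0f} mg -> peak effect {peak_effect(dose, params):5.1f} %")
```

Running the dose sweep shows the saturating shape of the Emax curve: doubling the dose raises the peak effect, but by progressively smaller amounts. This is the kind of trade-off a simulated dosing study is meant to expose early.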

Potential Advancements in AI for Drug Development

- Automated Data Curation and Standardization: One current challenge is the heterogeneity of datasets. Future advances are likely to focus on automated systems for data cleaning, normalization, and integration that combine data from diverse sources. Such systems should help ensure that AI models are trained on high-quality, standardized datasets, improving their predictive accuracy.

- Hybrid Models Combining ML and Mechanistic Simulations: Integrating traditional mechanistic models of drug behavior with machine learning approaches can yield hybrid models that combine the best of both worlds. Such models will use physics-based simulations to anchor predictions in biological reality while utilizing AI to handle complex patterns that are difficult to capture with mechanistic models alone. This combination is expected to improve the overall performance of drug efficacy predictions and streamline drug development pipelines.

- Personalized Medicine and Precision Oncology: With the increasing availability of genomics and proteomics data, AI systems will be tailored to predict drug efficacy on a patient-specific basis. This personalized approach will harness multi-omics data to identify biomarkers that correlate with drug response, thereby enabling more targeted therapies. As the field of precision oncology expands, AI models will play a crucial role in selecting optimal therapeutic regimens for individual patients based on their unique molecular profiles.

- Cloud and High-Performance Computing Integration: The computational intensity of deep learning models necessitates robust computing infrastructure. Future integration with cloud-based platforms and high-performance computing clusters will facilitate the processing of massive datasets, accelerating model training and inference. This will democratize access to advanced AI tools in drug development, enabling smaller institutions to participate in cutting-edge research.

- Regulatory and Collaborative Frameworks: To integrate AI predictions into the decision-making process for drug efficacy, regulatory agencies will need frameworks that assess and validate AI methodologies. Collaborative efforts between academic institutions, biotech companies, and regulatory bodies are expected to establish standardized protocols for AI in drug discovery, ensuring that the predictions are reproducible, transparent, and scientifically sound.
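One common way to build the hybrid ML/mechanistic models described above is residual learning. A mechanistic model supplies the baseline prediction, and a data-driven component learns only the systematic error the mechanism misses. The sketch below works under strong simplifying assumptions. It uses an Emax concentration-effect equation as the "mechanistic" part and an ordinary-least-squares line, fitted to the residuals against one covariate, as the "learned" part. The observations, the covariate, the parameter values, and the `HybridModel` class are all hypothetical.

```python
from typing import List, Tuple


def emax_effect(conc: float, e_max: float = 100.0, ec50: float = 1.0) -> float:
    """Mechanistic baseline: hyperbolic concentration-effect relationship."""
    return e_max * conc / (ec50 + conc)


def fit_line(x: List[float], y: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


class HybridModel:
    """Mechanistic prediction plus a learned linear correction in one covariate."""

    def __init__(self) -> None:
        self.a = 0.0
        self.b = 0.0

    def fit(self, conc: List[float], covariate: List[float], observed: List[float]) -> "HybridModel":
        # Learn only what the mechanistic model gets wrong.
        residuals = [obs - emax_effect(c) for c, obs in zip(conc, observed)]
        self.a, self.b = fit_line(covariate, residuals)
        return self

    def predict(self, conc: float, covariate: float) -> float:
        return emax_effect(conc) + self.a + self.b * covariate


# Hypothetical data whose deviation from the Emax curve is linear in a covariate.
CONC = [0.5, 1.0, 2.0, 4.0]
COVARIATE = [0.0, 1.0, 2.0, 3.0]
OBSERVED = [emax_effect(c) + 5.0 - 2.0 * z for c, z in zip(CONC, COVARIATE)]

if __name__ == "__main__":
    model = HybridModel().fit(CONC, COVARIATE, OBSERVED)
    print(f"correction: {model.a:.2f} + {model.b:.2f} * covariate")
    print(f"prediction at 3 mg/L, covariate 1.5: {model.predict(3.0, 1.5):.2f}")
```

Keeping the mechanistic term fixed means the learned part only needs to explain the residual structure. This typically requires far less data than learning the full concentration-effect relationship from scratch, and the model keeps the mechanism's behaviour outside the range of the training data.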

Conclusion

In conclusion, AI has already made significant inroads into predicting the efficacy of drug candidates during the early stages of development. Machine learning and deep learning techniques make it possible to model complex molecular interactions and biological systems with increasing accuracy, reducing the time and cost associated with traditional experimental methods. AI draws on a diverse array of data, from chemical structures and multi-omics profiles to real-world clinical data, to forecast which compounds are most likely to be efficacious, thereby streamlining the drug discovery pipeline.

On the machine learning side, traditional models such as support vector machines, random forests, and ensemble methods have proven effective in QSAR and related analyses by learning the relationships between molecular properties and biological or therapeutic outcomes. Deep learning approaches extend these capabilities with neural network architectures that capture non-linear interactions and complex spatial relationships in molecular data.

Data integration remains a critical challenge, with the need to reconcile heterogeneous data sources and ensure data quality for robust model training. Disparate information ranging from chemical descriptors to high-dimensional genomic profiles must be normalized and combined to fuel AI models that can provide accurate, clinically relevant predictions. Addressing issues such as missing data, variability in experimental conditions, and multi-modal data fusion is essential for the continued success of these systems.

There are already many illustrative case studies demonstrating successful applications of AI in early-stage efficacy prediction—from virtual screening and de novo compound design to in vitro cellular assays using standardized systems. However, challenges such as interpretability, generalizability, and computational resource demands remain. Future directions are anticipated to include advancements in explainable AI, the integration of real-time data streams, the development of hybrid models that combine mechanistic and AI-based predictions, and the establishment of standardized regulatory frameworks.

Overall, the future of AI in drug development is extremely promising. With emerging technologies and innovative data integration methods, AI will continue to enhance the predictive accuracy of drug candidate efficacy, paving the way for more effective and personalized therapies. The continued collaboration between AI experts, biopharmaceutical researchers, and regulatory bodies is essential to fully realize the potential of these technologies, ultimately accelerating the transition from bench to bedside and improving patient outcomes through more informed drug development decisions.

By addressing current limitations and continually advancing computational methods, AI is poised to transform early-stage drug development into a more predictive, cost-effective, and efficient process—a transformation that holds great promise for the future of healthcare and personalized medicine.

In recent years, artificial intelligence (AI) has become a cornerstone of modern drug discovery, revolutionizing the speed, efficiency, and accuracy with which new therapeutic candidates are identified and developed. AI’s predictive capacity is especially valuable in the early stages of drug development, where it can forecast the efficacy of drug candidates long before costly preclinical and clinical studies commence. By leveraging machine learning (ML) and deep learning (DL) algorithms along with vast amounts of multi-dimensional data, researchers are now able to simulate, predict, and optimize drug-target interactions and pharmacokinetic/pharmacodynamic properties with unprecedented precision.

Role of AI in Modern Drug Discovery

AI-driven decision-making stands at the forefront of modern drug discovery. It is used to mine extensive biological, chemical, and clinical datasets to identify patterns that traditional computational methods might miss. AI systems have been implemented to perform tasks ranging from virtual screening of chemical libraries, prediction of binding affinities, and safety/toxicity assessments to optimizing clinical trial designs. The power of AI derives from its ability to learn complex, non-linear relationships from diverse datasets, thereby enabling early prediction of drug efficacy. For example, AI can model how a candidate interacts with target proteins at the molecular level or how it might behave in complex biological systems even when experimental data are scarce. Moreover, AI-driven platforms often integrate complementary techniques such as natural language processing (NLP) to extract insights from scientific literature and patient records, bridging gaps between experimental results and clinical outcomes.

Overview of Drug Development Stages

The development of any new drug can be broadly divided into several key phases: discovery, preclinical development, clinical trials, and regulatory approval. The early stages—discovery and preclinical development—are characterized by identifying therapeutic targets, designing drug candidates, and assessing their basic pharmacological profiles. In these phases, the primary challenges include predicting which chemical entities will exhibit both efficacy and safety, optimizing lead compounds, and efficiently screening large libraries of molecules. AI’s ability to integrate expression data, biochemical assay results, and structural information is particularly useful during these initial stages, where it helps to prioritize candidates that are more likely to be effective in vivo. By focusing on translating in vitro and in silico predictions into viable candidates for further development, AI serves as a vital tool in reducing the financial burden and risk associated with the later stages of drug development.

AI Techniques for Predicting Drug Efficacy

AI approaches for drug efficacy prediction are multifaceted, combining both traditional machine learning models and advanced deep learning architectures. These methods empower researchers to forecast how a potential drug will perform based solely on computational predictions before engaging in expensive experimental validations.

Machine Learning Models

Machine learning techniques, which include algorithms such as support vector machines (SVM), random forests (RF), Bayesian networks, and ensemble methods, have been invaluable in predicting drug efficacy. These models operate by identifying patterns in historical and experimental datasets:

- Pattern Recognition and QSAR Modeling: ML models are widely used in quantitative structure–activity relationships (QSAR) to predict the biological activity of compounds based on their chemical structure and physicochemical properties. By training on large datasets of compounds with known activity profiles, these models can extrapolate the likely efficacy of new molecules. For instance, by using regression models or classification algorithms, researchers can predict which small molecules will have strong target affinity and suitable pharmacokinetic properties.

- Integrative Data Analytics: ML models combine chemical, biological, and genomic information to generate a more holistic understanding of drug efficacy. These systems often integrate datasets from public repositories like PubChem, ChEMBL, and DrugBank with experimental preclinical data. Through feature selection and dimensionality reduction, ML models highlight crucial molecular descriptors that correlate with efficacy, reducing noise and improving predictive performance.

- Ensemble and Hybrid Models: In many cases, combining several ML algorithms—each tuned to specific aspects of the dataset—has led to more robust predictions. Ensemble methods, which aggregate predictions from multiple algorithms, have demonstrated enhanced accuracy in forecasting drug efficacy. The use of stacking and boosting techniques, where weaker models are combined to create a strong overall predictor, results in more reliable outcomes, particularly when dealing with heterogeneous data types.

Deep Learning Approaches

Deep learning, a subfield of machine learning, employs multi-layered neural networks capable of learning hierarchical representations from raw data. DL models are becoming increasingly popular for drug efficacy prediction because of their ability to model complex, non-linear relationships inherent in biological systems:

- Neural Network Architectures: Convolutional neural networks (CNNs), recurrent neural networks (RNNs), and graph neural networks (GNNs) are among the most common DL architectures used in drug discovery. CNNs are particularly useful for processing image-based data and spatial representations of chemical structures, while RNNs excel at sequential data like molecular sequences and patient time-series data. GNNs can effectively model structured data such as molecular graphs, predicting how molecular interactions translate into drug efficacy.

- De Novo Drug Design and Virtual Screening: Deep learning models contribute not only to efficacy prediction but also to the de novo generation of drug candidates. For example, variational autoencoders and generative adversarial networks (GANs) have been adopted to generate novel molecular structures with optimized properties. These models learn latent representations of chemical space and design molecules that satisfy specific efficacy criteria, thus streamlining the virtual screening process. This approach allows the prediction of efficacy before any synthesis or in vitro screening is performed.

- Simulation and Molecular Docking Prediction: Advanced DL algorithms have been integrated with traditional molecular docking simulations. They help predict binding affinities between drugs and their target proteins by learning from the structural data available in protein databases. This integration augments the classical docking techniques by providing fast, accurate predictions of drug-target interactions and can identify subtle efficacy-influencing conformational changes.

- Time-Series Predictions for Efficacy: Some DL models are specifically tailored to predict dynamic responses over time, such as changes in biomarker levels in response to a drug candidate. These models can be trained on longitudinal data from cell-based assays, predicting how a candidate’s efficacy evolves during the treatment period. This helps in identifying candidates that not only have initial efficacy but also maintain therapeutic benefits during prolonged exposure.
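To illustrate how a GNN turns a molecular graph into a fixed-size representation, the sketch below implements one round of sum-aggregation message passing followed by sum pooling, in plain Python. It is a toy under stated assumptions: atoms carry hypothetical one-hot features, the weight matrices are hand-set rather than learned, and the function names are illustrative. In a real model the weights are trained, and the pooled vector feeds a prediction head such as an efficacy or affinity regressor.

```python
# Toy GNN sketch: hypothetical helper names, hand-set (untrained) weights.


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def relu(vector):
    return [max(0.0, v) for v in vector]


def message_passing_layer(features, adjacency, w_self, w_neigh):
    """One GNN layer: h_v' = ReLU(W_self h_v + W_neigh * sum of neighbor features)."""
    updated = {}
    for atom, h in features.items():
        # Aggregate "messages" by summing the neighbors' feature vectors
        aggregated = [0.0] * len(h)
        for neighbor in adjacency[atom]:
            aggregated = [a + b for a, b in zip(aggregated, features[neighbor])]
        combined = [s + n for s, n in zip(matvec(w_self, h), matvec(w_neigh, aggregated))]
        updated[atom] = relu(combined)
    return updated


def readout(features):
    """Sum-pool atom embeddings into a single molecule-level vector."""
    dim = len(next(iter(features.values())))
    return [sum(h[i] for h in features.values()) for i in range(dim)]


# Heavy-atom graph of ethanol (C-C-O); features are one-hot [is_carbon, is_oxygen]
features = {0: [1.0, 0.0], 1: [1.0, 0.0], 2: [0.0, 1.0]}
adjacency = {0: [1], 1: [0, 2], 2: [1]}
w_self = [[1.0, 0.0], [0.0, 1.0]]   # hypothetical, untrained weights
w_neigh = [[0.5, 0.0], [0.0, 0.5]]

atom_embeddings = message_passing_layer(features, adjacency, w_self, w_neigh)
molecule_vector = readout(atom_embeddings)
print(molecule_vector)  # → [3.5, 1.5]
```

Sum aggregation and sum pooling make the output independent of the order in which atoms are numbered. This matters because a molecule has no canonical atom order.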

Data Requirements and Integration

AI models rely heavily on the quality, volume, and integration of data. Accurate prediction of drug candidate efficacy is directly tied to how well these models are trained using diverse and comprehensive datasets.

Types of Data Used

The accuracy of AI predictions in drug development depends on the integration of multiple types of data:

- Chemical Structure Data: Detailed molecular representations, including 2D and 3D structures, molecular fingerprints, and physicochemical descriptors, form the backbone of many efficacy prediction models. These data allow AI systems to assess chemical properties and infer potential biological activity.

- Biological and Genomic Data: Information on target proteins, genetic pathways, and cellular biomarkers is critical. Data from high-throughput sequencing, gene expression profiling, proteomics, and other omics technologies provide insights into the biological context in which a drug candidate operates. Differentiated neuronal cultures, for instance, have been used to predict the efficacy profiles of antisense oligonucleotides in high-throughput screening assays, demonstrating the utility of biological data in efficacy prediction.

- Pharmacokinetic and Toxicological Data: To predict efficacy accurately, AI models also integrate data on absorption, distribution, metabolism, excretion, and toxicity (ADMET). Early-stage prediction models analyze these properties to help identify candidates that not only bind their targets effectively but also reach therapeutic concentrations in the body without causing adverse effects.

- Clinical and Real-World Data: Even during early development, historical clinical data and real-world data (RWD) play a role. Although such data become more prominent in later stages, incorporating them early helps refine predictive models. AI systems use data from previous clinical trials and electronic health records (EHRs) to simulate potential clinical outcomes and identify patient subpopulations likely to respond to specific therapies.

- Imaging and Phenotypic Data: Advanced AI models also leverage imaging data from cell-based assays and digital biomarkers. These data types are valuable in predicting how a drug candidate influences cellular morphology and function, thereby serving as surrogate markers for efficacy.
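Chemical structure data are often compared using molecular fingerprints and the Tanimoto coefficient, which for two sets of on-bits A and B is |A ∩ B| / |A ∪ B|. The sketch below assumes fingerprints have already been computed. In practice a cheminformatics toolkit such as RDKit would generate, for example, Morgan/ECFP bit vectors. Here they are stored as sets of on-bit indices, and the bit indices and compound names are made up. It ranks a small hypothetical library by similarity to a known active, the simplest form of ligand-based virtual screening.

```python
# Minimal sketch: hypothetical helper names, invented fingerprints.


def tanimoto(fp_a, fp_b):
    """Tanimoto similarity between two fingerprints stored as sets of on-bit indices."""
    union = fp_a | fp_b
    if not union:
        return 0.0  # two empty fingerprints: treat as no shared evidence
    return len(fp_a & fp_b) / len(union)


def rank_by_similarity(reference, library):
    """Return (name, similarity) pairs sorted from most to least similar to the reference."""
    scores = [(name, tanimoto(reference, fp)) for name, fp in library.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Hypothetical on-bit sets; real fingerprints would come from a cheminformatics toolkit
reference_active = {3, 17, 42, 88, 120}
library = {
    "cmpd_A": {3, 17, 42, 90},
    "cmpd_B": {5, 60, 200},
    "cmpd_C": {3, 17, 42, 88, 121},
}

for name, score in rank_by_similarity(reference_active, library):
    print(f"{name}: {score:.2f}")
```

Similarity ranking rests on the assumption that structurally similar compounds tend to have similar activity. It is a common baseline against which learned efficacy models are compared.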

Data Integration Challenges

While the potential for AI-driven efficacy prediction is enormous, several challenges must be addressed:

- Heterogeneity and Quality of Data: Data sourced from different experiments, labs, or clinical settings often vary in quality, format, and resolution. Standardization and normalization are essential to ensure that the integrated data do not introduce biases into the models.

- Volume and Dimensionality: The vast amount of data involved, from high-throughput screening to multi-omics datasets, creates a significant challenge in terms of computational resources and data management. Advanced big data strategies, including distributed computing and cloud-based infrastructures, are required to process such datasets efficiently.

- Handling Missing Data: Often, datasets in drug discovery suffer from missing values, partly due to the inherent variability in experimental conditions. AI models must incorporate mechanisms like data imputation or semi-supervised learning to handle incomplete datasets while maintaining robust predictions.

- Data Integration Across Disciplines: Integrating chemical, biological, pharmacokinetic, and clinical data requires sophisticated algorithms that can reconcile differences in data structure and scale. Multimodal learning techniques, which combine various data types within a unified framework, are increasingly being developed to meet this challenge.

- Interpretability and Explainability: One of the critical challenges faced by AI models, especially deep learning models, is explainability: understanding how the model arrived at a given prediction. This is paramount in drug development, where regulatory bodies and researchers need to trust AI predictions. Explainable AI (XAI) methods address this, for example feature-attribution techniques that estimate how much each input contributed to a prediction. They are an active area of research aimed at making model outputs more interpretable for human experts.
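Two of the preprocessing steps above, handling missing values and normalizing data from different sources, can be sketched as follows. The example assumes a small numeric feature table in which `None` marks a missing measurement. It uses column-mean imputation and z-score standardization, which are simple baselines rather than the methods of any particular pipeline. The function name and data are hypothetical.

```python
from statistics import mean, pstdev

# Minimal sketch: hypothetical helper name and invented assay values.


def impute_and_standardize(rows):
    """Mean-impute missing values (None) per column, then z-score each column."""
    n_cols = len(rows[0])
    columns = [[row[j] for row in rows] for j in range(n_cols)]
    processed = []
    for column in columns:
        observed = [v for v in column if v is not None]
        fill_value = mean(observed)  # raises StatisticsError if a column is entirely missing
        filled = [fill_value if v is None else v for v in column]
        mu, sigma = mean(filled), pstdev(filled)
        # A constant column carries no information after centering, so map it to zeros
        processed.append([(v - mu) / sigma if sigma > 0 else 0.0 for v in filled])
    # Transpose back from columns to rows
    return [list(row) for row in zip(*processed)]


# Hypothetical assay table: [potency readout, binding signal] on very different scales
raw = [
    [1.0, 200.0],
    [2.0, None],
    [3.0, 400.0],
    [None, 300.0],
]
clean = impute_and_standardize(raw)
for row in clean:
    print([round(v, 3) for v in row])
```

After standardization, both columns share a common scale even though the raw values differ by two orders of magnitude. In a real workflow, the means and standard deviations should be computed on the training split only and then reused on test data to avoid information leakage. Mean imputation also shrinks variance, which is why model-based imputation is often preferred.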

Case Studies and Applications

Practical applications and case studies of AI in early drug development have shown promising results. At the same time, they have highlighted limitations that must still be overcome.

Successful Applications of AI in Drug Efficacy Prediction

There are numerous examples where AI has successfully predicted the efficacy profiles of drug candidates:

- High-Throughput Screening Using Standardized Neural Cultures: Patents describe methods where differentiated neural cell cultures from primate species are used in in vitro assays to screen antisense oligonucleotides. These methods rely on dissociation, reseeding, and robust cell culture formats that are amenable to high-throughput screening. AI systems integrated into these screening assays help predict which candidates have the highest efficacy based on detailed cellular responses and screening data.

- Computational Prediction of Pharmacokinetics and Toxicity: AI models have been employed to predict ADMET properties at an early stage. By modeling drug behavior from historical data, these systems forecast not only efficacy but also potential adverse effects. Advanced deep learning approaches have enabled pharmaceutical researchers to prioritize compounds that show a favorable balance of efficacy and safety, reducing the need for extensive animal testing and early-phase clinical experiments.

- Virtual Screening and De Novo Design: Deep learning models that integrate generative adversarial networks (GANs) and reinforcement learning have been used to generate novel molecular structures with predicted efficacy against specific biological targets. For instance, AI models have been used to screen millions of compounds virtually and then propose candidate molecules with optimized binding affinities and predicted in vivo performance. These techniques have advanced de novo drug design and have shown promising results in early-stage drug discovery pipelines.

- Predictive Modeling Using Multi-Omics Data: AI approaches that incorporate genomic, transcriptomic, and proteomic data have been used to predict the sensitivity of cancer cells to specific drug candidates. By building models that interpret cellular response patterns to anti-cancer agents, researchers can forecast which candidates are likely to be efficacious in specific patient subpopulations. This work supports precision oncology, in which efficacy predictions tailored to a tumor's molecular profile inform personalized therapeutic strategies.

- Real-World Data Integration for Early Predictions: Although comprehensive use of clinical data occurs predominantly in later stages, early-stage AI models also use preclinical and pilot clinical trial data to simulate drug efficacy. In addition, public databases with historical efficacy outcomes and compound screening results support the training of these models, helping to ground predictions in observed data.
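As a minimal illustration of the multi-omics case, the sketch below trains a logistic regression classifier with full-batch gradient descent. It separates drug-sensitive from drug-resistant cell lines using two gene-expression features. Everything here is hypothetical: the genes, expression values, labels, and hyperparameters are invented. A linear model is a deliberately simple baseline, not the approach of any specific published study.

```python
import math

# Minimal sketch: hypothetical helper names, invented expression data.


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(X, y, learning_rate=0.5, epochs=3000):
    """Fit weights and bias by full-batch gradient descent on the mean log-loss."""
    n_samples, n_features = len(X), len(X[0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            error = sigmoid(sum(w * x for w, x in zip(weights, xi)) + bias) - yi
            grad_w = [g + error * x for g, x in zip(grad_w, xi)]
            grad_b += error
        weights = [w - learning_rate * g / n_samples for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n_samples
    return weights, bias


def predict_sensitivity(weights, bias, x):
    """Predicted probability that a cell line is sensitive to the drug."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Hypothetical normalized expression of [gene_A, gene_B]; label 1 = sensitive, 0 = resistant
expression = [[0.1, 1.0], [0.3, 0.8], [0.2, 0.9], [0.9, 0.2], [0.8, 0.1], [1.0, 0.3]]
sensitive = [0, 0, 0, 1, 1, 1]

weights, bias = train_logistic_regression(expression, sensitive)
new_cell_line = [0.85, 0.15]
print(round(predict_sensitivity(weights, bias, new_cell_line), 3))
```

Real drug-sensitivity models work with thousands of omics features and far more cell lines. They therefore need regularization and held-out validation that this toy omits.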

Limitations and Challenges

Despite these successes, several limitations persist in using AI to predict drug efficacy during early development:

- Data Quality and Completeness: Incomplete or noisy data remain a significant hurdle. Even though AI models can work with large volumes of data, the heterogeneity and quality of the input often affect prediction accuracy. In cases where data is sparse, such as for novel targets or rare diseases, the predictive performance may suffer.

- Model Interpretability and Trust: One of the recurring challenges in AI drug discovery is understanding and explaining how models arrive at a prediction. Regulatory agencies and clinical researchers require transparent models, but many DL approaches remain “black boxes,” limiting their broader acceptance in decision-making.

- Generalizability Across Different Drug Classes: AI models trained on specific datasets may not generalize well to all chemical classes or therapeutic areas. For example, models effective for predicting efficacy in oncology might not translate directly to antiviral therapies. This calls for continuous retraining and adaptation of models as new data become available.

- Integration of Multi-Modal Data: Successfully integrating chemical, biological, and clinical data into a single predictive framework remains complex. While multi-modal learning frameworks show promise, ensuring that the diverse datasets interact harmoniously without losing critical information is still a work in progress.

- Computational Resource Demands: Many advanced DL techniques require significant computational power, particularly when processing terabytes of data. The performance of these models is directly tied to the infrastructure available, which can be a limiting factor for smaller research institutions.
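One common safeguard for the generalizability problem is an applicability-domain check. Before trusting a prediction, it measures how far the query compound lies from the training data in descriptor space. The sketch below uses the mean Euclidean distance to the k nearest training compounds. The descriptors, `k`, the distance threshold, and the function name are hypothetical and would need to be calibrated on real data.

```python
import math

# Minimal sketch: hypothetical helper name, invented descriptors and threshold.


def in_applicability_domain(query, training_descriptors, k=3, threshold=1.0):
    """Return True if the mean distance to the k nearest training compounds is within threshold."""
    distances = sorted(math.dist(query, x) for x in training_descriptors)
    nearest = distances[:k]
    return sum(nearest) / len(nearest) <= threshold


# Hypothetical standardized descriptors for compounds the model was trained on
training = [(0.0, 0.0), (0.5, 0.2), (0.3, 0.4), (0.1, 0.6), (0.4, -0.1)]

similar_query = (0.2, 0.3)  # resembles the training chemistry
novel_query = (4.0, 5.0)    # e.g. a compound from an unexplored chemical class

print(in_applicability_domain(similar_query, training))  # → True
print(in_applicability_domain(novel_query, training))    # → False
```

An out-of-domain flag does not mean a prediction is wrong, only that it is extrapolated and deserves less trust. This is the situation the oncology-to-antiviral example above describes.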

Future Directions and Innovations

The evolving landscape of AI in early-stage drug development suggests both exciting prospects and areas where further innovation is needed. As AI methodologies continue to mature, several emerging trends and potential advancements promise to further enhance the prediction of drug candidate efficacy.

Emerging Technologies

- Explainable AI (XAI): One of the most critical future directions is the development of explainable AI systems. XAI aims to provide transparency in model decision processes, allowing researchers to understand which features influenced a prediction. This is crucial in drug development, where trust in AI predictions can determine whether a candidate drug advances through the pipeline. As research into XAI matures, regulatory acceptance of AI-driven decisions will likely improve, paving the way for broader integration of these models in preclinical and clinical decision-making.

- Advanced Graph Neural Networks (GNNs): The application of GNNs in predicting drug efficacy, particularly in modeling complex drug–target networks, is gaining traction. GNNs can capture the intrinsic topological and interaction properties of molecular graphs, which can yield more precise predictions of binding affinities and efficacy outcomes. New architectures and training techniques are expected to improve the performance and scalability of these networks, allowing for more comprehensive virtual screening.

- Integration of Real-Time Data Streams: As digital healthcare continues to evolve, AI models will increasingly integrate real-time data from wearable devices, electronic health records, and other digital biomarkers. These continuous data streams can inform models of dynamic drug responses, thereby predicting not only initial efficacy but also the durability of a drug candidate’s therapeutic effects. Such systems could continuously update predictions as new data emerges, transitioning from static models to dynamic, adaptive prediction frameworks.

- Simulation and Virtual Clinical Trials: Enhanced simulation models that combine AI with traditional pharmacokinetic/pharmacodynamic (PK/PD) modeling offer the prospect of virtual clinical trials. These systems can mimic human responses to a drug candidate in silico, allowing researchers to test multiple dosing strategies and predict efficacy outcomes before real-world trials are conducted. This approach can save time and money and reduce risk by identifying potential failures earlier in the development process.
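The PK/PD layer of such in silico trials can be as simple as a one-compartment model with first-order elimination, coupled to an Emax effect model. The concentration is C(t) = sum over doses of (D_i / V) * exp(-(CL / V) * (t - t_i)) for each dose D_i given at time t_i ≤ t. The effect is E(C) = E0 + Emax * C / (EC50 + C). The sketch below uses these textbook equations to compare two dosing regimens. It assumes intravenous bolus dosing and invented parameter values for volume, clearance, EC50, and Emax. It contains no machine learning and stands in only for the mechanistic simulation that AI-based virtual trials would build on.

```python
import math

# Minimal sketch: textbook one-compartment PK + Emax PD with hypothetical parameters.


def concentration(t, doses, volume, clearance):
    """One-compartment IV bolus model: sum of exponentially decaying contributions per dose.

    doses is a list of (time_h, amount_mg); volume in L, clearance in L/h, result in mg/L.
    """
    k_el = clearance / volume  # first-order elimination rate constant (1/h)
    return sum(
        (amount / volume) * math.exp(-k_el * (t - t_dose))
        for t_dose, amount in doses
        if t >= t_dose
    )


def emax_effect(conc, e0, emax, ec50):
    """Emax model: effect rises hyperbolically with concentration and saturates at e0 + emax."""
    return e0 + emax * conc / (ec50 + conc)


# Hypothetical parameters: V = 50 L, CL = 5 L/h (half-life of about 6.9 h)
VOLUME, CLEARANCE = 50.0, 5.0
E0, EMAX, EC50 = 0.0, 100.0, 0.5

regimens = {
    "100 mg once daily": [(0.0, 100.0)],
    "50 mg twice daily": [(0.0, 50.0), (12.0, 50.0)],
}

for name, doses in regimens.items():
    trough = concentration(24.0, doses, VOLUME, CLEARANCE)
    effect = emax_effect(trough, E0, EMAX, EC50)
    print(f"{name}: trough {trough:.3f} mg/L, effect at trough {effect:.1f}")
```

With the same total daily dose, splitting it into two administrations roughly doubles the 24-hour trough in this model. That is the kind of regimen question a virtual trial can explore before a clinical design is fixed.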

Potential Advancements in AI for Drug Development

- Automated Data Curation and Standardization: One of the current challenges is the heterogeneity of datasets. Future advancements are likely to focus on automated systems for data cleaning, normalization, and integration that seamlessly combine data from diverse sources. Such solutions will ensure that AI models are trained on high-quality, standardized datasets, thereby enhancing their predictive accuracy.

- Hybrid Models Combining ML and Mechanistic Simulations: Integrating traditional mechanistic models of drug behavior with machine learning approaches can yield hybrid models that combine the strengths of both. Such models will use mechanistic simulations to anchor predictions in biological reality. These may be physics-based, such as molecular dynamics, or physiology-based, such as PK/PD models. AI then captures complex patterns that are difficult to model mechanistically alone. This combination is expected to improve the overall performance of drug efficacy predictions and streamline drug development pipelines.

- Personalized Medicine and Precision Oncology: With the increasing availability of genomics and proteomics data, AI systems will be tailored to predict drug efficacy on a patient-specific basis. This personalized approach will harness multi-omics data to identify biomarkers that correlate with drug response, thereby enabling more targeted therapies. As the field of precision oncology expands, AI models will play a crucial role in selecting optimal therapeutic regimens for individual patients based on their unique molecular profiles.

- Cloud and High-Performance Computing Integration: The computational intensity of deep learning models necessitates robust computing infrastructure. Future integration with cloud-based platforms and high-performance computing clusters will facilitate the processing of massive datasets, accelerating model training and inference. This will democratize access to advanced AI tools in drug development, enabling smaller institutions to participate in cutting-edge research.

- Regulatory and Collaborative Frameworks: To integrate AI predictions into the decision-making process for drug efficacy, regulatory agencies will need frameworks that assess and validate AI methodologies. Collaborative efforts between academic institutions, biotech companies, and regulatory bodies are expected to establish standardized protocols for AI in drug discovery, ensuring that the predictions are reproducible, transparent, and scientifically sound.
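The hybrid-model idea from the list above can be sketched as residual learning. A mechanistic model supplies the baseline prediction, and a data-driven component learns only the systematic error the mechanistic model misses. In this minimal sketch, the mechanistic part is a hypothetical Emax concentration-effect model. The "ML" part is ordinary least-squares regression on a single hypothetical biomarker covariate, and all data are invented. In practice the correction model would usually be a more flexible learner.

```python
from statistics import mean

# Minimal sketch: hypothetical mechanistic model, biomarker, and observations.


def mechanistic_effect(conc, emax=100.0, ec50=2.0):
    """Hypothetical mechanistic prior: Emax concentration-effect relationship (E0 = 0)."""
    return emax * conc / (ec50 + conc)


def fit_hybrid(concs, biomarker, observed):
    """Fit a least-squares linear correction to the mechanistic model's residuals."""
    residuals = [y - mechanistic_effect(c) for c, y in zip(concs, observed)]
    mx, mr = mean(biomarker), mean(residuals)
    slope = sum((x - mx) * (r - mr) for x, r in zip(biomarker, residuals)) / sum(
        (x - mx) ** 2 for x in biomarker
    )
    intercept = mr - slope * mx

    def predict(conc, marker):
        # Mechanistic baseline plus the learned data-driven correction
        return mechanistic_effect(conc) + slope * marker + intercept

    return predict


# Invented observations: drug concentration (mg/L), biomarker level, observed effect
concs = [0.5, 1.0, 2.0, 4.0, 8.0]
biomarker = [0.2, 0.8, 0.5, 0.9, 0.1]
observed = [19.3, 38.9, 51.8, 75.5, 78.2]

hybrid = fit_hybrid(concs, biomarker, observed)
sse_mech = sum((y - mechanistic_effect(c)) ** 2 for c, y in zip(concs, observed))
sse_hybrid = sum((y - hybrid(c, m)) ** 2 for c, m, y in zip(concs, biomarker, observed))
print(f"SSE mechanistic only: {sse_mech:.1f}, hybrid: {sse_hybrid:.1f}")
```

Because the learned component only corrects the mechanistic baseline, the hybrid keeps the model's biologically plausible shape where data are sparse. This is the main motivation for the design.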

Conclusion

In conclusion, AI has already made significant inroads into predicting the efficacy of drug candidates during the early stages of development. The integration of machine learning and deep learning techniques enables the modeling of complex molecular interactions and biological systems with high precision, reducing the time and cost associated with traditional experimental methods. AI leverages a diverse array of data—from chemical structures and multi-omics profiles to real-world clinical data—to forecast which compounds are most likely to be efficacious, thereby streamlining the drug discovery pipeline.

From the perspective of machine learning, traditional models such as support vector machines, random forests, and ensemble methods have proven effective in QSAR and related analyses by learning the relationships between molecular properties and therapeutic outcomes. Deep learning approaches further advance these capabilities through sophisticated neural network architectures that capture non-linear interactions and complex spatial relationships in molecular data.

Data integration remains a critical challenge, with the need to reconcile heterogeneous data sources and ensure data quality for robust model training. Disparate information ranging from chemical descriptors to high-dimensional genomic profiles must be normalized and combined to fuel AI models that can provide accurate, clinically relevant predictions. Addressing issues such as missing data, variability in experimental conditions, and multi-modal data fusion is essential for the continued success of these systems.

There are already many illustrative case studies demonstrating successful applications of AI in early-stage efficacy prediction—from virtual screening and de novo compound design to in vitro cellular assays using standardized systems. However, challenges such as interpretability, generalizability, and computational resource demands remain. Future directions are anticipated to include advancements in explainable AI, the integration of real-time data streams, the development of hybrid models that combine mechanistic and AI-based predictions, and the establishment of standardized regulatory frameworks.

Overall, the future of AI in drug development is extremely promising. With emerging technologies and innovative data integration methods, AI will continue to enhance the predictive accuracy of drug candidate efficacy, paving the way for more effective and personalized therapies. The continued collaboration between AI experts, biopharmaceutical researchers, and regulatory bodies is essential to fully realize the potential of these technologies, ultimately accelerating the transition from bench to bedside and improving patient outcomes through more informed drug development decisions.

By addressing current limitations and continually advancing computational methods, AI is poised to transform early-stage drug development into a more predictive, cost-effective, and efficient process—a transformation that holds great promise for the future of healthcare and personalized medicine.

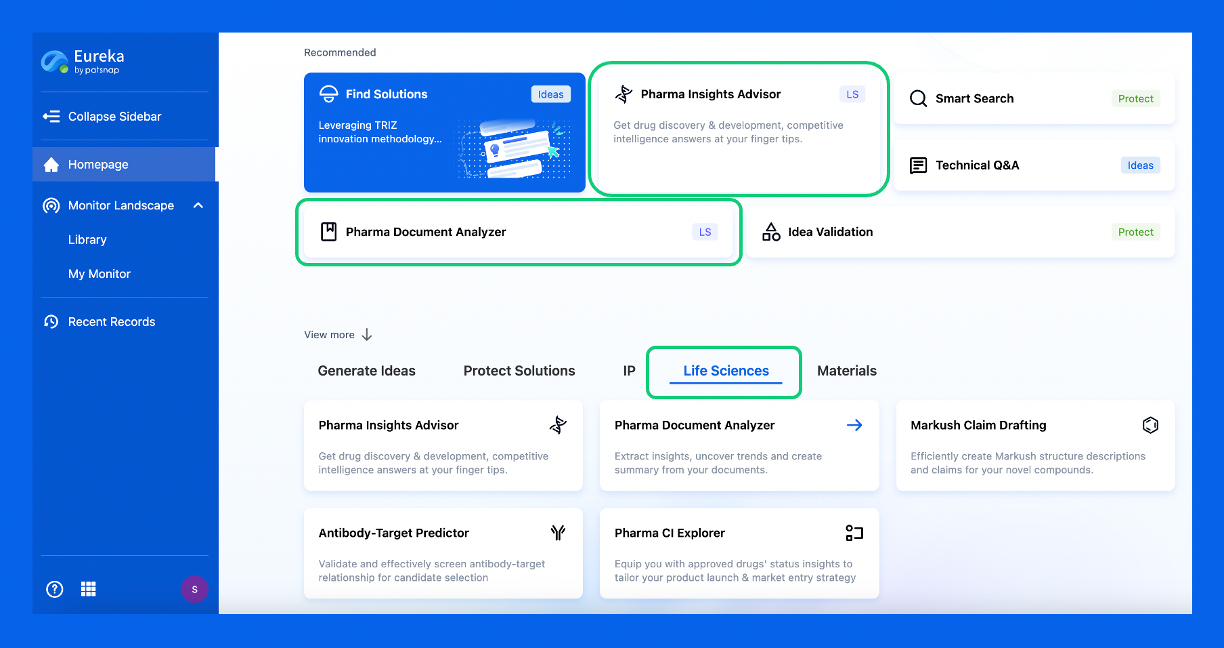

Discover Eureka LS: AI Agents Built for Biopharma Efficiency

Stop wasting time on biopharma busywork. Meet Eureka LS - your AI agent squad for drug discovery.

▶ See how 50+ research teams saved 300+ hours/month

From reducing screening time to simplifying Markush drafting, our AI Agents are ready to deliver immediate value. Explore Eureka LS today and unlock powerful capabilities that help you innovate with confidence.

AI Agents Built for Biopharma Breakthroughs

Accelerate discovery. Empower decisions. Transform outcomes.

Get started for free today!

Accelerate Strategic R&D decision making with Synapse, PatSnap’s AI-powered Connected Innovation Intelligence Platform Built for Life Sciences Professionals.

Start your data trial now!

Synapse data is also accessible to external entities via APIs or data packages. Empower better decisions with the latest in pharmaceutical intelligence.