How can computer science be helpful in drug discovery and development?

21 March 2025

Introduction to Drug Discovery and Development

Drug discovery and development is an inherently complex, interdisciplinary process that spans from the identification of novel therapeutic targets to the eventual market approval of a drug. Over the past few decades, the field has witnessed a tremendous shift—transitioning from traditional trial‐and‐error laboratory methods toward more integrated approaches where computer science plays an increasingly crucial role in optimizing every stage of the process. In this section, we provide an overview of the traditional drug discovery process and discuss its intrinsic challenges.

Overview of Drug Discovery Process

The traditional process of drug discovery is a multi‐step, high‐cost, and lengthy endeavor. It starts with target identification and validation, followed by hit identification, lead optimization, preclinical evaluation, and multiple phases of clinical trials before a drug can reach the market. Initially, researchers use biochemical and cellular assays to identify hits: compounds that interact with a particular biological target, typically a protein involved in disease pathology. The most promising hits are then developed into lead compounds, which undergo extensive structural refinement to improve their efficacy and pharmacokinetic properties, alongside safety and toxicity testing.

Historically, the synthesis and screening of enormous libraries of chemical compounds drove early drug discovery. However, chemical space is vast (it potentially includes over 10^50 small molecules) and only a tiny fraction of it has ever been explored, so it became clear that random screening was inefficient. Over time, structure-based drug design (SBDD) emerged as the three-dimensional structures of target proteins became more readily available via X-ray crystallography and nuclear magnetic resonance (NMR) spectroscopy. This allowed researchers to predict and model the interaction between targets and candidate molecules at the atomic level, significantly improving the hit rate for promising candidates and streamlining subsequent optimization.

The modern drug discovery process increasingly integrates computational methods with traditional experimental procedures. High-throughput screening (HTS) platforms, automated synthesis, and in silico models now work in tandem: virtual libraries can be filtered, scored, and prioritized computationally before the most promising candidates enter the costly phases of laboratory synthesis and experimental validation. These computational techniques can estimate binding affinity, predict potential off-target effects, and assess absorption, distribution, metabolism, excretion, and toxicity (ADMET) properties, with the aim of shortening development timelines and reducing failure rates.
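To make the filtering step concrete, the sketch below applies Lipinski's rule of five, a long-standing heuristic for oral drug-likeness, to descriptors that are assumed to be precomputed. The `Compound` record, the descriptor values, and the default of tolerating one violation (following Lipinski's observation that poor absorption becomes more likely when more than one rule is broken) are illustrative assumptions; a real pipeline would compute descriptors with a cheminformatics toolkit and combine several filters and scoring models.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Compound:
    """Hypothetical record of precomputed molecular descriptors."""
    name: str
    mol_weight: float  # molecular weight in daltons
    logp: float        # octanol-water partition coefficient (log10 scale)
    h_donors: int      # number of hydrogen-bond donors
    h_acceptors: int   # number of hydrogen-bond acceptors


def rule_of_five_violations(c: Compound) -> int:
    """Count how many of Lipinski's four thresholds the compound exceeds."""
    return sum([
        c.mol_weight > 500,
        c.logp > 5,
        c.h_donors > 5,
        c.h_acceptors > 10,
    ])


def filter_library(library: list[Compound], max_violations: int = 1) -> list[Compound]:
    """Keep only compounds with at most `max_violations` rule-of-five violations."""
    return [c for c in library if rule_of_five_violations(c) <= max_violations]


if __name__ == "__main__":
    # Descriptor values below are made up for illustration.
    library = [
        Compound("cmpd_A", 342.4, 2.1, 2, 5),  # no violations
        Compound("cmpd_B", 612.7, 6.3, 4, 9),  # violates weight and logP
        Compound("cmpd_C", 498.0, 5.4, 6, 8),  # violates logP and donors
        Compound("cmpd_D", 530.2, 3.8, 3, 7),  # violates weight only
    ]
    print([c.name for c in filter_library(library)])  # ['cmpd_A', 'cmpd_D']
```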

Challenges in Traditional Drug Discovery

Despite the advancements in molecular biology and medicinal chemistry, traditional methods of drug discovery face several significant challenges. One of the foremost issues is the high rate of attrition; even after considerable investment, only a small fraction of compounds that enter clinical trials become approved drugs, often due to unforeseen toxicity, inadequate efficacy, or poor pharmacokinetics. Such failures not only represent financial losses of billions of dollars but also delay the availability of life-saving treatments.

Another challenge is the inherently time-consuming and labor-intensive nature of conventional screening methods. Classical techniques rely heavily on repeated chemical syntheses, in vitro assays, and animal testing, and the full path from concept to market can take over a decade. Furthermore, traditional experimental approaches provide limited insight into complex molecular interactions and dynamic processes such as conformational changes, binding kinetics, and allosteric effects, parameters that are critically important for understanding a drug's mechanism of action.

The sheer vastness of chemical space also poses a problem: without computational guidance, researchers can explore only a minuscule fraction of potentially active compounds. This limited coverage of chemical diversity is compounded by the difficulty of predicting off-target effects and polypharmacology, in which a drug interacts with multiple proteins and may cause unintended side effects. Finally, the regulatory landscape adds another layer of complexity. Meeting stringent safety and efficacy standards often requires extensive, multi-phase clinical trials, further lengthening the development cycle and contributing to high overall costs.

Computer Science Techniques in Drug Discovery

Computer science has introduced an arsenal of powerful techniques that are reshaping drug discovery and development. These methods address many of the challenges inherent to traditional processes and bring new levels of efficiency and precision. By bridging the gap between raw biological data and actionable insights, computer science contributes to every phase of drug development.

Bioinformatics and Computational Biology

Bioinformatics and computational biology play a central role in modern drug discovery by providing tools for sequence analysis, structural prediction, and pathway modeling. These fields enable researchers to collect, analyze, and integrate large-scale biological data, from genomic sequences to protein structures, making the identification of novel drug targets faster and more systematic.

For example, bioinformatics supports the construction of extensive databases of protein–ligand interactions, which in turn enable virtual docking experiments and the identification of candidate binding sites on target proteins. Complementary techniques include molecular dynamics (MD) simulation and quantitative structure-activity relationship (QSAR) analysis, which relates measurable molecular descriptors to biological activity. MD simulations model the dynamic behavior of biomolecules, offering insights into binding affinities, kinetics, and thermodynamic properties, all of which are critical for rational drug design. These computational models are continually refined by incorporating experimental data from techniques such as X-ray crystallography and NMR, so that predicted interactions more closely reflect real biological behavior.
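As a minimal illustration of the QSAR idea, the following sketch fits a single-descriptor linear model by ordinary least squares, predicting activity (pIC50) from lipophilicity (logP). The descriptor choice, the training values, and the function names are hypothetical; practical QSAR models typically use many descriptors, regularization, and cross-validation.

```python
def fit_linear_qsar(x: list[float], y: list[float]) -> tuple[float, float]:
    """Fit activity = slope * descriptor + intercept by ordinary least squares."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired observations")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("descriptor values must not all be identical")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(x: list[float], y: list[float], slope: float, intercept: float) -> float:
    """Coefficient of determination of the fitted line on the given data."""
    mean_y = sum(y) / len(y)
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    if ss_tot == 0:
        raise ValueError("activity values must not all be identical")
    ss_res = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Hypothetical training set: logP values and measured pIC50 activities.
    logp = [1.0, 2.0, 3.0, 4.0]
    pic50 = [5.1, 5.9, 7.1, 7.9]
    slope, intercept = fit_linear_qsar(logp, pic50)
    print(f"pIC50 = {slope:.2f} * logP + {intercept:.2f}")  # pIC50 = 0.96 * logP + 4.10
    print(f"R^2 = {r_squared(logp, pic50, slope, intercept):.3f}")
```

A fitted model of this kind can then score untested compounds from their descriptors alone, which is how QSAR supports prioritization before synthesis.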

In addition, bioinformatics facilitates the detailed study of biological networks and pathways, which is essential for understanding diseases at a systems level. Such network-based methods help in identifying key nodes (e.g., proteins, genes) whose perturbation can lead to therapeutic effects. The integration of diverse omics data—genomics, transcriptomics, proteomics, and metabolomics—into computational platforms provides a holistic picture of disease mechanisms, allowing for the prioritization of molecular targets that might have been overlooked in traditional approaches. These systems-level insights have proven particularly valuable in complex diseases like cancer and neurodegenerative disorders.
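One of the simplest network-based ways to highlight key nodes is degree centrality: ranking proteins by how many interaction partners they have. The sketch below assumes an undirected protein-protein interaction network given as an edge list with placeholder protein names; degree is only one of several centrality measures, and a highly connected protein is not automatically a good drug target.

```python
from collections import defaultdict


def degree_centrality(edges: list[tuple[str, str]]) -> dict[str, float]:
    """Normalized degree centrality (degree / (n - 1)) for an undirected network."""
    neighbors: dict[str, set[str]] = defaultdict(set)
    for a, b in edges:
        if a == b:
            continue  # self-interactions do not count toward degree here
        neighbors[a].add(b)
        neighbors[b].add(a)
    n = len(neighbors)
    if n < 2:
        return {node: 0.0 for node in neighbors}
    return {node: len(nbrs) / (n - 1) for node, nbrs in neighbors.items()}


def rank_candidate_targets(edges: list[tuple[str, str]], top_k: int = 3) -> list[str]:
    """Return the top_k most connected nodes, breaking ties alphabetically."""
    scores = degree_centrality(edges)
    return sorted(scores, key=lambda node: (-scores[node], node))[:top_k]


if __name__ == "__main__":
    # Hypothetical protein-protein interactions; P1..P5 are placeholder names.
    ppi = [("P1", "P2"), ("P1", "P3"), ("P1", "P4"), ("P2", "P3"), ("P4", "P5")]
    print(rank_candidate_targets(ppi))  # ['P1', 'P2', 'P3']
```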

Machine Learning and Artificial Intelligence

Machine learning (ML) and artificial intelligence (AI) are perhaps the most transformative technologies currently redefining the drug discovery landscape. With machine learning, researchers can design predictive models that learn from vast datasets of chemical structures and biological activities to identify patterns and make predictions about the efficacy, toxicity, and pharmacokinetics of potential drug candidates. These technologies are adept at handling high-dimensional data, enabling the rapid screening of millions of molecules against multiple targets simultaneously.

Deep learning, a subset of machine learning, has shown particular promise in de novo drug design and virtual screening. Convolutional neural networks (CNNs) and recurrent neural networks (RNNs) can capture complex, nonlinear relationships in data and, in many reported benchmarks, outperform traditional machine learning models. Such models are used to predict drug-target interactions, optimize ligand binding affinities, and even generate novel chemical entities with desired properties in previously unexplored regions of chemical space. For instance, an AI-driven workflow might first screen a vast chemical library virtually and then synthesize only a small fraction of the candidates for experimental validation, substantially reducing time and cost.
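The deep learning models described above are often benchmarked against simpler ligand-based virtual screening, in which library compounds are ranked by fingerprint similarity to a known active. The sketch below shows that baseline (not a deep learning model) using the Tanimoto coefficient on fingerprints represented as sets of "on" bit indices. The bit sets, compound names, and the 0.5 threshold are hypothetical; real fingerprints (for example, circular Morgan/ECFP fingerprints) would come from a cheminformatics toolkit.

```python
def tanimoto(a: frozenset[int], b: frozenset[int]) -> float:
    """Tanimoto coefficient: shared bits divided by bits set in either fingerprint."""
    if not a and not b:
        return 0.0  # convention chosen here for two empty fingerprints
    shared = len(a & b)
    return shared / (len(a) + len(b) - shared)


def similarity_screen(query: frozenset[int], library: dict[str, frozenset[int]],
                      threshold: float = 0.5) -> list[tuple[str, float]]:
    """Return (name, similarity) pairs at or above threshold, most similar first."""
    hits = [(name, tanimoto(query, fp)) for name, fp in library.items()]
    hits = [hit for hit in hits if hit[1] >= threshold]
    return sorted(hits, key=lambda hit: (-hit[1], hit[0]))


if __name__ == "__main__":
    # Hypothetical fingerprint bits for a known active and a tiny library.
    known_active = frozenset({1, 4, 7, 9, 12})
    library = {
        "cmpd_1": frozenset({1, 4, 7, 9, 15}),
        "cmpd_2": frozenset({2, 3, 5}),
        "cmpd_3": frozenset({1, 4, 7, 9, 12, 20}),
    }
    print([name for name, _ in similarity_screen(known_active, library)])  # ['cmpd_3', 'cmpd_1']
```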

Moreover, ML approaches have been applied to predict adverse drug reactions by modeling the polypharmacological behavior of drugs with supervised learning techniques. By learning from historical data on drug side effects, these models can flag potentially harmful compounds early in development, enhancing patient safety and reducing the risks associated with clinical trials. Such predictive capabilities have also been extended to personalized medicine, where models correlate individual patients' genetic profiles with drug response to help guide treatment choices.
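A minimal sketch of the supervised approach, assuming each compound is described by hypothetical binary features (whether it binds two off-target proteins, here called A and B) and labeled by whether an adverse reaction was reported. Logistic regression trained by gradient descent stands in for the more sophisticated models used in practice; the data and names are illustrative only.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logistic(X: list[list[float]], y: list[int],
                   lr: float = 0.5, epochs: int = 2000) -> tuple[list[float], float]:
    """Fit logistic-regression weights and bias by full-batch gradient descent."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log loss: (predicted probability - label) * input.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(w: list[float], b: float, x: list[float]) -> float:
    """Predicted probability that a compound with features x causes the adverse event."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


if __name__ == "__main__":
    # Hypothetical features: [binds off-target A, binds off-target B];
    # label 1 = adverse reaction reported in (made-up) historical data.
    X = [[1, 0], [1, 1], [0, 1], [0, 0], [1, 1], [0, 0]]
    y = [1, 1, 0, 0, 1, 0]
    w, b = train_logistic(X, y)
    print(round(predict_proba(w, b, [1, 0]), 3), round(predict_proba(w, b, [0, 0]), 3))
```

Compounds whose predicted probability exceeds a chosen cutoff would be flagged for closer experimental safety assessment rather than rejected outright.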

The integration of AI with other computational methods has further enhanced the drug discovery process. For example, coupling molecular docking with machine learning has been reported to improve the accuracy and reliability of binding affinity predictions. In parallel, AI-based generative models can design entirely new molecular scaffolds, pushing the boundaries of traditional medicinal chemistry. This combination of generative design and rigorous screening is widely regarded as a key driver of recent progress in computational drug discovery.

Data Mining and Big Data Analytics

The advent of big data in drug discovery has transformed the way researchers approach both target identification and candidate screening. Modern drug discovery relies on vast databases that contain chemical structures, biological assay data, patient genomic information, and clinical outcomes. Data mining and big data analytics provide the tools to extract patterns, correlations, and actionable insights from these heterogeneous datasets, which would be impossible to decipher manually.

Advanced data mining techniques enable the integration of diverse data types from multiple sources such as public databases (e.g., PubChem, ChEMBL) and proprietary datasets. These integrated approaches facilitate the rapid identification of molecular targets, prediction of drug efficacy, and discovery of potential off-target interactions that could lead to adverse effects. Moreover, machine learning algorithms operating on these large databases can discover novel drug-disease associations by analyzing network-centric data—thereby expanding our understanding of the interconnections between biological systems and chemical entities.
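A simple form of network-based association mining is "guilt by association": a drug is proposed for a disease when its known targets overlap with genes linked to that disease. The sketch below scores each drug-disease pair by the Jaccard overlap of the two gene sets. All drug, disease, and gene names are placeholders, and real pipelines would draw these mappings from curated databases and weigh the evidence far more carefully.

```python
def association_scores(drug_targets: dict[str, set[str]],
                       disease_genes: dict[str, set[str]]) -> list[tuple[str, str, float]]:
    """Score every drug-disease pair by Jaccard overlap of targets and disease genes."""
    scores = []
    for drug, targets in drug_targets.items():
        for disease, genes in disease_genes.items():
            union = targets | genes
            if not union:
                continue
            overlap = len(targets & genes) / len(union)
            if overlap > 0:
                scores.append((drug, disease, overlap))
    # Highest-scoring candidate associations first; ties broken by name.
    return sorted(scores, key=lambda s: (-s[2], s[0], s[1]))


if __name__ == "__main__":
    # Hypothetical drug-target and disease-gene mappings.
    drugs = {"drug_X": {"GENE1", "GENE2", "GENE3"}, "drug_Y": {"GENE4"}}
    diseases = {"disease_1": {"GENE2", "GENE3", "GENE5"},
                "disease_2": {"GENE4", "GENE6", "GENE7"}}
    for drug, disease, score in association_scores(drugs, diseases):
        print(drug, disease, round(score, 3))
```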

Further, big data analytics support the use of predictive models in clinical trial design. By analyzing historical trial data, researchers can simulate trial outcomes, optimize patient recruitment strategies, and even predict which patient subgroups will likely benefit from a new therapy. This predictive power is invaluable in reducing the time and cost associated with clinical testing by enabling more informed decision-making before actual trials commence. In addition, structured data platforms and curated databases facilitate reproducible research practices—ensuring that findings can be validated and built on by the broader scientific community.
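To illustrate simulating trial outcomes, the sketch below estimates the statistical power of a two-arm trial by Monte Carlo simulation, assuming response rates that would in practice be estimated from historical trial data (the rates here are hypothetical). Each simulated trial is analyzed with a two-sided, two-proportion z-test at roughly the 5% significance level; function names and defaults are illustrative assumptions.

```python
import math
import random


def two_proportion_z(successes_a: int, n_a: int, successes_b: int, n_b: int) -> float:
    """z statistic for the difference between two response proportions (pooled SE)."""
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0  # every patient responded, or none did: no detectable difference
    return (successes_b / n_b - successes_a / n_a) / se


def simulated_power(p_control: float, p_treatment: float, n_per_arm: int,
                    n_sims: int = 2000, z_crit: float = 1.96, seed: int = 0) -> float:
    """Fraction of simulated trials in which the z-test detects a difference."""
    rng = random.Random(seed)  # fixed seed for reproducible estimates
    detections = 0
    for _ in range(n_sims):
        control = sum(rng.random() < p_control for _ in range(n_per_arm))
        treated = sum(rng.random() < p_treatment for _ in range(n_per_arm))
        if abs(two_proportion_z(control, n_per_arm, treated, n_per_arm)) > z_crit:
            detections += 1
    return detections / n_sims


if __name__ == "__main__":
    # Hypothetical response rates: 30% on standard care, 45% on the new therapy.
    for n in (50, 100, 200):
        print(n, simulated_power(0.30, 0.45, n))
```

Running this over a range of `n_per_arm` values gives a rough sense of how many patients a trial might need before any are enrolled.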

Impact on Drug Development

The integration of computer science techniques in drug discovery and development has led to significant advancements in efficiency, cost reduction, and overall process optimization. By addressing the key limitations of traditional experimental methods, computational technologies have not only accelerated the pace of research but also improved the precision and reliability of predictions regarding drug efficacy and safety.

Efficiency and Cost Reduction

One of the most notable impacts of computer science in drug development is its potential to substantially reduce time and costs. Traditional drug discovery processes are characterized by high attrition rates and the need for extensive empirical testing, which can take over a decade and require billions of dollars of investment. Computational methods streamline this process by enabling in silico screening that filters out unpromising compounds early in the pipeline. For example, in high-throughput virtual screening, researchers can computationally dock millions of molecules to a target protein and identify the most promising candidates before any wet-lab synthesis takes place. This approach reduces the need for costly and time-consuming laboratory experiments.
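Virtual screening campaigns are often evaluated retrospectively with the enrichment factor: the fraction of known actives among the top-ranked compounds divided by the fraction of actives in the whole library. The sketch below computes it from docking scores, assuming that lower (more negative) scores indicate better predicted binding; the scores and activity labels are made up. An enrichment factor near 1 means the ranking does no better than random selection.

```python
def enrichment_factor(scores: list[float], is_active: list[bool],
                      fraction: float = 0.01, lower_is_better: bool = True) -> float:
    """Enrichment of known actives among the top-ranked `fraction` of a library."""
    if not scores or len(scores) != len(is_active):
        raise ValueError("scores and labels must be non-empty and equal in length")
    total_actives = sum(is_active)
    if total_actives == 0:
        raise ValueError("benchmark set contains no known actives")
    n_top = max(1, round(fraction * len(scores)))
    # Docking scores are often energies, where more negative means better binding.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=not lower_is_better)
    actives_in_top = sum(is_active[i] for i in order[:n_top])
    return (actives_in_top / n_top) / (total_actives / len(scores))


if __name__ == "__main__":
    # Hypothetical docking scores (kcal/mol) for 10 compounds, 2 of them known actives.
    scores = [-9.1, -8.7, -6.0, -5.5, -5.1, -4.9, -4.0, -3.8, -3.2, -2.0]
    actives = [True, True] + [False] * 8
    print(enrichment_factor(scores, actives, fraction=0.2))  # 5.0
```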

Moreover, AI-driven predictive models further refine this process. Machine learning algorithms trained on large datasets can estimate binding affinities, predict pharmacokinetic properties, and flag potential toxicity issues, allowing pharmaceutical companies to focus resources on a smaller subset of the most promising drug candidates. The intended overall effect is lower research costs and a shorter time-to-market for new drugs, a critical advantage in a competitive industry where one widely cited estimate puts the average cost of developing a new approved drug, including the cost of failures, at about $2.6 billion.

Computational methods also offer the benefit of scalability. The increased availability of supercomputing and cloud-based resources means that large-scale simulations and deep learning models are accessible to both academic researchers and industrial laboratories. This democratization of computational power allows a broader range of scientists to contribute to drug discovery, further accelerating innovation. In addition, computer-aided drug design has contributed to drugs that have reached clinical approval, demonstrating its practical value in improving efficiency.

Case Studies and Success Stories

Several case studies highlight the profound impact of computer science on drug development. For example, the development of crizotinib, an inhibitor of the c-Met and ALK kinases, illustrates the successful integration of structure-based design with computational chemistry. By leveraging detailed co-crystal structure analyses and molecular docking simulations, researchers were able to design and optimize crizotinib's binding interactions, resulting in a potent drug with improved pharmacokinetic properties that gained FDA approval in 2011.

Similarly, advances in deep learning have accelerated the discovery of novel compounds with desirable properties. Companies have reported using AI-assisted platforms to propose new chemical structures that were later validated experimentally, reducing the experimental workload and associated costs. In another example, a hybrid computational system combining classical and quantum computing methods has been proposed to refine drug discovery further by more accurately predicting binding thermodynamics and kinetics. These examples suggest that computational approaches can streamline early-stage discovery and may also improve success rates in later clinical phases by reducing unforeseen side effects and helping to optimize drug formulations.

Furthermore, big data analytics have played a significant role in several success stories by enabling the integration of clinical, genomic, and chemical data to predict drug response in patient populations. For instance, AI-powered systems have been used to analyze electronic health records (EHRs) and multi-omics data to tailor personalized treatment regimens, with the aim of giving patients drugs that are effective and have minimal side effects. In these ways, computer science has contributed to both the economic and clinical success of drug development initiatives.

Ethical and Regulatory Considerations

While computer science techniques in drug discovery promise substantial benefits, they also introduce unique ethical and regulatory challenges. Addressing these considerations is essential to ensure that the advancements in computational methods translate into safe, effective, and ethically sound medical treatments.

Ethical Implications

The integration of advanced computational methods such as AI and machine learning in drug discovery raises important ethical questions related to data privacy, bias, and accountability. The reliance on vast amounts of personal and genomic data necessitates strict safeguards to protect patient privacy. Moreover, data-driven decision-making can inadvertently perpetuate biases present in the datasets if they are not carefully curated and balanced. For example, if a machine learning model is trained primarily on data from a specific population, its predictions may not be generalizable to other demographic groups, thus leading to inequitable healthcare outcomes.
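A basic safeguard against the generalization problem described above is to report model performance separately for each population subgroup instead of as a single overall figure. The sketch below computes per-group accuracy and the largest gap between groups; the group labels, predictions, and outcomes are hypothetical, and real audits would use richer metrics than accuracy.

```python
from collections import defaultdict


def accuracy_by_group(y_true: list[int], y_pred: list[int], groups: list[str]) -> dict[str, float]:
    """Accuracy computed separately for each subgroup label."""
    if not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("inputs must have equal length")
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(per_group: dict[str, float]) -> float:
    """Largest difference in accuracy between any two subgroups."""
    return max(per_group.values()) - min(per_group.values())


if __name__ == "__main__":
    # Hypothetical outcomes and predictions for two demographic groups, A and B.
    y_true = [1, 0, 1, 1, 0, 1, 0, 0]
    y_pred = [1, 0, 1, 1, 1, 0, 1, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    per_group = accuracy_by_group(y_true, y_pred, groups)
    print(per_group, max_accuracy_gap(per_group))  # {'A': 1.0, 'B': 0.25} 0.75
```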

Furthermore, there is an ethical imperative to maintain transparency in the methodologies used. The “black-box” nature of many deep learning algorithms can make it difficult for clinicians and patients to understand how certain decisions are reached. This lack of transparency can undermine trust and accountability, especially when critical decisions, such as predicting adverse drug reactions or determining patient eligibility for a clinical trial, are based solely on computational predictions. It is therefore crucial to develop explainable AI frameworks that offer insight into how these models reach their decisions, so that ethical standards are upheld throughout the drug discovery and development pipeline.

Ethical issues also arise in the context of intellectual property and data sharing. As computational models and databases become increasingly central to drug discovery, questions about data ownership, sharing policies, and the proprietary nature of AI algorithms become prominent. Balancing open science ideals with commercial interests is a complex challenge that requires clear guidelines and policies to ensure that the benefits of computational advances are equitably distributed.

Regulatory Challenges

The accelerating pace of computational drug discovery has, in many ways, outpaced the regulatory frameworks that govern drug approval and clinical trials. Regulatory bodies such as the FDA have traditionally relied on extensive experimental data to evaluate new drugs, and the integration of in silico data poses new challenges in terms of validation and standardization. For instance, when a drug candidate is primarily developed through computational predictions, regulators must assess how these in silico findings correlate with in vitro and in vivo results. This creates a need for rigorous validation protocols and guidelines to ensure that computational models are accurate, reproducible, and reliable.

Moreover, the implementation of AI and machine learning in drug development requires that models are continuously updated and refined as new data become available. Regulatory agencies must therefore develop frameworks that allow for adaptive approvals, where computational models can be updated post-market while still maintaining safety and efficacy standards. The dynamic nature of AI-driven systems also raises concerns about quality control, software reliability, and cybersecurity, all of which must be addressed before regulators can fully endorse these methodologies.

Another important aspect is the reproducibility of computational research. As highlighted in recent studies, the reproducibility crisis in science is not limited to experimental data—it also affects computational models. Establishing standardized benchmarking, transparent reporting guidelines, and open-access repositories for code and data are crucial steps to foster confidence in computational approaches among regulators and the broader scientific community. In summary, while computer science offers transformative potential for drug development, robust ethical and regulatory frameworks must be established to ensure safe, fair, and accountable translation of these methods into clinical practice.

Future Directions and Challenges

Looking forward, computer science is poised to continue its transformative influence on drug discovery and development. However, several emerging technologies and current limitations must be addressed to fully realize the potential of computational methods in this field.

Emerging Technologies

The development of hybrid computational systems that integrate classical and quantum computing represents one of the most exciting frontiers in drug discovery. Quantum computers could, in principle, simulate certain quantum-mechanical aspects of molecular interactions more efficiently than classical computers, which may eventually help overcome the limitations of conventional simulation methods in accurately predicting binding energies and conformational dynamics. Combining quantum computing with traditional molecular dynamics and AI-driven prediction models could lead to a new era of precision drug design, in which the entire process, from molecule generation to clinical outcome prediction, is optimized and streamlined.

Furthermore, advancements in robotics and automation are poised to work in synergy with computational methods. For example, automated synthesis and high-throughput screening platforms, when coupled with real-time data analytics and machine learning algorithms, could revolutionize the early stages of drug discovery. This integration would allow for nearly autonomous drug discovery pipelines that not only generate and test candidate compounds at a rapid pace but also adapt based on continuous feedback from computational models.

Virtual reality (VR) and augmented reality (AR) technologies also present novel opportunities for drug discovery. These immersive technologies can provide researchers with three-dimensional visualizations of molecular interactions, enabling real-time manipulation and analysis of complex biomolecular structures. Such tools have the potential to make structure-based drug design more intuitive and comprehensible—even for those who are not experts in computational modeling.

Another emerging technology is the use of blockchain for secure data sharing and verification. Given the ethical and regulatory concerns surrounding patient data and proprietary research, blockchain may offer a solution for ensuring data integrity, transparency, and secure collaboration across the global research community. This technology could foster an environment of open innovation while still protecting sensitive information and intellectual property rights.
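The tamper evidence that blockchains provide rests on a hash chain, in which each record stores the hash of the record before it. The simplified sketch below (a single-party hash-chained log with no distribution or consensus, so not a full blockchain) uses SHA-256 from Python's standard library; the record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(payload: dict, prev_hash: str) -> str:
    """SHA-256 over a canonical JSON encoding of the payload and previous hash."""
    body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(chain: list[dict], payload: dict) -> None:
    """Append a record that commits to the hash of the previous record."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"payload": payload, "prev": prev, "hash": record_hash(payload, prev)})


def verify_chain(chain: list[dict]) -> bool:
    """Recompute every hash; any edited or reordered record breaks the chain."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev or entry["hash"] != record_hash(entry["payload"], prev):
            return False
        prev = entry["hash"]
    return True


if __name__ == "__main__":
    log: list[dict] = []
    append_record(log, {"dataset": "assay_batch_1", "rows": 120})
    append_record(log, {"dataset": "assay_batch_2", "rows": 95})
    print(verify_chain(log))  # True
    log[0]["payload"]["rows"] = 121  # simulate tampering with an earlier record
    print(verify_chain(log))  # False
```

Because each hash depends on every earlier record, altering any past entry causes verification to fail from that point onward.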

Current Limitations and Research Needs

Despite the remarkable progress achieved through computer science applications in drug discovery, several challenges remain. One persistent limitation is the accuracy and reliability of computational models in predicting complex biological interactions. Although machine learning models can predict in vitro assay outcomes with high accuracy, translating these predictions into in vivo outcomes remains a major challenge because living systems are dynamic and multifactorial. Researchers continue to work on integrating more realistic physiological parameters into their models, such as cellular heterogeneity, tissue-level interactions, and pharmacokinetics, to improve predictive power.

Data quality and availability also limit the potential of computational approaches. The effectiveness of AI and machine learning models depends heavily on high-quality, comprehensive datasets. However, many datasets contain noise, are incomplete, or suffer from sampling biases. Standardizing data collection methods, enhancing public databases, and promoting data sharing initiatives are vital future research needs that could significantly improve the performance of computational models.

Furthermore, while deep learning methods have provided unprecedented capabilities for pattern recognition and molecule generation, these models are often criticized for their lack of interpretability. Developing explainable AI (XAI) frameworks that can elucidate the reasoning behind a model’s predictions is a critical research need. Such transparency not only increases trust in computational results among regulatory agencies and clinicians but also aids researchers in understanding the underlying biological mechanisms.
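Permutation feature importance is one simple, model-agnostic explainability technique: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below assumes a hypothetical classifier that uses only the first of two features, so the second feature should receive zero importance; the data, model, and repeat count are illustrative.

```python
import random
from typing import Callable

Model = Callable[[list[float]], int]


def accuracy(model: Model, X: list[list[float]], y: list[int]) -> float:
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(x) == t for x, t in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: list[list[float]], y: list[int],
                           n_repeats: int = 20, seed: int = 0) -> list[float]:
    """Mean accuracy drop when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the labels
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_classifier(x: list[float]) -> int:
    """Hypothetical model: predicts 'toxic' when feature 0 exceeds 0.5."""
    return int(x[0] > 0.5)


if __name__ == "__main__":
    X = [[0.9, 0.1], [0.8, 0.7], [0.7, 0.3], [0.6, 0.9],
         [0.2, 0.5], [0.1, 0.2], [0.3, 0.8], [0.4, 0.4]]
    y = [1, 1, 1, 1, 0, 0, 0, 0]
    print(permutation_importance(toy_classifier, X, y))
```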

Lastly, the integration and interoperability of various computational platforms remain a challenge. The drug discovery process involves a myriad of data types—from genomic sequences and protein structures to chemical properties and patient records. Creating a cohesive ecosystem where these diverse data sources and computational tools can seamlessly interact is essential for realizing the full potential of computer science in drug discovery. Future research must focus on developing standardized protocols, common data formats, and integrated software solutions that can handle the complexity of modern drug development pipelines.

Conclusion

In conclusion, computer science has emerged as an indispensable driver of modern drug discovery and development. Computational methods have reshaped every stage of the traditional process, from target identification to clinical trials, and now help researchers overcome longstanding challenges such as high attrition rates, long timeframes, and exorbitant costs. Through bioinformatics and computational biology, scientists can integrate vast and diverse biological data to identify new drug targets, while machine learning and artificial intelligence provide predictive models that improve the selection and optimization of drug candidates. Likewise, data mining and big data analytics draw on large volumes of experimental and clinical data to inform decision-making and improve predictions of clinical outcomes.

The impact of these computational techniques is already evident in success stories such as crizotinib, where structure-based design and molecular modeling accelerated the discovery process and delivered an effective therapeutic agent. However, alongside these technical achievements, important ethical and regulatory considerations must be addressed. Issues such as data privacy, algorithmic transparency, and the need for standardized validation protocols underscore the importance of robust frameworks that ensure both patient safety and scientific accountability.

Looking ahead, emerging technologies like quantum computing, automated synthesis, and immersive visualization tools present promising avenues for further innovation, while current limitations such as model interpretability, data quality, and system integration continue to inspire ongoing research. The future of drug discovery and development will undoubtedly be shaped by the evolving landscape of computer science—driving faster, more efficient, and cost-effective solutions that bring novel therapies to market and improve patient outcomes worldwide.

Thus, computer science not only complements but actively transforms drug discovery by offering multifaceted perspectives—from detailed molecular interactions and predictive analytics to scalable data integration and ethical oversight. This integration of disciplinary expertise presents a promising, sustainable, and ultimately life-enhancing paradigm for the future of pharmaceutical research and clinical application.

Drug discovery and development is an inherently complex, interdisciplinary process that spans from the identification of novel therapeutic targets to the eventual market approval of a drug. Over the past few decades, the field has witnessed a tremendous shift—transitioning from traditional trial‐and‐error laboratory methods toward more integrated approaches where computer science plays an increasingly crucial role in optimizing every stage of the process. In this section, we provide an overview of the traditional drug discovery process and discuss its intrinsic challenges.

Overview of Drug Discovery Process

The traditional process of drug discovery is a multi‐step, high‐cost, and lengthy endeavor, starting with target identification and validation, followed by hit identification, lead optimization, preclinical evaluations, and multiple phases of clinical trials before a drug can reach the market. Initially, researchers use biochemical and cellular assays to identify potential compounds that interact with a particular biological target—typically proteins involved in disease pathology. Once these lead compounds are identified, extensive optimization, structural refinement, and safety/toxicity testing are undertaken to improve their efficacy and pharmacokinetic properties.

Historically, the synthesis and screening of enormous libraries of chemical compounds were the driving forces of early drug discovery. However, as the chemical space (which potentially includes over 10^50 small molecules) became recognized as vastly underexplored, it soon became clear that random screening was not efficient. Over time, structure-based drug design (SBDD) emerged when the three-dimensional structures of target proteins became more readily available via X-ray crystallography and nuclear magnetic resonance spectroscopy (NMR). This allowed researchers to predict and model the interaction between targets and candidate molecules at the atomic level, significantly improving the hit rate for promising candidates and streamlining the subsequent optimization steps.

The modern drug discovery process increasingly integrates computational methods with traditional experimental procedures. High-throughput screening (HTS) platforms, automated synthesis, and robust in silico models now work in tandem, enabling the rapid filtration, scoring, and selection of candidate molecules from virtual libraries before entering the costly phases of laboratory synthesis and experimental validation. These computational techniques provide a means to simulate binding affinity, predict potential off-target effects, and assess ADMET properties—thereby dramatically reducing development timelines and failure rates.

Challenges in Traditional Drug Discovery

Despite the advancements in molecular biology and medicinal chemistry, traditional methods of drug discovery face several significant challenges. One of the foremost issues is the high rate of attrition; even after considerable investment, only a small fraction of compounds that enter clinical trials become approved drugs, often due to unforeseen toxicity, inadequate efficacy, or poor pharmacokinetics. Such failures not only represent financial losses of billions of dollars but also delay the availability of life-saving treatments.

Another challenge is the inherently time-consuming and labor-intensive nature of conventional screening methods. Classical techniques rely heavily on repeated chemical syntheses, in vitro assays, and animal testing, which can take over a decade from concept to market. Furthermore, traditional experimental approaches provide limited insights into complex molecular interactions and dynamic processes such as conformational changes, binding kinetics, and allosteric effects—parameters that are critically important for understanding drug mechanism of action.

The sheer vastness of chemical space also poses a problem; without computational guidance, researchers are left to explore only a minuscule fraction of potentially active compounds. This inadequacy in addressing the chemical diversity of drug candidates is compounded by the difficulties in predicting off-target effects and polypharmacology, where a drug might interact with multiple proteins, leading to unintended side effects. Finally, the regulatory landscape adds an additional layer of complexity. Meeting stringent safety and efficacy standards often requires extensive and repetitious clinical trials, further elongating the development cycle and contributing to high overall costs.

Computer Science Techniques in Drug Discovery

Computer science has introduced an arsenal of powerful techniques that have substantially changed drug discovery and development. These methods address many of the challenges inherent to traditional processes and bring new levels of efficiency, precision, and innovation. By bridging the gap between raw biological data and actionable insights, computer science contributes to every phase of drug development.

Bioinformatics and Computational Biology

Bioinformatics and computational biology play a central role in modern drug discovery by providing tools for sequence analysis, structural prediction, and pathway modeling. These fields enable researchers to collect, analyze, and integrate large-scale biological data, from genomic sequences to protein structures, making the identification of novel drug targets more systematic and data-driven.

For example, bioinformatics resources such as curated databases of protein–ligand interactions support virtual docking experiments, the identification of candidate binding sites on target proteins, and modeling approaches such as molecular dynamics (MD) simulation and quantitative structure–activity relationship (QSAR) analysis. MD simulations model the dynamic behavior of biomolecules, offering insights into binding affinities, kinetics, and thermodynamic properties that are critical for rational drug design, while QSAR models relate measurable molecular descriptors to biological activity. These computational models are continually refined with experimental data from techniques such as X-ray crystallography and NMR, helping ensure that predicted interactions reflect real biological behavior.
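
To make the QSAR idea concrete, here is a minimal one-descriptor linear QSAR fitted by ordinary least squares. The descriptor (a calculated logP), the activity values (pIC50), and the function names are hypothetical illustration choices; real QSAR models typically use many descriptors, larger training sets, and external validation.

```python
def fit_linear_qsar(descriptor: list[float], activity: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of: activity = slope * descriptor + intercept."""
    n = len(descriptor)
    if n != len(activity) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(descriptor) / n
    mean_y = sum(activity) / n
    sxx = sum((x - mean_x) ** 2 for x in descriptor)
    if sxx == 0:
        raise ValueError("descriptor values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(descriptor, activity))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(descriptor: list[float], activity: list[float],
              slope: float, intercept: float) -> float:
    """Coefficient of determination of the fitted line on the given data."""
    mean_y = sum(activity) / len(activity)
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(descriptor, activity))
    ss_tot = sum((y - mean_y) ** 2 for y in activity)
    if ss_tot == 0:
        raise ValueError("activity values must not all be identical")
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Hypothetical training set: calculated logP vs. measured pIC50.
    logp = [1.0, 2.0, 3.0, 4.0]
    pic50 = [5.1, 5.9, 7.1, 7.9]
    slope, intercept = fit_linear_qsar(logp, pic50)
    print(f"pIC50 = {slope:.2f} * logP + {intercept:.2f}")
    print(f"R^2 = {r_squared(logp, pic50, slope, intercept):.3f}")
    print(f"predicted pIC50 at logP 2.5: {slope * 2.5 + intercept:.2f}")
```

An R² computed on the training data, as here, overstates predictive power; in a real QSAR study the model would be judged on held-out compounds.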

In addition, bioinformatics facilitates the detailed study of biological networks and pathways, which is essential for understanding diseases at a systems level. Such network-based methods help in identifying key nodes (e.g., proteins, genes) whose perturbation can lead to therapeutic effects. The integration of diverse omics data—genomics, transcriptomics, proteomics, and metabolomics—into computational platforms provides a holistic picture of disease mechanisms, allowing for the prioritization of molecular targets that might have been overlooked in traditional approaches. These systems-level insights have proven particularly valuable in complex diseases like cancer and neurodegenerative disorders.
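
One of the simplest network-based heuristics for spotting such key nodes is degree centrality: proteins that interact with many partners are flagged as potential hubs. The sketch below computes normalized degree centrality for a toy, undirected interaction network. The protein labels, edges, and function names are hypothetical, and real analyses usually combine several centrality measures with disease-specific omics data.

```python
from collections import defaultdict


def degree_centrality(edges: list[tuple[str, str]]) -> dict[str, float]:
    """Normalized degree centrality (degree / (n - 1)) for an undirected network."""
    neighbors: dict[str, set[str]] = defaultdict(set)
    for a, b in edges:
        if a == b:
            continue  # ignore self-interactions
        neighbors[a].add(b)  # sets also collapse duplicate edges
        neighbors[b].add(a)
    n = len(neighbors)
    if n < 2:
        return {node: 0.0 for node in neighbors}
    return {node: len(adj) / (n - 1) for node, adj in neighbors.items()}


def rank_hubs(edges: list[tuple[str, str]], top_k: int = 3) -> list[tuple[str, float]]:
    """Return the top_k nodes by centrality, breaking ties alphabetically."""
    scores = degree_centrality(edges)
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:top_k]


if __name__ == "__main__":
    # Hypothetical protein-protein interaction edges.
    ppi = [("P1", "P2"), ("P1", "P3"), ("P1", "P4"), ("P1", "P5"),
           ("P2", "P3"), ("P4", "P6")]
    for node, score in rank_hubs(ppi):
        print(node, round(score, 2))
```

Degree alone ignores how a protein sits within pathways, which is why measures such as betweenness or network proximity to known disease genes are often used alongside it.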

Machine Learning and Artificial Intelligence

Machine learning (ML) and artificial intelligence (AI) are perhaps the most transformative technologies currently redefining the drug discovery landscape. With machine learning, researchers can design predictive models that learn from vast datasets of chemical structures and biological activities to identify patterns and make predictions about the efficacy, toxicity, and pharmacokinetics of potential drug candidates. These technologies are adept at handling high-dimensional data, enabling the rapid screening of millions of molecules against multiple targets simultaneously.
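
A classic, easily inspected example of learning from chemical structures and activities is k-nearest-neighbor prediction with Tanimoto similarity between molecular fingerprints. The sketch below represents each fingerprint as a set of "on" bit indices and predicts a query compound's activity as the similarity-weighted mean of its most similar training compounds. The bit sets, activity values, and function names are hypothetical, and this simple method stands in for, rather than reproduces, the more complex models used in practice.

```python
def tanimoto(a: frozenset[int], b: frozenset[int]) -> float:
    """Tanimoto similarity between two sets of 'on' fingerprint bit indices."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def knn_predict(query: frozenset[int],
                training: list[tuple[frozenset[int], float]],
                k: int = 3) -> float:
    """Similarity-weighted mean activity of the k most similar training compounds."""
    if not training:
        raise ValueError("training set is empty")
    if k < 1:
        raise ValueError("k must be at least 1")
    scored = sorted(((tanimoto(query, fp), act) for fp, act in training),
                    key=lambda pair: pair[0], reverse=True)[:k]
    total_sim = sum(sim for sim, _ in scored)
    if total_sim == 0:
        # No structural overlap with any neighbor: fall back to an unweighted mean.
        return sum(act for _, act in scored) / len(scored)
    return sum(sim * act for sim, act in scored) / total_sim


if __name__ == "__main__":
    # Hypothetical fingerprints (sets of bit indices) with pIC50 activities.
    train = [
        (frozenset({1, 4, 9, 12, 20}), 7.2),
        (frozenset({1, 4, 9, 15}), 6.8),
        (frozenset({2, 3, 30, 31}), 4.9),
        (frozenset({1, 9, 12, 22}), 7.0),
    ]
    query = frozenset({1, 4, 9, 12})
    print(round(knn_predict(query, train, k=2), 2))
```

Weighting by similarity lets close structural analogs dominate the prediction, reflecting the assumption that similar structures tend to have similar activity.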

Deep learning, a subset of machine learning, has shown particular promise in de novo drug design and virtual screening. Architectures such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) can capture complex, nonlinear relationships in data and, on some benchmarks, outperform traditional machine learning models. Deep learning models are used to predict drug-target interactions, optimize ligand binding affinities, and generate novel chemical entities with desired properties by exploring previously uncharted regions of chemical space. For instance, an AI-driven workflow might first screen a vast chemical library virtually and then synthesize only a small fraction of the top-ranked candidates for experimental validation, reducing time and cost.

Moreover, ML approaches have been applied to predict adverse drug reactions by modeling the polypharmacological behavior of drugs through supervised learning techniques. By learning from historical data on drug side effects, these models can flag potentially harmful compounds early in development, enhancing patient safety and reducing the risk associated with clinical trials. Such predictive capabilities have also been extended to inform personalized treatment regimens by correlating individual patients' genetic profiles with drug response.

The integration of AI with other computational methods has further enhanced the drug discovery process. For example, coupling molecular docking with machine learning has improved the accuracy and reliability of binding free energy predictions. In parallel, AI-based generative models can design entirely new molecular scaffolds, pushing the boundaries of traditional medicinal chemistry. This combination of generative design and rigorous screening is a key driver of many recent drug discovery projects.

Data Mining and Big Data Analytics

The advent of big data in drug discovery has transformed the way researchers approach both target identification and candidate screening. Modern drug discovery relies on vast databases that contain chemical structures, biological assay data, patient genomic information, and clinical outcomes. Data mining and big data analytics provide the tools to extract patterns, correlations, and actionable insights from these heterogeneous datasets, which would be impossible to decipher manually.

Advanced data mining techniques enable the integration of diverse data types from multiple sources such as public databases (e.g., PubChem, ChEMBL) and proprietary datasets. These integrated approaches facilitate the rapid identification of molecular targets, prediction of drug efficacy, and discovery of potential off-target interactions that could lead to adverse effects. Moreover, machine learning algorithms operating on these large databases can discover novel drug-disease associations by analyzing network-centric data—thereby expanding our understanding of the interconnections between biological systems and chemical entities.

Further, big data analytics support the use of predictive models in clinical trial design. By analyzing historical trial data, researchers can simulate trial outcomes, optimize patient recruitment strategies, and even predict which patient subgroups will likely benefit from a new therapy. This predictive power is invaluable in reducing the time and cost associated with clinical testing by enabling more informed decision-making before actual trials commence. In addition, structured data platforms and curated databases facilitate reproducible research practices—ensuring that findings can be validated and built on by the broader scientific community.
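
Simulating trial outcomes can be as simple as a Monte Carlo power estimate. The sketch below assumes a two-arm trial with a binary response, hypothetical response rates, and a pooled two-proportion z-test; it repeatedly simulates the trial and reports the fraction of runs in which the treatment arm is significantly better. The function names and parameter values are illustrative assumptions, and real trial-design tools model far more (dropout, covariates, interim analyses).

```python
import math
import random


def two_proportion_z(x_ctrl: int, n_ctrl: int, x_trt: int, n_trt: int) -> float:
    """Pooled two-proportion z statistic for (treatment rate - control rate)."""
    pooled = (x_ctrl + x_trt) / (n_ctrl + n_trt)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_ctrl + 1 / n_trt))
    if se == 0:
        return 0.0  # all responders or all non-responders: no evidence either way
    return (x_trt / n_trt - x_ctrl / n_ctrl) / se


def simulate_power(p_ctrl: float, p_trt: float, n_per_arm: int,
                   n_sims: int = 1000, z_crit: float = 1.96, seed: int = 0) -> float:
    """Fraction of simulated trials in which treatment is significantly better.

    z_crit = 1.96 corresponds to the upper tail of a two-sided test at alpha = 0.05.
    """
    rng = random.Random(seed)  # fixed seed makes the estimate reproducible
    significant = 0
    for _ in range(n_sims):
        x_ctrl = sum(rng.random() < p_ctrl for _ in range(n_per_arm))
        x_trt = sum(rng.random() < p_trt for _ in range(n_per_arm))
        if two_proportion_z(x_ctrl, n_per_arm, x_trt, n_per_arm) > z_crit:
            significant += 1
    return significant / n_sims


if __name__ == "__main__":
    # Hypothetical response rates: 20% on control, 30% on the new therapy.
    for n in (50, 150, 300):
        print(n, simulate_power(0.20, 0.30, n))
```

Comparing the estimates across several values of `n` gives a rough sense of how many patients per arm would be needed to detect the assumed difference.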

Impact on Drug Development

The integration of computer science techniques in drug discovery and development has led to significant advancements in efficiency, cost reduction, and overall process optimization. By addressing the key limitations of traditional experimental methods, computational technologies have not only accelerated the pace of research but also improved the precision and reliability of predictions regarding drug efficacy and safety.

Efficiency and Cost Reduction

One of the most notable impacts of computer science in drug development is the drastic reduction of time and costs. Traditional drug discovery processes are characterized by high attrition rates and the need for extensive empirical testing, which can take over a decade and involve billions of dollars in investment. Computational methods streamline this process by enabling in silico screening that filters out ineffective compounds early in the pipeline. For example, using high-throughput virtual screening, researchers can simulate docking of millions of molecules to a target protein, identifying the most promising candidates before any wet-lab synthesis is conducted. This approach reduces the need for costly and time-consuming laboratory experiments.
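
The selection step in high-throughput virtual screening often amounts to ranking compounds by a precomputed docking score and forwarding only the top fraction to synthesis. The sketch below assumes, as with many docking programs, that more negative scores indicate stronger predicted binding; the compound names, scores, function name, and 1% default cutoff are hypothetical.

```python
import heapq


def select_for_synthesis(docking_scores: dict[str, float],
                         fraction: float = 0.01,
                         min_count: int = 1) -> list[str]:
    """Return names of the best-scoring fraction of a virtually screened library.

    Assumes lower (more negative) docking scores mean stronger predicted binding.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    n_keep = max(min_count, int(len(docking_scores) * fraction))
    # nsmallest avoids fully sorting a library that may hold millions of entries.
    best = heapq.nsmallest(n_keep, docking_scores.items(), key=lambda item: item[1])
    return [name for name, _ in best]


if __name__ == "__main__":
    # Hypothetical docking scores (kcal/mol) for a tiny library.
    scores = {"mol_001": -9.2, "mol_002": -6.1, "mol_003": -10.4,
              "mol_004": -7.7, "mol_005": -8.5}
    print(select_for_synthesis(scores, fraction=0.4))  # ['mol_003', 'mol_001']
```

Because docking scores are approximate, real workflows usually re-score or visually inspect the shortlisted poses before committing to synthesis.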

Moreover, AI-driven predictive models further refine this process. By analyzing large datasets, machine learning algorithms can estimate binding affinities, predict pharmacokinetic properties, and flag potential toxicity issues, allowing pharmaceutical companies to focus resources on a smaller subset of the most promising drug candidates. The expected result is lower research costs and a shorter time-to-market for new drugs, a critical advantage in a competitive industry where the average cost of developing a single approved drug has been estimated at about $2.6 billion.

Computational methods also offer the benefit of scalability. The increased availability of supercomputing and cloud-based resources means that even large-scale simulations and deep learning models are accessible to both academic researchers and industrial laboratories. This democratization of computational power allows a broader range of scientists to contribute to drug discovery, further accelerating innovation. Computer-aided drug design has also contributed to drugs that have reached clinical approval, demonstrating its practical value.

Case Studies and Success Stories

Several case studies highlight the impact of computer science on drug development. For example, the development of crizotinib, an inhibitor of the c-Met and ALK kinases, illustrates the successful integration of structure-based design with computational chemistry. By leveraging detailed co-crystal structure analyses and molecular docking simulations, researchers designed and optimized crizotinib's binding interactions, resulting in a potent drug with improved pharmacokinetic properties that received FDA approval in 2011.

Similarly, advances in deep learning have accelerated the discovery of novel compounds with desirable properties. Companies have reported using AI-assisted platforms to propose new chemical structures that were later validated experimentally, reducing experimental workload and associated costs. Looking further ahead, hybrid systems combining classical and quantum computing have been proposed to predict binding thermodynamics and kinetics more accurately. Together, these examples suggest that computational approaches can streamline early-stage discovery and may also improve success rates in later clinical phases by helping to anticipate side effects and optimize drug formulations.

Furthermore, big data analytics have played a significant role in several success stories by integrating clinical, genomic, and chemical data to predict drug response in patient populations. For instance, AI-powered systems have been used to analyze electronic health records (EHRs) and multi-omics data to tailor personalized treatment regimens, with the aim of giving patients drugs that are both efficacious and well tolerated. In these ways, computer science has contributed to both the economic and clinical success of drug development initiatives.

Ethical and Regulatory Considerations

While computer science techniques in drug discovery promise substantial benefits, they also introduce unique ethical and regulatory challenges. Addressing these considerations is essential to ensure that the advancements in computational methods translate into safe, effective, and ethically sound medical treatments.

Ethical Implications

The integration of advanced computational methods such as AI and machine learning in drug discovery raises important ethical questions related to data privacy, bias, and accountability. The reliance on vast amounts of personal and genomic data necessitates strict safeguards to protect patient privacy. Moreover, data-driven decision-making can inadvertently perpetuate biases present in the datasets if they are not carefully curated and balanced. For example, if a machine learning model is trained primarily on data from a specific population, its predictions may not be generalizable to other demographic groups, thus leading to inequitable healthcare outcomes.
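
One practical way to detect this kind of generalization problem is to report a model's performance separately for each demographic group rather than only in aggregate. The sketch below computes per-group accuracy and the largest gap between groups from hypothetical `(group, predicted, actual)` records; the group labels and function names are illustrative, and a real audit would use richer metrics (for example sensitivity and calibration per group) and enough samples per group to make the comparison meaningful.

```python
from collections import defaultdict
from collections.abc import Iterable


def accuracy_by_group(records: Iterable[tuple[str, int, int]]) -> dict[str, float]:
    """records: (group, predicted, actual) triples. Returns accuracy per group."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def largest_accuracy_gap(records: Iterable[tuple[str, int, int]]) -> float:
    """Difference between the best- and worst-served groups' accuracy."""
    acc = accuracy_by_group(records)
    if not acc:
        raise ValueError("no records supplied")
    return max(acc.values()) - min(acc.values())


if __name__ == "__main__":
    # Hypothetical adverse-reaction predictions (1 = reaction) with true outcomes.
    preds = [("group_A", 1, 1), ("group_A", 0, 0), ("group_A", 1, 1), ("group_A", 0, 1),
             ("group_B", 1, 0), ("group_B", 0, 0)]
    print(accuracy_by_group(preds))     # {'group_A': 0.75, 'group_B': 0.5}
    print(largest_accuracy_gap(preds))  # 0.25
```

A large gap does not by itself explain the cause, but it signals that the training data or model may underserve some populations and warrants closer review.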

Furthermore, there is an ethical imperative to maintain transparency in the methodologies used. The “black-box” nature of many deep learning algorithms can make it difficult for clinicians and patients to understand how certain decisions are reached. This lack of transparency can undermine trust and accountability, especially when critical decisions, such as predicting adverse drug reactions or determining patient eligibility for a clinical trial, are based solely on computational predictions. It is therefore crucial to develop explainable AI frameworks that offer insight into how these models reach their decisions, so that ethical standards are upheld throughout the drug discovery and development pipeline.

Ethical issues also arise in the context of intellectual property and data sharing. As computational models and databases become increasingly central to drug discovery, questions about data ownership, sharing policies, and the proprietary nature of AI algorithms become prominent. Balancing open science ideals with commercial interests is a complex challenge that requires clear guidelines and policies to ensure that the benefits of computational advances are equitably distributed.

Regulatory Challenges

The accelerating pace of computational drug discovery has, in many ways, outpaced the regulatory frameworks that govern drug approval and clinical trials. Regulatory bodies such as the FDA have traditionally relied on extensive experimental data to evaluate new drugs, and the integration of in silico data poses new challenges in terms of validation and standardization. For instance, when a drug candidate is primarily developed through computational predictions, regulators must assess how these in silico findings correlate with in vitro and in vivo results. This creates a need for rigorous validation protocols and guidelines to ensure that computational models are accurate, reproducible, and reliable.

Moreover, the implementation of AI and machine learning in drug development requires that models are continuously updated and refined as new data become available. Regulatory agencies must therefore develop frameworks that allow for adaptive approvals, where computational models can be updated post-market while still maintaining safety and efficacy standards. The dynamic nature of AI-driven systems also raises concerns about quality control, software reliability, and cybersecurity, all of which must be addressed before regulators can fully endorse these methodologies.

Another important aspect is the reproducibility of computational research. As highlighted in recent studies, the reproducibility crisis in science is not limited to experimental data—it also affects computational models. Establishing standardized benchmarking, transparent reporting guidelines, and open-access repositories for code and data are crucial steps to foster confidence in computational approaches among regulators and the broader scientific community. In summary, while computer science offers transformative potential for drug development, robust ethical and regulatory frameworks must be established to ensure safe, fair, and accountable translation of these methods into clinical practice.

Future Directions and Challenges

Looking forward, computer science is poised to continue its transformative influence on drug discovery and development. However, several emerging technologies and current limitations must be addressed to fully realize the potential of computational methods in this field.

Emerging Technologies

The development of hybrid computational systems that integrate classical and quantum computing represents one of the most exciting frontiers in drug discovery. Quantum computers may eventually simulate the electronic structure of molecules more accurately than is practical on classical hardware, which could help overcome the limitations of conventional simulation methods in predicting binding energies and conformational dynamics. Combining quantum computing with traditional molecular dynamics and AI-driven prediction models could lead to a new era of precision drug design, in which the entire process, from molecule generation to clinical outcome prediction, is optimized and streamlined.

Furthermore, advancements in robotics and automation are poised to work in synergy with computational methods. For example, automated synthesis and high-throughput screening platforms, when coupled with real-time data analytics and machine learning algorithms, could revolutionize the early stages of drug discovery. This integration would allow for nearly autonomous drug discovery pipelines that not only generate and test candidate compounds at a rapid pace but also adapt based on continuous feedback from computational models.

Virtual reality (VR) and augmented reality (AR) technologies also present novel opportunities for drug discovery. These immersive technologies can provide researchers with three-dimensional visualizations of molecular interactions, enabling real-time manipulation and analysis of complex biomolecular structures. Such tools have the potential to make structure-based drug design more intuitive and comprehensible—even for those who are not experts in computational modeling.

Another emerging technology is the use of blockchain for secure data sharing and verification. Given the ethical and regulatory concerns surrounding patient data and proprietary research, blockchain may offer a solution for ensuring data integrity, transparency, and secure collaboration across the global research community. This technology could foster an environment of open innovation while still protecting sensitive information and intellectual property rights.

Current Limitations and Research Needs

Despite the remarkable progress achieved through computer science applications in drug discovery, several challenges remain. One persistent limitation is the accuracy and reliability of computational models in predicting complex biological interactions. Although machine learning models have achieved high accuracy on in vitro assay data, translating these predictions into in vivo outcomes remains a major challenge because living systems are dynamic and multifactorial. Researchers continue to incorporate more realistic physiological parameters into their models, such as cellular heterogeneity, tissue-level interactions, and pharmacokinetics, to improve predictive power.

Data quality and availability also limit the potential of computational approaches. The effectiveness of AI and machine learning models depends heavily on high-quality, comprehensive datasets. However, many datasets contain noise, are incomplete, or suffer from sampling biases. Standardizing data collection methods, enhancing public databases, and promoting data sharing initiatives are vital future research needs that could significantly improve the performance of computational models.

Furthermore, while deep learning methods have provided unprecedented capabilities for pattern recognition and molecule generation, these models are often criticized for their lack of interpretability. Developing explainable AI (XAI) frameworks that can elucidate the reasoning behind a model’s predictions is a critical research need. Such transparency not only increases trust in computational results among regulatory agencies and clinicians but also aids researchers in understanding the underlying biological mechanisms.

Lastly, the integration and interoperability of various computational platforms remain a challenge. The drug discovery process involves a myriad of data types—from genomic sequences and protein structures to chemical properties and patient records. Creating a cohesive ecosystem where these diverse data sources and computational tools can seamlessly interact is essential for realizing the full potential of computer science in drug discovery. Future research must focus on developing standardized protocols, common data formats, and integrated software solutions that can handle the complexity of modern drug development pipelines.

Conclusion

In conclusion, computer science has emerged as an indispensable driver of modern drug discovery and development. Applied across the pipeline, from target identification to clinical trials, computational methods now help researchers overcome longstanding challenges such as high attrition rates, long timeframes, and exorbitant costs. Through bioinformatics and computational biology, scientists can integrate vast and diverse biological data to identify new drug targets, while machine learning and artificial intelligence provide powerful predictive models that improve the selection and optimization of drug candidates. Likewise, data mining and big data analytics harness large volumes of experimental and clinical data to refine decision-making and predict clinical outcomes with increasing accuracy.

The impact of these computational techniques is already evident in success stories such as crizotinib, where structure-based design, co-crystal structure analysis, and molecular docking helped deliver an effective therapeutic agent, and in more recent AI-assisted projects that use deep learning to propose and prioritize new compounds. However, alongside these technical achievements, important ethical and regulatory considerations must be addressed. Issues such as data privacy, algorithmic transparency, and the need for standardized validation protocols underscore the importance of establishing robust frameworks that ensure both patient safety and scientific accountability.

Looking ahead, emerging technologies such as quantum computing, automated synthesis, and immersive visualization tools present promising avenues for further innovation, while current limitations in model interpretability, data quality, and system integration continue to drive research. The future of drug discovery and development is likely to be shaped in large part by the evolving landscape of computer science, which promises faster, more efficient, and more cost-effective ways to bring novel therapies to market and improve patient outcomes worldwide.

Thus, computer science not only complements but actively transforms drug discovery by offering multifaceted perspectives—from detailed molecular interactions and predictive analytics to scalable data integration and ethical oversight. This integration of disciplinary expertise presents a promising, sustainable, and ultimately life-enhancing paradigm for the future of pharmaceutical research and clinical application.

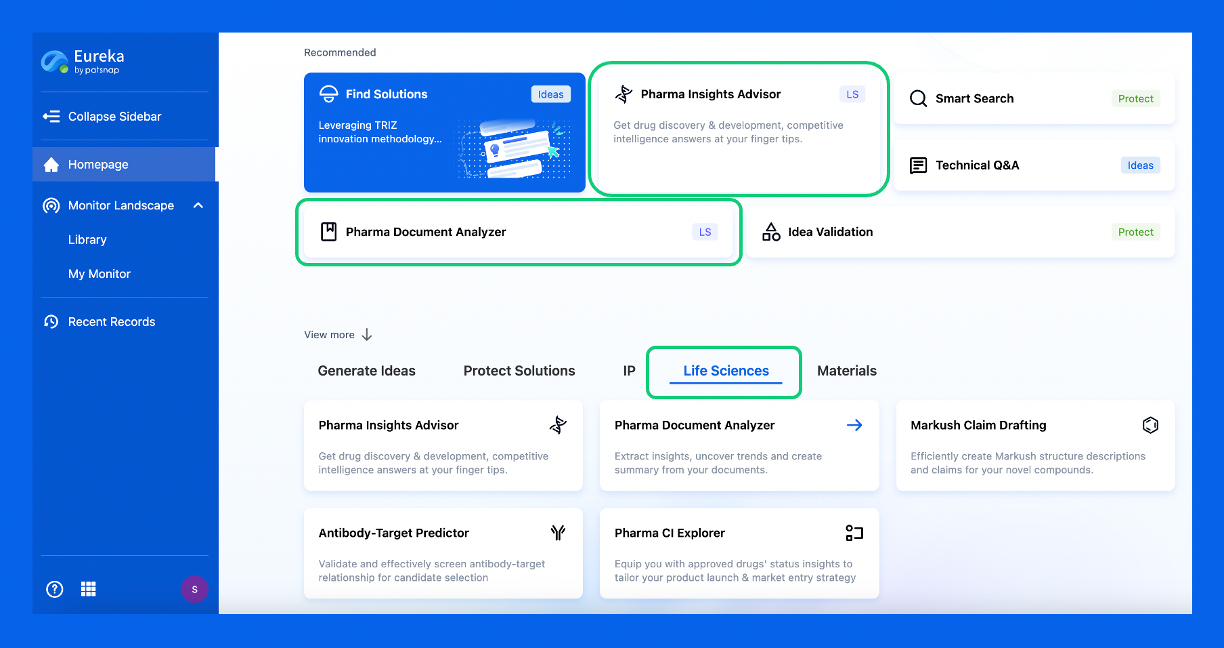

Discover Eureka LS: AI Agents Built for Biopharma Efficiency

Stop wasting time on biopharma busywork. Meet Eureka LS - your AI agent squad for drug discovery.

▶ See how 50+ research teams saved 300+ hours/month

From reducing screening time to simplifying Markush drafting, our AI Agents are ready to deliver immediate value. Explore Eureka LS today and unlock powerful capabilities that help you innovate with confidence.

AI Agents Built for Biopharma Breakthroughs

Accelerate discovery. Empower decisions. Transform outcomes.

Get started for free today!

Accelerate Strategic R&D decision making with Synapse, PatSnap’s AI-powered Connected Innovation Intelligence Platform Built for Life Sciences Professionals.

Start your data trial now!

Synapse data is also accessible to external entities via APIs or data packages. Empower better decisions with the latest in pharmaceutical intelligence.