How does AI assist in the discovery of small molecule drugs?

21 March 2025

Introduction to AI in Drug Discovery

Artificial intelligence (AI) has emerged as a transformative force in drug discovery. Machine learning (ML), deep learning (DL), and related computational techniques can integrate large volumes of genomic, chemical, and clinical data. They are reshaping a notoriously time‐consuming and expensive process by helping researchers identify, design, and optimize small molecule drugs more quickly. By combining rich data resources with sophisticated algorithms, AI systems can extract patterns, predict outcomes, and surface insights that are difficult to obtain with conventional methods alone.

Overview of AI Technologies

AI technologies in drug discovery span a range of methods: supervised learning algorithms such as support vector machines (SVMs) and random forests (RFs); unsupervised learning methods; neural network architectures including deep neural networks (DNNs), generative adversarial networks (GANs), and variational autoencoders (VAEs); and low-data approaches such as one-shot learning. These methods can handle diverse input data, including molecular descriptors, protein sequences, and the three-dimensional structures used in molecular docking. To make molecules machine-readable, they are encoded as molecular fingerprints, graphs, or text sequences such as SMILES strings, and models trained on these representations are used to predict bioactivity, toxicity, and pharmacokinetic properties.
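The fingerprint representation mentioned above can be illustrated with a minimal sketch. It assumes a binary fingerprint is stored as the set of bit positions that are switched on and compares two molecules with the Tanimoto coefficient (shared bits divided by all distinct bits), a similarity measure commonly used with such fingerprints. The bit sets are invented placeholders; real fingerprints would be generated by a cheminformatics toolkit, which this sketch does not call.

```python
def tanimoto(fp_a: set[int], fp_b: set[int]) -> float:
    """Tanimoto coefficient |A & B| / |A | B| for fingerprints given as sets of on-bits."""
    union = len(fp_a | fp_b)
    if union == 0:
        # Assumed convention for this sketch: two empty fingerprints score 0.0.
        return 0.0
    return len(fp_a & fp_b) / union


# Hypothetical fingerprints (indices of bits set to 1), not computed from real molecules.
query = {3, 17, 42, 88, 120}
candidate = {3, 17, 42, 99}

print(tanimoto(query, candidate))  # 3 shared bits / 6 distinct bits = 0.5
```

Scores range from 0 (no shared bits) to 1 (identical bit sets); what counts as "similar" depends on the fingerprint type and is not fixed here.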

AI also supports virtual screening, in which docking simulations and energy-based scoring, increasingly complemented by ML scoring models, are used to scan very large chemical libraries for promising candidate molecules. Quantitative structure–activity relationship (QSAR) modeling, a long-established statistical approach, has likewise been extended with deep learning, which can learn high-level features directly from molecular structures instead of relying on manually engineered descriptors. Together, these techniques form an interdisciplinary framework that helps identify promising drug candidates and supports iterative refinement of the drug design process.
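Whatever produces the scores in a virtual screen (a docking program or an ML model), a common way to check whether the ranking is useful is the enrichment factor: the hit rate among the top-ranked fraction of the library divided by the hit rate of the library as a whole. The sketch below assumes retrospective activity labels are available and that higher scores mean "more likely active"; the scores and labels are invented for illustration.

```python
def enrichment_factor(scores: list[float], is_active: list[bool], fraction: float) -> float:
    """EF at `fraction`: (actives in top slice / slice size) / (all actives / library size)."""
    if len(scores) != len(is_active):
        raise ValueError("scores and labels must have the same length")
    n_total = len(scores)
    n_actives = sum(is_active)
    if n_total == 0 or n_actives == 0:
        raise ValueError("need at least one compound and one known active")
    # Size of the top slice, rounded to the nearest whole compound (at least one).
    n_top = max(1, round(fraction * n_total))
    ranked = sorted(zip(scores, is_active), key=lambda pair: pair[0], reverse=True)
    hits_in_top = sum(active for _, active in ranked[:n_top])
    return (hits_in_top / n_top) / (n_actives / n_total)


# Hypothetical screen of 10 compounds, 2 of which are known actives.
scores = [0.9, 0.8, 0.75, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
labels = [True, False, True, False, False, False, False, False, False, False]

print(enrichment_factor(scores, labels, 0.2))  # (1/2) / (2/10) = 2.5
```

An EF of 1 means the ranking is no better than random; larger values mean actives are concentrated near the top of the list.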

Historical Context of AI in Drug Discovery

Historically, drug discovery relied heavily on serendipitous findings and laborious experimental screening, with programs that could span decades and require substantial financial investment. Screening campaigns, notably high-throughput screening (HTS) of chemical libraries, became a mainstay, but they test compounds empirically and offer limited predictive capability. As computing power and data availability grew, researchers began applying classical machine learning techniques to model structure–activity relationships; however, these early models were often constrained by the size and quality of the available data.

In recent years, AI has moved from a theoretical promise to a practical tool actively used by pharmaceutical companies. Collaborations between large pharmaceutical firms and technology companies have accelerated its adoption in research and development, and several case studies report improved accuracy in predicting target binding and guiding compound optimization. This evolution, from purely experimental methods to AI-enhanced discovery, has laid the groundwork for the data-driven approach that increasingly defines small molecule drug discovery.

Role of AI in Small Molecule Drug Discovery

AI plays a multifaceted role in small molecule drug discovery: it can reduce the time and cost involved, improve the accuracy of predictions, and help design novel molecules with desired properties. Its role can be broken down into three main pillars: target identification, lead compound optimization, and predictive modeling.

Target Identification

One of the primary applications of AI in drug discovery is the identification of novel therapeutic targets. Target identification involves sifting through large omics datasets (genomic, proteomic, and metabolomic data) to pinpoint genes or proteins that play a crucial role in disease pathogenesis. AI algorithms use network analysis and feature engineering to uncover target–disease associations that conventional experiments might miss.

For example, machine learning techniques can integrate heterogeneous sources such as clinical records, literature databases, and biological interaction networks into knowledge graphs, which can then be mined to propose novel protein targets or support existing ones. By applying SVMs, random forests, or neural networks to such integrated data, researchers can generate hypotheses about the involvement of specific proteins or pathways in disease and prioritize potentially druggable targets. AI-driven target identification can make this early hypothesis generation cheaper and more systematic, although its predictions still require experimental validation before they can serve as a foundation for later stages of drug development.
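A very simple network-based heuristic for target prioritization is "guilt by association": genes or proteins whose interaction partners are already linked to the disease are ranked higher. The sketch below is a deliberately minimal stand-in for the ML-on-knowledge-graph methods described above, not an implementation of them; the interaction edges and gene names are hypothetical.

```python
from collections import defaultdict


def build_network(edges: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Undirected interaction network as an adjacency map."""
    graph: dict[str, set[str]] = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def guilt_by_association(graph: dict[str, set[str]], disease_genes: set[str]) -> list[tuple[str, float]]:
    """Rank non-seed nodes by the fraction of their neighbors that are known disease genes."""
    scores = {}
    for node, neighbors in graph.items():
        if node in disease_genes or not neighbors:
            continue
        hits = sum(1 for n in neighbors if n in disease_genes)
        scores[node] = hits / len(neighbors)
    # Highest score first; ties broken alphabetically for a stable ordering.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical interactions; D1 and D2 stand for genes already associated with the disease.
edges = [("D1", "X"), ("D2", "X"), ("D1", "Y"), ("Y", "Z"), ("Z", "W")]
ranking = guilt_by_association(build_network(edges), {"D1", "D2"})
print(ranking)  # [('X', 1.0), ('Y', 0.5), ('W', 0.0), ('Z', 0.0)]
```

Real pipelines replace the neighbor count with learned models and richer evidence, but the output has the same shape: a ranked list of hypotheses to test experimentally.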

Lead Compound Optimization

After target identification comes lead discovery and optimization. In this phase, AI helps chemists refine chemical structures to improve both efficacy and safety. Traditional lead optimization depends heavily on resource-intensive iterative cycles of chemical synthesis and experimental testing. AI enables a more focused approach by using predictive models to estimate how modifications to a molecule’s scaffold will affect its activity, ADME (absorption, distribution, metabolism, and excretion) profile, and toxicity.

For instance, deep learning models can learn from protein–ligand structural data to predict binding affinities, either by scoring docked poses or by approximating the results of docking calculations at much lower cost. Generative models such as VAEs and GANs are being used to design novel chemical entities that meet specific criteria, such as high potency and desirable pharmacokinetics. One-shot learning methods, which allow models to make useful predictions from very few labeled examples, are particularly valuable where experimental data are scarce. By reducing the number of synthesis iterations and experimental validations required, AI-driven optimization can accelerate discovery and lower costs.
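Generative design and lead optimization both need a way to rank candidates against several goals at once. One common approach, sketched below under stated assumptions, maps each predicted property to a desirability between 0 and 1 and combines them with a geometric mean, so that a single unacceptable property sinks the overall score. The property names, thresholds, and values are hypothetical, and the predictions are assumed to come from upstream models.

```python
import math


def ramp(value: float, bad: float, good: float) -> float:
    """Linear desirability: 0 at `bad`, 1 at `good`, clipped to [0, 1].

    Works in either direction, e.g. bad=5, good=2 rewards lower values.
    """
    if good == bad:
        raise ValueError("bad and good thresholds must differ")
    return min(1.0, max(0.0, (value - bad) / (good - bad)))


def desirability(props: dict[str, float], targets: dict[str, tuple[float, float]]) -> float:
    """Geometric mean of per-property desirabilities; any zero makes the whole score zero."""
    ds = [ramp(props[name], bad, good) for name, (bad, good) in targets.items()]
    if any(d == 0.0 for d in ds):
        return 0.0
    return math.exp(sum(math.log(d) for d in ds) / len(ds))


# Hypothetical objectives: (bad, good) thresholds for each predicted property.
targets = {
    "pIC50": (5.0, 8.0),     # higher predicted potency is better
    "logP": (5.0, 2.0),      # lower lipophilicity is better in this range
    "tox_prob": (0.5, 0.1),  # lower predicted toxicity probability is better
}
candidate = {"pIC50": 7.0, "logP": 3.0, "tox_prob": 0.2}
greasy = {"pIC50": 7.5, "logP": 6.0, "tox_prob": 0.1}

print(round(desirability(candidate, targets), 3))  # geometric mean of 2/3, 2/3 and 0.75
print(desirability(greasy, targets))               # logP past the "bad" threshold -> 0.0
```

The geometric mean is a design choice: an arithmetic mean would let a strong potency score compensate for a toxicity flag, which is usually not what a medicinal chemist wants.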

Predictive Modeling

Predictive modeling is another cornerstone of AI in small molecule drug discovery: AI algorithms are used to predict the properties of candidate molecules before they are synthesized. Deep learning-enhanced QSAR models predict chemical and biological properties such as toxicity, bioavailability, and drug–target interactions, and can reach useful accuracy when trained on sufficient, well-curated data. These predictions let researchers eliminate compounds with unfavorable profiles early in the drug discovery pipeline.
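A classic rule-based example of filtering out compounds with unfavorable profiles early is Lipinski's rule of five, which flags likely poor oral absorption when a compound violates more than one of these limits: molecular weight above 500 Da, calculated logP above 5, more than 5 hydrogen-bond donors, or more than 10 hydrogen-bond acceptors. The sketch below applies that filter to descriptor values assumed to have been computed or predicted beforehand; the compounds and their values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Descriptors:
    name: str
    mol_weight: float  # molecular weight in daltons
    clogp: float       # calculated octanol-water partition coefficient
    h_donors: int      # hydrogen-bond donors
    h_acceptors: int   # hydrogen-bond acceptors


def ro5_violations(d: Descriptors) -> int:
    """Count how many of Lipinski's four limits the compound exceeds."""
    return sum([
        d.mol_weight > 500,
        d.clogp > 5,
        d.h_donors > 5,
        d.h_acceptors > 10,
    ])


def passes_ro5(d: Descriptors, max_violations: int = 1) -> bool:
    """The rule of five tolerates a single violation by default."""
    return ro5_violations(d) <= max_violations


# Hypothetical, pre-computed descriptor values.
library = [
    Descriptors("cpd_1", 350.4, 2.1, 2, 5),
    Descriptors("cpd_2", 620.7, 6.3, 3, 9),
    Descriptors("cpd_3", 510.2, 4.2, 1, 7),
]
kept = [d.name for d in library if passes_ro5(d)]
print(kept)  # ['cpd_1', 'cpd_3']
```

Rules like this are coarse screens rather than predictions; many approved drugs fall outside them, which is one motivation for the learned models discussed here.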

AI-powered predictive models are used not only to forecast target binding affinities but also to anticipate how compounds will behave in more complex biological settings, including in vivo studies. For example, ML models trained on chemical structures and assay data can predict the metabolic stability of compounds, and models that also draw on omics data can help flag potential adverse drug reactions, guiding medicinal chemists in selecting and refining promising candidates. By combining features from molecular docking simulations, pharmacophores, and computed descriptors, AI models aim to bring a compound’s predicted performance closer to its real-world behavior.
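One of the simplest ML approaches to this kind of property prediction is k-nearest-neighbor regression: the predicted value for a new compound is the similarity-weighted average of the measured values of its most similar training compounds. The sketch below assumes fingerprints stored as sets of on-bits and uses Tanimoto similarity; the training fingerprints and measured values are invented, and returning no prediction when nothing overlaps is a crude, assumed stand-in for a proper applicability-domain check.

```python
from typing import Optional


def tanimoto(fp_a: set[int], fp_b: set[int]) -> float:
    """Tanimoto similarity for fingerprints given as sets of on-bits."""
    union = len(fp_a | fp_b)
    return len(fp_a & fp_b) / union if union else 0.0


def knn_predict(query: set[int], training: list[tuple[set[int], float]], k: int = 3) -> Optional[float]:
    """Similarity-weighted mean of the measured values of the k most similar training compounds."""
    neighbors = sorted(
        ((tanimoto(query, fp), value) for fp, value in training),
        key=lambda pair: pair[0],
        reverse=True,
    )[:k]
    total_sim = sum(sim for sim, _ in neighbors)
    if total_sim == 0:
        return None  # no structural overlap: treat as outside the model's domain
    return sum(sim * value for sim, value in neighbors) / total_sim


# Hypothetical training set: (fingerprint on-bits, measured value such as a pIC50).
training = [
    ({1, 2, 3, 4}, 7.0),
    ({1, 2, 3, 5}, 6.5),
    ({1, 2, 6, 7}, 5.0),
    ({8, 9, 10}, 4.0),
]
print(round(knn_predict({1, 2, 3, 4, 5}, training), 2))  # 6.48
print(knn_predict({20, 21}, training))                   # None
```

Deep learning models replace the fixed similarity with learned representations, but the same idea applies: predictions are most trustworthy for compounds that resemble the training data.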

Impact and Effectiveness

The practical impact of AI in small molecule drug discovery is illustrated by a growing number of case studies and real-world applications. AI approaches are frequently compared with traditional screening methods, and in reported cases they have offered advantages in speed, cost-efficiency, and predictive precision.

Case Studies and Real-world Applications

Several high-profile case studies have demonstrated the effectiveness of AI in discovering small molecule drugs. For example, AI-driven projects have reportedly led to the rapid identification of novel inhibitors of key targets such as MEK in cancer and beta-secretase (BACE1) in Alzheimer’s disease. In one notable instance, a deep learning algorithm trained on large datasets of known compounds led to the discovery of novel candidates that subsequently entered clinical trials. Such successes illustrate AI’s capacity to turn large amounts of experimental and clinical data into actionable insights and, in some reported cases, to shorten early discovery timelines from years to months.

Companies such as Exscientia and Insilico Medicine have also shown how AI-driven platforms can identify lead compounds faster than conventional timelines, easing the transition from bench to clinic. AI also facilitates drug repurposing, in which existing drugs are re-evaluated for new therapeutic indications by predicting their interactions with targets other than those they were originally developed for, opening new clinical applications without starting from scratch. These examples, together with partnerships between AI startups and major pharmaceutical firms, underscore the growing impact of AI on small molecule drug discovery.

Comparison with Traditional Methods

Traditional drug discovery relies largely on empirical high-throughput screening and serendipitous discovery. Although these methods have been foundational, they are typically associated with high costs, long timelines, and high attrition rates during clinical trials. AI systems, in contrast, use fast predictive models to narrow down large chemical spaces and prioritize candidates that are predicted to be more likely to succeed in clinical trials.

In addition, traditional methods often require extensive manual optimization, whereas AI-driven workflows can generate virtual compound libraries, predict binding properties, and refine structures iteratively using predictive models. This can substantially reduce the number of compounds that must be synthesized and tested, and with it the overall cost and time of development. When AI-based predictions are used to guide and complement experimental work, the combination can improve both the speed and the success rate of drug discovery efforts.

Challenges and Considerations

Despite the transformative potential and successful applications of AI in small molecule drug discovery, several challenges and considerations must be addressed to fully harness its capabilities. These challenges span technical, scientific, ethical, and regulatory domains.

Technical and Scientific Challenges

One major technical challenge is the quality and heterogeneity of data. AI models require large, high-quality datasets to be trained effectively, and discrepancies in data sources—such as those arising from inconsistent experimental protocols or varying assay conditions—can limit the performance of predictive models. Additionally, the creation of robust computational models demands that the input data be accurately converted into meaningful molecular representations. Although techniques such as molecular fingerprints, graph-based representations, and sequence-based descriptors have advanced considerably, they still sometimes fail to capture subtle but critical chemical interactions.
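One routine step in coping with heterogeneous assay data is to put potency measurements on a common logarithmic scale and flag replicates that disagree before training. The sketch below converts IC50 values reported in nanomolar to pIC50 (the negative base-10 logarithm of the molar IC50), takes the median per compound, and marks compounds whose replicates span more than one log unit; that threshold and the records are illustrative assumptions, not a standard.

```python
import math
from collections import defaultdict
from statistics import median


def pic50_from_nm(ic50_nm: float) -> float:
    """pIC50 = -log10(IC50 in mol/L); 1 nM = 1e-9 M, so pIC50 = 9 - log10(IC50 in nM)."""
    if ic50_nm <= 0:
        raise ValueError("IC50 must be positive")
    return 9.0 - math.log10(ic50_nm)


def aggregate(records: list[tuple[str, float]], max_spread: float = 1.0) -> dict[str, dict]:
    """Median pIC50 per compound, flagged when replicates differ by more than `max_spread` log units."""
    by_compound: dict[str, list[float]] = defaultdict(list)
    for compound_id, ic50_nm in records:
        by_compound[compound_id].append(pic50_from_nm(ic50_nm))
    summary = {}
    for compound_id, values in by_compound.items():
        summary[compound_id] = {
            "pIC50": median(values),
            "n": len(values),
            "discordant": max(values) - min(values) > max_spread,
        }
    return summary


# Hypothetical replicate measurements (compound ID, IC50 in nM) from different assays.
records = [("cpd_1", 100.0), ("cpd_1", 80.0), ("cpd_2", 10.0), ("cpd_2", 5000.0)]
for compound_id, row in aggregate(records).items():
    print(compound_id, round(row["pIC50"], 2), row["n"], row["discordant"])
```

Flagged compounds would typically be reviewed rather than silently averaged, since a discrepancy of several hundredfold may point to different assay conditions.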

Another challenge is the interpretability of complex AI models. Deep neural networks and other non-linear models often act as “black boxes,” making it difficult for researchers to understand how a given prediction was derived. This lack of transparency can undermine trust, especially when the models inform high-stakes decisions in drug optimization and clinical development. Moreover, AI models must cope with new and unforeseen molecular structures, which adds another layer of complexity. Although one-shot learning and transfer learning have been introduced for low-data scenarios, ensuring that models generalize to entirely novel chemical spaces remains an open research problem.

From a scientific perspective, integrating different kinds of data, such as omics data, imaging, and clinical records, into a single cohesive model is a formidable computational and statistical challenge. Combining these modalities while avoiding overfitting and ensuring robustness requires carefully designed architectures and rigorous validation protocols. Furthermore, even when AI models predict drug–target interactions accurately on benchmark data, they remain limited by incomplete knowledge of biological pathways and by the dynamic nature of biological systems, which static models do not easily capture.
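One widely used validation safeguard against over-optimistic results is to split data by chemical series rather than at random, so that close analogs of test compounds do not leak into training. The sketch below assumes each compound already carries a scaffold label (in practice often a Bemis–Murcko scaffold computed with a cheminformatics toolkit, which is not called here) and assigns whole scaffold groups to either the training or the test set; the IDs and labels are hypothetical.

```python
from collections import defaultdict


def scaffold_split(
    records: list[tuple[str, str]], train_fraction: float = 0.8
) -> tuple[list[str], list[str]]:
    """Split (compound_id, scaffold) pairs so that no scaffold appears in both train and test."""
    groups: dict[str, list[str]] = defaultdict(list)
    for compound_id, scaffold in records:
        groups[scaffold].append(compound_id)
    # Largest scaffold groups first; ties broken by first compound ID for a deterministic order.
    ordered = sorted(groups.values(), key=lambda g: (-len(g), g[0]))
    train_cutoff = train_fraction * len(records)
    train: list[str] = []
    test: list[str] = []
    for group in ordered:
        # A whole group goes to train only if it fits under the quota; otherwise it goes to test.
        if len(train) + len(group) <= train_cutoff:
            train.extend(group)
        else:
            test.extend(group)
    return train, test


# Hypothetical compounds labeled with hypothetical scaffold identifiers.
records = [
    ("c01", "A"), ("c02", "A"), ("c03", "A"), ("c04", "A"),
    ("c05", "B"), ("c06", "B"), ("c07", "B"),
    ("c08", "C"), ("c09", "D"), ("c10", "E"),
]
train, test = scaffold_split(records)
print(train)
print(test)
```

Because the largest series land in training, the test set leans toward rarer chemotypes; this is deliberate, as it approximates how the model will be used on unfamiliar chemistry.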

Ethical and Regulatory Issues

Ethical and regulatory considerations play a critical role in the deployment of AI in drug discovery. As AI models become more integrated into drug development pipelines, ensuring data privacy and securing intellectual property rights are paramount. The proprietary nature of many datasets used in predictive modeling creates challenges related to data sharing, standardization, and bias, which may lead to inequitable research outcomes or misuse of data.

Moreover, the use of AI in clinical trials and drug development must adhere to evolving regulatory frameworks established by agencies such as the FDA and EMA. As these agencies continue to develop comprehensive guidance on AI-based methodologies, companies must adapt their research practices to align with regulatory standards. Failure to do so could result in delays or even rejections during the approval process. Additionally, the interpretability issues of “black box” AI models raise ethical questions regarding accountability for adverse outcomes, especially when predictions significantly influence patient safety and treatment efficacy.

There is also the potential for ethical dilemmas stemming from biased data. If training datasets predominantly represent certain populations, the resulting AI systems may inadvertently favor drugs that are more effective in those groups while neglecting others. This can widen disparities in healthcare, underscoring the need for diverse and representative data in AI-driven drug discovery. Upholding ethical standards and regulatory compliance is necessary not only for public trust but also for the long-term success and sustainability of AI in drug discovery.

Future Directions

Looking ahead, AI is poised to have an even greater impact on the field of small molecule drug discovery. As computational methods become more sophisticated and datasets grow larger and more diverse, new trends and opportunities for innovation are emerging, promising a future where drug discovery is faster, more efficient, and more personalized.

Emerging Trends

One significant emerging trend is the use of generative AI models to create entirely novel chemical structures that do not exist in current chemical libraries. These models, including GANs and VAEs, can be trained on vast datasets of known molecules, enabling them to extrapolate and propose innovative candidates with desired properties that traditional methods might overlook. Such generative approaches not only expedite the identification of viable leads but also push the boundaries of chemical space exploration, ultimately increasing the likelihood of finding breakthrough therapeutics.

Another trend is the increasing integration of multi-omics data and real-world evidence with AI predictive models. By incorporating genomic, proteomic, metabolomic, and clinical data, AI systems can achieve a more comprehensive understanding of disease mechanisms and individual patient variability. This allows for the design of drugs that are tailored to specific patient subpopulations, promoting the development of personalized medicine. Advances in sensor technology and digital health platforms further facilitate the collection of such high-dimensional data, leading to more robust and context-specific AI models.

Alongside data integration, another emerging trend is the use of explainable AI (XAI) techniques. These approaches aim to demystify the “black box” nature of many AI models, thereby enhancing transparency and trust among researchers and regulatory bodies. Explainable AI can provide insights into how particular molecular features contribute to predicted bioactivity or toxicity, aiding in both drug optimization and regulatory evaluation. Additionally, interactive AI platforms that combine human expertise with machine intelligence are becoming more common, enabling iterative feedback that continually refines predictive accuracy and optimizes lead candidates.
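For the simplest model class, explanations are exact: in a linear model over binary structural features, each feature that is present contributes exactly its weight to the prediction. The sketch below shows that decomposition; the feature names, weights, and bias are hypothetical, and more complex models such as deep networks need approximate attribution methods (for example, Shapley-value or gradient-based approaches), which are not shown.

```python
def explain_linear(
    weights: dict[str, float], bias: float, present: list[str], top: int = 3
) -> tuple[float, list[tuple[str, float]]]:
    """Prediction of y = bias + sum(w_i * x_i) for binary features, plus the largest contributions.

    Each present feature contributes exactly its weight (features without a weight contribute 0),
    so the bias plus the contributions add up to the prediction.
    """
    contributions = {name: weights.get(name, 0.0) for name in present}
    prediction = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return prediction, ranked[:top]


# Hypothetical activity model over named substructure features.
weights = {"hydroxyl": 0.9, "nitro": -1.2, "pyridine": 0.4, "methyl": 0.05}
present = ["hydroxyl", "nitro", "pyridine", "sulfonamide"]

prediction, drivers = explain_linear(weights, 5.0, present)
print(round(prediction, 2))  # 5.1
for name, contribution in drivers:
    print(f"{name}: {contribution:+.2f}")
```

Listing the largest positive and negative contributions in this way is the kind of per-feature insight that explainable AI methods try to recover for models where it is not available in closed form.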

Potential for Innovation

The future of small molecule drug discovery powered by AI holds extraordinary potential for innovation across multiple facets of the pharmaceutical value chain. One key area of innovation is in bridging the gap between in silico predictions and in vitro/in vivo validations. As AI models improve in accuracy and interpretability, they will increasingly be used to design integrated workflows that seamlessly transition from virtual screening to experimental testing, thereby reducing the cycle time of drug development.

Furthermore, advances in computational hardware, such as cloud-based supercomputing and, further in the future, quantum computing, may accelerate AI model training and simulation. Such developments would allow larger datasets and more complex molecular simulations to be handled, enhancing the predictive power of AI systems. Closer integration between pharmaceutical companies and AI startups is also anticipated, driven by collaborative initiatives that combine expertise in medicine, computational science, and data analytics.

Another promising avenue is active learning and iterative optimization, in which the most informative compounds are prioritized for synthesis and testing. This can reduce the number of iterations required for lead discovery and optimization and make more efficient use of resources throughout the R&D process. Integrating AI-assisted predictive models with robotic laboratory automation could ultimately yield largely automated discovery pipelines that require minimal human intervention, greatly increasing the speed and scale at which novel therapeutic candidates are generated.
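Uncertainty sampling is one common active-learning strategy: train an ensemble of models, then send the compounds on which the ensemble disagrees most to the lab, because their measurements are expected to be the most informative. The sketch below assumes the ensemble predictions have already been computed; the compound IDs and values are hypothetical.

```python
from statistics import pstdev


def select_batch(ensemble_predictions: dict[str, list[float]], batch_size: int) -> list[str]:
    """Return the `batch_size` compounds with the largest spread (population std. dev.) across ensemble members."""
    spread = {cid: pstdev(preds) for cid, preds in ensemble_predictions.items()}
    # Largest disagreement first; ties broken by compound ID for reproducibility.
    ranked = sorted(spread, key=lambda cid: (-spread[cid], cid))
    return ranked[:batch_size]


# Hypothetical predictions from three ensemble members for four untested compounds.
predictions = {
    "cmpd_A": [6.0, 6.1, 5.9],
    "cmpd_B": [4.0, 7.0, 5.5],
    "cmpd_C": [5.0, 5.0, 5.0],
    "cmpd_D": [6.5, 5.0, 7.5],
}
print(select_batch(predictions, 2))  # ['cmpd_B', 'cmpd_D']
```

Pure uncertainty sampling only explores; practical campaigns often blend it with the predicted value itself so that promising compounds are also tested, and the balance between the two is a design choice.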

Finally, there is a clear potential for AI to contribute to regulatory sciences. As AI models continue to mature and demonstrate their reliability, they may be incorporated into the decision-making processes of regulatory agencies, thereby streamlining the approval process. Researchers are already exploring ways to use AI to predict adverse drug reactions, optimize dosing regimens, and assess drug safety profiles, which could lead to earlier identification of potential issues during clinical trials and reduce the high attrition rates historically associated with drug development.

Conclusion

In summary, AI assists in the discovery of small molecule drugs by fundamentally transforming each stage of the drug development process—from target identification and lead optimization to predictive modeling and clinical translation. Initially, AI technologies such as SVMs, DNNs, GANs, and VAEs enable the conversion of vast amounts of heterogeneous biomedical data into meaningful representations, thereby uncovering previously hidden relationships between biological targets and chemical structures. AI-driven target identification leverages integration of omics data, network analysis, and literature mining to detect promising therapeutic targets with enhanced specificity and confidence.

Subsequently, in lead compound optimization, AI models predict how subtle chemical modifications affect a drug’s potency, selectivity, and ADME properties, which can significantly reduce the number of synthesis and testing cycles required. Deep learning-based predictive modeling then estimates the bioactivity and safety profiles of compounds in silico before expensive laboratory experiments are committed, improving both the speed and the success rate of drug discovery. These advances are illustrated by case studies in which AI has shortened the path from lead discovery to clinical trials and identified therapeutic candidates that would have been difficult to find using traditional methods alone.

Despite these significant achievements, there remain technical, scientific, ethical, and regulatory challenges that must be addressed. Data quality, interpretability of complex AI architectures, integration of multi-modal information, and potential biases are ongoing technical hurdles. At the same time, ethical and regulatory considerations relating to patient safety, data privacy, and transparency must be rigorously managed to ensure the safe and equitable implementation of AI methodologies. Addressing these challenges is crucial for the sustained integration of AI in clinical practices and to avoid pitfalls associated with “black box” systems.

Looking towards the future, emerging trends such as generative AI for novel chemical design, multi-omics data integration for precision medicine, and explainable AI techniques are set to further revolutionize small molecule drug discovery. Advancements in computational infrastructure and more robust collaborative frameworks between industry and academia are poised to further reduce the drug discovery cycle time and improve the overall efficiency of the development pipeline. Active learning, iterative optimization, and the integration of robotic automation in laboratory processes are among the key innovations promising to drive the next wave of breakthroughs in medicinal chemistry.

In conclusion, AI’s contribution to small molecule drug discovery is multifaceted and profound. It accelerates target identification, optimizes compound design, and enhances predictive accuracy, thus providing a more efficient, cost-effective, and innovative approach than traditional methods. While challenges remain, ongoing research, collaboration among stakeholders, and emerging technological advancements promise an exciting future where AI will not only supplement but also potentially redefine the entire landscape of drug discovery. The continued evolution of AI technologies, coupled with rigorous ethical and regulatory oversight, will be essential in translating computational predictions into impactful, safe, and effective therapies for patients worldwide.

Artificial intelligence (AI) has emerged as a transformative force in drug discovery by leveraging machine learning (ML), deep learning (DL), and other computational techniques that integrate large volumes of data from genomic, chemical, and clinical sources. These AI technologies have revolutionized the traditional drug discovery paradigm—a notoriously time‐consuming and expensive process—and have accelerated the identification, design, and optimization of small molecule drugs. By combining robust data resources with sophisticated algorithms, AI systems can extract patterns, predict outcomes, and provide insights that were previously unattainable with conventional methods.

Overview of AI Technologies

AI technologies in drug discovery generally encompass a variety of methods, from supervised learning algorithms such as support vector machines (SVM) and random forests (RF) to unsupervised learning methods and neural network architectures including deep neural networks (DNNs), generative adversarial networks (GANs), variational autoencoders (VAEs), and one-shot learning techniques. These methods are adept at handling diverse types of input data such as molecular descriptors, protein sequences, or even three-dimensional structures for molecular docking. Advanced computational techniques now enable the transformation of raw experimental and clinical data into machine-readable formats—using molecular fingerprints, graph-based methods, and sequence-based representations—allowing for the prediction of bioactivity, toxicity, and pharmacokinetic properties.

Furthermore, AI in drug discovery leverages virtual screening, which utilizes docking simulations and energy-based models to rapidly scan huge chemical libraries to identify promising candidate molecules. In addition to virtual screening, AI models like quantitative structure–activity relationship (QSAR) methods have been enhanced by deep learning, which extracts high-level features directly from raw chemical data without manual feature engineering. Overall, the integration of these cutting-edge techniques has created a highly interdisciplinary framework that not only facilitates the identification of promising drug candidates but also continuously optimizes the drug design process.

Historical Context of AI in Drug Discovery

Historically, drug discovery was heavily reliant on serendipitous findings and laborious experimental screening that spanned decades and required substantial financial investments. Early approaches depended principally on high-throughput screening (HTS) of chemical libraries with limited predictive capability. As computational power and data availability increased, researchers started to adopt classical machine learning techniques to model structure–activity relationships; however, these initial methods were often constrained by the size and quality of available data.

Recent years have witnessed a paradigm shift in drug discovery, where AI has evolved from a theoretical promise into a practical tool actively used by pharmaceutical companies. Collaborations between large pharmaceutical firms and tech companies have accelerated the adoption of AI in drug research and development, with several case studies now demonstrating significant improvements in the prediction accuracy of target binding and compound optimization. This historical evolution—from traditional experimental methods to AI-enhanced discovery—has laid the groundwork for the modern, digitally driven approach that now defines small molecule drug discovery.

Role of AI in Small Molecule Drug Discovery

AI plays a multifaceted role in small molecule drug discovery by significantly reducing the time and costs involved while increasing predictive accuracy and enabling the design of novel molecules with optimal properties. Its role can be broken down into three main pillars: target identification, lead compound optimization, and predictive modeling.

Target Identification

One of the primary applications of AI in drug discovery is the identification of novel therapeutic targets. Target identification involves sifting through vast omics datasets—including genomic, proteomic, and metabolomic data—to pinpoint proteins or genes that play a crucial role in disease pathogenesis. AI algorithms capitalise on network analysis methods and feature engineering techniques to discover target-disease associations that might be missed by conventional experiments.

For example, various machine learning techniques can be used to integrate heterogeneous data sources like clinical records, literature databases, and biological interaction networks to generate knowledge graphs that identify novel protein targets or validate existing ones. By applying SVMs, random forests, or neural networks to such integrated data, researchers are able to generate hypotheses about the involvement of specific proteins or pathways in disease and predict potential druggable targets with enhanced specificity. AI-driven target identification is not only more cost-effective but often yields results with higher confidence, laying a robust foundation for subsequent stages of drug development.

Lead Compound Optimization

After target identification comes the stage of lead discovery and subsequent optimization. In this phase, AI assists chemists in refining chemical structures to enhance both efficacy and safety profiles. Traditional lead optimization depends heavily on iterative cycles of chemical synthesis and experimental testing, which are resource-intensive. AI techniques facilitate a more focused approach by leveraging predictive models to simulate how modifications to a molecule’s scaffold will affect its activity, ADME (absorption, distribution, metabolism, and excretion) profile, and toxicity.

For instance, deep learning models can analyze structural information and predict binding affinities to target proteins by simulating molecular docking processes. Generative models such as VAEs and GANs are being employed to design novel chemical entities that meet specific criteria, such as high potency and desirable pharmacokinetics. Moreover, methods like one-shot learning have been introduced to fine-tune drug design processes, particularly in scenarios with limited experimental data. This AI-driven optimization reduces the number of synthesis iterations and experimental validations required, thereby accelerating the discovery process and significantly lowering costs.

Predictive Modeling

Predictive modeling constitutes another cornerstone of AI in small molecule drug discovery. It involves using AI algorithms to predict various properties of candidate molecules even before they are synthesized in the laboratory. QSAR models enhanced by deep learning predict chemical and biological properties such as toxicity, bioavailability, and drug-target interactions with high accuracy. These predictions allow researchers to eliminate compounds with unfavorable profiles early in the drug discovery pipeline.

AI-powered predictive models are not only effective in forecasting target binding affinities but also in anticipating the outcomes of complex biological interactions during in vivo studies. For example, ML algorithms trained on multi-omics datasets can predict the metabolic stability of compounds and forecast adverse drug reactions, thereby guiding medicinal chemists in selecting and refining promising candidates. By incorporating features from molecular docking simulations, pharmacophores, and computed descriptors, AI models offer an integrated approach that ensures a compound’s predicted performance aligns closely with its real-world behavior.

Impact and Effectiveness

The practical impact of AI in small molecule drug discovery is evident through numerous case studies and successful real-world applications. Its capabilities are often directly compared with, and tend to surpass, traditional screening methods in terms of speed, cost-efficiency, and predictive precision.

Case Studies and Real-world Applications

Several high-profile case studies have demonstrated the effectiveness of AI in discovering small molecule drugs. For example, projects employing AI have led to the rapid identification of novel inhibitors for key targets such as MEK in cancer treatment and beta-secretase (BACE1) in Alzheimer’s disease. In one notable instance, a deep learning algorithm was trained on large datasets of known compounds, leading to the discovery of novel candidates with high therapeutic potential that subsequently entered clinical trials. Such successes illustrate AI’s capacity to integrate vast amounts of experimental and clinical data into actionable insights, significantly reducing the traditional timelines of drug discovery from years to mere months.

Moreover, companies like Exscientia and Insilico Medicine have showcased how AI-driven platforms can pinpoint lead compounds in record time, thereby enabling a more efficient transition from the bench to the clinic. AI also facilitates drug repurposing, where existing drugs are re-evaluated for new therapeutic indications by predicting their interactions with altered biological targets, thus opening new avenues for expanding clinical applications without starting from scratch. These examples, supported by successful partnerships between AI startups and major pharmaceutical firms, underscore the transformative impact of AI on the small molecule drug discovery process.

Comparison with Traditional Methods

Traditional drug discovery relies largely on empirical high-throughput screening and serendipitous discoveries. Although these methods have been foundational, they are typically associated with high costs, long timelines, and high attrition rates in clinical trials. In contrast, AI systems use computational screening and predictive models to narrow vast chemical spaces and prioritize the candidates predicted to be more likely to succeed in clinical trials.

In addition, traditional methods often require extensive manual optimization, whereas AI-driven workflows can automatically generate virtual compound libraries, simulate binding kinetics, and iteratively refine structures using predictive models. This can substantially reduce the number of compounds that must be synthesized and tested, lowering the overall cost and time of development. When AI-based predictions are validated against, and complemented by, experimental findings, the combination can improve both the speed and the success rate of drug discovery efforts.
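The filter-and-rank step that shrinks a virtual library to a short synthesis list can be sketched as follows. This is an illustration under stated assumptions: Lipinski's rule of five is used as the drug-likeness filter (one common choice, not the only one), `predicted_score` is assumed to come from some upstream activity model, and the compounds and values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str               # hypothetical compound identifier
    mol_weight: float       # Da
    clogp: float
    h_donors: int
    h_acceptors: int
    predicted_score: float  # assumed output of an upstream model; higher is better


def passes_rule_of_five(c: Candidate) -> bool:
    """Lipinski's rule of five, allowing at most one violation."""
    violations = sum([
        c.mol_weight > 500,
        c.clogp > 5,
        c.h_donors > 5,
        c.h_acceptors > 10,
    ])
    return violations <= 1


def prioritize(library, k):
    """Filter a virtual library for drug-likeness, then keep the top-k by score."""
    drug_like = [c for c in library if passes_rule_of_five(c)]
    return sorted(drug_like, key=lambda c: c.predicted_score, reverse=True)[:k]


library = [
    Candidate("VL-001", 342.4, 2.1, 2, 5, 0.81),
    Candidate("VL-002", 612.7, 6.3, 4, 9, 0.95),  # two violations: filtered out
    Candidate("VL-003", 298.3, 1.4, 1, 4, 0.67),
    Candidate("VL-004", 455.5, 3.8, 3, 7, 0.88),
    Candidate("VL-005", 510.2, 4.2, 2, 8, 0.74),  # one violation: kept
]

shortlist = prioritize(library, k=2)
print([c.name for c in shortlist])  # ['VL-004', 'VL-001']
```

Note that the highest-scoring compound (VL-002) never reaches the queue because it fails the filter; at library scale, this kind of funnel is what cuts the number of molecules that must be made and tested.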

Challenges and Considerations

Despite the transformative potential and successful applications of AI in small molecule drug discovery, several challenges and considerations must be addressed to fully harness its capabilities. These challenges span technical, scientific, ethical, and regulatory domains.

Technical and Scientific Challenges

One major technical challenge is the quality and heterogeneity of data. AI models require large, high-quality datasets to be trained effectively, and discrepancies in data sources—such as those arising from inconsistent experimental protocols or varying assay conditions—can limit the performance of predictive models. Additionally, the creation of robust computational models demands that the input data be accurately converted into meaningful molecular representations. Although techniques such as molecular fingerprints, graph-based representations, and sequence-based descriptors have advanced considerably, they still sometimes fail to capture subtle but critical chemical interactions.
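Fingerprint representations are commonly compared with the Tanimoto coefficient: the number of shared "on" bits divided by the number of bits that are on in either fingerprint. A minimal sketch, assuming each fingerprint is stored as a set of on-bit indices (the indices below are made up for illustration):

```python
def tanimoto(fp_a: set, fp_b: set) -> float:
    """Tanimoto (Jaccard) similarity between two binary fingerprints,
    each given as the set of indices of its 'on' bits."""
    if not fp_a and not fp_b:
        return 1.0  # treat two empty fingerprints as identical
    shared = len(fp_a & fp_b)
    return shared / (len(fp_a) + len(fp_b) - shared)


# Hypothetical on-bit indices, e.g. from a hashed substructure fingerprint.
fp_query = {3, 17, 42, 101, 256, 511}
fp_analog = {3, 17, 42, 101, 300, 511}
fp_unrelated = {5, 88, 190, 402}

print(round(tanimoto(fp_query, fp_analog), 3))  # 0.714
print(tanimoto(fp_query, fp_unrelated))         # 0.0
```

Because the score only counts which bits are set, two molecules can look highly similar while differing in properties a 2D fingerprint does not encode, such as 3D binding geometry, which is one way a representation can miss critical interactions.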

Another challenge is the interpretability of complex AI models. Deep neural networks and other non-linear models often act as “black boxes,” making it difficult for researchers to understand how a given prediction was derived. This lack of transparency can undermine trust, especially when the models inform high-stakes decisions in drug optimization and clinical development. Adapting AI algorithms to new and unforeseen molecular structures adds a further layer of complexity. Although methods such as one-shot learning and transfer learning have been introduced to cope with low-data scenarios, ensuring that models generalize to entirely novel chemical spaces remains an open research problem.
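One practical way to tell when a model is being asked to generalize beyond its training data is an applicability-domain check. The sketch below, a simple nearest-neighbor variant, flags a query compound as out-of-domain when its highest Tanimoto similarity to any training compound falls below a threshold; the 0.4 cutoff and the fingerprints (sets of on-bit indices) are illustrative assumptions, not recommended values.

```python
def tanimoto(a, b):
    """Tanimoto similarity between two sets of on-bit indices."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0


def in_domain(query, training_fps, threshold=0.4):
    """Return (is_in_domain, max_similarity) for a query fingerprint.

    The query counts as out-of-domain when its nearest training neighbor
    is less similar than `threshold` (an assumed, illustrative cutoff).
    """
    best = max(tanimoto(query, fp) for fp in training_fps)
    return best >= threshold, best


# Hypothetical fingerprints for a tiny training set and two query compounds.
training_fps = [{1, 4, 9, 16, 25}, {1, 4, 9, 36}, {2, 3, 5, 7, 11}]
close_query = {1, 4, 9, 16, 30}
novel_query = {40, 41, 42, 43}

print(in_domain(close_query, training_fps))
print(in_domain(novel_query, training_fps))
```

Predictions for out-of-domain compounds need not be discarded, but they warrant lower confidence or experimental confirmation before they drive decisions.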

From a scientific perspective, integrating different kinds of data—such as omics data, imaging, and clinical records—into a single cohesive model represents a formidable computational and statistical challenge. Balancing this multi-modal data while avoiding overfitting and ensuring model robustness requires sophisticated architectures and rigorous validation protocols. Furthermore, while AI models can predict drug-target interactions with high accuracy, they are sometimes limited by incomplete knowledge of biological pathways and the dynamic nature of biological systems, which are not easily captured by static models.
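A common validation protocol for checking whether a model generalizes to new chemistry, rather than memorizing close analogs, is a scaffold split: compounds sharing a core scaffold are kept together in either the training set or the test set. A minimal sketch, assuming scaffold keys (for example Bemis–Murcko scaffolds) have already been computed elsewhere; the compound IDs and keys are hypothetical.

```python
from collections import defaultdict


def scaffold_split(records, test_fraction=0.2):
    """Split (compound_id, scaffold_key) pairs so that no scaffold
    appears in both the training and the test set.

    Larger scaffold groups are assigned to training first; groups that
    would overflow the training quota go to the test set, so the test
    set probes less common chemotypes.
    """
    groups = defaultdict(list)
    for compound_id, scaffold in records:
        groups[scaffold].append(compound_id)

    n_train_target = len(records) - round(len(records) * test_fraction)
    train, test = [], []
    for scaffold in sorted(groups, key=lambda s: len(groups[s]), reverse=True):
        fits = len(train) + len(groups[scaffold]) <= n_train_target
        (train if fits else test).extend(groups[scaffold])
    return train, test


# Hypothetical (compound, scaffold) pairs.
records = [
    ("C1", "scaf_A"), ("C2", "scaf_A"), ("C3", "scaf_A"),
    ("C4", "scaf_B"), ("C5", "scaf_B"),
    ("C6", "scaf_C"), ("C7", "scaf_C"),
    ("C8", "scaf_D"),
    ("C9", "scaf_E"), ("C10", "scaf_E"),
]

train_ids, test_ids = scaffold_split(records, test_fraction=0.2)
print(train_ids, test_ids)
```

Scores on a split like this are usually lower than on a random split, which is the intent: they give a more honest estimate of how the model will perform on unfamiliar chemotypes.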

Ethical and Regulatory Issues

Ethical and regulatory considerations play a critical role in the deployment of AI in drug discovery. As AI models become more integrated into drug development pipelines, ensuring data privacy and securing intellectual property rights are paramount. The proprietary nature of many datasets used in predictive modeling creates challenges related to data sharing, standardization, and bias, which may lead to inequitable research outcomes or misuse of data.

Moreover, the use of AI in clinical trials and drug development must adhere to evolving regulatory frameworks established by agencies such as the FDA and EMA. As these agencies continue to develop comprehensive guidance on AI-based methodologies, companies must adapt their research practices to align with regulatory standards. Failure to do so could result in delays or even rejections during the approval process. Additionally, the interpretability issues of “black box” AI models raise ethical questions regarding accountability for adverse outcomes, especially when predictions significantly influence patient safety and treatment efficacy.

Biased data can also create ethical dilemmas. If training datasets predominantly represent certain populations, the resulting AI systems may inadvertently favor drugs that are more effective in those groups while neglecting others. This can lead to disparities in healthcare provision, which underscores the need for diverse and representative data in AI-driven drug discovery. Upholding ethical standards and regulatory compliance is necessary not only for public trust but also for the long-term success and sustainability of AI in drug discovery.

Future Directions

Looking ahead, AI is poised to have an even greater impact on the field of small molecule drug discovery. As computational methods become more sophisticated and datasets grow larger and more diverse, new trends and opportunities for innovation are emerging, promising a future where drug discovery is faster, more efficient, and more personalized.

Emerging Trends

One significant emerging trend is the use of generative AI models to create entirely novel chemical structures that do not exist in current chemical libraries. These models, including GANs and VAEs, can be trained on vast datasets of known molecules, enabling them to extrapolate and propose innovative candidates with desired properties that traditional methods might overlook. Such generative approaches not only expedite the identification of viable leads but also push the boundaries of chemical space exploration, ultimately increasing the likelihood of finding breakthrough therapeutics.
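For the VAE case, one standard formulation trains an encoder $q_\phi(z \mid x)$ and a decoder $p_\theta(x \mid z)$ by maximizing the evidence lower bound (ELBO). Here $x$ is a molecule representation (for example a SMILES string or a molecular graph), $z$ is a $d$-dimensional latent vector with a standard normal prior, and the notation is introduced for illustration only; GANs use a different, adversarial objective that is not shown.

```latex
\mathcal{L}(\theta,\phi;x) =
  \mathbb{E}_{q_\phi(z \mid x)}\left[\log p_\theta(x \mid z)\right]
  - D_{\mathrm{KL}}\left(q_\phi(z \mid x) \,\|\, p(z)\right),
\qquad p(z) = \mathcal{N}(0, I)

q_\phi(z \mid x) = \mathcal{N}\left(\mu_\phi(x), \operatorname{diag}\sigma_\phi^2(x)\right)
\;\Rightarrow\;
D_{\mathrm{KL}} = -\frac{1}{2}\sum_{j=1}^{d}\left(1 + \log\sigma_j^2 - \mu_j^2 - \sigma_j^2\right)

z = \mu_\phi(x) + \sigma_\phi(x) \odot \epsilon,
\qquad \epsilon \sim \mathcal{N}(0, I)
```

The first term rewards accurate reconstruction of known molecules, and the KL term keeps the latent space close to the prior; the reparameterization in the last line makes the expectation differentiable during training. After training, new candidates can be proposed by sampling $z$ from the prior, or by searching latent space for regions with desired predicted properties, and decoding.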

Another trend is the increasing integration of multi-omics data and real-world evidence with AI predictive models. By incorporating genomic, proteomic, metabolomic, and clinical data, AI systems can achieve a more comprehensive understanding of disease mechanisms and individual patient variability. This allows for the design of drugs that are tailored to specific patient subpopulations, promoting the development of personalized medicine. Advances in sensor technology and digital health platforms further facilitate the collection of such high-dimensional data, leading to more robust and context-specific AI models.

Alongside data integration, another emerging trend is the use of explainable AI (XAI) techniques. These approaches aim to demystify the “black box” nature of many AI models, thereby enhancing transparency and trust among researchers and regulatory bodies. Explainable AI can provide insights into how particular molecular features contribute to predicted bioactivity or toxicity, aiding in both drug optimization and regulatory evaluation. Additionally, interactive AI platforms that combine human expertise with machine intelligence are becoming more common, enabling iterative feedback that continually refines predictive accuracy and optimizes lead candidates.
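Occlusion, a simple perturbation-based explanation method, illustrates how a prediction can be attributed to molecular features: switch each feature off, re-run the model, and record how much the prediction changes. The sketch assumes a hypothetical linear toxicity model over binary substructure flags with made-up weights; it is not a validated toxicity model.

```python
# Hypothetical toxicity model: weights for binary substructure flags (toy values).
WEIGHTS = {
    "nitro_group": 1.2,
    "aromatic_amine": 0.9,
    "carboxylic_acid": -0.4,
    "halogen": 0.3,
}
BIAS = -1.0


def predict(features):
    """Toy toxicity score: bias plus weighted sum of substructure flags."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in features.items())


def occlusion_attribution(features):
    """Attribute the prediction to each feature by setting it to 0
    and measuring how much the score drops."""
    baseline = predict(features)
    attributions = {}
    for name in features:
        occluded = dict(features, **{name: 0})
        attributions[name] = baseline - predict(occluded)
    return attributions


compound = {"nitro_group": 1, "aromatic_amine": 1, "carboxylic_acid": 1, "halogen": 0}
ranked = sorted(occlusion_attribution(compound).items(),
                key=lambda kv: abs(kv[1]), reverse=True)
for name, contrib in ranked:
    print(f"{name:16s} {contrib:+.2f}")
```

For a linear model the attribution simply equals weight times feature value. The appeal of the procedure is that it applies unchanged to nonlinear models such as deep networks, where it yields a local, approximate explanation of a single prediction.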

Potential for Innovation

The future of small molecule drug discovery powered by AI holds extraordinary potential for innovation across multiple facets of the pharmaceutical value chain. One key area of innovation is in bridging the gap between in silico predictions and in vitro/in vivo validations. As AI models improve in accuracy and interpretability, they will increasingly be used to design integrated workflows that seamlessly transition from virtual screening to experimental testing, thereby reducing the cycle time of drug development.

Furthermore, advances in computational hardware, such as cloud-based supercomputing and, in the longer term, quantum computing, are expected to accelerate AI model training and simulation. These developments would allow even larger datasets and more complex molecular simulations to be handled, enhancing the predictive power of AI systems. Closer collaboration between pharmaceutical companies and AI startups is also anticipated, driven by initiatives that combine complementary expertise in medicine, computational science, and data analytics.

Another promising avenue is the use of active learning and iterative optimization strategies that prioritize the most informative compounds for synthesis and testing. This reduces the number of iterations required for lead discovery and optimization and makes more efficient use of resources throughout the R&D process. Integrating AI-assisted predictive models with robotic laboratory automation holds the promise of a largely automated discovery pipeline requiring minimal human intervention, which could transform both the speed and scale at which novel therapeutic candidates are generated.
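Active learning can be sketched with uncertainty sampling: an ensemble of models scores every unsynthesized compound, and the compounds on which the models disagree most are sent for synthesis, since their measured results are expected to be the most informative. The compound IDs, the five-model ensemble, and the pIC50 values below are hypothetical.

```python
import statistics


def select_for_synthesis(ensemble_predictions, batch_size):
    """Uncertainty sampling: return the compounds on which an ensemble
    of models disagrees most (largest standard deviation of predictions).

    ensemble_predictions maps compound id -> list of predictions, one per model.
    """
    uncertainty = {
        cid: statistics.pstdev(preds)
        for cid, preds in ensemble_predictions.items()
    }
    ranked = sorted(uncertainty, key=uncertainty.get, reverse=True)
    return ranked[:batch_size]


# Hypothetical pIC50 predictions from five models for four unsynthesized compounds.
predictions = {
    "CPD-A": [7.1, 7.0, 7.2, 7.1, 7.0],
    "CPD-B": [5.2, 6.8, 4.9, 6.1, 7.3],
    "CPD-C": [6.0, 6.4, 5.7, 6.2, 5.9],
    "CPD-D": [8.0, 6.1, 7.4, 5.5, 6.9],
}

batch = select_for_synthesis(predictions, batch_size=2)
print(batch)  # ['CPD-B', 'CPD-D']
```

After the selected compounds are tested, their measurements are added to the training data, the ensemble is retrained, and the loop repeats. In practice, selection often balances uncertainty against predicted potency rather than relying on uncertainty alone.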

Finally, there is a clear potential for AI to contribute to regulatory sciences. As AI models continue to mature and demonstrate their reliability, they may be incorporated into the decision-making processes of regulatory agencies, thereby streamlining the approval process. Researchers are already exploring ways to use AI to predict adverse drug reactions, optimize dosing regimens, and assess drug safety profiles, which could lead to earlier identification of potential issues during clinical trials and reduce the high attrition rates historically associated with drug development.

Conclusion

In summary, AI assists in the discovery of small molecule drugs by fundamentally transforming each stage of the drug development process—from target identification and lead optimization to predictive modeling and clinical translation. Initially, AI technologies such as SVMs, DNNs, GANs, and VAEs enable the conversion of vast amounts of heterogeneous biomedical data into meaningful representations, thereby uncovering previously hidden relationships between biological targets and chemical structures. AI-driven target identification leverages integration of omics data, network analysis, and literature mining to detect promising therapeutic targets with enhanced specificity and confidence.

Subsequently, in lead compound optimization, AI models predict how subtle chemical modifications affect a drug’s potency, selectivity, and ADME properties, significantly reducing the number of synthesis-and-testing cycles required. Deep learning-based predictive models also assess the bioactivity and safety profiles of compounds in silico before resources are committed to expensive laboratory experiments, increasing both the speed and success rate of drug discovery. These advances are illustrated by numerous case studies in which AI has shortened the timeline from lead discovery to clinical trials and identified novel therapeutic candidates that would be challenging to detect with traditional methods alone.

Despite these significant achievements, there remain technical, scientific, ethical, and regulatory challenges that must be addressed. Data quality, interpretability of complex AI architectures, integration of multi-modal information, and potential biases are ongoing technical hurdles. At the same time, ethical and regulatory considerations relating to patient safety, data privacy, and transparency must be rigorously managed to ensure the safe and equitable implementation of AI methodologies. Addressing these challenges is crucial for the sustained integration of AI in clinical practices and to avoid pitfalls associated with “black box” systems.

Looking towards the future, emerging trends such as generative AI for novel chemical design, multi-omics data integration for precision medicine, and explainable AI techniques are set to further revolutionize small molecule drug discovery. Advancements in computational infrastructure and more robust collaborative frameworks between industry and academia are poised to further reduce the drug discovery cycle time and improve the overall efficiency of the development pipeline. Active learning, iterative optimization, and the integration of robotic automation in laboratory processes are among the key innovations promising to drive the next wave of breakthroughs in medicinal chemistry.

In conclusion, AI’s contribution to small molecule drug discovery is multifaceted and profound. It accelerates target identification, optimizes compound design, and enhances predictive accuracy, thus providing a more efficient, cost-effective, and innovative approach than traditional methods. While challenges remain, ongoing research, collaboration among stakeholders, and emerging technological advancements promise an exciting future where AI will not only supplement but also potentially redefine the entire landscape of drug discovery. The continued evolution of AI technologies, coupled with rigorous ethical and regulatory oversight, will be essential in translating computational predictions into impactful, safe, and effective therapies for patients worldwide.

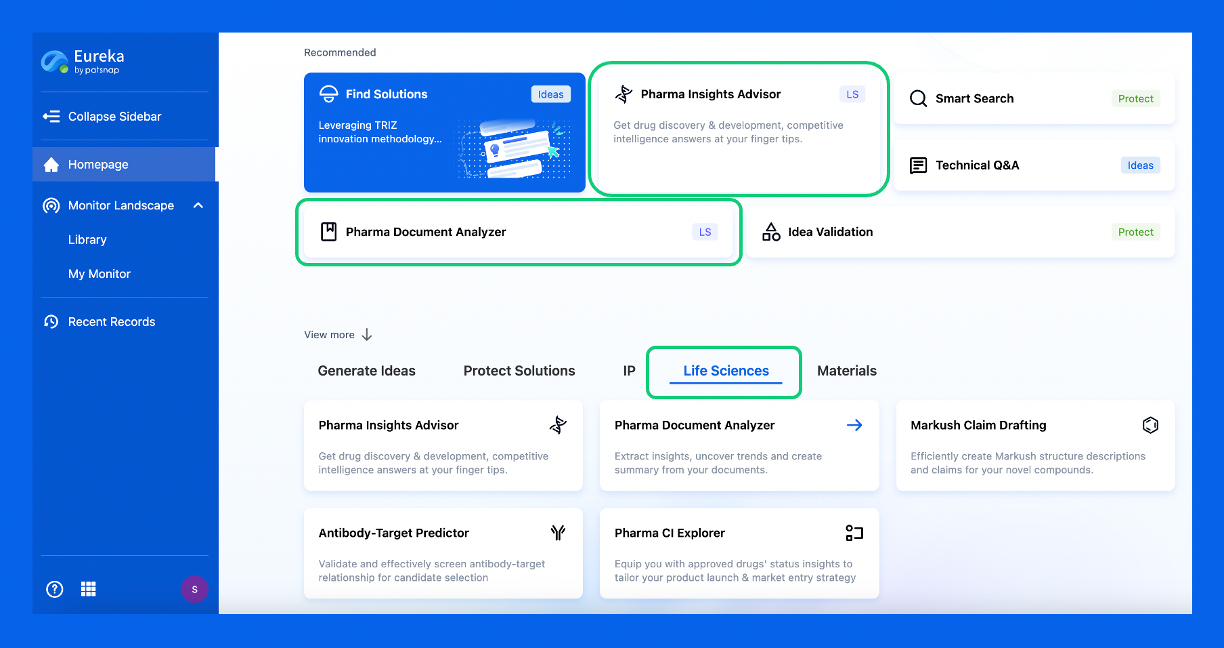

Discover Eureka LS: AI Agents Built for Biopharma Efficiency

Stop wasting time on biopharma busywork. Meet Eureka LS - your AI agent squad for drug discovery.

▶ See how 50+ research teams saved 300+ hours/month

From reducing screening time to simplifying Markush drafting, our AI Agents are ready to deliver immediate value. Explore Eureka LS today and unlock powerful capabilities that help you innovate with confidence.

AI Agents Built for Biopharma Breakthroughs

Accelerate discovery. Empower decisions. Transform outcomes.

Get started for free today!

Accelerate Strategic R&D decision making with Synapse, PatSnap’s AI-powered Connected Innovation Intelligence Platform Built for Life Sciences Professionals.

Start your data trial now!

Synapse data is also accessible to external entities via APIs or data packages. Empower better decisions with the latest in pharmaceutical intelligence.