
How does AI assist in the early detection of drug toxicity?

21 March 2025

Introduction to AI in Drug Safety

Definition and Basic Concepts of AI

Artificial intelligence (AI) refers to a collection of computational approaches that enable machines to perform tasks which would otherwise require human intelligence. These tasks include pattern recognition, classification, prediction, and decision‐making. In drug discovery and safety, AI harnesses techniques such as machine learning (ML), deep learning (DL), natural language processing (NLP), and generative models, among others, to analyze complex and heterogeneous data sets. These data sets span numerical data, images, gene expression profiles, and even unstructured text from scientific literature. The goal is to emulate and sometimes exceed human capability when it comes to analyzing patterns and extracting actionable insights in less time and at lower cost. AI-based technologies have evolved rapidly due to increased computing power, the availability of powerful algorithms, and the explosive growth in biomedical data accumulation. This convergence has led to AI being integrated throughout the drug development pipeline—from target identification to safety evaluations—transforming the traditional paradigms and accelerating decision‐making processes.

Overview of Drug Toxicity and Its Challenges

Drug toxicity refers to the harmful effects that drugs can have on the body, ranging from mild adverse reactions to severe life-threatening conditions. The early detection of toxicity is paramount because adverse effects are one of the primary reasons for drug candidate failures during preclinical and clinical testing phases. Traditional toxicity testing, such as in vitro cell assays and in vivo animal testing, can be laborious, time‑consuming, and expensive. Moreover, these methods sometimes have issues with reproducibility and may not reliably predict toxicity in humans.

A major challenge is that toxicity is a multifaceted phenomenon; a compound might be non‑toxic in one assay but elicit adverse responses in another system or organ. Drug-induced liver injury (DILI), for instance, is notoriously difficult to detect early because its manifestations may only become apparent after accumulation of toxic metabolites or extended exposure. Furthermore, the biological mechanisms underlying toxicity are complex and involve interactions at multiple biological levels, from molecular interactions to systemic physiological responses. Thus, an integrated approach that combines experimental data, omics data, and computational predictions is essential for accurately forecasting toxicity profiles across different platforms. AI addresses these challenges by mining large datasets, integrating heterogeneous data sources, and reconstructing complex relationships that may not be evident using conventional methods.

AI Technologies for Detecting Drug Toxicity

Common AI Techniques Used

AI contributes to drug safety chiefly by predicting the toxicity profiles of compounds from their structure and associated data. Several families of methods are in common use:

1. Deep Neural Networks (DNNs) and Ensemble Models:

Deep neural networks, often combined into ensembles, are widely used to predict toxicity endpoints. For example, the DeepTox model employs a three-layer DNN that leverages molecular descriptors from 0D to 3D to predict toxicity effects. These models can capture nonlinear relationships between molecular structure features and toxicity outcomes, and they often outperform traditional machine learning models in accuracy and sensitivity.

2. Graph Neural Networks (GNNs):

GNNs analyze molecules represented as graphs where atoms serve as nodes and bonds serve as edges. This approach brings the added advantage of capturing the spatial and relational information inherent in chemical structures. By using message-passing operations, GNNs can learn high-dimensional representations of molecules which are then used to predict adverse effects like hepatotoxicity or QT prolongation.

3. Support Vector Machines (SVMs) and Random Forests (RF):

While deep learning models are gaining momentum, traditional machine learning methods like support vector machines and random forests are still in use, particularly for their relative ease of interpretation and efficient performance on smaller datasets. These techniques have been applied extensively in toxicity prediction tasks and work well when combined with appropriate molecular descriptors.

4. Generative Models and Variational Autoencoders:

Generative models such as generative adversarial networks (GANs) and variational autoencoders (VAEs) have shown promise in developing novel compounds with reduced toxicity profiles. By learning the underlying distribution of existing non-toxic molecules, these models can propose novel chemical structures that are predicted to have favorable safety profiles while preserving bioactivity.

5. Natural Language Processing (NLP):

AI also leverages NLP to mine scientific literature and electronic health records, extracting information on adverse drug reactions (ADRs) and suspected toxicity pathways. NLP techniques help consolidate dispersed sources, including case reports and legal documents, to reveal patterns that might indicate toxicity before clinical trials.

6. Multitask Learning Models:

Toxicity is inherently a multifaceted phenomenon. Multitask deep learning models have been developed to predict several toxicity endpoints simultaneously, such as liver toxicity, cardiotoxicity, and neuronal toxicity. These approaches benefit from features shared across tasks and often achieve higher predictive performance than models trained on a single task. One example is the multitask DeepTox model, which predicts multiple toxicity endpoints from a single set of molecular descriptors.
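The message-passing idea described under Graph Neural Networks above can be illustrated with a toy sketch. It is not how any particular toxicity model is implemented: the one-hot atom features, the fixed averaging rule, and the function names are assumptions chosen for readability, whereas a real GNN learns its update weights from data.

```python
# Toy message passing on a molecular graph (pure Python, no dependencies).
# Assumption: atoms carry a tiny hand-made feature vector; real GNNs learn
# weight matrices and use much richer atom and bond features.

def message_passing_round(features, bonds):
    """One round: each atom's new state is the average of its own
    feature vector and the sum of its neighbours' vectors."""
    n = len(features)
    neighbours = {i: [] for i in range(n)}
    for a, b in bonds:
        neighbours[a].append(b)
        neighbours[b].append(a)
    updated = []
    for i in range(n):
        dim = len(features[i])
        # Message = element-wise sum over the neighbouring atoms.
        message = [sum(features[j][k] for j in neighbours[i]) for k in range(dim)]
        updated.append([(features[i][k] + message[k]) / 2 for k in range(dim)])
    return updated

def readout(features):
    """Sum the atom states into one fixed-length molecule vector."""
    dim = len(features[0])
    return [sum(atom[k] for atom in features) for k in range(dim)]

# Heavy atoms of ethanol (C-C-O); features are one-hot [is_carbon, is_oxygen].
atoms = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
bonds = [(0, 1), (1, 2)]  # atoms are nodes, bonds are edges

hidden = message_passing_round(atoms, bonds)
molecule_vector = readout(hidden)
print(hidden)
print(molecule_vector)
```

After one round, the middle carbon's state already reflects the neighbouring oxygen. In a trained model, a molecule-level vector of this kind would be passed to a classifier that outputs a toxicity prediction.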

Comparison and Suitability of Different Techniques

The suitability of any AI technique for toxicity detection depends primarily on the complexity of the data, data quality and volume, the specificity of the endpoints, and the need for interpretability.

- Interpretability vs. Accuracy:

Deep neural networks and generative models tend to offer superior predictive power but often suffer from a lack of interpretability—they operate as "black boxes". In contrast, methods such as SVMs or random forests provide easier interpretability but might not capture complex nonlinear relationships as effectively. Selecting the proper balance is critical in drug safety where understanding why a drug may be toxic is as important as predicting that it is likely to be so.

- Data Requirements:

Models like DNNs require extensive data to perform well. Where data is sparse or imbalanced (as is common with rare toxic events), traditional models or hybrid approaches that incorporate data-augmentation techniques are often a better fit.

- Computational Efficiency:

Techniques such as GNNs might demand significant computational resources, especially when dealing with high-throughput screening datasets. However, with the advent of cloud computing and GPUs, these computational challenges are being mitigated over time, allowing for faster predictions and model training.

- Task Specificity:

Certain AI techniques have been designed specifically for particular types of toxicity. For example, specialized models have been built to evaluate mitochondrial toxicity using high-content imaging and mitochondrial membrane potential analyses. Additionally, models that predict genomic toxicity or ADME (absorption, distribution, metabolism, excretion) related toxicities are tailored for those specific endpoints and use combinations of biochemical data and molecular simulations.
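For the imbalanced-data case raised under Data Requirements above, one simple option is class weighting, which is a different technique from the data augmentation mentioned there. The sketch below computes inverse-frequency weights so that rare toxic examples count more during training. The label list is made up for illustration. The formula matches the heuristic that scikit-learn documents for its "balanced" class-weight mode, but nothing here depends on that library.

```python
from collections import Counter

def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}

# Hypothetical screening set: 18 non-toxic (0) and 2 toxic (1) compounds.
labels = [0] * 18 + [1] * 2
weights = inverse_frequency_weights(labels)
print(weights)
```

With these labels, each toxic compound receives a weight of 5.0 and each non-toxic compound a weight of about 0.56, so the two classes contribute equally in total to a weighted loss.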

Impact on Early Detection of Drug Toxicity

Improvements in Detection Accuracy and Speed

AI-assisted toxicity detection has several transformative impacts on the early detection process:

1. Enhanced Predictive Accuracy:

AI models can process vast chemical spaces and incorporate multiple sources of data—ranging from molecular property descriptors to high-content screening images—thereby improving the detection of subtle toxicity signals that traditional assays might miss. The use of ensemble DNN models like DeepTox and multitask learning frameworks allows the capture of complex dose-response relationships, predicting toxic events with higher sensitivity and specificity than traditional methods.

2. Reduction in Time and Cost:

Traditional toxicity assays, especially in vivo studies, can take months or even years to complete and are resource-intensive. With AI, predictive models can rapidly screen hundreds of thousands of compounds, effectively triaging which candidates may lead to adverse effects and thereby reducing the overall cost and time associated with drug development. This accelerated pipeline enables researchers to focus laboratory resources on the most promising candidates early in the process.

3. Early Identification of Adverse Effects:

AI systems are capable of detecting early molecular signals of toxicity, such as changes in gene expression or metabolic disruptions, long before overt symptoms occur in vivo. This preemptive identification is crucial for avoiding expensive late-stage failures and reducing the risk associated with advancing toxic compounds into clinical trials.

4. Integration of Heterogeneous Data:

One of AI’s greatest assets is its ability to integrate data from multiple sources. For instance, high-throughput screening data, electronic health records, genomic information, and chemical structure data can be synthesized to provide a comprehensive toxicity profile of a compound. This holistic view strengthens predictive capabilities and allows for cross-validation of signals that indicate potential adverse effects.
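Sensitivity and specificity, mentioned under Enhanced Predictive Accuracy above, depend on the score cut-off used to call a compound toxic. The sketch below uses invented scores and labels (assumptions, not data from any study) to show how lowering the cut-off during triage trades false alarms for fewer missed toxic compounds.

```python
def sensitivity_specificity(y_true, scores, threshold):
    """Return (sensitivity, specificity) when score >= threshold means 'toxic'."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity

# Hypothetical data: 1 = toxic in the assay, scores = model output in [0, 1].
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.4, 0.2, 0.1, 0.3, 0.6, 0.05]

strict = sensitivity_specificity(y_true, scores, threshold=0.5)
lenient = sensitivity_specificity(y_true, scores, threshold=0.35)
print(strict, lenient)
```

In this toy set, the lower cut-off catches the third toxic compound without adding false positives. On real data the trade-off is rarely that favourable, which is why both numbers are usually reported together.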

Case Studies and Examples

Several case studies and published research illustrate the potential of AI in early toxicity detection:

1. Drug Toxicity Evaluation Platforms:

A patent from Yokohama City University and Takeda Pharmaceutical described a method for evaluating drug toxicity using a co-culture system of an organoid with blood cells, where the addition of a drug is followed by assessing toxicity on the organoid. This approach, when combined with AI algorithms, can be used to quantify subtle cellular changes and predict toxic endpoints with high accuracy.

2. DeepTox and Multitask Modeling:

DeepTox, a deep neural network trained on in vitro toxicity datasets such as Tox21, has compared favorably with traditional machine learning approaches. Models of this kind are capable of predicting endpoints like hepatotoxicity and cardiotoxicity, enabling early flagging of compounds that may fail in clinical trials due to toxicity.

3. Mitochondrial Toxicity Detection:

A patent describing a drug mitochondrial toxicity detection method uses high-content imaging, mitochondrial membrane potential assessment, and fluorescent probe labeling to evaluate toxicity effects. AI algorithms further interpret these rich data streams to provide a rapid and accurate toxicity profile that is applicable for both preclinical safety evaluations and toxicological research.

4. Neuronal Toxicity Detection:

Other patents detail systems and methods for assessing neuronal toxicity. These approaches integrate multi-parametric data from neuronal cell assays with machine learning models to predict whether a compound induces neuronal damage. Automation of this evaluation not only decreases the turnaround time but also enhances the overall sensitivity of the detection process.

5. Comparative Analyses:

Studies comparing deep learning approaches with simpler statistical methods often report that the AI methods achieve higher detection accuracy and speed than traditional screening protocols. For example, in studies predicting in vitro toxic responses, AI models both classified toxic compounds more accurately and identified minor structural features responsible for the toxicity.

Ethical and Regulatory Considerations

Ethical Issues in AI Use

The integration of AI into drug toxicity detection is not without ethical challenges. Since AI models can sometimes function as "black boxes," transparency in the decision-making process is critical. Researchers and regulatory authorities are particularly concerned about:

1. Interpretability and Explainability:

When AI models predict that a drug candidate is toxic, it is essential to understand the rationale behind that prediction. Lack of interpretability can lead to mistrust among clinical researchers and decision-makers, which may impede the adoption of AI solutions. Efforts are underway to develop explainable AI (XAI) techniques to demystify these models, thereby ensuring that their conclusions can be transparently evaluated and validated by human experts.

2. Data Bias and Quality:

AI’s performance is highly dependent on the quality and diversity of the data used during training. In areas where the toxicity data may be imbalanced—where few compounds exhibit significant toxicity—there is a risk of overfitting or biased predictions. Addressing these issues requires carefully curated training datasets and the employment of techniques such as data augmentation and rigorous cross-validation to mitigate biases.

3. Privacy and Security:

Many AI applications in drug safety involve patient data, especially when integrating real-world evidence from electronic health records (EHRs). Ensuring data privacy and protecting sensitive health information is of paramount ethical importance. Healthcare providers and researchers must implement robust data encryption and anonymization methods and must comply with relevant data protection regulations to maintain the confidentiality of patient data.
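The cross-validation mentioned under Data Bias and Quality above is usually stratified when toxic compounds are rare, so that every fold contains some positives. The following is a minimal sketch of that idea using only the standard library. The function name, fold count, and label list are illustrative assumptions.

```python
import random
from collections import defaultdict

def stratified_folds(labels, k, seed=0):
    """Split sample indices into k folds, keeping class proportions roughly equal."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class out round-robin so no fold is left without it.
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds

# Hypothetical dataset: 4 toxic (1) and 16 non-toxic (0) compounds.
labels = [1] * 4 + [0] * 16
folds = stratified_folds(labels, k=4)
for fold in folds:
    print(sorted(fold), "toxic in fold:", sum(labels[i] for i in fold))
```

A plain random split of the same data could easily produce a fold with no toxic compounds at all, which would make sensitivity undefined for that fold.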

Regulatory Requirements for AI in Drug Safety

The burgeoning role of AI in early toxicity detection has led to the need for regulatory frameworks that ensure both efficacy and safety in AI-driven methods:

1. Validation and Standardization:

Regulatory agencies such as the FDA have recognized the potential of AI in drug safety but require that these AI models meet high standards of validation. This involves rigorous benchmarking against standardized datasets (e.g., Tox21) and demonstrating reproducible performance across different studies. Standardization initiatives, including open-source toolkits and public data repositories, are being developed to facilitate better validation procedures among AI models for toxicity detection.

2. Documentation and Transparency:

For regulatory approval, it is essential to document the AI model’s performance, training processes, data sources, and decision logic comprehensively. Detailed documentation helps regulators assess the robustness of the AI’s predictions and minimizes potential liabilities that might arise later in the drug development process. Clear guidelines for exposing the inner workings of these algorithms are helping foster trust and wider acceptance.

3. Post-Market Surveillance:

Even once an AI-driven toxicity screening method is approved, regulators require ongoing post-market surveillance to ensure that the model continues to perform accurately as it encounters new chemical entities and real-world data. Continuous monitoring and periodic re-validation of the model are now integral requirements to maintain regulatory compliance in dynamic environments.

4. Ethical Oversight Committees:

Given the ethical challenges related to bias, transparency, and data security, many institutions have established ethical oversight committees to govern the development and deployment of AI technologies. These committees work closely with regulatory bodies to ensure that the AI models not only comply with technical standards but also adhere to ethical expectations in patient care and drug safety.
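One way to support the continuous monitoring described under Post-Market Surveillance above is an applicability-domain check: flag new compounds that look unlike anything the model was trained on. This technique is an assumption of this example and is not prescribed by any regulator cited here. The sketch represents fingerprints as sets of on-bit indices and uses Tanimoto similarity. The fingerprints and the 0.3 cut-off are hypothetical.

```python
def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity between two sets of on-bit indices."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def outside_domain(query, training_set, threshold=0.3):
    """Return (flag, nearest) where flag is True if no training compound
    is at least `threshold` similar to the query."""
    nearest = max(tanimoto(query, fp) for fp in training_set)
    return nearest < threshold, nearest

# Hypothetical fingerprints (sets of on-bit positions), not real molecules.
training = [{1, 2, 3, 4}, {2, 3, 5, 8}, {1, 4, 6, 7}]
familiar = {1, 2, 3, 9}
novel = {3, 10, 11, 12}

familiar_result = outside_domain(familiar, training)
novel_result = outside_domain(novel, training)
print(familiar_result, novel_result)
```

Predictions for compounds flagged this way could be routed to experimental confirmation or logged as candidates for the next re-validation cycle.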

Challenges and Future Directions

Current Challenges in AI Implementation

Despite the impressive advances, several challenges remain:

1. Data Quality and Availability:

One of the most significant challenges is the availability of high-quality, diverse datasets. Many toxicity prediction models are hindered by data imbalances, limited positive instances of adverse toxicity events, and heterogeneity in data recording methods. AI models must be designed to handle these deficiencies effectively through techniques such as data augmentation and transfer learning.

2. Interpretability and Trust:

The black-box nature of many deep learning models continues to be a barrier. While interpretability methods are advancing, making AI decisions more transparent is critical, particularly when these decisions influence regulatory and clinical decision-making processes. The challenge is not only to predict that a compound is toxic, but also to elucidate the biological pathways or structural alerts that lead to such predictions.

3. Integration with Traditional Methods:

Integrating AI predictions with traditional experimental methods requires reconciliation between in silico models and in vitro/in vivo toxicology assays. AI will most effectively support early toxicity detection if it can complement established methods. Developing frameworks that seamlessly integrate predictive models with experimental validation is an ongoing area of research.

4. Computational Resource Demands:

Advanced models, particularly those utilizing deep learning or graph neural networks, demand considerable computational resources. Although cloud computing and specialized hardware (such as GPUs) have alleviated some of this burden, the scalability of such systems remains a concern, especially for smaller research institutions or startups.

5. Ethical and Regulatory Hesitation:

As mentioned earlier, the ethical implications of AI decisions, including issues of bias and data privacy, as well as the stringent requirements of regulatory agencies, often slow the adoption of AI methodologies in drug safety. Overcoming these challenges requires collaboration across disciplines, as well as investments not only in technology but also in developing comprehensive legal frameworks.

Future Prospects and Research Directions in AI for Drug Toxicity

Looking forward, several promising avenues and research directions can further enhance the role of AI in early toxicity detection:

1. Advancements in Explainable AI (XAI):

Future research will likely focus on developing more robust XAI techniques that provide clear explanations for toxicity predictions, thereby increasing trust among regulators, clinicians, and researchers alike. Improved interpretability will also help in refining the models by pinpointing specific molecular substructures that contribute to toxicity, leading to better drug design and safety profiles.

2. Integration of Multi-Omics Data:

The next frontier involves integrating multi-omics datasets—such as genomics, proteomics, and metabolomics—with traditional chemical data. By doing so, AI models can capture the multi-layered nature of toxicity, enabling predictions that encompass not only chemical structure but also biological context and patient-specific factors. This holistic approach is expected to enhance both predictive accuracy and clinical relevance.

3. Enhanced Multitask and Hybrid Models:

Hybrid models that combine the strengths of deep learning with traditional statistical methods may overcome current limitations. These multitask models can simultaneously predict several toxicity endpoints and, when combined with mechanistic models that account for biological pathways, can provide more comprehensive safety evaluations. Future iterations may include hierarchical models that mimic biological organization—from molecular to system-level toxicity.

4. Integration With Laboratory Automation:

As laboratory automation increases in prevalence, AI models can be continually refined in near real-time by feeding back experimental data from high-throughput screens. This continuous learning environment—sometimes referred to as a “closed-loop” drug discovery approach—will further shorten the cycle times from prediction to experimental validation, making early detection of toxicity more dynamic and responsive.

5. Digital Twin Technology:

The concept of digital twins—virtual counterparts to physical systems—may soon be applied to toxicology. Digital twins of organs or even complete human systems might be generated using multi-scale data, allowing AI systems to simulate and predict how drugs interact with human biology in real-time. This would revolutionize early toxicity detection, shifting from static assay-based predictions to dynamic, personalized simulations.

6. Collaborative Platforms and Open Data Initiatives:

Moving toward open, collaborative platforms is essential. Initiatives that encourage data sharing among academic institutions, pharmaceutical companies, and regulatory bodies will enable the development of more generalized and robust models. Government and industry partnerships could standardize data collection protocols, which in turn would foster improved AI model development for toxicity detection.

7. Regulatory Innovation:

As AI models become more integral to early toxicity detection, regulatory bodies will continue to evolve and update their guidelines. This may include new frameworks specifically tailored for validating in silico toxicity models, ensuring that AI-driven decisions are auditable and reproducible throughout the drug development lifecycle. Future regulations might mandate specific transparency and explainability metrics for AI-based models used in safety evaluations.

8. Personalized Toxicity Prediction:

The integration of patient-specific data such as genetic information and biomarkers into AI models offers the potential for personalized toxicity prediction. Such personalized models could forecast how individual patients might react to specific compounds, providing early signals that help tailor drug dosages and even lead to individualized treatment plans that minimize adverse effects.
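The closed-loop approach described under Integration With Laboratory Automation above needs a rule for choosing which compounds to test next. A common choice is uncertainty sampling, shown here as a minimal sketch. It is an assumption of this example rather than a method named in the text, and the compound names and probabilities are invented.

```python
def select_for_testing(predictions, batch_size):
    """Pick the compounds whose predicted toxicity probability is nearest 0.5,
    i.e. those the model is least sure about."""
    ranked = sorted(predictions, key=lambda name: abs(predictions[name] - 0.5))
    return ranked[:batch_size]

# Hypothetical model outputs: probability that each compound is toxic.
predictions = {
    "cmpd_A": 0.95,
    "cmpd_B": 0.52,
    "cmpd_C": 0.10,
    "cmpd_D": 0.41,
    "cmpd_E": 0.70,
}

next_batch = select_for_testing(predictions, batch_size=2)
print(next_batch)
```

The assay results for the selected compounds would then be added to the training set and the model refitted, closing the loop between prediction and experiment.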

Conclusion

In summary, AI assists in the early detection of drug toxicity by applying computational techniques that screen compounds far faster than traditional toxicology assays and, in reported comparisons, often with higher accuracy. By integrating large, heterogeneous datasets (from molecular descriptors to multi-omics data and clinical records), AI-based models such as deep neural networks, graph neural networks, and multitask learning frameworks provide enhanced predictive capabilities. These technologies allow researchers to flag potentially toxic compounds during the early phases of drug development, reducing the financial and temporal burdens of late-stage failures.

Alongside improvements in predictive accuracy and fast processing speeds, AI technologies have also improved the overall safety profile assessments by providing early warnings about adverse effects such as hepatotoxicity, mitochondrial dysfunction, and neuronal toxicity. Case studies—ranging from the co-culture organoid systems in toxicity evaluation platforms to advanced multitask deep learning models that analyze in vitro data—demonstrate real-world applications where AI has accelerated toxicity screening processes. The integration of AI with traditional methods not only widens the scope of detectable toxic effects but also provides a complementary approach that enriches the overall scientific understanding of drug safety.

Ethically, the introduction of these AI systems presents challenges regarding the interpretability of their “black-box” predictions, bias in training data, and issues related to patient data privacy and security. Regulatory agencies are actively working on formulating guidelines that mandate transparency, comprehensive documentation, and rigorous post-market surveillance of AI models to establish trust and ensure patient safety during drug development.

Looking toward the future, research in AI for drug toxicity detection is expected to focus on making models more interpretable through explainable AI techniques, integrating multi-omics data for a more comprehensive assessment, adopting hybrid and multitask learning approaches, and creating digital twins for dynamic simulation of drug response. Continuous data sharing, infrastructure improvements, and an evolving regulatory landscape will further enhance the applications of AI in predicting drug toxicity, leading to more efficient, cost-effective, and safer drug development pipelines.

Overall, AI’s multifaceted approach—combining cutting-edge computational methods, broad data integration, and continuous learning mechanisms—holds the promise of dramatically improving early drug toxicity detection. By addressing current challenges and aligning with ethical and regulatory requirements, AI-driven platforms are poised to reshape how pharmaceutical companies develop new therapies, ensuring that only compounds with a favorable safety profile progress further into clinical trials. This promises not only to reduce financial risk and shorten development timelines but also ultimately to improve patient outcomes and public health.

In conclusion, AI is transforming the early detection of drug toxicity through enhanced accuracy, speed, and integration capabilities. The benefits of this transformative approach extend beyond laboratory efficiency into the realms of personalized medicine, regulatory adherence, and ethical healthcare innovations. As the field advances further with improved algorithms, robust data infrastructures, and collaborative partnerships across the industry, AI will undoubtedly continue to play a pivotal role in ensuring drug safety while fostering the development of novel therapies with reduced adverse risks.

Definition and Basic Concepts of AI

Artificial intelligence (AI) refers to a collection of computational approaches that enable machines to perform tasks which would otherwise require human intelligence. These tasks include pattern recognition, classification, prediction, and decision‐making. In drug discovery and safety, AI harnesses techniques such as machine learning (ML), deep learning (DL), natural language processing (NLP), and generative models, among others, to analyze complex and heterogeneous data sets. These data sets span numerical data, images, gene expression profiles, and even unstructured text from scientific literature. The goal is to emulate and sometimes exceed human capability when it comes to analyzing patterns and extracting actionable insights in less time and at lower cost. AI-based technologies have evolved rapidly due to increased computing power, the availability of powerful algorithms, and the explosive growth in biomedical data accumulation. This convergence has led to AI being integrated throughout the drug development pipeline—from target identification to safety evaluations—transforming the traditional paradigms and accelerating decision‐making processes.

Overview of Drug Toxicity and Its Challenges

Drug toxicity refers to the harmful effects that drugs can have on the body, ranging from mild adverse reactions to severe life-threatening conditions. The early detection of toxicity is paramount because adverse effects are one of the primary reasons for drug candidate failures during preclinical and clinical testing phases. Traditional toxicity testing, such as in vitro cell assays and in vivo animal testing, can be laborious, time‑consuming, and expensive. Moreover, these methods sometimes have issues with reproducibility and may not reliably predict toxicity in humans.

A major challenge is that toxicity is a multifaceted phenomenon; a compound might be non‑toxic in one assay but elicit adverse responses in another system or organ. Drug-induced liver injury (DILI), for instance, is notoriously difficult to detect early because its manifestations may only become apparent after accumulation of toxic metabolites or extended exposure. Furthermore, the biological mechanisms underlying toxicity are complex and involve interactions at multiple biological levels, from molecular interactions to systemic physiological responses. Thus, an integrated approach that combines experimental data, omics data, and computational predictions is essential for accurately forecasting toxicity profiles across different platforms. AI addresses these challenges by mining large datasets, integrating heterogeneous data sources, and reconstructing complex relationships that may not be evident using conventional methods.

AI Technologies for Detecting Drug Toxicity

Common AI Techniques Used

One of the major contributions of AI in the realm of drug safety is the ability to predict toxicity profiles by employing a suite of advanced algorithms. Various AI-based methods have been developed and refined over the past few years.

1. Deep Neural Networks (DNNs) and Ensemble Models:

Deep learning models, such as deep neural networks and ensemble approaches, are widely used to predict toxicity endpoints. For example, the DeepTox model employs a three-layer DNN that leverages molecular descriptors from 0D to 3D to predict toxicity effects reliably. These models are capable of capturing nonlinear relationships between molecular structure features and toxicity outcomes, often outperforming traditional machine learning models in terms of accuracy and sensitivity.

2. Graph Neural Networks (GNNs):

GNNs analyze molecules represented as graphs where atoms serve as nodes and bonds serve as edges. This approach brings the added advantage of capturing the spatial and relational information inherent in chemical structures. By using message-passing operations, GNNs can learn high-dimensional representations of molecules which are then used to predict adverse effects like hepatotoxicity or QT prolongation.

3. Support Vector Machines (SVMs) and Random Forests (RF):

While deep learning models are gaining momentum, traditional machine learning methods like support vector machines and random forests are still in use, particularly for their relative ease of interpretation and efficient performance on smaller datasets. These techniques have been applied extensively in toxicity prediction tasks and work well when combined with appropriate molecular descriptors.

4. Generative Models and Variational Autoencoders:

Generative models such as generative adversarial networks (GANs) and variational autoencoders (VAEs) have shown promise in developing novel compounds with reduced toxicity profiles. By learning the underlying distribution of existing non-toxic molecules, these models can propose novel chemical structures that are predicted to have favorable safety profiles while preserving bioactivity.

5. Natural Language Processing (NLP):

AI also leverages NLP to mine scientific literature and electronic health records, extracting valuable information related to adverse drug reactions (ADRs) and suspected toxicity pathways. NLP techniques help in consolidating dispersed data, including case reports, and legal documents to understand patterns that might indicate toxicity before clinical trials.

6. Multitask Learning Models:

Toxicity is inherently a multilinear phenomenon. Multitask deep learning models have been developed to predict various toxicity endpoints simultaneously, such as liver toxicity, cardiotoxicity, and neuronal toxicity. These approaches benefit from shared features across different tasks and often achieve higher predictive performances than models trained on a single task. An example of this is the multitask DeepTox model, which simultaneously predicts multiple toxicity endpoints from a single set of molecular descriptors.

Comparison and Suitability of Different Techniques

The suitability of any AI technique for toxicity detection depends primarily on the complexity of the data, data quality and volume, the specificity of the endpoints, and the requirement for interpretability.

- Interpretability vs. Accuracy:

Deep neural networks and generative models tend to offer superior predictive power but often suffer from a lack of interpretability—they operate as "black boxes". In contrast, methods such as SVMs or random forests provide easier interpretability but might not capture complex nonlinear relationships as effectively. Selecting the proper balance is critical in drug safety where understanding why a drug may be toxic is as important as predicting that it is likely to be so.

- Data Requirements:

Models like DNNs require extensive data to perform well. In datasets where data is sparse or imbalanced (as often seen with rare toxic events), traditional models or hybrid approaches that incorporate data-augmentation techniques are more fitting.

- Computational Efficiency:

Techniques such as GNNs might demand significant computational resources, especially when dealing with high-throughput screening datasets. However, with the advent of cloud computing and GPUs, these computational challenges are being mitigated over time, allowing for faster predictions and model training.

- Task Specificity:

Certain AI techniques have been designed specifically for particular types of toxicity. For example, specialized models have been built to evaluate mitochondrial toxicity using high-content imaging and mitochondrial membrane potential analyses. Additionally, models that predict genotoxicity or ADME (absorption, distribution, metabolism, excretion) related toxicities are tailored to those specific endpoints and use combinations of biochemical data and molecular simulations.
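The data-requirements point above mentions sparse or imbalanced toxicity labels. One common way to handle imbalance, shown here only as a minimal sketch, is to weight each class inversely to its frequency so that the rare toxic class contributes as much to the loss as the abundant non-toxic class. The labels and probabilities are made-up illustration values, and the function names are hypothetical.

```python
import math


def class_weights(labels):
    """Inverse-frequency weights: each class gets the same total weight."""
    n = len(labels)
    n_pos = sum(labels)
    n_neg = n - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both classes must be present")
    return {1: n / (2 * n_pos), 0: n / (2 * n_neg)}


def weighted_log_loss(labels, probs, weights):
    """Weighted binary cross-entropy, normalized by the total weight."""
    total, weight_sum = 0.0, 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, 1e-7), 1 - 1e-7)  # avoid log(0)
        w = weights[y]
        total += -w * (y * math.log(p) + (1 - y) * math.log(1 - p))
        weight_sum += w
    return total / weight_sum


# Illustration data (assumption): 1 toxic compound among 10.
labels = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
weights = class_weights(labels)

# A model that misses the toxic compound vs. one that catches it.
probs_miss = [0.1] * 10
probs_hit = [0.9] + [0.1] * 9
loss_miss = weighted_log_loss(labels, probs_miss, weights)
loss_hit = weighted_log_loss(labels, probs_hit, weights)
print(weights, loss_miss, loss_hit)
```

With these weights the single toxic compound carries as much total weight as the nine non-toxic ones, so a model cannot score well simply by predicting "non-toxic" everywhere.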

Impact on Early Detection of Drug Toxicity

Improvements in Detection Accuracy and Speed

AI-assisted toxicity detection has several transformative impacts on the early detection process:

1. Enhanced Predictive Accuracy:

AI models can process vast chemical spaces and incorporate multiple sources of data—ranging from molecular property descriptors to high-content screening images—thereby improving the detection of subtle toxicity signals that traditional assays might miss. The use of ensemble DNN models like DeepTox and multitask learning frameworks allows the capture of complex dose-response relationships, predicting toxic events with higher sensitivity and specificity than traditional methods.

2. Reduction in Time and Cost:

Traditional toxicity assays, especially in vivo studies, can take months or even years to complete and are resource-intensive. With AI, predictive models can rapidly screen hundreds of thousands of compounds, effectively triaging which candidates may lead to adverse effects and thereby reducing the overall cost and time associated with drug development. This accelerated pipeline enables researchers to focus laboratory resources on the most promising candidates early in the process.

3. Early Identification of Adverse Effects:

AI systems are capable of detecting early molecular signals of toxicity, such as changes in gene expression or metabolic disruptions, long before overt symptoms occur in vivo. This preemptive identification is crucial for avoiding expensive late-stage failures and reducing the risk associated with advancing toxic compounds into clinical trials.

4. Integration of Heterogeneous Data:

One of AI’s greatest assets is its ability to integrate data from multiple sources. For instance, high-throughput screening data, electronic health records, genomic information, and chemical structure data can be synthesized to provide a comprehensive toxicity profile of a compound. This holistic view strengthens predictive capabilities and allows for cross-validation of signals that indicate potential adverse effects.
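The accuracy and triage points above can be illustrated with a small sketch. It assumes three hypothetical models that each output a toxicity probability per compound, averages them as a simple ensemble, flags compounds above a threshold, and then computes sensitivity and specificity against assay labels. All compound IDs, scores, labels, and the 0.5 threshold are invented for illustration.

```python
def ensemble_mean(model_scores):
    """Average the per-model toxicity probabilities for each compound."""
    return {cid: sum(s) / len(s) for cid, s in model_scores.items()}


def triage(scores, threshold):
    """Return flagged compound IDs, highest predicted risk first."""
    flagged = [cid for cid, s in scores.items() if s >= threshold]
    return sorted(flagged, key=lambda cid: scores[cid], reverse=True)


def sensitivity_specificity(y_true, flagged):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    tp = sum(1 for cid, y in y_true.items() if y == 1 and cid in flagged)
    fn = sum(1 for cid, y in y_true.items() if y == 1 and cid not in flagged)
    tn = sum(1 for cid, y in y_true.items() if y == 0 and cid not in flagged)
    fp = sum(1 for cid, y in y_true.items() if y == 0 and cid in flagged)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical scores from three models for four compounds.
model_scores = {
    "cmpd_A": [0.9, 0.8, 0.7],
    "cmpd_B": [0.2, 0.1, 0.3],
    "cmpd_C": [0.6, 0.5, 0.7],
    "cmpd_D": [0.4, 0.3, 0.2],
}
# Hypothetical assay outcomes (1 = toxic).
assay_labels = {"cmpd_A": 1, "cmpd_B": 0, "cmpd_C": 1, "cmpd_D": 1}

scores = ensemble_mean(model_scores)
flagged = triage(scores, threshold=0.5)
sens, spec = sensitivity_specificity(assay_labels, flagged)
print(flagged, sens, spec)
```

In this toy run the ensemble misses one toxic compound (`cmpd_D`), which is why sensitivity and specificity are reported separately: lowering the threshold would catch it at the cost of more false alarms.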

Case Studies and Examples

Several case studies and published research illustrate the potential of AI in early toxicity detection:

1. Drug Toxicity Evaluation Platforms:

A patent from Yokohama City University and Takeda Pharmaceutical described a method for evaluating drug toxicity using a co-culture system of an organoid with blood cells, where the addition of a drug is followed by assessing toxicity on the organoid. This approach, when combined with AI algorithms, can be used to quantify subtle cellular changes and predict toxic endpoints with high accuracy.

2. DeepTox and Multitask Modeling:

Models such as DeepTox, which employs deep neural networks trained on in vitro toxicity datasets like Tox21, have compared favorably with traditional machine learning approaches in predictive performance. These models are capable of predicting endpoints like hepatotoxicity and cardiotoxicity, thereby enabling early flagging of compounds that may fail due to toxicity in clinical trials.

3. Mitochondrial Toxicity Detection:

A patent describing a drug mitochondrial toxicity detection method uses high-content imaging, mitochondrial membrane potential assessment, and fluorescent probe labeling to evaluate toxicity effects. AI algorithms further interpret these rich data streams to provide a rapid and accurate toxicity profile that is applicable for both preclinical safety evaluations and toxicological research.

4. Neuronal Toxicity Detection:

Other patents detail systems and methods for assessing neuronal toxicity. These approaches integrate multi-parametric data from neuronal cell assays with machine learning models to predict whether a compound induces neuronal damage. Automation of this evaluation not only decreases the turnaround time but also enhances the overall sensitivity of the detection process.

5. Comparative Analyses:

Studies comparing deep learning approaches with simpler statistical methods often report that AI methods outperform traditional screening protocols in both detection accuracy and speed. For example, in studies predicting in vitro toxic responses, AI models not only provided higher accuracy for compounds deemed toxic but also demonstrated the ability to detect minor structural features responsible for the toxicity.
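For the multitask setting described in the DeepTox case study above, one practical detail is that multi-endpoint datasets usually do not have every compound measured in every assay. A common approach, sketched here under that assumption, is to compute the loss only over the (compound, endpoint) pairs that were actually measured. The function name and the use of `None` for a missing label are illustrative choices, not taken from any specific library.

```python
import math


def masked_multitask_loss(labels, probs):
    """Mean binary cross-entropy over measured (compound, endpoint) pairs.

    labels: one row per compound, one entry per endpoint; each entry is
            0, 1, or None (None = endpoint not measured for that compound).
    probs:  predicted probabilities with the same shape as labels.
    """
    total, count = 0.0, 0
    for label_row, prob_row in zip(labels, probs):
        for y, p in zip(label_row, prob_row):
            if y is None:
                continue  # unmeasured endpoint: contributes nothing
            p = min(max(p, 1e-7), 1 - 1e-7)  # avoid log(0)
            total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
            count += 1
    if count == 0:
        raise ValueError("no measured labels")
    return total / count


# Two compounds, two endpoints; the first compound lacks endpoint 2.
labels = [[1, None], [0, 1]]
probs = [[0.5, 0.99], [0.5, 0.5]]
loss = masked_multitask_loss(labels, probs)
print(loss)
```

Because the masked entry is skipped, the prediction of 0.99 for the unmeasured endpoint has no effect on the loss. This lets one network learn from all available measurements without discarding partially labeled compounds.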

Ethical and Regulatory Considerations

Ethical Issues in AI Use

The integration of AI into drug toxicity detection is not without ethical challenges. Since AI models can sometimes function as "black boxes," transparency in the decision-making process is critical. Researchers and regulatory authorities are particularly concerned about:

1. Interpretability and Explainability:

When AI models predict that a drug candidate is toxic, it is essential to understand the rationale behind that prediction. Lack of interpretability can lead to mistrust among clinical researchers and decision-makers, which may impede the adoption of AI solutions. Efforts are underway to develop explainable AI (XAI) techniques to demystify these models, thereby ensuring that their conclusions can be transparently evaluated and validated by human experts.

2. Data Bias and Quality:

AI’s performance is highly dependent on the quality and diversity of the data used during training. In areas where the toxicity data may be imbalanced—where few compounds exhibit significant toxicity—there is a risk of overfitting or biased predictions. Addressing these issues requires carefully curated training datasets and the employment of techniques such as data augmentation and rigorous cross-validation to mitigate biases.

3. Privacy and Security:

Many AI applications in drug safety involve patient data, especially when integrating real-world evidence from electronic health records (EHRs). Ensuring data privacy and protecting sensitive health information is of paramount ethical importance. Healthcare providers and researchers must implement robust data encryption and anonymization methods and ensure compliance with relevant data protection regulations to maintain the confidentiality of patient data.
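The data-bias item above mentions rigorous cross-validation for imbalanced toxicity data. A minimal sketch of one such safeguard, stratified fold assignment, is shown below: it spreads the rare toxic compounds evenly across folds so that no validation fold ends up without positives. The labels are invented, and the split is deterministic for clarity, whereas a real workflow would normally shuffle within each class first.

```python
def stratified_folds(labels, k):
    """Assign sample indices to k folds, round-robin within each class."""
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        members = [i for i, y in enumerate(labels) if y == cls]
        for position, index in enumerate(members):
            folds[position % k].append(index)
    return folds


# Illustration data (assumption): 3 toxic compounds among 12.
labels = [1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0]
folds = stratified_folds(labels, k=3)

for fold_id, fold in enumerate(folds):
    n_toxic = sum(labels[i] for i in fold)
    print(fold_id, sorted(fold), "toxic:", n_toxic)
```

Each fold here receives exactly one toxic compound and three non-toxic ones, matching the overall 1:3 ratio.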

Regulatory Requirements for AI in Drug Safety

The burgeoning role of AI in early toxicity detection has led to the need for regulatory frameworks that ensure both efficacy and safety in AI-driven methods:

1. Validation and Standardization:

Regulatory agencies such as the FDA have recognized the potential of AI in drug safety but require that these AI models meet high standards of validation. This involves rigorous benchmarking against standardized datasets (e.g., Tox21) and demonstrating reproducible performance across different studies. Standardization initiatives, including open-source toolkits and public data repositories, are being developed to facilitate better validation procedures among AI models for toxicity detection.

2. Documentation and Transparency:

For regulatory approval, it is essential to document the AI model’s performance, training processes, data sources, and decision logic comprehensively. Detailed documentation helps regulators assess the robustness of the AI’s predictions and minimizes potential liabilities that might arise later in the drug development process. Clear guidelines for exposing the inner workings of these algorithms are helping foster trust and wider acceptance.

3. Post-Market Surveillance:

Even once an AI-driven toxicity screening method is approved, regulators require ongoing post-market surveillance to ensure that the model continues to perform accurately as it encounters new chemical entities and real-world data. Continuous monitoring and periodic re-validation of the model are now integral requirements to maintain regulatory compliance in dynamic environments.

4. Ethical Oversight Committees:

Given the ethical challenges related to bias, transparency, and data security, many institutions have established ethical oversight committees to govern the development and deployment of AI technologies. These committees work closely with regulatory bodies to ensure that the AI models not only comply with technical standards but also adhere to ethical expectations in patient care and drug safety.
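The continuous-monitoring requirement described above can be supported by a simple drift check on the model's output scores. The sketch below uses the population stability index (PSI), one common drift statistic, chosen here purely for illustration and not as a method prescribed by any regulator. It assumes scores lie in [0, 1]; the bin count and both score samples are hypothetical.

```python
import math


def bin_fractions(scores, n_bins=5, eps=1e-6):
    """Fraction of scores in each equal-width bin over [0, 1]."""
    counts = [0] * n_bins
    for s in scores:
        index = min(int(s * n_bins), n_bins - 1)  # s == 1.0 goes in last bin
        counts[index] += 1
    # eps keeps empty bins from producing log(0) or division by zero
    return [max(c / len(scores), eps) for c in counts]


def population_stability_index(reference, current, n_bins=5):
    """PSI = sum over bins of (cur - ref) * ln(cur / ref)."""
    ref = bin_fractions(reference, n_bins)
    cur = bin_fractions(current, n_bins)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical predicted-toxicity scores at validation time vs. later.
reference_scores = [0.05, 0.10, 0.15, 0.30, 0.50, 0.70]
current_scores = [0.60, 0.70, 0.80, 0.90, 0.95, 0.85]

psi_same = population_stability_index(reference_scores, reference_scores)
psi_shifted = population_stability_index(reference_scores, current_scores)
print(psi_same, psi_shifted)
```

A PSI of zero means the score distribution is unchanged. A large value suggests the model is now seeing compounds unlike those it was validated on, which would be a reasonable trigger for re-validation.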

Challenges and Future Directions

Current Challenges in AI Implementation

Despite the impressive advances, several challenges remain:

1. Data Quality and Availability:

One of the most significant challenges is the availability of high-quality, diverse datasets. Many toxicity prediction models are hindered by data imbalances, limited positive instances of adverse toxicity events, and heterogeneity in data recording methods. AI models must be designed to handle these deficiencies effectively through techniques such as data augmentation and transfer learning.

2. Interpretability and Trust:

The black-box nature of many deep learning models continues to be a barrier. While interpretability methods are advancing, making AI decisions more transparent is critical, particularly when these decisions influence regulatory and clinical decision-making processes. The challenge is not only to predict that a compound is toxic, but also to elucidate the biological pathways or structural alerts that lead to such predictions.

3. Integration with Traditional Methods:

Integrating AI predictions with traditional experimental methods requires reconciliation between in silico models and in vitro/in vivo toxicology assays. AI will most effectively support early toxicity detection if it can complement established methods. Developing frameworks that seamlessly integrate predictive models with experimental validation is an ongoing area of research.

4. Computational Resource Demands:

Advanced models, particularly those utilizing deep learning or graph neural networks, demand considerable computational resources. Although cloud computing and specialized hardware (such as GPUs) have alleviated some of this burden, the scalability of such systems remains a concern, especially for smaller research institutions or startups.

5. Ethical and Regulatory Hesitation:

As mentioned earlier, the ethical implications of AI decisions, including issues of bias and data privacy, as well as the stringent requirements of regulatory agencies, often slow the adoption of AI methodologies in drug safety. Overcoming these challenges requires collaboration across disciplines, as well as investments not only in technology but also in developing comprehensive legal frameworks.
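The interpretability challenge above asks which structural features drive a toxicity prediction. One simple, model-agnostic way to probe this is leave-one-out (occlusion) attribution: remove one fragment at a time and record how much the predicted score changes. In the sketch below, `toy_toxicity_score` is a hypothetical stand-in for a trained model, and its fragment weights are invented for illustration only. The sketch also assumes each fragment name appears once per molecule.

```python
def toy_toxicity_score(fragments):
    """Hypothetical stand-in for a trained model (weights are made up)."""
    weights = {"nitro": 0.5, "aromatic_amine": 0.3, "hydroxyl": -0.1}
    return sum(weights.get(f, 0.0) for f in fragments)


def occlusion_attribution(fragments, score_fn):
    """Attribution of a fragment = score with it minus score without it."""
    full_score = score_fn(fragments)
    attributions = {}
    for fragment in fragments:
        remaining = [g for g in fragments if g != fragment]
        attributions[fragment] = full_score - score_fn(remaining)
    return attributions


molecule = ["nitro", "aromatic_amine", "hydroxyl"]
attributions = occlusion_attribution(molecule, toy_toxicity_score)
top_fragment = max(attributions, key=attributions.get)
print(attributions, top_fragment)
```

The same procedure works with any scoring function, including a nonlinear network, because it only needs to call the model on modified inputs. For nonlinear models the attributions will generally not sum to the full score.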

Future Prospects and Research Directions in AI for Drug Toxicity

Looking forward, several promising avenues and research directions can further enhance the role of AI in early toxicity detection:

1. Advancements in Explainable AI (XAI):

Future research will likely focus on developing more robust XAI techniques that provide clear explanations for toxicity predictions, thereby increasing trust among regulators, clinicians, and researchers alike. Improved interpretability will also help in refining the models by pinpointing specific molecular substructures that contribute to toxicity, leading to better drug design and safety profiles.

2. Integration of Multi-Omics Data:

The next frontier involves integrating multi-omics datasets—such as genomics, proteomics, and metabolomics—with traditional chemical data. By doing so, AI models can capture the multi-layered nature of toxicity, enabling predictions that encompass not only chemical structure but also biological context and patient-specific factors. This holistic approach is expected to enhance both predictive accuracy and clinical relevance.

3. Enhanced Multitask and Hybrid Models:

Hybrid models that combine the strengths of deep learning with traditional statistical methods may overcome current limitations. Multitask models can simultaneously predict several toxicity endpoints and, when combined with mechanistic models that account for biological pathways, can provide more comprehensive safety evaluations. Future iterations may include hierarchical models that mimic biological organization, from molecular to system-level toxicity.

4. Integration With Laboratory Automation:

As laboratory automation increases in prevalence, AI models can be continually refined in near real-time by feeding back experimental data from high-throughput screens. This continuous learning environment—sometimes referred to as a “closed-loop” drug discovery approach—will further shorten the cycle times from prediction to experimental validation, making early detection of toxicity more dynamic and responsive.

5. Digital Twin Technology:

The concept of digital twins—virtual counterparts to physical systems—may soon be applied to toxicology. Digital twins of organs or even complete human systems might be generated using multi-scale data, allowing AI systems to simulate and predict how drugs interact with human biology in real-time. This would revolutionize early toxicity detection, shifting from static assay-based predictions to dynamic, personalized simulations.

6. Collaborative Platforms and Open Data Initiatives:

Moving toward open, collaborative platforms is essential. Initiatives that encourage data sharing among academic institutions, pharmaceutical companies, and regulatory bodies will enable the development of more generalized and robust models. Government and industry partnerships could standardize data collection protocols, which in turn would foster improved AI model development for toxicity detection.

7. Regulatory Innovation:

As AI models become more integral to early toxicity detection, regulatory bodies will continue to evolve and update their guidelines. This may include new frameworks specifically tailored for validating in silico toxicity models, ensuring that AI-driven decisions are auditable and reproducible throughout the drug development lifecycle. Future regulations might mandate specific transparency and explainability metrics for AI-based models used in safety evaluations.

8. Personalized Toxicity Prediction:

The integration of patient-specific data such as genetic information and biomarkers into AI models offers the potential for personalized toxicity prediction. Such personalized models could forecast how individual patients might react to specific compounds, providing early signals that help tailor drug dosages and even lead to individualized treatment plans that minimize adverse effects.
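The closed-loop idea in the laboratory-automation item above depends on choosing which compounds to send back to the bench. One simple selection rule, shown here as an assumption-laden sketch rather than an established protocol, is uncertainty sampling: assay the compounds whose predicted toxicity probability is closest to 0.5, since their measured outcomes are likely to teach the model the most. The compound IDs and probabilities are hypothetical.

```python
def select_for_assay(predictions, batch_size):
    """Pick the compounds whose predicted probability is nearest to 0.5."""
    ranked = sorted(predictions.items(), key=lambda item: abs(item[1] - 0.5))
    return [compound_id for compound_id, _ in ranked[:batch_size]]


# Hypothetical model outputs for five untested compounds.
predictions = {
    "cmpd_1": 0.95,
    "cmpd_2": 0.52,
    "cmpd_3": 0.10,
    "cmpd_4": 0.40,
    "cmpd_5": 0.75,
}

next_batch = select_for_assay(predictions, batch_size=2)
print(next_batch)
```

In a full loop, the assay results for `next_batch` would be added to the training set, the model retrained, and the selection repeated.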

Conclusion

In summary, AI assists in the early detection of drug toxicity by leveraging a suite of advanced computational techniques that surpass traditional toxicology assays in both speed and accuracy. By integrating vast and heterogeneous datasets—from molecular descriptors to multi-omics data and clinical records—AI-based models such as deep neural networks, graph neural networks, and multitask learning frameworks provide enhanced predictive capabilities. These technologies allow researchers to quickly flag potentially toxic compounds during the early phases of drug development, thereby significantly reducing the financial and temporal burdens of late‐stage failures.

Alongside improvements in predictive accuracy and fast processing speeds, AI technologies have also improved the overall safety profile assessments by providing early warnings about adverse effects such as hepatotoxicity, mitochondrial dysfunction, and neuronal toxicity. Case studies—ranging from the co-culture organoid systems in toxicity evaluation platforms to advanced multitask deep learning models that analyze in vitro data—demonstrate real-world applications where AI has accelerated toxicity screening processes. The integration of AI with traditional methods not only widens the scope of detectable toxic effects but also provides a complementary approach that enriches the overall scientific understanding of drug safety.

Ethically, the introduction of these AI systems presents challenges regarding the interpretability of their “black-box” predictions, bias in training data, and issues related to patient data privacy and security. Regulatory agencies are actively working on formulating guidelines that mandate transparency, comprehensive documentation, and rigorous post-market surveillance of AI models to establish trust and ensure patient safety during drug development.

Looking toward the future, research in AI for drug toxicity detection is expected to focus on making models more interpretable through explainable AI techniques, integrating multi-omics data for a more comprehensive assessment, adopting hybrid and multitask learning approaches, and creating digital twins for dynamic simulation of drug response. Continuous data sharing, infrastructure improvements, and an evolving regulatory landscape will further enhance the applications of AI in predicting drug toxicity, leading to more efficient, cost-effective, and safer drug development pipelines.

Overall, AI’s multifaceted approach—combining cutting-edge computational methods, broad data integration, and continuous learning mechanisms—holds the promise of dramatically improving early drug toxicity detection. By addressing current challenges and aligning with ethical and regulatory requirements, AI-driven platforms are poised to reshape how pharmaceutical companies develop new therapies, ensuring that only compounds with a favorable safety profile progress further into clinical trials. This promises not only to reduce financial risk and shorten development timelines but also ultimately to improve patient outcomes and public health.

In conclusion, AI is transforming the early detection of drug toxicity through enhanced accuracy, speed, and integration capabilities. The benefits of this transformative approach extend beyond laboratory efficiency into the realms of personalized medicine, regulatory adherence, and ethical healthcare innovations. As the field advances further with improved algorithms, robust data infrastructures, and collaborative partnerships across the industry, AI will undoubtedly continue to play a pivotal role in ensuring drug safety while fostering the development of novel therapies with reduced adverse risks.

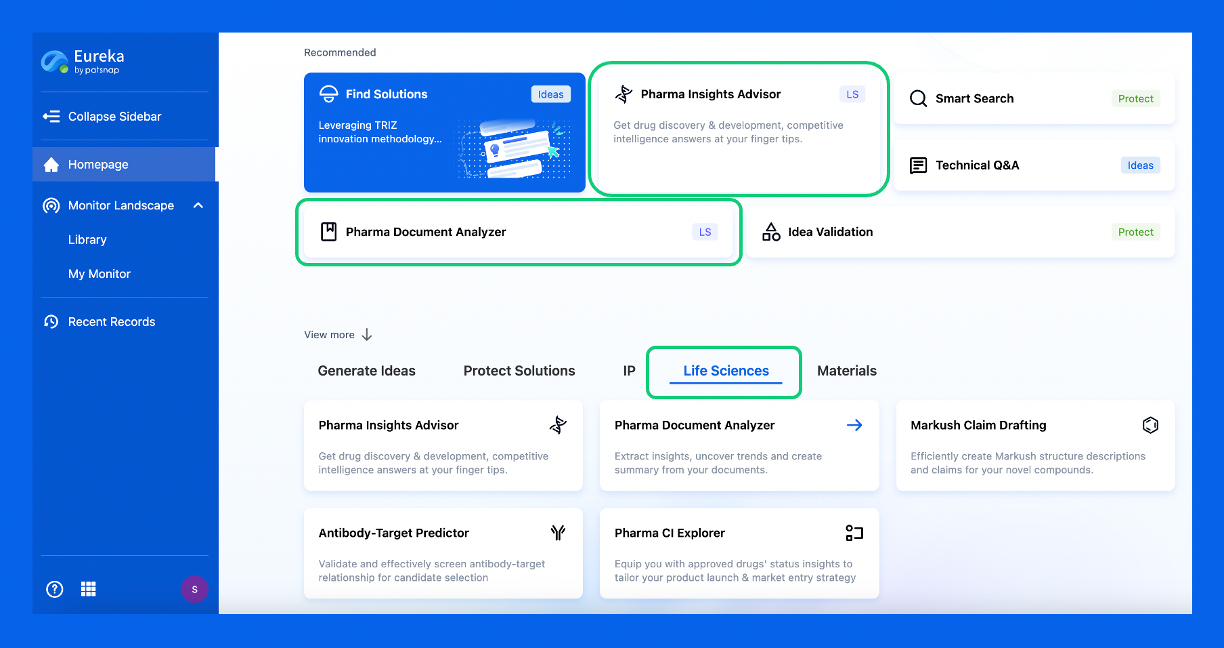

Discover Eureka LS: AI Agents Built for Biopharma Efficiency

Stop wasting time on biopharma busywork. Meet Eureka LS - your AI agent squad for drug discovery.

▶ See how 50+ research teams saved 300+ hours/month

From reducing screening time to simplifying Markush drafting, our AI Agents are ready to deliver immediate value. Explore Eureka LS today and unlock powerful capabilities that help you innovate with confidence.

AI Agents Built for Biopharma Breakthroughs

Accelerate discovery. Empower decisions. Transform outcomes.

Get started for free today!

Accelerate Strategic R&D decision making with Synapse, PatSnap’s AI-powered Connected Innovation Intelligence Platform Built for Life Sciences Professionals.

Start your data trial now!

Synapse data is also accessible to external entities via APIs or data packages. Empower better decisions with the latest in pharmaceutical intelligence.