How to avoid overfitting in bioinformatics models?

29 May 2025

Introduction

In the rapidly evolving field of bioinformatics, building robust models to analyze biological data and make predictions from it is fundamental. However, one of the most common pitfalls researchers encounter is overfitting: a model learns the training data too well, including its noise and outliers, and as a result performs poorly on new, unseen data. This post explores strategies for mitigating overfitting in bioinformatics models so that they generalize well and produce reliable predictions.

Understanding Overfitting in Bioinformatics

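Before diving into solutions, it helps to understand why overfitting is so common in this field. Bioinformatics models often work with high-dimensional data, such as gene expression profiles or encoded protein sequences, where the number of features far exceeds the number of samples. With thousands of features and only tens or hundreds of samples, a flexible model can almost always find some combination of features that fits the training labels perfectly, even when that combination arises purely by chance. The model then memorizes the training data rather than learning the underlying biological patterns, and it generalizes poorly.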
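
To see the "more features than samples" problem concretely, here is a minimal sketch in Python, assuming NumPy and scikit-learn are available (neither is mentioned in this post). It builds a synthetic matrix of pure noise with 60 samples and 5,000 features, assigns labels at random, and fits a logistic regression. The sample and feature counts are illustrative choices, not values from a real study.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic stand-in for an expression matrix: 60 samples x 5,000 features of
# pure noise, with labels assigned at random, so there is no real signal.
rng = np.random.default_rng(0)
X = rng.normal(size=(60, 5000))
y = rng.integers(0, 2, size=60)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.5, random_state=0, stratify=y
)

# scikit-learn's default logistic regression (mild L2 penalty), no feature selection.
model = LogisticRegression(max_iter=5000)
model.fit(X_train, y_train)

train_acc = model.score(X_train, y_train)
test_acc = model.score(X_test, y_test)
print(f"train accuracy: {train_acc:.2f}, test accuracy: {test_acc:.2f}")
```

With 30 training samples in 5,000 dimensions, the model separates the training labels perfectly, while its accuracy on the held-out half stays near chance. Because the data contains no signal by construction, the training fit is pure memorization.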

Regularization Techniques

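Regularization is one of the most widely used ways to reduce overfitting. It adds a penalty term to the model's loss function that discourages excessive complexity. The two most common forms are L1 regularization (the lasso), which penalizes the sum of the absolute values of the coefficients and drives many of them to exactly zero, and L2 regularization (ridge), which penalizes the sum of the squared coefficients and shrinks all of them toward zero without eliminating any. In bioinformatics, the sparse solutions produced by L1 regularization are particularly useful for genomic data, because a model that relies on a short list of genes is easier to interpret than one that spreads small weights across thousands.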
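
The contrast between L1 and L2 penalties shows up clearly when you count non-zero coefficients. The sketch below assumes scikit-learn and uses `make_classification` to simulate a dataset with 2,000 features, of which only 10 are informative. The regularization strength `C=0.1` is an arbitrary illustrative value; in practice it would be tuned by cross-validation.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for a genomic matrix: 100 samples, 2,000 features,
# only 10 of which carry signal (an assumption made for illustration).
X, y = make_classification(
    n_samples=100, n_features=2000, n_informative=10,
    n_redundant=0, random_state=0,
)
# Penalties assume comparable feature scales. Here the whole matrix is scaled
# because nothing is evaluated; in a real workflow, scale inside a pipeline.
X = StandardScaler().fit_transform(X)

# C is the inverse of the regularization strength: smaller C = stronger penalty.
l1_model = LogisticRegression(penalty="l1", solver="liblinear", C=0.1).fit(X, y)
l2_model = LogisticRegression(penalty="l2", C=0.1, max_iter=5000).fit(X, y)

n_nonzero_l1 = int(np.count_nonzero(l1_model.coef_))
n_nonzero_l2 = int(np.count_nonzero(l2_model.coef_))
print(f"non-zero coefficients: L1 = {n_nonzero_l1}, L2 = {n_nonzero_l2}")
```

The L1 model keeps only a small subset of coefficients, whereas the L2 model assigns some weight to essentially every feature. The surviving L1 features form a candidate gene list that can be inspected directly.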

Feature Selection and Dimensionality Reduction

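Bioinformatics datasets often contain thousands of features, many of which may be irrelevant or redundant. Feature selection methods, such as recursive feature elimination or mutual information-based selection, can help identify the most informative variables. Dimensionality reduction techniques offer a complementary approach: Principal Component Analysis (PCA), for example, projects the data onto a small number of components that retain as much of the variance as possible. t-distributed Stochastic Neighbor Embedding (t-SNE) is also popular, but it is primarily a visualization tool. It does not learn a mapping that can be applied to new samples, so it is poorly suited as a preprocessing step for predictive models. Whichever method you use, fit it only on the training data (within each cross-validation fold); selecting features on the full dataset before evaluation leaks information from the test samples and produces optimistically biased performance estimates.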
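
A practical way to apply these techniques without leaking information from evaluation samples is to place the selection or reduction step inside a scikit-learn `Pipeline`. This way it is refit on the training portion of each cross-validation fold. The sketch below assumes scikit-learn and a synthetic dataset, and compares mutual information-based selection (`SelectKBest` with `mutual_info_classif`) with PCA. The values `k=50` and `n_components=20` are arbitrary placeholders that would normally be tuned.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, mutual_info_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic high-dimensional data: 120 samples, 500 features, 15 informative.
X, y = make_classification(
    n_samples=120, n_features=500, n_informative=15,
    n_redundant=0, random_state=0,
)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# Option 1: keep the 50 features with the highest estimated mutual information.
mi_pipeline = make_pipeline(
    StandardScaler(),
    SelectKBest(score_func=mutual_info_classif, k=50),
    LogisticRegression(max_iter=5000),
)

# Option 2: project onto the first 20 principal components.
pca_pipeline = make_pipeline(
    StandardScaler(),
    PCA(n_components=20),
    LogisticRegression(max_iter=5000),
)

results = {}
for name, pipe in [("mutual information", mi_pipeline), ("PCA", pca_pipeline)]:
    # cross_val_score refits every pipeline step on each training fold,
    # so selection and PCA never see the corresponding validation fold.
    scores = cross_val_score(pipe, X, y, cv=cv)
    results[name] = scores.mean()
    print(f"{name}: mean CV accuracy {scores.mean():.2f} (+/- {scores.std():.2f})")
```

Recursive feature elimination (`RFE`) can be dropped into the same pipeline slot in place of `SelectKBest`.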

Cross-Validation Strategies

Cross-validation is an effective way to assess how well a model generalizes. By repeatedly partitioning the data into training and validation sets, researchers can check whether the model's performance is consistent across different subsets of the data. Techniques such as k-fold cross-validation give a more reliable performance estimate than a single train-test split, which is especially valuable when samples are scarce. A large gap between training performance and cross-validated performance is a clear sign of overfitting, so cross-validation helps detect the problem early in the modeling process.
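
Here is a minimal sketch of k-fold cross-validation, assuming scikit-learn. `cross_validate` with `return_train_score=True` reports both training and validation accuracy for each of five stratified folds. An unpruned decision tree on synthetic high-dimensional data serves as a deliberately overfit-prone example; the dataset and model are illustrative stand-ins.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.tree import DecisionTreeClassifier

# Synthetic data: 150 samples, 500 features, only 10 informative.
X, y = make_classification(
    n_samples=150, n_features=500, n_informative=10,
    n_redundant=0, random_state=1,
)

# Stratified folds keep class proportions similar in every fold, which matters
# for the imbalanced case/control designs common in biomedical data.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=1)

# A fully grown decision tree is a deliberately overfit-prone model.
tree = DecisionTreeClassifier(random_state=1)
result = cross_validate(tree, X, y, cv=cv, return_train_score=True)

mean_train = result["train_score"].mean()
mean_val = result["test_score"].mean()
print(f"mean train accuracy: {mean_train:.2f}")
print(f"mean validation accuracy: {mean_val:.2f}")
```

Because an unpruned tree fits every training sample, its mean training accuracy here is 1.0. The shortfall in validation accuracy measures how much of that fit was memorization.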

Ensemble Methods

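Ensemble methods, including bagging and boosting, combine the predictions of multiple models to improve accuracy and robustness. In bioinformatics, methods such as Random Forests and Gradient Boosting Machines have been successfully applied to genomics and proteomics data. The two families reduce overfitting in different ways. Bagging, used by Random Forests, trains many models on bootstrap samples of the data and averages their predictions. This reduces variance, because the idiosyncratic errors of individual overfit trees tend to cancel out. Boosting builds models sequentially, each one correcting the errors of the previous ones. This mainly reduces bias, and boosting can itself overfit if run for too many rounds, so it is usually combined with a small learning rate, shallow trees, and early stopping.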
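
The sketch below assumes scikit-learn and synthetic data. It compares a single decision tree with a bagged ensemble (`RandomForestClassifier`) and a boosted one (`GradientBoostingClassifier`) under the same cross-validation folds. The boosting settings (small `learning_rate`, shallow `max_depth`, row `subsample`) are the kind of constraints that keep boosting from overfitting; the specific values are illustrative, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic data: 200 samples, 300 features, 10 informative.
X, y = make_classification(
    n_samples=200, n_features=300, n_informative=10,
    n_redundant=0, random_state=2,
)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=2)

models = {
    # Baseline: one fully grown tree (high variance).
    "single tree": DecisionTreeClassifier(random_state=2),
    # Bagging: many randomized trees on bootstrap samples, predictions averaged.
    "random forest": RandomForestClassifier(n_estimators=300, random_state=2),
    # Boosting: shallow trees added sequentially, each scaled by the learning rate.
    "gradient boosting": GradientBoostingClassifier(
        n_estimators=200, learning_rate=0.05, max_depth=2,
        subsample=0.8, random_state=2,
    ),
}

cv_accuracy = {}
for name, model in models.items():
    cv_accuracy[name] = cross_val_score(model, X, y, cv=cv).mean()
    print(f"{name}: mean CV accuracy {cv_accuracy[name]:.2f}")
```

Evaluating all three on identical folds makes the comparison fair. Any gain from the ensembles should be judged by cross-validated scores, never by training accuracy.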

Early Stopping

Models trained iteratively, such as neural networks and gradient-boosted ensembles, may keep improving on the training data long after they have captured the underlying patterns, at which point further training mostly fits noise. Early stopping monitors the model's performance on a held-out validation set during training and halts once that performance plateaus or begins to degrade, typically keeping the model from the best-scoring iteration. This limits how much noise the model can learn and usually improves generalization.

Conclusion

Avoiding overfitting in bioinformatics models is crucial for ensuring they provide accurate and meaningful predictions. By employing techniques such as regularization, feature selection, cross-validation, ensemble methods, and early stopping, researchers can develop models that are both powerful and generalizable. As bioinformatics continues to grow, mastering these strategies will be essential for unlocking the full potential of biological data and driving innovation in the field.


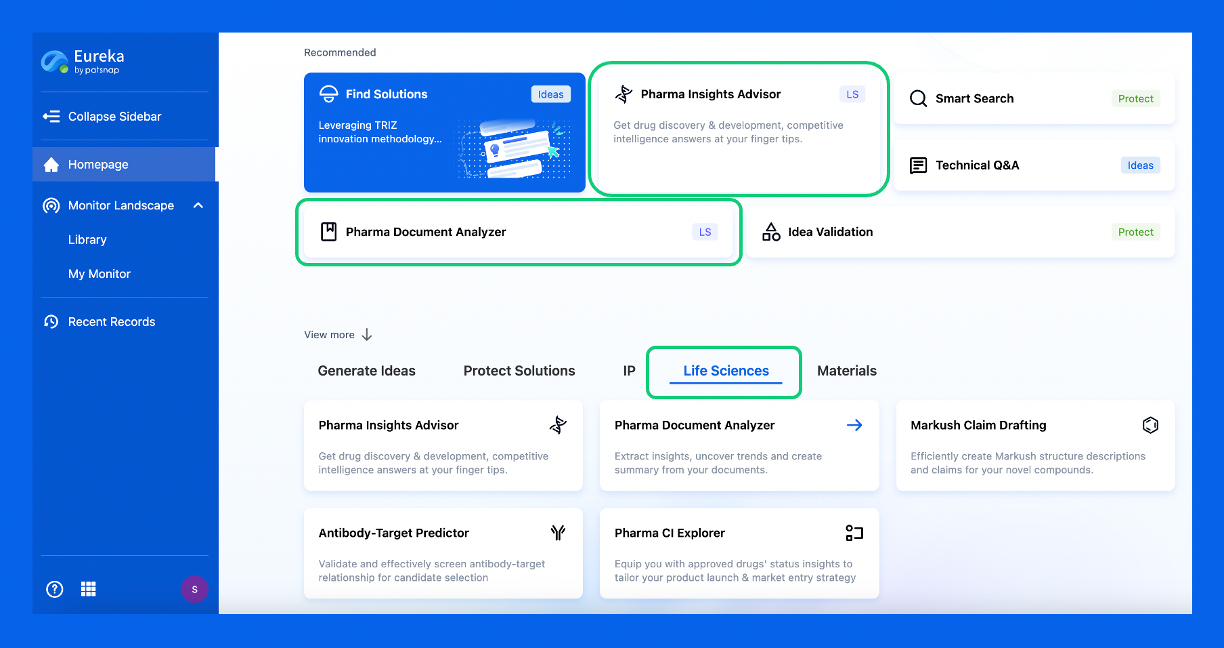

Discover Eureka LS: AI Agents Built for Biopharma Efficiency

Stop wasting time on biopharma busywork. Meet Eureka LS - your AI agent squad for drug discovery.

▶ See how 50+ research teams saved 300+ hours/month

From reducing screening time to simplifying Markush drafting, our AI Agents are ready to deliver immediate value. Explore Eureka LS today and unlock powerful capabilities that help you innovate with confidence.

AI Agents Built for Biopharma Breakthroughs

Accelerate discovery. Empower decisions. Transform outcomes.

Get started for free today!

Accelerate Strategic R&D decision making with Synapse, PatSnap’s AI-powered Connected Innovation Intelligence Platform Built for Life Sciences Professionals.

Start your data trial now!

Synapse data is also accessible to external entities via APIs or data packages. Empower better decisions with the latest in pharmaceutical intelligence.