
How to Validate a Biochemical Method per ICH Guidelines

9 May 2025

Validating a biochemical method according to the International Council for Harmonisation (ICH) guidelines, principally ICH Q2 (Validation of Analytical Procedures), is critical to ensuring that analytical results are reliable, accurate, and consistent. The ICH guidelines harmonize regulatory requirements for pharmaceuticals across regions, so that the methods used to analyze drugs are scientifically sound and produce reproducible results. Validation consists of a series of steps that must be followed carefully to meet regulatory standards.

The first step in method validation is to define the purpose and scope of the method: what it is intended to measure and under what conditions it will be used. This includes specifying the concentration range, the sample type, and any instrumental or procedural conditions that might affect the results. The intended purpose also determines which validation characteristics apply, because ICH Q2 sets different expectations for identification tests, impurity tests, and assays.
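One way to make the scope concrete is to record it as structured data and derive the characteristics to validate from the test type. The sketch below is a minimal illustration, assuming the per-test-type requirements summarized in the ICH Q2(R1) characteristics table. Robustness and the footnoted cases in that table are not modelled. The `MethodScope` class, its fields, and the test-type keys are hypothetical names chosen for this example.

```python
from dataclasses import dataclass

# Characteristics normally evaluated per test type, following the summary
# table in ICH Q2(R1). Footnoted cases (e.g. LOD "may be needed" for
# quantitative impurity tests) are deliberately left out of this sketch.
REQUIRED_CHARACTERISTICS = {
    "identification": {"specificity"},
    "impurity_quantitative": {
        "accuracy", "repeatability", "intermediate_precision",
        "specificity", "LOQ", "linearity", "range",
    },
    "impurity_limit": {"specificity", "LOD"},
    "assay": {
        "accuracy", "repeatability", "intermediate_precision",
        "specificity", "linearity", "range",
    },
}


@dataclass
class MethodScope:
    """Hypothetical record of what a method measures and where it applies."""
    analyte: str
    sample_matrix: str
    test_type: str      # must be a key of REQUIRED_CHARACTERISTICS
    range_low: float    # lower end of the intended working range
    range_high: float   # upper end of the intended working range
    units: str

    def __post_init__(self) -> None:
        if self.test_type not in REQUIRED_CHARACTERISTICS:
            raise ValueError(f"unknown test type: {self.test_type!r}")
        if self.range_low >= self.range_high:
            raise ValueError("range_low must be below range_high")

    def characteristics_to_validate(self) -> set:
        # Return a copy so callers cannot mutate the shared table.
        return set(REQUIRED_CHARACTERISTICS[self.test_type])


scope = MethodScope("protein X", "formulation buffer", "assay",
                    80.0, 120.0, "% of test concentration")
print(sorted(scope.characteristics_to_validate()))
```

Keeping the requirements in a lookup table means the validation plan follows directly from the stated purpose. If the test type changes, the plan changes with it.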

The next step is to assess the method's specificity. Specificity is the ability to measure the analyte unequivocally in the presence of other components that may be expected in the sample, such as impurities, degradation products, or matrix components. It is tested by analyzing the analyte both alone and together with potential interfering substances, and confirming that the results are unaffected by those other components.
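A simple quantitative check compares the mean response of the analyte alone with its mean response after each candidate interferent is added. The sketch below assumes replicate responses are already available as lists of numbers. The function names and the ±5% acceptance limit are hypothetical; in practice, the limit would be set from the method's intended purpose.

```python
from statistics import mean


def percent_interference(analyte_only, analyte_plus_interferent):
    """Percent change in mean response caused by adding a potential interferent."""
    baseline = mean(analyte_only)
    if baseline == 0:
        raise ValueError("mean analyte-only response must be non-zero")
    return 100.0 * (mean(analyte_plus_interferent) - baseline) / baseline


def specificity_report(analyte_only, with_interferents, max_abs_pct=5.0):
    """Flag each interferent whose effect stays within +/- max_abs_pct.

    max_abs_pct = 5.0 is an illustrative acceptance limit, not an ICH value.
    """
    return {
        name: abs(percent_interference(analyte_only, responses)) <= max_abs_pct
        for name, responses in with_interferents.items()
    }


report = specificity_report(
    analyte_only=[100.0, 101.0, 99.0],
    with_interferents={"degradant A": [101.5, 102.0, 100.5],
                       "matrix protein B": [110.0, 111.0, 109.0]},
)
print(report)  # {'degradant A': True, 'matrix protein B': False}
```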

Precision is the closeness of agreement among a series of measurements obtained from multiple sampling of the same homogeneous sample under prescribed conditions. It is evaluated by running replicate analyses and calculating the standard deviation or relative standard deviation (RSD) of the results. ICH considers precision at three levels:

- Repeatability (intra-assay precision) is measured under the same operating conditions over a short interval of time.
- Intermediate precision captures within-laboratory variation, such as different days, analysts, or equipment.
- Reproducibility compares results between laboratories.
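Repeatability and intermediate precision can both be estimated from one grouped data set: replicates measured in several independent runs, where runs differ by day, analyst, or instrument. The sketch below uses a one-way analysis of variance to split the total variance into within-run and between-run components. This is a common statistical approach, but ICH does not prescribe it. The sketch also assumes an equal number of replicates per run. Function names and data are illustrative.

```python
from math import sqrt
from statistics import mean, stdev


def percent_rsd(values):
    """Relative standard deviation (%RSD) of a set of replicate results."""
    return 100.0 * stdev(values) / mean(values)


def precision_components(runs):
    """Estimate repeatability and intermediate precision from grouped replicates.

    runs: list of runs, each a list of replicate results (equal size per run).
    """
    k, n = len(runs), len(runs[0])
    if k < 2 or n < 2 or any(len(r) != n for r in runs):
        raise ValueError("need >= 2 runs, each with the same number (>= 2) of replicates")

    all_values = [x for r in runs for x in r]
    grand_mean = mean(all_values)
    run_means = [mean(r) for r in runs]

    # One-way ANOVA sums of squares and mean squares.
    ss_within = sum((x - m) ** 2 for r, m in zip(runs, run_means) for x in r)
    ss_between = n * sum((m - grand_mean) ** 2 for m in run_means)
    ms_within = ss_within / (k * (n - 1))
    ms_between = ss_between / (k - 1)

    # Between-run variance component, truncated at zero if negative.
    var_between = max(0.0, (ms_between - ms_within) / n)
    s_repeat = sqrt(ms_within)                       # within-run SD
    s_intermediate = sqrt(ms_within + var_between)   # within- plus between-run SD

    return {
        "repeatability_sd": s_repeat,
        "intermediate_precision_sd": s_intermediate,
        "repeatability_rsd_pct": 100.0 * s_repeat / grand_mean,
        "intermediate_precision_rsd_pct": 100.0 * s_intermediate / grand_mean,
    }


# Hypothetical data: three days, three replicates per day.
days = [[99.8, 100.2, 100.1], [101.0, 100.6, 100.9], [99.5, 99.9, 99.7]]
print(percent_rsd(days[0]))
print(precision_components(days))
```

Intermediate precision is always at least as large as repeatability here. Day-to-day variation can only add to the within-run scatter.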

Accuracy is a measure of how close the test results are to the true value or an accepted reference value. It can be determined in two ways: by comparing the test results with those from a well-characterized reference method, or by spiking samples with known quantities of the analyte and calculating the percentage recovered. ICH Q2 recommends a minimum of nine determinations over at least three concentration levels covering the specified range.
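For the spike-recovery approach, the calculation is the measured amount (less any analyte already present) divided by the amount added. The sketch below also checks the minimum design of nine determinations over three levels. It assumes spike amounts and results share the same units. The optional `unspiked` correction is included because biochemical matrices often contain endogenous analyte. Function names and data are hypothetical.

```python
from statistics import mean, stdev


def percent_recovery(measured, spiked_amount, unspiked=0.0):
    """Recovery of a spiked amount, correcting for any endogenous analyte."""
    if spiked_amount <= 0:
        raise ValueError("spiked_amount must be positive")
    return 100.0 * (measured - unspiked) / spiked_amount


def recovery_summary(levels, unspiked=0.0):
    """levels: {spiked amount: [measured results]} -> {amount: (mean %, SD %)}."""
    summary = {}
    for amount, results in levels.items():
        recoveries = [percent_recovery(m, amount, unspiked) for m in results]
        sd = stdev(recoveries) if len(recoveries) > 1 else 0.0
        summary[amount] = (mean(recoveries), sd)
    return summary


def meets_minimum_design(levels):
    """ICH Q2 recommends >= 9 determinations over >= 3 concentration levels."""
    return len(levels) >= 3 and sum(len(r) for r in levels.values()) >= 9


# Hypothetical spike-recovery data (same units for spike and result).
spikes = {
    8.0: [7.9, 8.1, 8.0],
    10.0: [9.9, 10.2, 10.0],
    12.0: [11.8, 12.1, 12.0],
}
print(meets_minimum_design(spikes))
for amount, (rec, sd) in recovery_summary(spikes).items():
    print(f"{amount}: {rec:.1f}% recovery (SD {sd:.2f}%)")
```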

The method's linearity must also be established, demonstrating that, within a specified range, test results are directly proportional to the concentration of the analyte. This is done by analyzing samples of known concentration (ICH recommends a minimum of five concentrations) to generate a calibration curve. The response is then evaluated statistically, for example through the correlation coefficient, slope, y-intercept, and residual sum of squares.
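The statistics listed above can all be obtained from an ordinary least-squares fit. The sketch below computes them and flags whether at least five distinct concentrations were used. It assumes a straight-line model. Many biochemical assays, such as immunoassays, have sigmoidal responses, and a non-linear calibration model (for example a four-parameter logistic) would be fitted instead; this sketch does not cover that case. The function name is illustrative.

```python
from math import sqrt


def linearity_statistics(conc, response):
    """Least-squares line through (conc, response) with the statistics ICH Q2
    suggests reporting: slope, y-intercept, correlation coefficient, and
    residual sum of squares."""
    n = len(conc)
    if n != len(response) or n < 3:
        raise ValueError("need matching x/y lists with at least 3 points")
    mx, my = sum(conc) / n, sum(response) / n
    sxx = sum((x - mx) ** 2 for x in conc)
    syy = sum((y - my) ** 2 for y in response)
    sxy = sum((x - mx) * (y - my) for x, y in zip(conc, response))
    if sxx == 0 or syy == 0:
        raise ValueError("concentrations and responses must both vary")

    slope = sxy / sxx
    intercept = my - slope * mx
    residuals = [y - (intercept + slope * x) for x, y in zip(conc, response)]
    return {
        "slope": slope,
        "intercept": intercept,
        "r": sxy / sqrt(sxx * syy),
        "rss": sum(e * e for e in residuals),
        "residuals": residuals,                 # inspect for curvature or trends
        "enough_levels": len(set(conc)) >= 5,   # ICH Q2: minimum of 5 concentrations
    }


stats = linearity_statistics([1, 2, 3, 4, 5], [3.1, 4.9, 7.0, 9.1, 10.9])
print(stats["slope"], stats["intercept"], stats["r"], stats["rss"])
```

A correlation coefficient close to 1 does not by itself prove linearity. The residuals are returned so that systematic curvature can be spotted.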

Another important consideration is the method's limit of detection (LOD) and limit of quantitation (LOQ). LOD is the smallest amount of analyte that can be detected but not necessarily quantified, while LOQ is the smallest amount that can be quantitatively measured with acceptable precision and accuracy. These parameters are crucial for determining the method's sensitivity.
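ICH Q2 describes several ways to estimate these limits. They include visual evaluation, the signal-to-noise ratio (about 3:1 or 2:1 for LOD, 10:1 for LOQ), and calculation from the standard deviation of the response (σ) and the slope of the calibration curve (S): LOD = 3.3σ/S and LOQ = 10σ/S. The sketch below implements the calculation approach. It assumes σ is taken as the residual standard deviation of a regression line fitted to low-concentration calibration data; σ could equally come from blank measurements or from the standard deviation of y-intercepts. Function names and data are hypothetical. Calculated limits would still need confirmation by analyzing samples at or near those levels.

```python
from math import sqrt


def slope_and_residual_sd(conc, response):
    """Least-squares slope S and residual SD (sigma) of a calibration line."""
    n = len(conc)
    if n < 3 or n != len(response):
        raise ValueError("need matching x/y lists with at least 3 points")
    mx, my = sum(conc) / n, sum(response) / n
    sxx = sum((x - mx) ** 2 for x in conc)
    if sxx == 0:
        raise ValueError("concentrations must vary")
    slope = sum((x - mx) * (y - my) for x, y in zip(conc, response)) / sxx
    intercept = my - slope * mx
    rss = sum((y - intercept - slope * x) ** 2 for x, y in zip(conc, response))
    return slope, sqrt(rss / (n - 2))  # n - 2 degrees of freedom for a line


def lod_loq(sigma, slope):
    """ICH Q2 calculation approach: LOD = 3.3*sigma/S, LOQ = 10*sigma/S."""
    if slope == 0:
        raise ValueError("slope must be non-zero")
    return 3.3 * sigma / abs(slope), 10.0 * sigma / abs(slope)


# Hypothetical low-level calibration data (conc in ng/mL, response in AU).
slope, sigma = slope_and_residual_sd([0.0, 1.0, 2.0, 3.0], [0.1, 1.9, 4.1, 5.9])
lod, loq = lod_loq(sigma, slope)
print(f"LOD = {lod:.3f} ng/mL, LOQ = {loq:.3f} ng/mL")
```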

Robustness is a measure of a method's capacity to remain unaffected by small but deliberate variations in method parameters. This involves testing the method's reliability under slight changes in experimental conditions, such as temperature, pH, or flow rate, and ensuring that results remain consistent.
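A simple way to summarize a robustness study is to compare the mean result under each deliberately varied condition with the mean under nominal conditions. The sketch below assumes one parameter is varied at a time. The condition labels, data, and ±2% tolerance are hypothetical. Multivariate designs, such as fractional factorial experiments, are often used to study several parameters efficiently, but this sketch does not cover them.

```python
from statistics import mean


def robustness_report(nominal, varied, tolerance_pct=2.0):
    """Percent deviation of each varied condition from the nominal mean.

    nominal: replicate results under nominal conditions.
    varied: {condition label: replicate results under that condition}.
    tolerance_pct: illustrative acceptance limit, not an ICH value.
    Returns {label: (deviation %, within tolerance?)}.
    """
    reference = mean(nominal)
    if reference == 0:
        raise ValueError("nominal mean must be non-zero")
    report = {}
    for label, results in varied.items():
        deviation = 100.0 * (mean(results) - reference) / reference
        report[label] = (deviation, abs(deviation) <= tolerance_pct)
    return report


study = robustness_report(
    nominal=[100.0, 100.4, 99.6],
    varied={
        "incubation 35 C (nominal 37 C)": [99.1, 99.5, 98.9],
        "buffer pH 7.2 (nominal 7.4)": [96.0, 96.4, 95.8],
    },
)
for label, (dev, ok) in study.items():
    print(f"{label}: {dev:+.1f}% {'OK' if ok else 'investigate'}")
```

A parameter that fails the check is usually either tightened in the method description or controlled through a system suitability test.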

Finally, the method's range must be established. The range is the interval between the upper and lower concentrations of analyte (inclusive) for which the method has been shown to have a suitable level of precision, accuracy, and linearity. The method must perform reliably across this entire interval to meet its intended analytical needs.
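ICH Q2(R1) gives minimum ranges for some common applications. For the assay of a drug substance or finished product, the minimum is 80 to 120% of the test concentration, and for content uniformity it is 70 to 130%. For impurity tests, the range runs from the reporting level to 120% of the specification. The sketch below checks whether the levels at which accuracy, precision, and linearity were all demonstrated span one of the two percentage-based ranges. It treats the validated range simply as the lowest to highest tested level and requires every tested level to have passed. The function names and data layout are hypothetical.

```python
from typing import Dict, Optional, Tuple

# Minimum ranges (% of test concentration) from ICH Q2(R1) for two applications.
MIN_RANGE_PCT = {
    "assay": (80.0, 120.0),
    "content_uniformity": (70.0, 130.0),
}


def validated_range(level_results: Dict[float, bool]) -> Optional[Tuple[float, float]]:
    """Lowest and highest tested level, or None if any level failed.

    level_results maps a level (% of test concentration) to whether accuracy,
    precision, and linearity acceptance criteria were all met at that level.
    """
    if not level_results or not all(level_results.values()):
        return None
    return min(level_results), max(level_results)


def covers_minimum_range(level_results: Dict[float, bool], application: str) -> bool:
    """True if the validated range spans the minimum range for the application."""
    rng = validated_range(level_results)
    if rng is None:
        return False
    low, high = MIN_RANGE_PCT[application]
    return rng[0] <= low and rng[1] >= high


results = {80.0: True, 90.0: True, 100.0: True, 110.0: True, 120.0: True}
print(validated_range(results), covers_minimum_range(results, "assay"))
print(covers_minimum_range(results, "content_uniformity"))
```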

Together, these validation characteristics demonstrate that a biochemical method is fit for its intended purpose. Following the ICH guidelines ensures that the method is scientifically valid and complies with regulatory standards, which in turn supports the safety and efficacy of pharmaceutical products. Rigorously validated methods give laboratories high-quality, reliable results on which to base critical decisions in drug development and quality control.

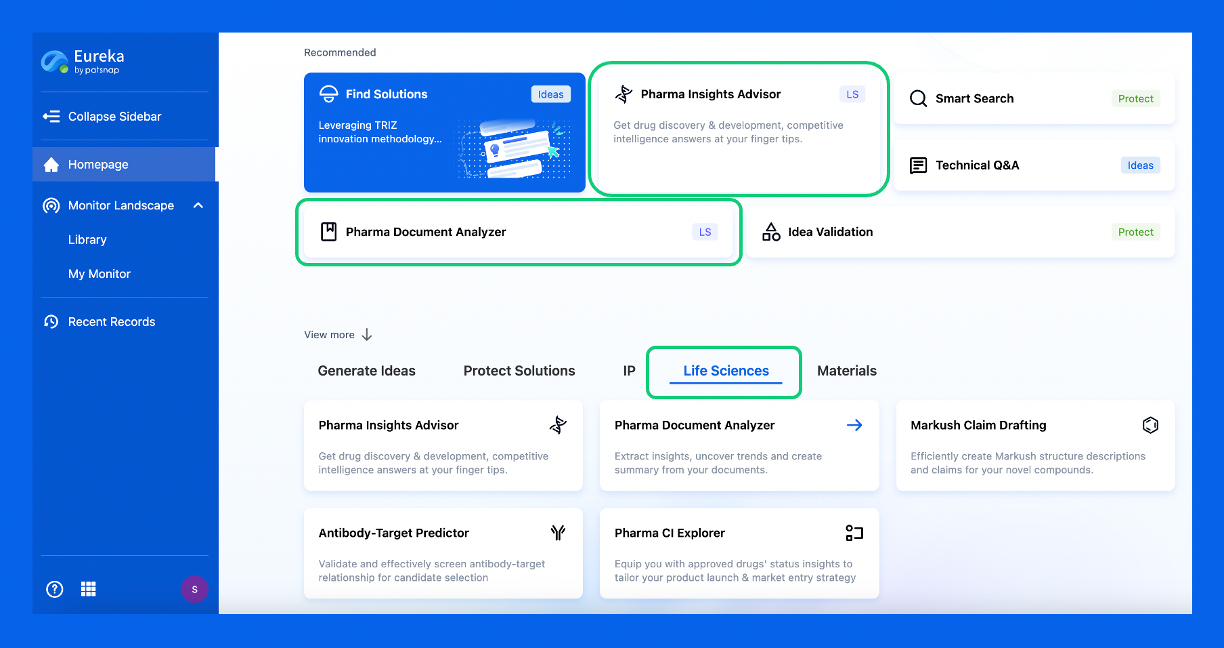
