What are the main stages in drug discovery and development?

21 March 2025

Overview of Drug Discovery and Development

Definition and Importance

Drug discovery and development is the complex, multi‐step process that transforms initial biological ideas into safe and effective therapeutic medicines. At its core, drug discovery involves identifying potential targets and designing compounds that interact with these targets, whereas drug development focuses on evaluating, refining, and ultimately approving these compounds for clinical use. The entire pathway—from bench research through preclinical safety testing and clinical trials to final regulatory approval—is essential as it ensures that only compounds with a favorable risk–benefit profile reach patients. This process is supported by a vast array of scientific disciplines including biology, chemistry, pharmacology, toxicology, clinical science, and regulatory affairs, making it inherently multidisciplinary. Its importance is underscored by the need to address unmet medical needs, reduce the incidence of treatment failures in humans, and optimize drug safety and efficacy.

Historical Evolution

Historically, drug discovery began with the isolation and refinement of natural products, as early physicians extracted remedies directly from nature. Over time, advances in organic chemistry allowed for synthetic modification and rational design. The field has evolved from “trial-and-error” methods to modern approaches integrating high-throughput screening (HTS) and computer-aided drug design (CADD). Early paradigms now coexist with sophisticated methods to optimize targets and reduce attrition rates by incorporating improvements in cell culture models, animal testing, and in silico techniques. Over the decades, the evolution of regulation—from initial Hippocratic principles to today’s rigorous Good Laboratory Practice (GLP) standards—has coupled regulatory science with scientific innovation. These advances have not only shortened the development timeline in some cases but have also improved the overall probability of successful translation from molecule to market.

Stages of Drug Discovery

Drug discovery is a highly iterative process that begins with the identification and validation of a biological target, proceeds to the identification of molecules (hits) that interact with that target, and ends with refinement (lead optimization) to produce a promising drug candidate.

Target Identification and Validation

Target identification is the first and arguably most critical step in drug discovery. It involves discovering a biological molecule (such as a protein, receptor, enzyme, gene, or ion channel) that is integral to a disease’s pathology. A robust initial target is essential because it directs subsequent research and resource investment. Researchers draw on diverse data sources, from genomic and proteomic studies to systems biology insights, to confirm that the identified target plays a central role in the disease state.

Target validation follows target identification to establish that modulating this target will have a therapeutic effect. This stage relies on multiple approaches. For example, genetic manipulation techniques such as RNA interference (RNAi) or CRISPR can knock down or knock out the target gene, demonstrating its potential clinical relevance. Pharmacological validation, meanwhile, uses chemical inhibitors or modulators in preclinical models to show that altering target function yields a beneficial outcome. Data from affinity-based pull-down experiments, label-free methods, and computational screening further enhance target validation by confirming binding interactions and downstream effects. Validation methods not only confirm the role of the target but are also critical for predicting safety and efficacy in later stages.

Lead Compound Identification

Once a target is validated, the next step is to discover molecules that interact with and modulate the target’s activity. Lead compound identification uses high-throughput screening and other techniques to sift through thousands to millions of compounds looking for “hits” that bind to the target. In modern drug discovery, hit identification can involve large chemical libraries screened in in vitro assays that measure binding affinity, biological activity, and preliminary safety parameters.

Screening techniques have evolved from early ligand-binding assays to sophisticated cell-based systems and real-time biophysical measurements. HTS platforms can use robotic systems and computer algorithms to rapidly quantify interactions, while virtual screening approaches such as structure-based drug design (SBDD) and ligand-based drug design (LBDD) use in silico methods to predict binding modes using computational molecular modeling. These methods are complemented by data from high-throughput experimental studies to select the most promising candidates. This stage is not only guided by physicochemical properties but also by predictive models of absorption, distribution, metabolism, excretion, and toxicity (ADMET), which are critical to determine if a hit might be viable as a drug candidate.
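
The physicochemical triage described above can be sketched with a simple filter. The example below uses Lipinski's rule of five, a widely used drug-likeness heuristic that the article does not name, as a stand-in for whatever property filter a given program applies. The `Hit` class, the compound names, and all descriptor values are hypothetical; in practice the descriptors would come from a cheminformatics toolkit or from measurement.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    """Precomputed descriptors for one screening hit (all values hypothetical)."""
    name: str
    mol_weight: float       # molecular weight in daltons
    log_p: float            # octanol-water partition coefficient (lipophilicity)
    h_bond_donors: int
    h_bond_acceptors: int


def lipinski_violations(hit: Hit) -> int:
    """Count how many of the four rule-of-five criteria the hit breaks."""
    checks = [
        hit.mol_weight > 500,
        hit.log_p > 5,
        hit.h_bond_donors > 5,
        hit.h_bond_acceptors > 10,
    ]
    return sum(checks)


def passes_rule_of_five(hit: Hit, max_violations: int = 1) -> bool:
    """Common convention: tolerate at most one violation."""
    return lipinski_violations(hit) <= max_violations


# Two made-up hits: one small and moderately lipophilic, one large and greasy.
hits = [
    Hit("hit-A", 342.4, 2.1, 2, 5),
    Hit("hit-B", 712.9, 6.3, 4, 9),
]
shortlist = [h.name for h in hits if passes_rule_of_five(h)]
print(shortlist)
```

A filter like this is only a first pass. It says nothing about potency or toxicity, which is why the article pairs physicochemical properties with predictive ADMET models.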

Lead Optimization

After identifying one or more promising hit compounds, the process advances to lead optimization—a phase where medicinal chemists systematically modify the chemical structure to improve potency, selectivity, drug-likeness, and safety. Lead optimization is highly iterative and involves synthesizing analogue series, structure–activity relationship (SAR) studies, and quantitative structure–activity relationship (QSAR) modeling.

During this stage, compounds are evaluated for their pharmacokinetic properties and toxicity profiles using both in silico and in vitro experiments. For example, computer-aided drug design (CADD) can predict which modifications will most improve a compound’s binding affinity or metabolic stability, whereas experimental work such as high-throughput affinity screening often directs further chemical refinement. Factors such as molecular weight, lipophilicity, solubility, and the nature of functional groups are carefully adjusted. The goal of lead optimization is to generate candidates that possess a balanced profile: high affinity for the target, acceptable pharmacokinetics, minimal off-target activity, and an acceptable safety margin.

The iterative nature requires assessing developmental risks and frequently includes a “go/no-go” decision-making process at multiple checkpoints. This approach helps narrow the candidate pool so that only the highest quality and most promising leads move forward to development.

Stages of Drug Development

Once an optimized lead compound emerges from the discovery phase, the process shifts to drug development. These stages are designed to rigorously test the compound’s safety and efficacy before it can be approved for clinical use.

Preclinical Testing

Preclinical testing is the stage at which the candidate drug is evaluated through a series of in vitro and in vivo experiments. In vitro studies use cell-based assays (2D or 3D cultures) to assess biological activity, ADME properties, and initial safety. More sophisticated models, such as organ-on-a-chip (OoC) platforms or engineered tissue models, are increasingly used to better recapitulate the human microenvironment and predict outcomes more reliably.

In vivo studies typically involve animal models. These studies provide critical data on the pharmacodynamics (PD) and pharmacokinetics (PK) of the compound, its metabolism, bioavailability, and toxicity profiles. Standardized protocols aligned with Good Laboratory Practice (GLP) and guidelines set out by the OECD and ICH are followed to ensure reproducibility and regulatory compliance. Preclinical phases serve as the final gate before the initiation of human trials, with a primary focus on safety, although they also provide early proof-of-concept efficacy data.

Preclinical testing is also used to determine the no-observed-adverse-effect level (NOAEL), which informs the safe starting dose for Phase I clinical trials. The extensive preclinical data gathered are therefore crucial for persuading regulatory authorities of the drug’s safety profile and its potential for clinical benefit.
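
As a rough illustration of how an animal NOAEL can inform a first-in-human dose, the sketch below applies the body-surface-area conversion described in the FDA's 2005 guidance on estimating a maximum safe starting dose. The article does not specify a method, so this is one common approach rather than the only one. The Km factors are the standard tabulated values; the rat NOAEL of 50 mg/kg and the default safety factor of 10 are assumptions chosen for the example.

```python
# Body-surface-area conversion factors (Km), standard tabulated values.
KM = {"mouse": 3, "rat": 6, "dog": 20, "human": 37}


def human_equivalent_dose(noael_mg_per_kg: float, species: str) -> float:
    """Convert an animal NOAEL (mg/kg) to a human equivalent dose (mg/kg)."""
    return noael_mg_per_kg * KM[species] / KM["human"]


def max_recommended_starting_dose(
    noael_mg_per_kg: float, species: str, safety_factor: float = 10.0
) -> float:
    """Divide the human equivalent dose by a safety factor (assumed 10 here)."""
    return human_equivalent_dose(noael_mg_per_kg, species) / safety_factor


# Hypothetical input: a NOAEL of 50 mg/kg observed in a rat toxicology study.
hed = human_equivalent_dose(50.0, "rat")
mrsd = max_recommended_starting_dose(50.0, "rat")
print(f"HED  = {hed:.2f} mg/kg")
print(f"MRSD = {mrsd:.2f} mg/kg")
```

In practice the most sensitive relevant species usually drives the calculation, and the safety factor may be raised or lowered depending on the toxicity findings.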

Clinical Trials

Clinical trials are the human testing phases of drug development, designed to evaluate safety, tolerability, efficacy, and dosing regimens. They occur in several sequential phases with increasing participant numbers and complexity.

Phase I

Phase I clinical trials are typically the first stage of testing in humans and involve a small group of healthy volunteers (or patients in cases of oncology or other high-risk treatments). These studies are primarily focused on safety and pharmacokinetics. Researchers gather data on absorption, distribution, metabolism, and excretion (ADME) in humans and determine the maximum tolerated dose while monitoring for side effects. In some instances, Phase I may include both single ascending-dose and multiple ascending-dose studies. The information garnered here provides the foundation for dosing and safety monitoring in subsequent phases.
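
One traditional way to search for the maximum tolerated dose is the rule-based "3+3" escalation design. The article does not say which design is used, so the sketch below is an assumption for illustration. The dose ladder and the dose-limiting toxicity (DLT) counts are made up, and the function names are hypothetical.

```python
def three_plus_three_decision(n_treated: int, n_dlt: int) -> str:
    """Decision for the current dose under the classic 3+3 rule.

    n_treated: patients treated at this dose so far (3 or 6)
    n_dlt:     how many of them had a dose-limiting toxicity
    """
    if n_treated == 3:
        if n_dlt == 0:
            return "escalate"
        if n_dlt == 1:
            return "expand"  # treat 3 more patients at the same dose
        return "stop"
    if n_treated == 6:
        return "escalate" if n_dlt <= 1 else "stop"
    raise ValueError("3+3 cohorts have 3 or 6 patients")


def highest_cleared_dose(doses, dlts_by_dose):
    """Walk up the dose ladder; return the last dose that was not stopped.

    dlts_by_dose[dose] is a pair: (DLTs in the first 3 patients,
    DLTs in the 3 extra patients, used only if the cohort is expanded).
    """
    cleared = None
    for dose in doses:
        first, extra = dlts_by_dose[dose]
        decision = three_plus_three_decision(3, first)
        if decision == "expand":
            decision = three_plus_three_decision(6, first + extra)
        if decision == "stop":
            break
        cleared = dose
    return cleared


# Made-up trial: doses in mg and observed DLT counts per cohort.
doses = [10, 20, 40, 80]
observed = {10: (0, 0), 20: (1, 0), 40: (1, 1), 80: (0, 0)}
print(highest_cleared_dose(doses, observed))
```

This simplified walk treats the last cleared dose as the estimate of the maximum tolerated dose. Real protocols add details such as confirming that dose in six patients, and many modern trials use model-based designs instead.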

Phase II

Phase II trials move from small-scale safety studies to more formal efficacy assessments in a patient population that has the target disease. These studies provide preliminary evidence of therapeutic activity while continuing to evaluate safety and further define the side effect profile. Phase II is often divided into early “proof-of-concept” or “pilot” studies (Phase IIa) and dose-ranging studies (Phase IIb) that identify the dose offering the best balance of clinical benefit and tolerability. The design of Phase II is critical; it must balance the need for statistically significant results with efficient resource allocation. Sample sizes are typically larger than in Phase I, often reaching hundreds of patients, to obtain initial estimates of the drug’s efficacy and safety.
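
The trade-off between statistical power and resources can be made concrete with a sample size calculation. The sketch below uses the standard normal-approximation formula for comparing two response rates. The response rates of 30% and 50%, the two-sided alpha of 0.05, and the 80% power are assumptions chosen for illustration, not values from the article.

```python
import math
from statistics import NormalDist


def patients_per_arm(
    p_control: float, p_treatment: float, alpha: float = 0.05, power: float = 0.80
) -> int:
    """Approximate patients needed per arm to compare two proportions."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    effect = p_treatment - p_control
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical design: 30% response on control, 50% hoped for on treatment.
n_80 = patients_per_arm(0.30, 0.50)
n_90 = patients_per_arm(0.30, 0.50, power=0.90)
print(n_80, n_90)
```

Raising the power from 80% to 90% increases the required enrollment, which is the resource cost the paragraph above refers to. Real trials also adjust for dropouts and interim analyses.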

Phase III

Phase III clinical trials are pivotal studies designed to definitively establish the drug’s efficacy and safety in a large, diverse patient population. They are randomized, controlled, and often multicenter, sometimes multinational. The sample sizes in Phase III usually range in the thousands, which allows for more rigorous statistical analysis and detection of less common adverse events. The data from Phase III serve as the main body of evidence for regulatory submissions. Phase III trials are both expensive and time-consuming, sometimes taking several years to complete because they must meet stringent standards and adequately represent the target population.
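
A short calculation shows why sample sizes in the thousands matter for less common adverse events. The sketch assumes each patient independently has the same probability of experiencing the event, and the 1-in-1,000 rate is a hypothetical figure chosen for the example.

```python
def prob_at_least_one_event(n_patients: int, event_rate: float) -> float:
    """Chance of seeing the event at least once among n independent patients."""
    return 1.0 - (1.0 - event_rate) ** n_patients


rate = 0.001  # hypothetical adverse event affecting 1 in 1,000 patients
for n in (300, 3000):
    p = prob_at_least_one_event(n, rate)
    print(f"{n} patients: {p:.0%} chance of observing at least one case")
```

Under these assumptions a trial of 300 patients would most likely miss the event entirely, while a trial of 3,000 would very likely observe it at least once.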

Regulatory Approval

Once the clinical trials demonstrate that the candidate drug meets prespecified endpoints regarding safety and efficacy, the data are compiled into a marketing application for submission to the relevant regulatory agency: a New Drug Application (NDA) or Biologics License Application (BLA) for the FDA, a Marketing Authorisation Application (MAA) for the EMA, or the equivalent for other national bodies. The regulatory approval phase involves a comprehensive review of all preclinical and clinical data, manufacturing processes, labeling, and quality control measures.

The regulatory agency evaluates whether the benefit-risk profile of the drug is positive for its intended use. In many regions, this review process spans several months to over a year, and may include advisory committee meetings and facility inspections. Approval is a critical milestone that authorizes the drug to be marketed, and it is followed by post-marketing surveillance (Phase IV) to continuously monitor long-term safety and effectiveness once the drug is in regular use.

Challenges and Future Directions

Even with these robust and multi-staged processes, drug discovery and development face several ongoing challenges that prompt continual evolution in methodology and technology.

Current Challenges

The modern drug discovery and development pipeline is associated with high attrition rates. Statistics show that from the initial hit-to-lead identification through clinical trials, only a small percentage of compounds eventually gain regulatory approval. One of the critical challenges is the translation gap between in vitro/in vivo preclinical models and human clinical trials. For example, traditional animal models often fail to predict human responses due to species differences in drug metabolism, receptor expression, and immune responses.

Another challenge is the enormous cost and time required for drug development. The development process can take 10–15 years and billions of dollars, with later stages (especially Phase III trials) being particularly resource intensive. Additionally, there is a high failure rate in late-stage clinical trials because of issues with efficacy, toxicity, pharmacokinetics, and sometimes poor target selection or inadequate early research.
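
The compounding effect of attrition can be sketched by multiplying stage-by-stage success probabilities. The probabilities below are hypothetical placeholders chosen only to show the arithmetic; they are not statistics from the article or from any dataset.

```python
from math import prod

# Hypothetical probability of advancing past each stage (illustration only).
stage_success = {
    "Phase I": 0.6,
    "Phase II": 0.3,
    "Phase III": 0.6,
    "Regulatory review": 0.9,
}

# Overall chance that a candidate entering Phase I is eventually approved.
overall = prod(stage_success.values())

# Expected number of Phase I entrants needed per approval.
entrants_per_approval = 1 / overall

print(f"Overall probability of approval: {overall:.1%}")
print(f"Candidates entering Phase I per approval: {entrants_per_approval:.1f}")
```

Even when each individual stage looks reasonably favorable, the product is small, so the cost of every failed candidate has to be carried by the few that succeed.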

The rapidly evolving scientific landscape has also contributed to the challenges of integrating new technologies with existing frameworks. For example, while opportunities exist in utilizing machine learning and artificial intelligence (AI) for predictive modeling and drug screening, ensuring data quality and regulatory acceptance remains a hurdle. Regulatory processes themselves, though designed for thorough evaluation, may add delays particularly when protocols are rigid and not easily adaptable to new data sources or novel therapeutic modalities.

Technological Advancements and Future Trends

To overcome these challenges, technological innovation is at the forefront of future drug discovery and development. Emerging technologies such as organ-on-a-chip (OoC) systems and 3D cell culture models promise more physiologically relevant in vitro methods, which could reduce the reliance on animal testing and improve prediction of human responses. Advances in high-throughput screening and combinatorial chemistry, combined with machine learning approaches for data analysis, are expected to streamline hit identification and lead optimization processes further.

Furthermore, computer-aided drug design (CADD) and in silico methods are gradually improving their accuracy and predictive power. These approaches leverage structural biology, bioinformatics, and large chemical databases to optimize candidate compounds rapidly and more cost-effectively. In addition, personalized medicine and pharmacogenomics are opening up the possibility for tailoring drugs to individual genetic profiles, thereby increasing efficacy and reducing adverse reactions.

Future trends include the integration of multidisciplinary data—combining omics data (such as genomics, proteomics, metabolomics) with advanced modeling techniques—to predict drug behavior in humans with unprecedented precision. Collaborative networks across academia, industry, and regulatory bodies will further aid the harmonization of global drug development processes, accelerating both discovery and approval phases. Moreover, regulatory agencies are already establishing expedited review pathways for drugs treating serious conditions or unmet medical needs, which, if optimized, could significantly reduce the development timeline without compromising safety.

Innovations also include improvements in overall process efficiency through lean management strategies and portfolio management in R&D. Such systemic improvements not only enhance productivity at both the program and portfolio levels but also facilitate informed decision-making regarding project continuation or termination. Finally, the adoption of novel assay techniques, such as high-throughput compressed screening and targeted information management systems in drug development, reflects a move towards more streamlined, data-driven approaches that promise to reduce development costs while increasing success rates.

Conclusion

In summary, drug discovery and development is an extensive process that begins with scientific exploration and hypothesis generation before proceeding through defined stages of target identification and validation, lead compound discovery, and iterative lead optimization. Once a promising candidate is identified, the development process rigorously tests the compound through preclinical studies and multiple clinical trial phases (Phase I, II, and III) to thoroughly establish its safety and efficacy. The regulatory review process then scrutinizes this wealth of data before granting marketing approval, while post-approval surveillance continues to ensure long-term safety. Despite significant challenges—including high attrition rates, long development timelines, and costly resource demands—the field is rapidly evolving by integrating innovative technologies such as organ-on-a-chip models, AI-driven screening tools, and personalized medicine approaches.

The modern paradigm increasingly reflects a general-to-specific-to-general approach: initial broad scientific discovery is refined through targeted evaluations, and thereafter, specialized technological advances are reintegrated into an overall strategy that aims to ultimately benefit patients. Each stage—from target validation to regulatory clearance—requires meticulous planning, coordinated multidisciplinary collaborations, and adaptive strategies to meet evolving scientific and regulatory demands. Emerging trends show promise in revolutionizing the field by improving predictability, reducing reliance on animal models, leveraging big data analytics, and optimizing overall R&D productivity, all of which are poised to shorten timeframes and reduce costs while enhancing the safety, efficacy, and personalization of therapies.

Ultimately, these comprehensive efforts ensure that only the most promising and safe drugs reach the market, underscoring the significance of a rigorous and evolving drug discovery and development process. As technology advances, regulatory frameworks adapt, and cross-disciplinary collaborations strengthen, the future of drug development holds the promise of more rapid, effective, and patient-centric therapies. This intricate yet dynamic journey continues to be vital for addressing emerging healthcare challenges and transforming innovative research into real-world clinical solutions.

Definition and Importance

Drug discovery and development is the complex, multi‐step process that transforms initial biological ideas into safe and effective therapeutic medicines. At its core, drug discovery involves identifying potential targets and designing compounds that interact with these targets, whereas drug development focuses on evaluating, refining, and ultimately approving these compounds for clinical use. The entire pathway—from bench research through preclinical safety testing and clinical trials to final regulatory approval—is essential as it ensures that only compounds with a favorable risk–benefit profile reach patients. This process is supported by a vast array of scientific disciplines including biology, chemistry, pharmacology, toxicology, clinical science, and regulatory affairs, making it inherently multidisciplinary. Its importance is underscored by the need to address unmet medical needs, reduce the incidence of treatment failures in humans, and optimize drug safety and efficacy.

Historical Evolution

Historically, drug discovery began with the isolation and refinement of natural products, as early physicians extracted remedies directly from nature. Over time, advances in organic chemistry allowed for synthetic modification and rational design. The field has evolved from “trial-and-error” methods to modern approaches integrating high-throughput screening (HTS) and computer-aided drug design (CADD). Early paradigms now coexist with sophisticated methods to optimize targets and reduce attrition rates by incorporating improvements in cell culture models, animal testing, and in silico techniques. Over the decades, the evolution of regulation—from initial Hippocratic principles to today’s rigorous Good Laboratory Practice (GLP) standards—has coupled regulatory science with scientific innovation. These advances have not only shortened the development timeline in some cases but have also improved the overall probability of successful translation from molecule to market.

Stages of Drug Discovery

The process of drug discovery is a highly iterative process that begins with the identification and validation of a biological target, followed by the identification of molecules (hits) that interact with the target, and then further refinement (lead optimization) to produce a promising drug candidate.

Target Identification and Validation

Target identification is the first and arguably most critical step in drug discovery. It involves discovering a biological molecule—such as a protein, receptor, enzyme, gene, or ion channel—that is integral to a disease’s pathology. A robust initial target is essential because it directs subsequent research and resource investment. Researchers leverage diverse data sources, from genomic and proteomic studies to system biology insights, ensuring that the identified target plays a central role in the disease state.

Target validation follows target identification to establish that modulating this target will have a therapeutic effect. This stage relies on multiple approaches. For example, genetic manipulation techniques such as RNA interference (RNAi) or CRISPR can knock down or knock out the target gene, demonstrating its potential clinical relevance. Pharmacological validation, meanwhile, uses chemical inhibitors or modulators in preclinical models to show that altering target function yields a beneficial outcome. Data from affinity-based pull-down experiments, label-free methods, and computational screening further enhance target validation by confirming binding interactions and downstream effects. Validation methods not only confirm the role of the target but are also critical for predicting safety and efficacy in later stages.

Lead Compound Identification

Once a target is validated, the next step is to discover molecules that interact with and modulate the target’s activity. Lead compound identification uses high-throughput screening and other techniques to sift through thousands to millions of compounds looking for “hits” that bind to the target. In modern drug discovery, hit identification can involve large chemical libraries screened in in vitro assays that measure binding affinity, biological activity, and preliminary safety parameters.

Screening techniques have evolved from early ligand-binding assays to sophisticated cell-based systems and real-time biophysical measurements. HTS platforms can use robotic systems and computer algorithms to rapidly quantify interactions, while virtual screening approaches such as structure-based drug design (SBDD) and ligand-based drug design (LBDD) use in silico methods to predict binding modes using computational molecular modeling. These methods are complemented by data from high-throughput experimental studies to select the most promising candidates. This stage is not only guided by physicochemical properties but also by predictive models of absorption, distribution, metabolism, excretion, and toxicity (ADMET), which are critical to determine if a hit might be viable as a drug candidate.

Lead Optimization

After identifying one or more promising hit compounds, the process advances to lead optimization—a phase where medicinal chemists systematically modify the chemical structure to improve potency, selectivity, drug-likeness, and safety. Lead optimization is highly iterative and involves synthesizing analogue series, structure–activity relationship (SAR) studies, and quantitative structure–activity relationship (QSAR) modeling.

During this stage, compounds are evaluated for their pharmacokinetic properties and toxicity profiles using both in silico and in vitro experiments. For example, computer-aided drug design (CADD) can predict which modifications will most improve a compound’s binding affinity or metabolic stability, whereas experimental work such as high-throughput affinity screening often directs further chemical refinement. Factors such as molecular weight, lipophilicity, solubility, and the nature of functional groups are carefully adjusted. The goal of lead optimization is to generate candidates that possess a balanced profile: high affinity for the target, acceptable pharmacokinetics, minimal off-target activity, and an acceptable safety margin.

The iterative nature requires assessing developmental risks and frequently includes a “go/no-go” decision-making process at multiple checkpoints. This approach helps narrow the candidate pool so that only the highest quality and most promising leads move forward to development.

Stages of Drug Development

Once an optimized lead compound emerges from the discovery phase, the process shifts to drug development. These stages are designed to rigorously test the compound’s safety and efficacy before it can be approved for clinical use.

Preclinical Testing

Preclinical testing is the stage at which the candidate drug is evaluated through a series of in vitro and in vivo experiments. In vitro studies use cell-based assays (2D or 3D cultures) to assess biological activity, ADME properties, and initial safety. More sophisticated models, such as organ-on-a-chip (OoC) platforms or engineered tissue models, are increasingly used to better recapitulate the human microenvironment and predict outcomes more reliably.

In vivo studies typically involve animal models. These studies provide critical data on the pharmacodynamics (PD) and pharmacokinetics (PK) of the compound, its metabolism, bioavailability, and toxicity profiles. Standardized protocols aligned with Good Laboratory Practice (GLP) and guidelines set out by the OECD and ICH are followed to ensure reproducibility and regulatory compliance. Preclinical phases serve as the final gate before the initiation of human trials, with a primary focus on safety, although they also provide early proof-of-concept efficacy data.

Preclinical testing also is a platform for determining the non-observed adverse effect level (NOAEL) and informing the safe starting dose for Phase I clinical trials. Thus, the extensive preclinical data gathered is crucial to persuade regulatory authorities of the drug’s safety profile and its potential for clinical benefit.

Clinical Trials

Clinical trials are the human testing phases of drug development, designed to evaluate safety, tolerability, efficacy, and dosing regimens. They occur in several sequential phases with increasing participant numbers and complexity.

Phase I

Phase I clinical trials are typically the first stage of testing in humans and involve a small group of healthy volunteers (or patients in cases of oncology or other high-risk treatments). These studies are primarily focused on safety and pharmacokinetics. Researchers gather data on absorption, distribution, metabolism, and excretion (ADME) in humans and determine the maximum tolerated dose while monitoring for side effects. In some instances, Phase I may include both single ascending-dose and multiple ascending-dose studies. The information garnered here provides the foundation for dosing and safety monitoring in subsequent phases.

Phase II

Phase II trials move from small-scale safety studies to more formal efficacy assessments in a patient population that has the target disease. These studies provide preliminary evidence of therapeutic activity, while continuing to evaluate safety and further defining the side effect profile. Phase II studies are generally split into “proof-of-concept” or “pilot” studies and encompass dose-ranging studies to confirm the optimal dose that offers clinical benefit. The design of Phase II is critical; it must balance the need for statistically significant results with efficient resource allocation. There is typically an increased sample size compared to Phase I, often including hundreds of patients, to obtain initial estimates of the drug’s efficacy and safety.

Phase III

Phase III clinical trials are pivotal studies designed to definitively establish the drug’s efficacy and safety in a large, diverse patient population. They are randomized, controlled, and often multicenter, sometimes multinational. The sample sizes in Phase III usually range in the thousands, which allows for more rigorous statistical analysis and detection of less common adverse events. The data from Phase III serve as the main body of evidence for regulatory submissions. Phase III trials are both expensive and time-consuming, sometimes taking several years to complete because they must meet stringent standards and adequately represent the target population.

Regulatory Approval

Once the clinical trials demonstrate that the candidate drug meets prespecified endpoints regarding safety and efficacy, the data is compiled into a New Drug Application (NDA) or Biologics License Application (BLA) for submission to regulatory agencies such as the FDA, EMA, or other national bodies. The regulatory approval phase involves a comprehensive review of all preclinical and clinical data, manufacturing processes, labeling, and quality control measures.

The regulatory agency evaluates whether the benefit-risk profile of the drug is positive for its intended use. In many regions, this review process spans several months to over a year, and may include advisory committee meetings and facility inspections. Approval is a critical milestone that authorizes the drug to be marketed, and it is followed by post-marketing surveillance (Phase IV) to continuously monitor long-term safety and effectiveness once the drug is in regular use.

Challenges and Future Directions

Even with these robust and multi-staged processes, drug discovery and development face several ongoing challenges that prompt continual evolution in methodology and technology.

Current Challenges

The modern drug discovery and development pipeline is associated with high attrition rates. Statistics show that from the initial hit-to-lead identification through clinical trials, only a small percentage of compounds eventually gain regulatory approval. One of the critical challenges is the translation gap between in vitro/in vivo preclinical models and human clinical trials. For example, traditional animal models often fail to predict human responses due to species differences in drug metabolism, receptor expression, and immune responses.

Another challenge is the enormous cost and time required for drug development. The development process can take 10–15 years and billions of dollars, with later stages (especially Phase III trials) being particularly resource intensive. Additionally, there is a high failure rate in late-stage clinical trials because of issues with efficacy, toxicity, pharmacokinetics, and sometimes poor target selection or inadequate early research.

The rapidly evolving scientific landscape has also contributed to the challenges of integrating new technologies with existing frameworks. For example, while opportunities exist in utilizing machine learning and artificial intelligence (AI) for predictive modeling and drug screening, ensuring data quality and regulatory acceptance remains a hurdle. Regulatory processes themselves, though designed for thorough evaluation, may add delays particularly when protocols are rigid and not easily adaptable to new data sources or novel therapeutic modalities.

Technological Advancements and Future Trends

To overcome these challenges, technological innovation is at the forefront of future drug discovery and development. Emerging technologies such as organ-on-a-chip (OoC) systems and 3D cell culture models promise more physiologically relevant in vitro methods, which could reduce the reliance on animal testing and improve prediction of human responses. Advances in high-throughput screening and combinatorial chemistry, combined with machine learning approaches for data analysis, are expected to streamline hit identification and lead optimization processes further.

Furthermore, computer-aided drug design (CADD) and in silico methods are gradually improving in accuracy and predictive power. These approaches leverage structural biology, bioinformatics, and large chemical databases to optimize candidate compounds more rapidly and cost-effectively. In addition, personalized medicine and pharmacogenomics are opening up the possibility of tailoring drugs to individual genetic profiles, thereby increasing efficacy and reducing adverse reactions.

Future trends include the integration of multidisciplinary data, combining omics data (such as genomics, proteomics, and metabolomics) with advanced modeling techniques, to predict drug behavior in humans more precisely. Collaborative networks across academia, industry, and regulatory bodies will further aid the harmonization of global drug development processes, accelerating both discovery and approval phases. Moreover, regulatory agencies have established expedited review pathways for drugs treating serious conditions or unmet medical needs, which, if optimized, could significantly reduce the development timeline without compromising safety.

Innovations also include improvements in overall process efficiency through lean management strategies and portfolio management in R&D. Such systemic improvements not only enhance productivity at both the program and portfolio levels but also facilitate informed decision-making regarding project continuation or termination. Finally, the adoption of novel assay techniques, such as high-throughput compressed screening and targeted information management systems in drug development, reflects a move towards more streamlined, data-driven approaches that promise to reduce development costs while increasing success rates.

Conclusion

In summary, drug discovery and development is an extensive process that begins with scientific exploration and hypothesis generation before proceeding through defined stages of target identification and validation, lead compound discovery, and iterative lead optimization. Once a promising candidate is identified, the development process rigorously tests the compound through preclinical studies and multiple clinical trial phases (Phase I, II, and III) to thoroughly establish its safety and efficacy. The regulatory review process then scrutinizes this wealth of data before granting marketing approval, while post-approval surveillance continues to ensure long-term safety. Despite significant challenges—including high attrition rates, long development timelines, and costly resource demands—the field is rapidly evolving by integrating innovative technologies such as organ-on-a-chip models, AI-driven screening tools, and personalized medicine approaches.

The modern paradigm increasingly reflects a general-to-specific-to-general approach: initial broad scientific discovery is refined through targeted evaluations, and thereafter, specialized technological advances are reintegrated into an overall strategy that aims to ultimately benefit patients. Each stage—from target validation to regulatory clearance—requires meticulous planning, coordinated multidisciplinary collaborations, and adaptive strategies to meet evolving scientific and regulatory demands. Emerging trends show promise in revolutionizing the field by improving predictability, reducing reliance on animal models, leveraging big data analytics, and optimizing overall R&D productivity, all of which are poised to shorten timeframes and reduce costs while enhancing the safety, efficacy, and personalization of therapies.

Ultimately, these comprehensive efforts aim to ensure that only the most promising and safe drugs reach the market, underscoring the significance of a rigorous and evolving drug discovery and development process. As technology advances, regulatory frameworks adapt, and cross-disciplinary collaborations strengthen, the future of drug development holds the promise of more rapid, effective, and patient-centric therapies. This intricate yet dynamic journey continues to be vital for addressing emerging healthcare challenges and transforming innovative research into real-world clinical solutions.

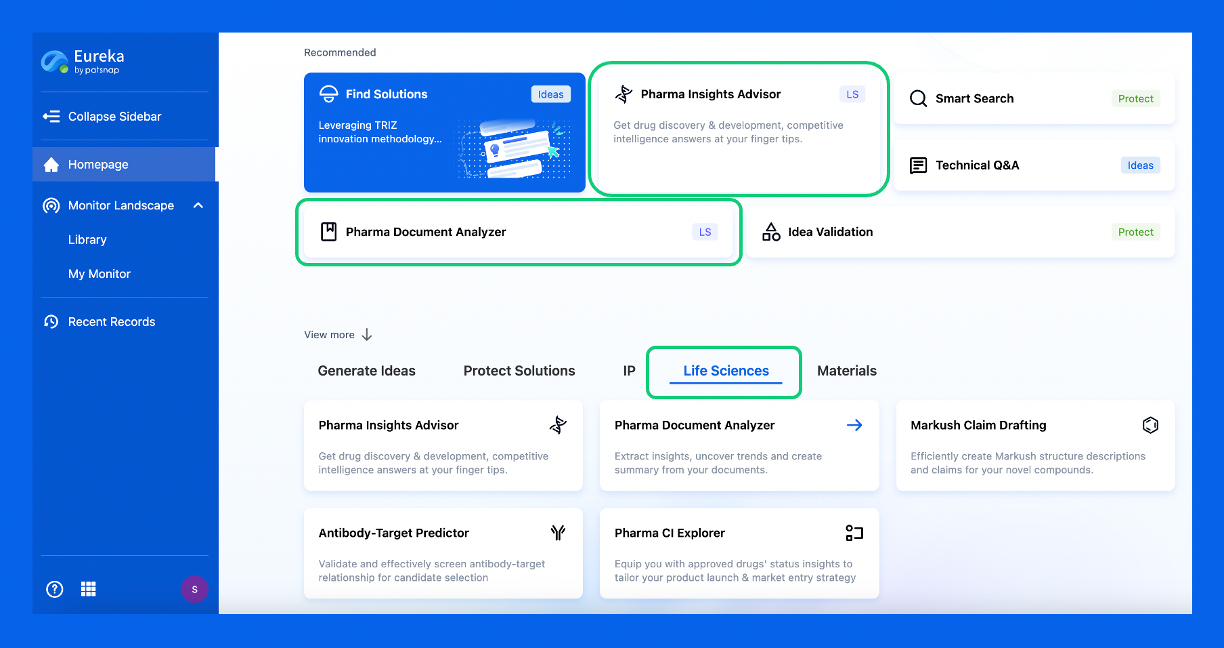

Discover Eureka LS: AI Agents Built for Biopharma Efficiency

Stop wasting time on biopharma busywork. Meet Eureka LS - your AI agent squad for drug discovery.

▶ See how 50+ research teams saved 300+ hours/month

From reducing screening time to simplifying Markush drafting, our AI Agents are ready to deliver immediate value. Explore Eureka LS today and unlock powerful capabilities that help you innovate with confidence.

AI Agents Built for Biopharma Breakthroughs

Accelerate discovery. Empower decisions. Transform outcomes.

Get started for free today!

Accelerate Strategic R&D decision making with Synapse, PatSnap’s AI-powered Connected Innovation Intelligence Platform Built for Life Sciences Professionals.

Start your data trial now!

Synapse data is also accessible to external entities via APIs or data packages. Empower better decisions with the latest in pharmaceutical intelligence.