What role does AI play in high-throughput screening for drug discovery?

Introduction to High-Throughput Screening (HTS)

Definition and Purpose

High-throughput screening (HTS) is a paradigm employed in drug discovery to rapidly test large libraries of chemical compounds, biological molecules, or gene knock-downs to assess their ability to modulate a specific biological target or pathway. In essence, HTS is a brute-force approach that utilizes automation, robotics, highly miniaturized assay formats (ranging from 96-well to 1536-well microplates), liquid-handling devices, and advanced detection technologies (such as fluorescence, luminescence, and mass spectrometry) to identify “hits” that demonstrate desired bioactivity. The fundamental purpose of HTS is to significantly accelerate the early stages of drug discovery by efficiently filtering through thousands to millions of candidate compounds to determine those with potential therapeutic effects. This step is critical because it lays the groundwork for subsequent hit-to-lead optimization, de novo design, and eventual clinical candidate selection.

HTS assays may be either cell-free (biochemical assays) or cell-based. While biochemical assays allow for direct evaluation of enzyme activities or isolated molecular interactions, cell-based assays enable screening in an environment that mimics the natural physiological state. Both formats are designed to produce quantitative readouts that can be analyzed statistically, providing insights into mechanisms of action, potential off-target effects, toxicity, and pharmacodynamic characteristics. Ultimately, HTS serves as an essential ‘gatekeeper’ in drug discovery pipelines, improving the efficiency of downstream processes and reducing costs associated with testing compounds with no measurable biological activity.

Current Challenges in HTS

Despite its transformative potential, several challenges persist in traditional HTS workflows. One of the primary difficulties relates to the handling and analysis of overwhelming amounts of data generated during screening campaigns. As HTS typically produces large datasets, the data management systems must effectively process, standardize, and interpret results to reliably distinguish true positive hits from false positives or negatives.
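
One common statistical way to separate candidate hits from plate noise is a robust z-score built on the median and the median absolute deviation (MAD). The sketch below is illustrative only: the well IDs, signal values, and the cutoff of -3 are assumptions rather than values from any particular campaign, and it assumes an inhibition-style assay in which active compounds lower the signal.

```python
from statistics import median

def robust_z_scores(values):
    """Return robust z-scores based on the median and the MAD."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        raise ValueError("MAD is zero; robust z-scores are undefined for this plate")
    # 1.4826 scales the MAD so that it estimates the standard deviation for normal data.
    return [(v - med) / (1.4826 * mad) for v in values]

def call_hits(well_signals, threshold=-3.0):
    """Flag wells whose robust z-score is at or below `threshold` (assumed cutoff)."""
    wells = list(well_signals)
    scores = robust_z_scores([well_signals[w] for w in wells])
    return [w for w, z in zip(wells, scores) if z <= threshold]

# Hypothetical raw signals for eight sample wells; "A5" is strongly inhibited.
plate = {"A1": 100.0, "A2": 98.0, "A3": 102.0, "A4": 101.0,
         "A5": 40.0, "A6": 99.0, "A7": 103.0, "A8": 97.0}
hits = call_hits(plate)
```

Because the median and MAD are barely moved by a handful of extreme wells, the active wells themselves do not inflate the noise estimate, which is the usual reason for preferring this over a mean and standard deviation.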

Another challenge is the intrinsic cost and time associated with high-density assay development. Optimizing reagents, establishing robust assay conditions, overcoming issues like signal interference, matrix suppression, and edge effects, and ensuring reproducibility across thousands of wells constitute significant bottlenecks. Moreover, traditional high-throughput methods can sometimes suffer from limited dynamic range or sensitivity, resulting in suboptimal hit identification and leading to high false-positive rates, particularly when optical techniques are used.

In addition, the extensive physical infrastructure, including robotics, liquid-handling units, and specialized detection instruments, often requires dedicated capital investment and ongoing maintenance. Because compound libraries often come from disparate sources, heterogeneity in the chemical or biological materials also complicates assay standardization. These issues demand innovative solutions that not only improve the reliability and throughput of screening operations but also reduce development costs and manual intervention.

Role of AI in High-Throughput Screening

AI Technologies Used in HTS

Artificial intelligence (AI) has emerged as a transformative tool for overcoming many of the limitations inherent in traditional HTS methods. In this context, AI covers a family of techniques, including machine learning (ML), deep learning (DL), natural language processing (NLP), and graph neural networks (GNNs), that can be built into the HTS pipeline. These approaches are employed to extract meaningful patterns from the large, often complex datasets generated during screening assays.

For instance, deep neural networks (DNNs) and convolutional neural networks (CNNs) have been extensively applied in scenarios where image-based high-content screening (HCS) is used to capture cellular phenotypes. By leveraging large datasets and sophisticated training methods, these networks can perform automated segmentation, feature extraction, and classification of cellular responses, thereby reducing the need for manual image analysis and increasing throughput and accuracy.

In the realm of compound screening, AI models such as support vector machines (SVM), random forests (RF), and Bayesian learning methods have been employed to model structure-activity relationships (SAR) and to predict the physicochemical properties, absorption, distribution, metabolism, excretion, and toxicity (ADMET) profiles of compounds even before synthesis. More recently, integrative approaches that exploit molecular fingerprints in conjunction with deep learning architectures have been developed to establish high-throughput virtual screening systems. These systems can filter millions of compounds in a matter of hours, prioritizing those with promising drug-like properties and reducing the number of expensive wet lab experiments.
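
To make the idea of fingerprint-based virtual screening concrete, the sketch below ranks a tiny library by Tanimoto similarity to a known active. This is a deliberately simpler stand-in for the SVM, random forest, and deep learning models mentioned above, not a reproduction of them. The compound IDs, the on-bit sets, and the 0.5 cutoff are all hypothetical; in practice the fingerprints would be computed by a cheminformatics toolkit.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto (Jaccard) similarity between fingerprints stored as sets of on-bit indices."""
    if not fp_a and not fp_b:
        return 0.0
    shared = len(fp_a & fp_b)
    return shared / (len(fp_a) + len(fp_b) - shared)

def prioritize(library, reference_fp, min_similarity=0.5):
    """Return (compound_id, similarity) pairs at or above the cutoff, most similar first."""
    scored = [(cid, tanimoto(fp, reference_fp)) for cid, fp in library.items()]
    kept = [(cid, s) for cid, s in scored if s >= min_similarity]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)

# Hypothetical on-bit sets standing in for real molecular fingerprints.
known_active = {1, 4, 7, 9, 12}
library = {
    "cmpd-001": {1, 4, 7, 9, 13},   # 4 shared bits, 6 bits in the union
    "cmpd-002": {2, 3, 5},          # no shared bits
    "cmpd-003": {1, 4, 7, 9, 12},   # identical to the reference
}
ranked = prioritize(library, known_active)
```

A trained model would replace the similarity score with a predicted activity or ADMET value, but the filtering and ranking step that decides which compounds go on to wet-lab testing has the same shape.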

Graph neural networks (GNNs) represent another cutting-edge AI technology utilized in HTS. GNNs are particularly adept at encoding the structural information of molecules by considering atoms as nodes and bonds as edges. When combined with knowledge graphs (KG) or other relational data, GNNs can provide insights into potential binding modes, off-target effects, and even de novo drug design, thus enriching the screening process with spatial and contextual information.
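
The "atoms as nodes, bonds as edges" representation can be sketched in a few lines. The example below encodes the heavy atoms of ethanol as a graph and applies one unweighted neighbor-sum update, which is the core aggregation step behind message passing. It is a toy illustration under stated assumptions: real GNNs add learned weight matrices and nonlinearities, and the two-element vocabulary and one-hot features here are chosen only for readability.

```python
# Ethanol heavy atoms (C-C-O) as a graph: atoms are nodes, bonds are edges.
atoms = ["C", "C", "O"]
bonds = [(0, 1), (1, 2)]

def build_neighbors(n_atoms, bonds):
    """Build an adjacency list from an undirected bond list."""
    neighbors = {i: [] for i in range(n_atoms)}
    for a, b in bonds:
        neighbors[a].append(b)
        neighbors[b].append(a)
    return neighbors

def message_passing_step(features, neighbors):
    """One unweighted update: each atom's new vector is its own plus the sum of its neighbors'."""
    updated = []
    for i, own in enumerate(features):
        total = list(own)
        for j in neighbors[i]:
            total = [t + f for t, f in zip(total, features[j])]
        updated.append(total)
    return updated

# One-hot initial features over the assumed element vocabulary [C, O].
vocab = {"C": [1, 0], "O": [0, 1]}
features = [vocab[symbol] for symbol in atoms]
neighbors = build_neighbors(len(atoms), bonds)
updated = message_passing_step(features, neighbors)

# A sum readout turns the per-atom vectors into one molecule-level vector.
molecule_vector = [sum(column) for column in zip(*updated)]
```

After one step, each atom's vector already reflects its immediate chemical environment; stacking more steps lets information travel further along the bond network.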

The integration of these various AI methodologies enables a paradigm shift from the conventional brute-force screening approach to one that is data-driven, predictive, and adaptive. Notably, some patents describe AI-assisted high-throughput virtual screening methods based on molecular fingerprints and deep learning, with rapid model training and online screening capabilities that are accessible to medicinal chemists and drug discovery specialists.

Integration of AI with HTS Processes

In practical HTS workflows, AI is not employed as a standalone technology but is integrated holistically into multiple stages of the screening process. First, AI algorithms are used during the assay optimization phase. By analyzing historical experimental data and identifying key variables that influence throughput and assay performance, machine learning models can predict the optimal conditions to minimize variability and enhance sensitivity.

During the screening phase, AI systems can operate in real time to monitor assay performance, ensure quality control, and flag anomalies associated with sample handling or readout errors. These real-time analytics, powered by AI, enable dynamic adjustments in assay conditions, such as automating the selection of wells for re-testing in case of low signal-to-noise ratios and optimizing reagent dispensing through robotic systems.
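
The re-test selection step described above can be sketched as a simple rule. The example assumes an assay in which every sample well is expected to read well above the blank wells, so a low signal-to-noise ratio suggests a handling or readout problem. The well IDs, readings, and the minimum ratio of 10 are hypothetical, and signal-to-noise is defined here as the signal above the blank mean divided by the blank standard deviation, which is one of several definitions in use.

```python
from statistics import mean, stdev

def signal_to_noise(signal, blank_wells):
    """Signal above the blank mean, in units of the blank sample standard deviation."""
    noise = stdev(blank_wells)
    if noise == 0:
        raise ValueError("blank wells show no variation; noise cannot be estimated")
    return (signal - mean(blank_wells)) / noise

def wells_to_retest(readings, blank_wells, min_snr=10.0):
    """Return the sorted IDs of wells whose signal-to-noise ratio is below `min_snr`."""
    return sorted(
        well for well, signal in readings.items()
        if signal_to_noise(signal, blank_wells) < min_snr
    )

# Hypothetical blank and sample readings from one plate.
blanks = [10.0, 12.0, 11.0, 9.0, 13.0]
readings = {"B1": 250.0, "B2": 14.0, "B3": 240.0, "B4": 20.0}
retest_queue = wells_to_retest(readings, blanks)
```

In an automated setup, a queue like `retest_queue` could be handed to the liquid handler's scheduling software; a learned anomaly detector would replace the fixed threshold but feed the same queue.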

Integration of AI into the data analysis pipeline further enhances the process. Complex statistical methods combined with deep learning allow for the rapid sorting of screening results. AI-driven models can establish robust structure-activity relationships (SAR) by cross-referencing chemical structures with biological activity data stored in curated databases, which are often updated continuously with new screening data. These feedback loops not only bolster hit identification and prioritization but also contribute to an improving predictive model over time.

Furthermore, AI platforms facilitate virtual screening approaches that serve as a pre-filter before performing wet-lab experiments. Through molecular docking simulations that are accelerated using AI, potential drug targets can be matched with compounds that are predicted to have high binding affinities, thereby reducing the number of compounds that must undergo costly and time-consuming experimental validation. The ability of AI systems to integrate multiple types of data—from imaging data in high-content screening to in silico docking scores and even clinical trial results—ensures that compound selection is robust and multi-dimensional.

This integrated AI workflow not only streamlines data management and enhances decision-making but ultimately leads to a faster, more cost-effective HTS process. By reducing the burden on human experts and allowing for more adaptive operations, AI is key to transforming HTS from a resource-intensive, manual process into an intelligent, automated pipeline that drives forward drug discovery.

Impact of AI on Drug Discovery

Efficiency and Accuracy Improvements

The incorporation of AI into high-throughput screening has had a significant impact on the efficiency and accuracy of drug discovery processes. On the efficiency front, AI-powered systems can handle and analyze vast quantities of complex data in a fraction of the time required by traditional methods. For instance, AI-accelerated screening platforms can rapidly sift through millions of compounds to identify promising candidates, potentially reducing the screening duration from years to a matter of weeks or months. This speed is not only beneficial for early-stage discovery but also critical when responding to urgent medical needs, such as during pandemics or outbreak situations.

In terms of accuracy, AI algorithms improve signal detection through sophisticated pattern recognition and anomaly detection techniques. By learning from historical data and continuously updating their predictive models, AI platforms improve the hit rate and reduce the false positive and false negative rates that often plague traditional screening campaigns. Deep learning models, with their ability to process high-dimensional data from complex assay systems, have been shown to achieve improved accuracy in identifying bioactive compounds when compared to conventional statistical methods.

Moreover, AI integration into HTS processes facilitates the automated quality control and real-time data corrections necessary for reliable screening. For example, advanced AI systems can flag inconsistent data due to technical errors, identify low-quality assay plates, and mandate re-screens of samples that fall below predetermined thresholds. This level of automation reduces human error and ensures that only the most reliable data is used to drive decision-making in drug discovery.
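
A widely used plate-level quality metric that such a system might compute is the Z'-factor, defined from the positive and negative control wells as 1 - 3(sd_pos + sd_neg) / |mean_pos - mean_neg|. The sketch below flags plates for re-screening when Z' falls below 0.5, a commonly used cutoff that is assumed here rather than taken from the text. The plate IDs and control values are hypothetical.

```python
from statistics import mean, stdev

def z_prime(positive_controls, negative_controls):
    """Z'-factor: 1 - 3 * (sd_pos + sd_neg) / |mean_pos - mean_neg|."""
    separation = abs(mean(positive_controls) - mean(negative_controls))
    if separation == 0:
        raise ValueError("control means are identical; Z' is undefined")
    spread = stdev(positive_controls) + stdev(negative_controls)
    return 1.0 - 3.0 * spread / separation

def plates_to_rescreen(plates, min_z_prime=0.5):
    """Return IDs of plates whose control wells give a Z'-factor below the cutoff."""
    return [pid for pid, (pos, neg) in plates.items()
            if z_prime(pos, neg) < min_z_prime]

# Hypothetical control-well readings: (positive controls, negative controls).
plates = {
    "plate-01": ([100.0, 102.0, 98.0, 101.0], [10.0, 12.0, 9.0, 11.0]),   # tight controls
    "plate-02": ([100.0, 60.0, 130.0, 80.0], [10.0, 40.0, 5.0, 30.0]),    # noisy controls
}
flagged = plates_to_rescreen(plates)
```

The metric rewards both a wide gap between the control means and low variability within each control group, so a plate can fail either because the assay window collapsed or because the wells were noisy.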

From a cost perspective, the efficiency gains provided by AI also translate into significant reductions in both time and manpower. AI can help prioritize compounds with the highest potential, minimizing the number of expensive wet-lab validations needed. In turn, this accelerates the lead optimization and eventual clinical candidate selection phases, ultimately reducing the overall development cost of a new drug.

Case Studies and Success Stories

Several case studies and success stories illustrate the transformative impact of AI on HTS and overall drug discovery. A notable example comes from a study in which an AI-based screening method achieved up to 97% accuracy in predicting drug-protein interactions, an advance that increases confidence in hit compounds selected from initial HTS rounds. Such results are significant given that experimental hit rates in laboratory settings can be as low as 0.021%, although prediction accuracy and experimental hit rate measure different things and are not directly comparable.
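
A short piece of arithmetic helps put the two figures in perspective. The sketch below computes the expected number of hits at a 0.021% hit rate for a hypothetical library of one million compounds, and then asks what fraction of a classifier's predicted actives would be true actives at that base rate. The second calculation rests on loud assumptions: it treats "97% accuracy" as 97% sensitivity and 97% specificity, and it applies that classifier to a library with the 0.021% hit rate, neither of which is stated in the cited study.

```python
def expected_hits(library_size, hit_rate):
    """Expected number of true actives in a library at a given hit rate (as a fraction)."""
    return library_size * hit_rate

def positive_predictive_value(sensitivity, specificity, prevalence):
    """Fraction of predicted actives that are truly active (Bayes' rule)."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)

hit_rate = 0.021 / 100                        # 0.021% from the text, as a fraction
hits = expected_hits(1_000_000, hit_rate)     # hypothetical library size
ppv = positive_predictive_value(0.97, 0.97, hit_rate)  # assumed sensitivity and specificity
enrichment = ppv / hit_rate                   # improvement over random selection
```

Under these assumptions, about 210 true actives are expected in the library, well under 1% of the compounds the classifier flags would be true actives, and the flagged set is still roughly 32 times richer in actives than a random pick. This is why a pre-filter can be valuable even when most of its predictions need experimental confirmation.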

Another success story is the deployment of deep learning models that integrated molecular fingerprints with neural network algorithms to conduct high-throughput virtual screening. This system not only streamlined the screening process for millions of compounds but also significantly reduced the time required for model establishment and virtual screening, thereby accelerating downstream drug development stages. Numerous patents have been filed detailing systems and methods for AI-assisted HTS, underscoring the growing industrial interest in these technologies.

In addition, AI-driven in silico screening platforms have been employed in pharmaceutical companies to predict ADMET properties and optimize the structure and activity of novel compounds. These applications demonstrate that AI models are not only capable of high-throughput compound prioritization but also offer predictive insights that guide medicinal chemists in the design of more effective and safer drugs.

Furthermore, industry news reports have revealed case studies where AI-driven HTS systems have successfully reduced screening costs and improved clinical success rates in drug discovery pipelines, with some pharmaceutical companies reporting up to a 21% clinical success rate compared to an industry average of 11%. These examples provide concrete evidence that the integration of AI with HTS has a substantial impact on the overall efficiency and effectiveness of drug discovery.

Ethical and Practical Considerations

Ethical Implications

As AI becomes increasingly embedded within the drug discovery landscape, ethical questions and societal implications naturally arise. One significant ethical consideration is the transparency and interpretability of AI systems. Given that many AI algorithms—particularly deep neural networks—can function as “black boxes,” it is critical to develop explainable AI (XAI) frameworks that allow researchers and regulatory bodies to understand the rationale behind hit selection and predictive outputs. This transparency is crucial for building trust among stakeholders, including scientists, clinicians, and patients, especially when such predictions may directly influence clinical decision-making.

Data privacy and security also represent paramount ethical concerns. HTS processes generate vast datasets that often include sensitive proprietary information about chemical compounds, biological assays, and even patient-derived data in later development stages. Ensuring that AI systems processing this data are secure and that sensitive information is encrypted and managed under strict regulatory compliance is essential to prevent data breaches and to maintain ethical standards.

Moreover, the potential for algorithmic bias must be addressed. Training AI models on datasets that may be incomplete or non-representative of the broader chemical or biological space can lead to biased predictions, favoring certain types of compounds or assay conditions over others. Such bias may inadvertently exclude promising candidates or skew the overall direction of a drug discovery program. Hence, maintaining a diverse and high-quality dataset is crucial, and steps should be taken to audit and update AI models regularly to minimize bias.

Another ethical aspect is the fairness in the distribution of AI’s benefits. While AI may drastically accelerate drug discovery and drive down costs, there is a risk that these advancements may primarily benefit large pharmaceutical companies and developed regions, potentially exacerbating disparities in access to new therapies across different populations and regions. It is therefore incumbent upon both industry and regulatory bodies to ensure that AI-driven innovations in drug discovery are implemented fairly and inclusively.

Practical Challenges and Solutions

From a practical standpoint, integrating AI into HTS presents several challenges that must be tackled systematically. One primary challenge is the necessity for large volumes of high-quality data. Many AI algorithms require extensive and well-curated datasets to learn effectively and to make accurate predictions. In contexts where data is heterogeneous or incomplete, the performance of AI systems can be compromised. To mitigate this, platforms that integrate data augmentation techniques, normalization, and robust data management practices have been developed.
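
As one example of the normalization mentioned above, raw plate signals are often rescaled against on-plate controls so that data from different plates and runs become comparable before a model is trained on them. The sketch below converts raw signals to percent inhibition. The control names, well IDs, and values are hypothetical, and it assumes an assay in which inhibition lowers the signal.

```python
from statistics import mean

def percent_inhibition(signal, neutral_controls, inhibitor_controls):
    """Scale a raw signal so neutral controls map to 0% and inhibitor controls to 100%."""
    high = mean(neutral_controls)
    low = mean(inhibitor_controls)
    if high == low:
        raise ValueError("control means are identical; cannot normalize")
    return 100.0 * (high - signal) / (high - low)

# Hypothetical control wells and raw sample signals from one plate.
neutral = [1000.0, 980.0, 1020.0]      # e.g. vehicle-only wells
inhibited = [100.0, 90.0, 110.0]       # e.g. reference-inhibitor wells
raw = {"C1": 1000.0, "C2": 550.0, "C3": 100.0}

normalized = {well: percent_inhibition(signal, neutral, inhibited)
              for well, signal in raw.items()}
```

Because each plate is scaled by its own controls, plate-to-plate drift in absolute signal is largely removed before the data reaches a downstream model.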

Another practical challenge is the integration of AI systems with existing laboratory infrastructures. Traditional HTS workflows rely on a confluence of robotics, plate readers, and bespoke laboratory information management systems (LIMS). Seamlessly integrating AI-driven analytics into these pre-existing frameworks demands significant investment in new hardware, communication protocols, and software interfaces. However, recent advances in cloud-based computing and modular robotics are beginning to address these integration issues, enabling more flexible and adaptive screening platforms.

Scalability is yet another concern. As HTS campaigns expand in size, the computational burden placed on AI systems increases. Ensuring that AI models can scale to handle millions of compound assays in real time without sacrificing accuracy is essential. Distributed computing, parallel processing, and the use of high-performance GPUs have proven effective in this area, and continued research is likely to yield even more scalable solutions.

In parallel, there is a practical need for interdisciplinary collaboration. The successful deployment of AI in HTS requires chemists, biologists, data scientists, and IT specialists to work together closely. Establishing robust communication channels and collaboration platforms is a practical solution to harness the collective expertise required to effectively troubleshoot issues, optimize AI algorithms, and refine HTS processes. Many organizations are now setting up integrated research teams and cross-functional units dedicated to AI-assisted drug discovery, ensuring that human expertise complements advanced computational tools.

Finally, the regulatory environment surrounding AI-driven drug discovery remains a challenge. As AI models become integral to the decision-making process, regulatory bodies must develop standardized criteria to evaluate these systems, ensuring that they meet stringent requirements for accuracy, reproducibility, and safety. Collaborative efforts between industry, academia, and regulatory agencies are essential to draft guidelines that balance innovation with patient safety.

Future Directions and Research Opportunities

Emerging Trends

Looking ahead, several emerging trends suggest that the role of AI in high-throughput screening and drug discovery will only continue to expand. One major trend is the rise of explainable AI (XAI). As stakeholders demand greater transparency in AI-driven decision-making, research into models that not only predict outcomes but also provide human-interpretable explanations is on the rise. XAI frameworks will be instrumental in increasing trust, particularly when AI systems are used as part of clinical drug development pipelines.

Another trend is the convergence of AI with other rapidly advancing technologies. For example, the integration of microfluidics with AI-driven control systems heralds the advent of next-generation high-throughput screening platforms. These systems combine ultra-miniaturized reaction chambers with real-time AI analytics to achieve unprecedented throughput and resolution in screening assays. Such platforms promise to refine the granularity of data collected from each screening event while maintaining high throughput, thus advancing both discovery efficiency and data quality.

Advances in cloud computing and edge analytics are also emerging trends that will further empower AI in HTS. With the ability to process large datasets in real time on distributed computing networks, AI-driven HTS can become highly adaptive, automatically adjusting screening protocols based on incoming data streams. This can enable dynamic experimental designs where screening conditions are continuously refined based on predictive feedback loops, significantly improving the hit rate and reducing the cost of drug discovery.

In addition, there is growing interest in the application of generative models, such as generative adversarial networks (GANs) and variational autoencoders (VAEs), in drug design and screening. These models can propose novel chemical structures that meet desired properties or simulate the potential binding interactions with target proteins. When integrated with HTS data, generative models offer a pathway to not only screen existing libraries but to design new compounds de novo. This synergy could revolutionize the pipeline, accelerating the journey from hit identification to lead optimization.

Potential Research Areas

Several potential research areas have emerged at the intersection of AI and HTS that warrant further exploration. A primary area for investigation is the development of standardized, open-source platforms that integrate AI with HTS workflows. Such platforms would allow for wider adoption in academic institutions and smaller biotech companies, democratizing access to advanced screening technologies and reducing the disparities between large pharmaceutical enterprises and resource-limited settings.

Another potential research direction involves enhancing the interpretability of AI models used in HTS. There is a critical need for research into methods that provide real-time explanations for why specific compounds are identified as hits, taking into account both chemical structure and biological activity. The development of visualization tools and interactive dashboards to present these insights in an accessible manner would greatly benefit medicinal chemists and regulatory bodies alike.

Research efforts could also focus on improving the robustness and generalizability of AI models when applied to heterogeneous screening datasets. This includes investigating techniques for handling missing data, integrating multi-omics data with HTS results, and developing algorithms that can adapt to new classes of compounds without necessitating full retraining. Approaches that leverage transfer learning and active learning are particularly promising in this context.
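
Active learning, in its simplest form, means letting the model choose which compounds to test next. The sketch below shows uncertainty sampling, one common selection strategy: it picks the compounds whose predicted probability of activity is closest to 0.5, where the model is least sure. The compound IDs and probabilities are hypothetical, and the model that would produce them is left out.

```python
def select_for_screening(predicted_activity, batch_size):
    """Uncertainty sampling: pick the compounds whose predicted probability is closest to 0.5."""
    ranked = sorted(predicted_activity,
                    key=lambda cid: abs(predicted_activity[cid] - 0.5))
    return ranked[:batch_size]

# Hypothetical model outputs: probability that each untested compound is active.
predictions = {
    "cmpd-A": 0.95,
    "cmpd-B": 0.52,
    "cmpd-C": 0.10,
    "cmpd-D": 0.45,
    "cmpd-E": 0.70,
}
next_batch = select_for_screening(predictions, batch_size=2)
```

After the selected batch is screened, the new labels are added to the training set, the model is updated, and the selection is repeated, so experimental effort is concentrated where it changes the model most.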

Additionally, the integration of robotics and automated laboratory systems with AI offers a fertile ground for research. Investigating how to seamlessly connect real-world hardware (e.g., microplate readers, robotic liquid handlers) with sophisticated AI analytics in a closed-loop system is a promising avenue that could lead to highly autonomous screening laboratories. Research in this field might explore the development of modular interfaces and standard communication protocols that enable the plug-and-play integration of AI modules with laboratory instrumentation.

Finally, there is a growing need to address the ethical, legal, and societal framework surrounding AI in HTS. Research on AI governance in drug discovery, including studies on data privacy, algorithmic bias, and equitable access to AI-driven technologies, is essential. This interdisciplinary research must involve ethicists, legal experts, and domain scientists to ensure that the future deployment of AI in high-throughput drug discovery is both responsible and socially acceptable.

Conclusion

In summary, artificial intelligence plays a multifaceted and transformative role in high-throughput screening for drug discovery. HTS is an essential methodology for rapidly testing vast libraries of potential therapeutic compounds, yet traditional techniques face significant challenges in data management, reproducibility, cost, and assay optimization. AI technologies, including machine learning, deep learning, graph neural networks, and generative models, have emerged as powerful tools to address these challenges by optimizing assay conditions, automating data analysis, and predicting key chemical and biological properties.

AI is integrated into every stage of the HTS process. During assay development, it leverages historical data to optimize experimental conditions; during screening, it provides real-time quality control and data correction; and in data analysis, it automates hit identification and prioritization, thereby streamlining the entire drug discovery pipeline. The impact of this integration is evident in improved screening efficiency, better hit rates, lower costs, and a significant reduction in human error. The case studies and patents discussed above suggest that AI-driven HTS systems can be both faster and more accurate than traditional methods, with some AI-based approaches reporting high predictive accuracy and accelerating the transition of candidate compounds into clinical phases.

At the same time, ethical and practical considerations must be addressed. Transparency, data privacy, algorithmic bias, and the need for inclusivity are all key challenges that require attention to ensure that AI-informed drug discovery meets rigorous ethical standards. Practical challenges such as data quality, system integration, scalability, and regulatory compliance demand cross-disciplinary collaboration and the development of open, standardized platforms that foster trust and reproducibility.

Looking to the future, emerging trends such as explainable AI, microfluidics integration, cloud-based real-time analytics, and generative models indicate that the role of AI in high-throughput screening will continue to expand and evolve. Potential research areas include improving AI model interpretability, developing robust and scalable platforms, integrating robotics with AI systems, and ensuring ethical and equitable access to these transformative technologies.

AI is revolutionizing the HTS landscape in drug discovery by enhancing speed, accuracy, and cost-efficiency while introducing new paradigms for compound design and analysis. Its integration into screening processes transforms vast raw data into actionable insights and propels the entire drug development pipeline forward. Despite both ethical and practical challenges, the continued evolution of AI, supported by interdisciplinary research, promises to redefine high-throughput screening and expand its role in the efficient discovery of effective drugs, ultimately benefiting patients worldwide.

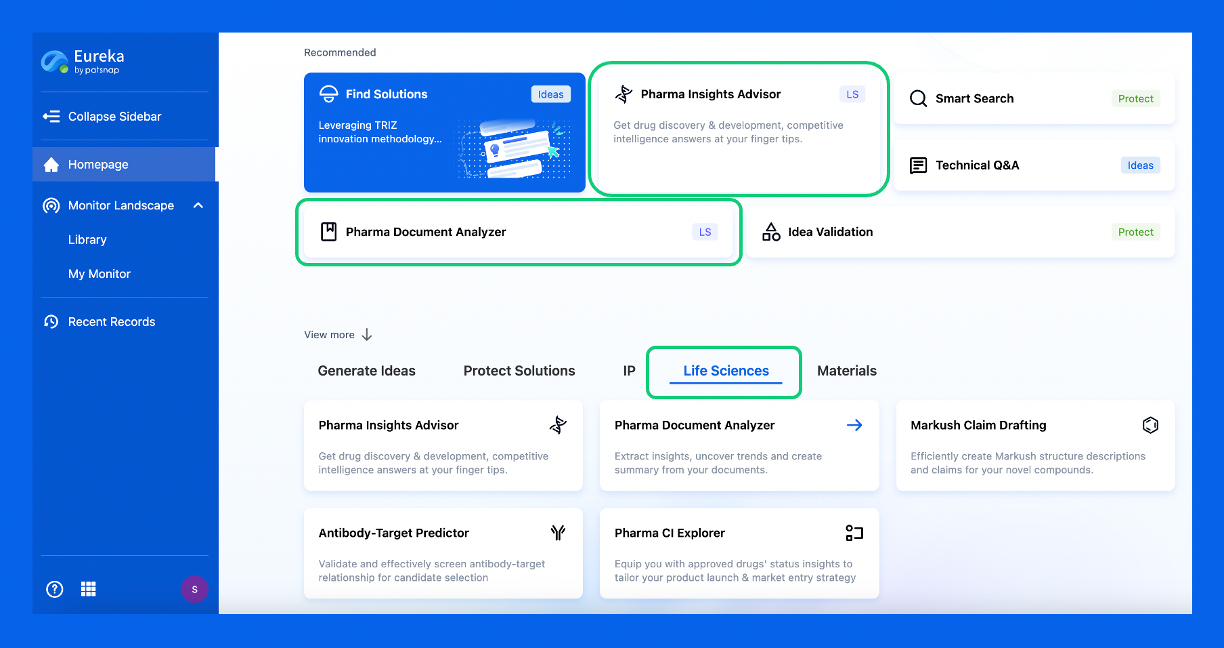

Discover Eureka LS: AI Agents Built for Biopharma Efficiency

Stop wasting time on biopharma busywork. Meet Eureka LS - your AI agent squad for drug discovery.

▶ See how 50+ research teams saved 300+ hours/month

From reducing screening time to simplifying Markush drafting, our AI Agents are ready to deliver immediate value. Explore Eureka LS today and unlock powerful capabilities that help you innovate with confidence.