
Last update 24 Jan 2026

OpenAI OpCo LLC


Literature (Medical) associated with OpenAI OpCo LLC

01 Sep 2020 · IEEE Transactions on Neural Networks and Learning Systems · Q1 · Computer Science

Teacher–Student Curriculum Learning


Article

Authors: Taco Cohen; John Schulman; Tambet Matiisen; Avital Oliver

We propose Teacher-Student Curriculum Learning (TSCL), a framework for automatic curriculum learning in which the Student tries to learn a complex task and the Teacher automatically chooses subtasks from a given set for the Student to train on. We describe a family of Teacher algorithms that rely on the intuition that the Student should practice more on the tasks where it makes the fastest progress, i.e., where the slope of the learning curve is highest. In addition, the Teacher algorithms address the problem of forgetting by also choosing tasks where the Student's performance is getting worse. We demonstrate that TSCL matches or surpasses the results of carefully hand-crafted curricula in two tasks: addition of decimal numbers with long short-term memory (LSTM) networks and navigation in Minecraft. Our automatically ordered curriculum of submazes enabled the Student to solve a Minecraft maze that could not be solved at all when training directly on that maze, and learning was an order of magnitude faster than with uniform sampling of those submazes.
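The Teacher heuristic described in the abstract (prefer subtasks whose learning-curve slope is steepest, and also revisit subtasks whose score is falling) can be illustrated with a short Python sketch. It is a hypothetical, simplified illustration, not the authors' implementation: the class name `SlopeTeacher`, the exponential moving average used to estimate each slope (`alpha`), the epsilon-greedy exploration (`epsilon`), and the toy Student in `run_demo` are all assumptions made for this example.

```python
import random
from typing import List, Optional


class SlopeTeacher:
    """Hypothetical Teacher that picks the subtask with the steepest learning curve.

    Assumptions for this sketch (not taken from the source): each slope is an
    exponential moving average of score changes, and exploration is epsilon-greedy.
    """

    def __init__(self, num_tasks: int, alpha: float = 0.1,
                 epsilon: float = 0.1, seed: int = 0) -> None:
        self.alpha = alpha          # smoothing factor for the slope estimate
        self.epsilon = epsilon      # probability of picking a random subtask
        self.rng = random.Random(seed)
        self.slope: List[float] = [0.0] * num_tasks
        self.last_score: List[Optional[float]] = [None] * num_tasks

    def choose_task(self) -> int:
        # Occasionally explore so that every subtask's slope estimate gets updated.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.slope))
        # Use |slope|: fast progress (positive) and forgetting (negative) both count.
        magnitudes = [abs(s) for s in self.slope]
        return magnitudes.index(max(magnitudes))

    def update(self, task: int, score: float) -> None:
        # The score change since this subtask was last practiced approximates its slope.
        prev = self.last_score[task]
        if prev is not None:
            delta = score - prev
            self.slope[task] = self.alpha * delta + (1 - self.alpha) * self.slope[task]
        self.last_score[task] = score


def run_demo(steps: int = 200) -> List[int]:
    """Train a hypothetical toy Student and count how often each subtask is chosen."""
    rates = [0.01, 0.05, 0.2]   # assumed per-subtask learning speeds
    scores = [0.0, 0.0, 0.0]
    teacher = SlopeTeacher(num_tasks=3, epsilon=0.2, seed=42)
    counts = [0, 0, 0]
    for _ in range(steps):
        task = teacher.choose_task()
        # Practicing a subtask moves its score part of the way toward 1.0.
        scores[task] += rates[task] * (1.0 - scores[task])
        teacher.update(task, scores[task])
        counts[task] += 1
    return counts


if __name__ == "__main__":
    print(run_demo())
```

Taking the absolute value of the slope lets a single rule cover both cases in the abstract: a steep positive slope (fast progress) and a steep negative slope (forgetting) both make a subtask attractive. Exploration matters because a subtask's slope estimate only changes when that subtask is actually practiced.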


News (Medical) associated with OpenAI OpCo LLC

09 Jan 2026

Eli Lilly is partnering with Chai Discovery, an AI drug design startup valued at $1.3 billion, to gain access to its core platforms, Chai 1 and Chai 2, and to build a Lilly-exclusive model for early-stage drug discovery and development. Under Friday's deal, Chai said it will use Lilly's data to build an AI model tailored to Lilly’s discovery workflows. The pharma giant also plans to use Chai's existing platform to design therapeutics for multiple targets.

The collaboration removes some of the mystery surrounding the startup's commercial strategy. After announcing its $130 million Series B in December, Chai declined to say whether it planned to partner with pharma, sign co-development deals or advance its own programmes, saying only that it would share more in due course. In Friday's announcement, Chai CEO Josh Meier said, "Beyond providing access to our core models, training custom models trained on Lilly’s data presents the opportunity to expand the boundaries of AI-enabled early-stage drug discovery and development."

The Chai 1 platform, unveiled in 2024, can predict the structure of molecules crucial for drug development, analysing a range of substances such as proteins, small molecules, DNA and RNA. Chai 2 debuted in June and can design antibodies fully from scratch with a hit rate of nearly 20%, far surpassing the 0.1% rate seen with some computational models.

Backed by the likes of OpenAI, Oak HC/FT and General Catalyst, Chai has raised nearly $230 million to date. Oak HC/FT co-founder Annie Lamont said that "drug discovery is one of the areas that stands to benefit most from AI transformation, and Chai is the leader in building frontier models to reshape the category."

Lilly's all in on AI

Separately, more companies have got on board with Lilly's AI and machine learning platform, TuneLab, which gives them access to drug discovery models trained on the company's internal research data.
According to Chief Scientific Officer Daniel Skovronsky, the platform is meant to be an "equaliser so that smaller companies can access some of the same AI capabilities used every day by Lilly scientists." The company has inked a string of new deals with Benchling, BigHat Biosciences, Schrödinger and Revvity. Alongside TuneLab, the drugmaker last year teamed up with NVIDIA to build what it has described as the industry’s most powerful AI supercomputer for drug discovery, designed to train models on millions of experiments and feed them back into internal platforms. Lilly has framed that work as a shift from using AI as a standalone tool to hard-wiring it into how drugs are discovered and developed.

20 Dec 2025

Welcome back to Endpoints Weekly! Before we get into this week’s headlines, here’s a holiday programming note: We won’t be sending our Saturday newsletter on Dec. 27 or Jan. 3. Our daily newsletters will also go dark between Dec. 24 and Jan. 1 — but our reporters will continue to bring you news on our website, so be sure to check in!

It’s almost the end of the year, and that means it’s time for our newsroom to weigh in on the pharma industry’s highs and lows of 2025. Check out the team’s recap here, or watch our event for an inside look at how the list came together. We also reported this week on the Trump administration’s latest “most favored nation” deals, an AI biotech’s $130 million raise, and the rise of investigator-initiated trials in China.

Our team will be on the ground at the JP Morgan conference next month. Whether you’re heading to San Francisco in person or tuning in remotely, you can sign up for our event here. Until then, happy holidays! Thanks so much for spending your Saturdays with us this year. See you in 2026!

Nicole DeFeudis

🤝 Amgen, Boehringer Ingelheim, Bristol Myers Squibb, Genentech, GSK, Gilead, Merck, Novartis and Sanofi are the latest companies to announce drug pricing deals with the Trump administration. A senior administration official predicted major reductions in Medicaid prices as a result of the latest deals, which Zachary Brennan detailed here.

All of the companies have also agreed that any new products will launch at MFN-based prices. The White House plans to launch a new government site early next year, known as TrumpRx, to help patients purchase drugs directly at discounted rates.

President Donald Trump sent letters to 17 drugmakers in July, calling for them to implement MFN pricing. Pfizer, Eli Lilly, Novo Nordisk, AstraZeneca and EMD Serono have also struck deals with the White House. The latest companies are expected to receive a three-year reprieve from tariffs, in line with other recently announced deals.

📋 Investigator-initiated trials (IITs) have grown increasingly popular among researchers in China, and Western drugmakers working on genetic medicines want in, Endpoints’ Lei Lei Wu and Ryan Cross reported. The small, cheap, quick trials have played a major part in China’s rise as a competitive force in cell and gene therapy.

Unlike a traditional clinical trial, which requires approval from national regulators, an IIT may only need clearance from the Chinese research hospital running the study. IITs also benefit from looser manufacturing requirements. And while drug regulators often require companies to monitor patients for long periods before ramping up to higher doses, the turnaround is shorter in an IIT.

IITs can’t be used to win approval. But they can help companies see whether their medicines are promising, or allow them to “fail fast,” Imviva Biotech CEO Lu Han told Endpoints. They can also help attract new backing. EsoBiotec used an IIT to treat its first multiple myeloma patient in November 2024 and quickly showed that its therapy could keep the blood cancer at bay. Just months later, AstraZeneca said it planned to buy the company for $425 million.

“Everybody in biotech is reevaluating how they work with China,” said Emile Nuwaysir, CEO of Stylus Medicine, a recently launched genetic medicine startup based in Cambridge, MA. He said Stylus is considering a China IIT for its first clinical trial.

How will the US respond?

FDA Commissioner Marty Makary has expressed skepticism about trials conducted in China. He recently suggested that companies with early-stage studies outside the US might have to pay a higher user fee. Meanwhile, some US biotech investors have called for the FDA to follow China’s model, allowing for decentralized approvals for first-in-human trials.

Read more here from Ryan and Lei Lei.

☕ An ambitious AI biotech startup pulled in some new cash this week, effectively giving it unicorn status. Chai Discovery closed a $130 million Series B at a $1.3 billion valuation, continuing the furious fundraising pace it has kept up since its founding last year. The round was co-led by Oak HC/FT and General Catalyst. Other investors included Thrive Capital, OpenAI and Dimension Capital.

Chai publicly launched in 2024 with a $30 million seed round carrying a $150 million valuation. The company then closed a $70 million Series A round this August at a $550 million valuation and is now taking another sizable jump just four months later.

Despite the speedy venture rounds, Chai has been slower to disclose specifics on its business plans. In previous interviews with Endpoints News, its co-founders have declined to say whether they plan to build an internal pipeline of drugs or focus narrowly on selling software or services to the pharma industry. This week’s announcement suggests the former Endpoints 11 winner may be leaning toward the software and/or services route.

Vivek Ramaswamy, the former Roivant CEO and presidential candidate, appeared on the board of a private chronic pain biotech this week. Ramaswamy, now running for governor of Ohio in next year’s election, is a co-founder of, investor in and board member at Ambros Therapeutics. Ambros raised $125 million and hopes to begin a Phase 3 study in 2026 for its lead program, neridronate, in complex regional pain syndrome type 1 (CRPS-1).

In somewhat classic Ramaswamy fashion, this isn’t neridronate’s first attempt at approval. The drug has been approved for CRPS in Italy for more than a decade, but German drugmaker Grünenthal discontinued two Phase 3 studies in 2019. The new attempt harks back to the business model Ramaswamy established at Roivant: taking older, less expensive drugs and running new, better studies for them. There are currently no FDA-approved treatments for CRPS.

Biotech correspondent Kyle LaHucik reported this week that Areteia Therapeutics, one of the biotech industry’s biggest recent startup bets in respiratory illness, will wind down operations. Its top executives appear to have left the company, and three Phase 3 studies were listed as terminated on the federal clinical trials registry. Areteia had lined up as much as $425 million in funding from investors such as Bain Capital Life Sciences, ARCH Venture Partners, Access Biotechnology, GV and Population Health Partners.

Phase 3 · Acquisition · Drug Approval

19 Dec 2025

Today, a brief rundown of news from Novo Nordisk and Merck & Co., as well as updates from Pfizer, Camp4 Therapeutics and Chai Discovery that you may have missed.

Novo Nordisk on Thursday said it filed an application for U.S. approval of CagriSema, its weekly injectable for obesity. Earlier this year, the Danish drugmaker disappointed investors when the drug, a combination of an amylin-targeting medicine and semaglutide, missed the mark in a closely watched late-stage study. Still, its application is based on data from that trial, which found that patients shed an average of 23% of their body weight over 68 weeks, as well as on a secondary study. Novo hopes to position CagriSema as a follow-up to the success of Wegovy and to blunt the threat from its competitor Eli Lilly, which recently submitted an application for approval of its oral GLP-1 drug orforglipron. If approved, CagriSema would be the first amylin-targeting and GLP-1 combination on the market.

Gwendolyn Wu

Camp4 Therapeutics, an RNA-targeting biotechnology company, has inked a deal with GSK worth as much as $440 million to make medicines for neurodegenerative and kidney diseases. In a Thursday announcement, Camp4 said GSK would pay $17.5 million upfront and could pay tiered royalties if any programs that emerge from the collaboration are commercialized. The partnership is focused on antisense oligonucleotide drugs that boost gene expression, which could benefit patients with genetic disorders caused by loss of function. Camp4 also announced the pricing of a $30 million public stock offering on Thursday.

Gwendolyn Wu

A San Francisco-based AI drugmaker, Chai Discovery, has raised $130 million in a Series B financing to support its ambitions of speeding up the drugmaking process. The company claims its technology can predict how biochemical molecules interact with one another and find new ways to pursue hard-to-drug targets. Its investor syndicate included Oak HC/FT and General Catalyst, which co-led the round, as well as others such as Thrive Capital, OpenAI and Yosemite. Chai raised its $70 million Series A just four months ago.

Gwendolyn Wu

A combination of Merck & Co.’s immunotherapy Keytruda and the Pfizer/Astellas antibody-drug conjugate Padcev succeeded in another bladder cancer study, helping people who are otherwise eligible for chemotherapy live longer than those who got chemo, the companies said Wednesday. Trial enrollees had muscle-invasive bladder cancer that hadn’t spread outside the bladder and were undergoing surgery with curative intent. Participants were randomized to receive either Keytruda and Padcev before and after surgery or platinum-based chemo before surgery. Trial investigators then measured event-free survival, overall survival and remission rates. Beyond stating that the Keytruda-Padcev combination had a statistically significant and clinically meaningful benefit on those three measures, the companies didn’t release specifics, but said they will disclose data at an upcoming medical meeting. The combination has also improved survival for people who can’t receive chemotherapy as well as for those whose disease has spread beyond the bladder.

Jonathan Gardner

Adaptive Biotechnologies said Monday it has signed two non-exclusive licenses with Pfizer to use its T cell receptor technology to discover potential therapeutic targets in people with rheumatoid arthritis. Under one deal, Adaptive will lead target discovery activities and Pfizer will be responsible for development and commercialization of any drugs that emerge from the research. Adaptive said that deal includes an unspecified upfront payment and makes it eligible for additional payments tied to specific data delivery, development, regulatory and commercial goals, for a potential total of $890 million. The second agreement will give Pfizer access to Adaptive’s T cell receptor-antigen dataset to accelerate its own research and drug discovery. That deal involves an undisclosed upfront payment and annual licensing fees.

Jonathan Gardner

License out/in · Immunotherapy · Clinical Study

100 Deals associated with OpenAI OpCo LLC

Login to view more data

100 Translational Medicine associated with OpenAI OpCo LLC

Login to view more data

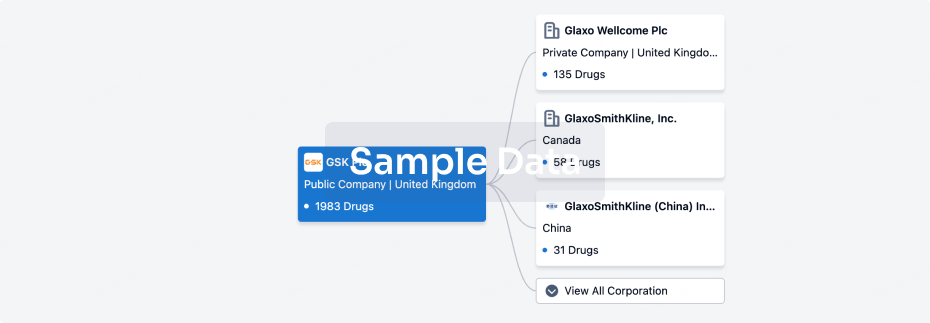

Corporation Tree

Boost your research with our corporation tree data.

login

or

Pipeline

Pipeline Snapshot as of 05 Feb 2026

No data posted

Login to keep update

Deal

Boost your decision using our deal data.

login

or

Translational Medicine

Boost your research with our translational medicine data.

login

or

Profit

Explore the financial positions of over 360K organizations with Synapse.

login

or

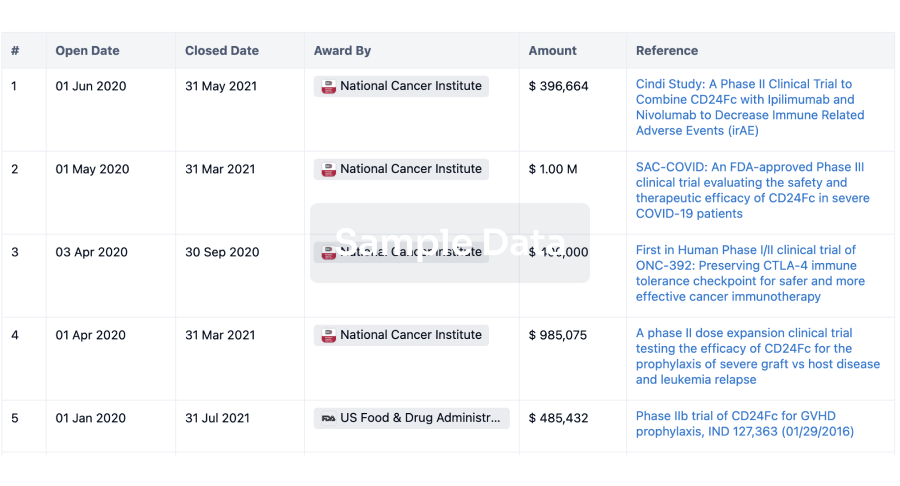

Grant & Funding(NIH)

Access more than 2 million grant and funding information to elevate your research journey.

login

or

Investment

Gain insights on the latest company investments from start-ups to established corporations.

login

or

Financing

Unearth financing trends to validate and advance investment opportunities.

login

or

AI Agents Built for Biopharma Breakthroughs

Accelerate discovery. Empower decisions. Transform outcomes.

Get started for free today!

Accelerate Strategic R&D decision making with Synapse, PatSnap’s AI-powered Connected Innovation Intelligence Platform Built for Life Sciences Professionals.

Start your data trial now!

Synapse data is also accessible to external entities via APIs or data packages. Empower better decisions with the latest in pharmaceutical intelligence.

Bio

Bio Sequences Search & Analysis

Sign up for free

Chemical

Chemical Structures Search & Analysis

Sign up for free