What is the mechanism of 2LALERG?

18 July 2024

2LALERG, or Two-Level Adaptive Learning with Error-Regulated Gradient, is an optimization technique in machine learning and artificial intelligence. It refines gradient descent by combining adaptive learning-rate strategies with error regulation. To understand how it works, it helps to look at its core components and how they interact.

At the heart of 2LALERG is two-level adaptive learning. The first level operates at the macro level: the algorithm adjusts the overall learning rate based on the performance it observes across multiple training iterations. This controls the pace of learning. A rate that is too high can make training diverge, while one that is too low, or that shrinks too early, can cause the model to settle prematurely on a poor solution. By tuning the rate dynamically, the algorithm balances exploration (larger steps that search more of the loss landscape) against exploitation (smaller steps that refine the current solution), which is important for good final performance.
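
The article does not specify the exact macro-level rule. As a minimal sketch, assuming a simple heuristic (grow the rate slightly when the loss has fallen over a window of recent iterations, cut it sharply otherwise), a global learning-rate controller could look like this. The class name `GlobalLRController` and all constants are hypothetical.

```python
class GlobalLRController:
    """Hypothetical macro-level controller for a single global learning rate.

    Assumed rule: look at the loss over the last `window` iterations; if it
    fell, grow the rate slightly; if it stalled or rose, cut it sharply.
    """

    def __init__(self, lr=0.1, window=5, grow=1.05, shrink=0.5,
                 min_lr=1e-6, max_lr=1.0):
        self.lr = lr
        self.window = window
        self.grow = grow
        self.shrink = shrink
        self.min_lr = min_lr
        self.max_lr = max_lr
        self.history = []  # losses from the most recent iterations

    def update(self, loss):
        """Record the latest loss and return the global rate to use next."""
        self.history.append(loss)
        if len(self.history) > self.window:
            self.history.pop(0)
        if len(self.history) == self.window:
            if self.history[-1] < self.history[0]:
                # Progress over the window: speed up cautiously.
                self.lr = min(self.lr * self.grow, self.max_lr)
            else:
                # No progress: slow down to avoid divergence, then start a
                # fresh window so one bad stretch is not penalized repeatedly.
                self.lr = max(self.lr * self.shrink, self.min_lr)
                self.history.clear()
        return self.lr
```

In a training loop, `update(loss)` would be called once per iteration and the returned rate passed to the optimizer. The asymmetric grow/shrink factors reflect the concern in the text: speeding up too aggressively risks divergence, so increases are small and decreases are large.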

The second level of adaptation operates at the micro level, on individual parameters within the model. Here, the learning rate of each parameter is adjusted according to that parameter's own gradients. This targeted adaptation allows more precise updates, which matters when some parameters need much larger adjustments than others. By responding to the needs of each parameter separately, the algorithm achieves a more nuanced and effective learning process.
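
The per-parameter rule is not spelled out either. The sketch below swaps in an RMSprop-style scheme, a well-known per-parameter method, purely as a stand-in: each parameter's step is the global rate divided by the square root of a running average of its own squared gradients. The function name `per_parameter_step` and its defaults are illustrative assumptions.

```python
import math


def per_parameter_step(params, grads, sq_avg, global_lr, beta=0.9, eps=1e-8):
    """One RMSprop-style update, used here as a stand-in for the micro level.

    Each parameter keeps a running mean of its squared gradients; its step is
    global_lr / (sqrt(mean) + eps) times its gradient, so parameters with
    persistently large gradients take proportionally smaller steps.
    Returns (new_params, new_sq_avg).
    """
    new_params, new_sq = [], []
    for p, g, s in zip(params, grads, sq_avg):
        s = beta * s + (1 - beta) * g * g        # update running mean of g^2
        rate = global_lr / (math.sqrt(s) + eps)  # this parameter's own rate
        new_params.append(p - rate * g)
        new_sq.append(s)
    return new_params, new_sq
```

For example, with params `[1.0, 1.0]`, grads `[10.0, 0.1]`, zero-initialized averages and `global_lr=0.01`, both parameters move by about 0.0316 (that is, 0.01 / sqrt(0.1)) on the first step, even though their gradients differ by a factor of 100. The per-parameter scaling evens out step sizes across parameters.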

The error-regulated gradient component adds a further safeguard. Standard gradient descent updates parameters using the computed gradients alone, without checking whether each update actually reduces the error. 2LALERG instead evaluates the effect of each gradient update on overall model performance. If an update substantially increases the error, the algorithm can respond by lowering the learning rates or by reverting the update entirely. This keeps training stable and prevents drastic changes that would degrade performance.
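
One simple way to realize this "evaluate, then revert" behavior is to apply a step tentatively, recompute the loss, and roll back with a smaller rate if the loss rose by more than a tolerance. This closely resembles backtracking line search. It is an assumed interpretation rather than a documented part of 2LALERG, and `error_regulated_step`, `tol`, `shrink`, and `max_retries` are hypothetical names.

```python
def error_regulated_step(params, grad_fn, loss_fn, lr, tol=0.0, shrink=0.5,
                         max_retries=5):
    """Take a gradient step, but undo it if the loss rises by more than tol.

    Hypothetical error-regulation rule: try the step, re-evaluate the loss,
    and on failure retry from the original parameters with a smaller rate.
    Returns (params, lr, loss) for whichever state is kept.
    """
    base_loss = loss_fn(params)
    grads = grad_fn(params)
    for _ in range(max_retries):
        candidate = [p - lr * g for p, g in zip(params, grads)]
        new_loss = loss_fn(candidate)
        if new_loss <= base_loss + tol:
            return candidate, lr, new_loss  # accept the update
        lr *= shrink                        # reject: shrink the rate and retry
    return list(params), lr, base_loss      # all retries failed: keep old params
```

On f(x) = x² starting from x = 1 with `lr=1.5`, the first trial step lands at x = -2 (loss 4), which is rejected; the retry with `lr=0.75` lands at x = -0.5 (loss 0.25) and is accepted.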

In practice, 2LALERG monitors training continuously, tracking both the global (model-wide) and local (per-parameter) effects of each update. This ongoing monitoring informs its decisions about when to adjust learning rates and when to apply error regulation. Together, these adaptive strategies keep the learning process responsive to the data and to the model's changing performance.
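
Putting the pieces together, a single training loop might look like the following. It is a self-contained sketch in which every rule is an assumption: RMSprop-style per-parameter scaling stands in for the micro level, a reject-and-halve check stands in for error regulation, and a windowed loss-trend rule stands in for the macro level. The function `train_2lalerg_sketch` and its constants are hypothetical.

```python
import math


def train_2lalerg_sketch(loss_fn, grad_fn, params, steps=100, lr=0.1,
                         beta=0.9, eps=1e-8, tol=0.0, window=5,
                         grow=1.05, shrink=0.5):
    """Hypothetical loop combining the three mechanisms the article names.

    Every rule and constant here is an illustrative assumption:
      * micro level: RMSprop-style per-parameter scaling,
      * error regulation: reject any step that raises the loss by > tol,
      * macro level: adjust the global rate from the loss trend over a window.
    Returns the final parameters and their loss.
    """
    params = list(params)
    sq_avg = [0.0] * len(params)   # running mean of squared gradients
    loss = loss_fn(params)
    recent = [loss]                # losses of recently accepted steps
    for _ in range(steps):
        grads = grad_fn(params)

        # Micro level: per-parameter step sizes from gradient history.
        new_sq = [beta * s + (1 - beta) * g * g for s, g in zip(sq_avg, grads)]
        candidate = [p - lr * g / (math.sqrt(s) + eps)
                     for p, g, s in zip(params, grads, new_sq)]
        new_loss = loss_fn(candidate)

        # Error regulation: revert (keep the old state) and shrink the rate.
        if new_loss > loss + tol:
            lr *= shrink
            continue

        params, sq_avg, loss = candidate, new_sq, new_loss

        # Macro level: once the window is full, grow the global rate if the
        # loss fell across the window, otherwise shrink it.
        recent.append(loss)
        if len(recent) > window:
            recent.pop(0)
            lr = lr * grow if recent[-1] < recent[0] else lr * shrink
    return params, loss
```

On a toy quadratic such as (x - 3)² + (y + 2)² starting from (0, 0), the loss never increases from one accepted step to the next with the default `tol=0.0`, because the error check rejects any step that would raise it. The price is extra loss evaluations, which in a real model means extra forward passes.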

In summary, 2LALERG combines two levels of adaptive learning with an error-regulated gradient to make training more efficient and effective. By adjusting learning rates at both the macro and micro levels and checking each update against the error it produces, it provides a robust framework for training machine learning models. Because it adapts to the particular challenges of different datasets and model architectures, it is intended to improve both performance and generalization.

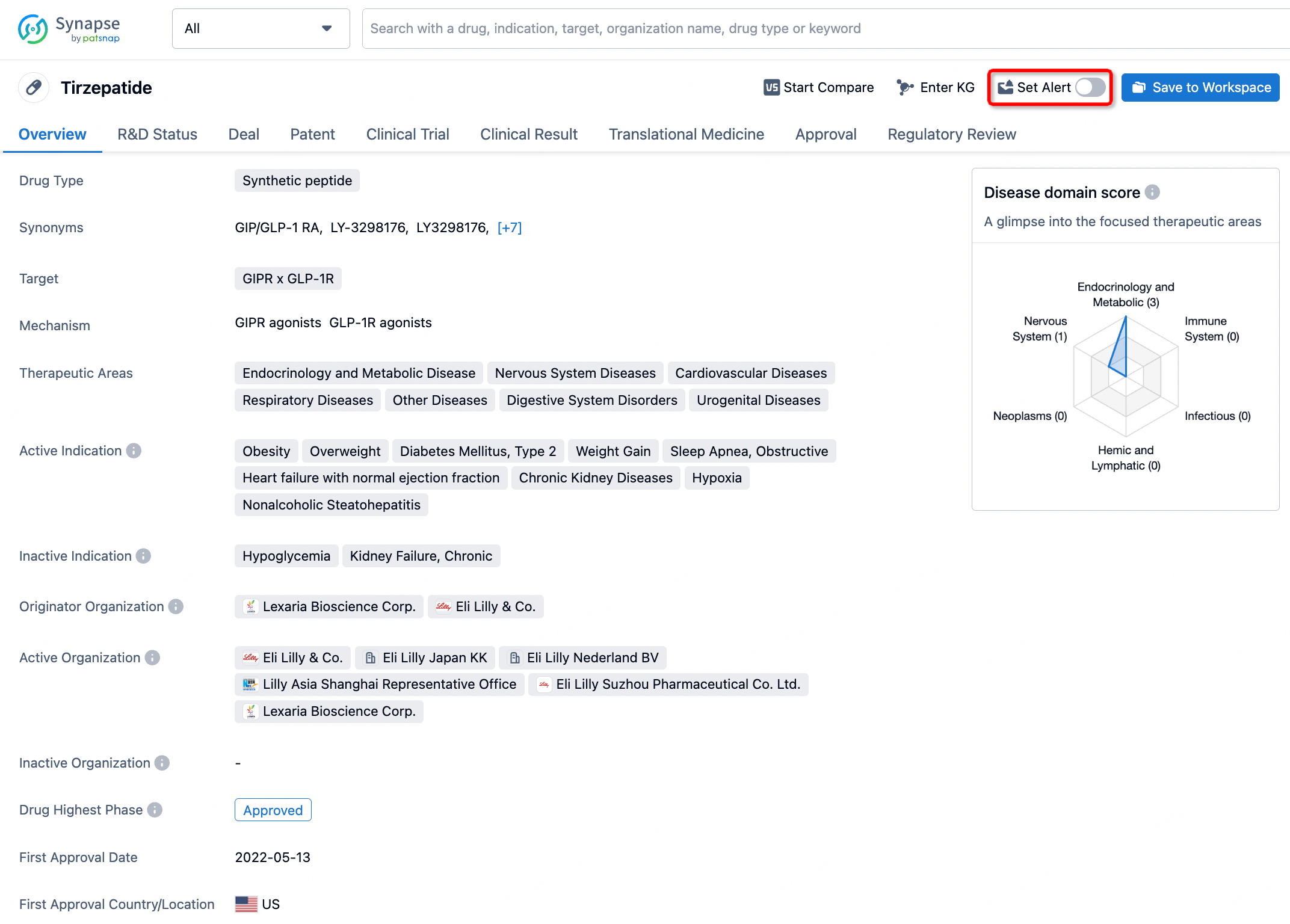

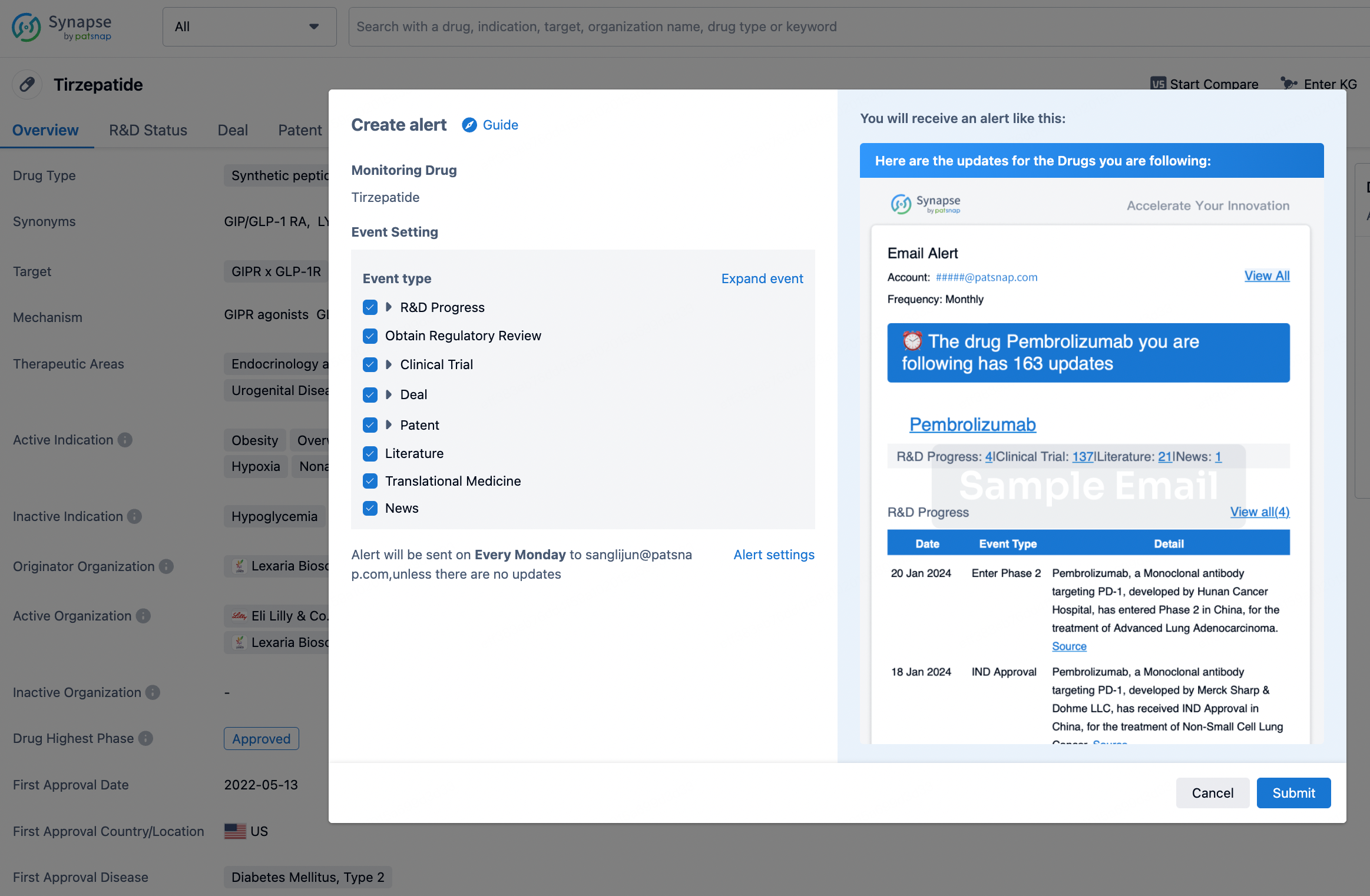
