Last update 09 Sep 2025

Thammasat University Hospital

44 Clinical Trials associated with Thammasat University Hospital

NCT06943053

Does Ioband Coverage Waterproof Dressing Provide Better Outcome Than Isolate Waterproof Dressing After Primary Total Knee Arthroplasty? A Prospective Randomized Controlled Trial

Title: Evaluation of Ioband® Coverage Waterproof Dressing in Post-Operative Total Knee Arthroplasty (TKA)

Goal: To evaluate the effectiveness of Ioband® coverage waterproof dressing compared to standard waterproof dressing in reducing dressing change frequency and peel-off degree post-op TKA.

Main Research Questions:

1. Does Ioband® coverage waterproof dressing significantly decrease the degree of peel-off compared to standard waterproof dressing?

2. Does Ioband® coverage reduce the number of wound dressing changes required post-operatively?

3. Does Ioband® coverage improve overall patient satisfaction compared to standard waterproof dressing?

Participants:

Participants will include patients who have undergone total knee arthroplasty (TKA).

Main Tasks and Interventions:

1. Randomization: Participants will be randomly assigned to receive either the Ioband® coverage waterproof dressing or the standard waterproof dressing.

2. Application of Dressings: Participants will have the assigned dressing applied to their surgical site post-operatively.

3. Assessment of Peel-Off Degree: Participants will undergo assessments to evaluate the degree of peel-off of the dressing over a specified time.

4. Wound Dressing Changes: Participants will have their dressing changed as per routine care protocols, with documentation of the number of changes.

5. Patient Satisfaction Survey: Participants will complete a satisfaction survey to assess their experiences with the dressing and overall comfort.

Conclusion: The trial aims to provide insights into the benefits of Ioband® coverage waterproof dressing in improving post-operative care for TKA patients, focusing on key outcomes related to dressing performance and patient satisfaction.

Start Date: 06 Apr 2025

NCT06910930

Impact of Muscle Training Device on Non-Severe OSA: A Randomized Controlled Study

The goal of this clinical trial is to test the efficacy of the newly invented device DidgeriTU in patients with non-severe obstructive sleep apnea. The main question it aims to answer is:

• Can DidgeriTU reduce apnea events in patients with non-severe obstructive sleep apnea?

Researchers will compare DidgeriTU with a sham device to see how the number of apnea events changes.

Participants will:

* Use DidgeriTU or a sham device for 3 months

* Complete an online questionnaire once a month during the study

* Undergo a home sleep test, a lung function test, and a tongue strength test at the start and end of the study

Start Date: 01 Apr 2025

NCT06901362

Seroprevalence and Vaccination Rate of Hepatitis B Virus and Varicella-zoster Virus Among Medical Students Under Immunizing Program

This study is a cross-sectional study. The study aims to determine the levels of immunity against hepatitis B virus and varicella-zoster virus in medical students.

Start Date: 01 Apr 2025

Sponsor / Collaborator: Thammasat University [+1]

509 Literatures (Medical) associated with Thammasat University Hospital

01 Oct 2025·Annals of Physical and Rehabilitation Medicine

Effectiveness of a 3D-printed silicone medial arch support on foot pain in individuals with pes planus: A randomized controlled trial

Article

Author: Channasanon, Somruethai ; Tesavibul, Passakorn ; Choosawad, Nayada ; Paecharoen, Siranya ; Tanodekaew, Siriporn ; Ngamsopasirisakul, Kan

BACKGROUND:

Pes planus, a foot deformity that causes foot pain and functional limitations, is often treated with custom-made foot orthoses as a conservative approach to managing symptoms. In this study, silicone medial arch orthotics produced by 3D printing technology were evaluated for their ability to reduce pain and improve foot function, compared to conventional Total Contact Insoles (TCI).

OBJECTIVES:

To assess the effectiveness of 3D-printed orthoses made from soft and hard silicone by evaluating primary outcomes (pain and foot function) and secondary outcomes (plantar pressure, heel valgus angle, and satisfaction), in comparison to those of TCI.

METHODS:

A total of 78 participants were randomized into 3 groups: soft and hard 3D-printed arch supports, and TCI. Outcomes were assessed at baseline and 2, 6, and 12 weeks after orthotic wear using various tools, including numeric pain scales, Foot Function Index (FFI) questionnaires, plantar pressure measurements, goniometric measurements of heel valgus angle, and satisfaction surveys.

RESULTS:

All 3 groups showed a significant reduction in pain after a 12-week intervention (P < 0.001), with no significant difference between orthotic types. FFI demonstrated progressive and comparable improvement in all groups, with an advantage for TCI. Redistribution of plantar pressure was observed, with no significant difference between the orthoses. The heel valgus angle showed no significant change from baseline in all groups. High satisfaction scores (over 80 %) were achieved for all groups, with no significant differences between them.

CONCLUSION:

The 3D-printed arch supports are as effective as TCI in reducing foot pain and improving foot function in participants with pes planus. They represent a viable alternative, but without demonstrated superiority.

TRIAL REGISTRATION:

Australian New Zealand Clinical Trials Registry (ACTRN12624000330549).

03 Aug 2025·Journal of Surgical Case Reports

When stroke uncovers a hidden cardiac mass: LVOT papillary fibroelastoma in an elderly Thai male

Article

Author: Witoonchart, Kan ; Sae-Li, Porntita ; Hannarong, Juthamas ; Nana, Ruj

Abstract:

Papillary fibroelastoma (PFE) is a rare, benign cardiac tumor usually arising from the heart valves, whereas non-valvular involvement is uncommon. We report herein a case of a 71-year-old Asian male who developed an ischemic stroke during hospitalization for gastrointestinal bleeding. A stroke workup led to the incidental detection of a cardiac mass in the left ventricular outflow tract, later confirmed as PFE following surgical excision without complications. This case highlights the importance of cardiac imaging in patients with cryptogenic stroke and supports early surgical excision to prevent recurrent embolic events.

01 Aug 2025·Sleep and Breathing

Poor sleep quality is a predictor of severe hypoglycemia during comprehensive diabetes care in type 1 diabetes

Article

Author: Tharavanij, Thipaporn ; Likitmaskul, Supawadee ; Dejkhamron, Prapai ; Deerochanawong, Chaicharn ; Santiprabhob, Jeerunda ; Reutrakul, Sirimon ; Nitiyanant, Wannee ; Rawdaree, Petch

Abstract:

Purpose:

Sleep disturbances are common in type 1 diabetes (T1D) and can be associated with poor glycemic control, and possibly hypoglycemia. This study aimed to investigate whether poor sleep quality, as assessed by the Pittsburgh Sleep Quality Index (PSQI), was associated with glycemic control or severe hypoglycemia in T1D individuals.

Methods:

This one-year prospective cohort study included 221 (148 F/63 M) T1D participants (aged ≥ 13 years), receiving intensive insulin therapy. A1C levels were obtained at baseline and during the 12-month follow-up. Incidences of diabetic ketoacidosis (DKA) and severe hypoglycemia were collected.

Results:

The mean age of participants was 21.4 ± 8.9 years, with a baseline A1C of 9.27 ± 2.61%. Poor sleep quality was reported in 33.0% of participants. A1C levels improved over the one-year follow-up, but there was no significant difference in A1C reduction between those with good vs. poor sleep quality (-0.42 ± 1.73 vs. -0.42 ± 1.67, P = 0.835), nor in DKA incidence (P = 0.466). However, participants with poor sleep quality experienced more severe hypoglycemia (SH) episodes (6.53 (2.45–17.41) vs. 0 per 100 person-years, P = 0.01). After adjusting for age, body mass index, and glucose monitoring, each one-point increase in PSQI score was associated with a higher severe hypoglycemia risk (OR 1.31, 95%CI 1.13–1.52). Poor sleep quality predicted an increased risk of severe hypoglycemia (OR 24.54, 95%CI 1.31-459.29).

Conclusion:

Poor sleep quality is common in T1D individuals and is a risk factor for incident severe hypoglycemia. These findings highlight the importance of incorporating sleep assessment into routine T1D care, particularly in relation to hypoglycemia risk, and the need for targeted interventions to improve sleep quality in T1D individuals.
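To make the per-point odds ratio in the Results easier to interpret, the following is a minimal sketch of how an odds ratio of 1.31 per PSQI point compounds over a larger score difference. It assumes the log-odds of severe hypoglycemia are linear in the PSQI score, which is the usual reading of a per-unit odds ratio from a logistic model but is not stated in the abstract. The function name and the example score differences (1, 3, and 5 points) are illustrative choices, not values from the study.

```python
import math

# Per-point odds ratio quoted in the abstract (adjusted estimate).
OR_PER_POINT = 1.31


def odds_ratio_for_difference(or_per_point: float, points: float) -> float:
    """Odds ratio implied by a `points`-unit score difference.

    Assumption (not from the abstract): log-odds are linear in the score,
    so per-point odds ratios multiply.
    """
    return math.exp(points * math.log(or_per_point))


# Hypothetical score differences, chosen only for illustration.
for points in (1, 3, 5):
    implied = odds_ratio_for_difference(OR_PER_POINT, points)
    print(f"PSQI difference of {points}: implied OR about {implied:.2f}")
```

These implied values are arithmetic consequences of the stated assumption, not additional findings, and they ignore the uncertainty expressed by the reported confidence interval.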

1 News (Medical) associated with Thammasat University Hospital

03 Nov 2024

Results from Novo Nordisk, presented at ObesityWeek®, showed treatment with semaglutide injection 2.4 mg resulted in significant reductions in hospital admissions as well as overall time spent in the hospital.[1]

Cardiovascular disease represents the leading cause of death globally,[2] and adults with obesity are at a higher risk of developing cardiovascular disease.[3]

The analysis is based on SELECT, a large cardiovascular outcomes trial that evaluated the effect of semaglutide 2.4 mg on the risk of MACE (heart attack, stroke, or death) in adults with overweight or obesity and known heart disease.[4]

PLAINSBORO, N.J., Nov. 3, 2024 /PRNewswire/ -- Today, Novo Nordisk presented an exploratory post hoc analysis from the SELECT phase 3 cardiovascular outcomes trial that showed semaglutide 2.4 mg significantly reduced hospital admissions and overall length of hospital stay for adults with obesity or overweight with established cardiovascular disease (CVD) and without diabetes.[1] The results were presented during an oral session at the annual ObesityWeek® conference and provide further insights based on data from SELECT, a large cardiovascular outcomes trial that evaluated the effect of semaglutide 2.4 mg on the risk of MACE (heart attack, stroke, or death) in this population.

CVD covers a wide range of conditions that, when combined, represent the leading cause of death globally and are associated with substantial healthcare costs.[2,5] Obesity directly increases the risk of CVD, including heart attack and stroke, while also contributing to the progression of other cardiovascular (CV) risk factors including elevated blood pressure and cholesterol.[3,5] Two in three patients with overweight or obesity die from CVD.[6]

"People with obesity or overweight with established cardiovascular disease (CVD) and without diabetes are more likely to be admitted to the hospital for events like heart attack or stroke, contributing to reduced patient well-being, higher use of healthcare resources, and disease burden," said Dr. Steven E. Kahn, M.D., Ch.B., Division of Metabolism, Endocrinology and Nutrition, Department of Medicine, VA Puget Sound Health Care System and University of Washington, Seattle. "In the SELECT trial, this cohort of patients had a high rate of hospital admissions, but for those given once-weekly semaglutide 2.4 mg, we observed significant reductions in hospital admissions and overall time they spent in the hospital. We are pleased to have this analysis that further examines the effects of semaglutide."

According to this SELECT analysis, a lower percentage of patients taking semaglutide 2.4 mg experienced a first hospital admission for any indication versus placebo (33.4 vs 36.7%, hazard ratio [HR] 0.89 [0.84, 0.93], p<0.0001) and for serious adverse events (30.3 vs 33.4%, HR 0.88 [0.84, 0.93], p<0.0001).[1] In addition, the number of total hospitalizations was lower in the semaglutide 2.4 mg group versus placebo for all indications (18.3 vs 20.4 admissions per 100 patient years, HR 0.90 [0.85, 0.95], p=0.0002) and for serious adverse events (15.2 vs 17.1 admissions per 100 patient years, HR 0.89 [0.84, 0.94], p<0.0001).[1] The number of days hospitalized per 100 patient years was lower in the semaglutide 2.4 mg group for all hospitalizations (157.2 vs 176.2 days, risk ratio [RR] 0.89 [0.82, 0.98], p=0.01) and for hospitalizations related to serious adverse events (137.6 vs 153.9 days, RR 0.89 [0.81, 0.98], p=0.02).[1] The widths of the confidence intervals have not been adjusted for multiplicity and therefore the confidence intervals and p-values should not be used to infer definitive treatment effects for this exploratory post hoc analysis. Semaglutide is not approved in the U.S. for hospitalization-related outcomes.
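As a rough sanity check on the figures above, the sketch below divides each semaglutide rate per 100 patient years by the corresponding placebo rate and prints the result next to the ratio quoted in the release. The crude ratio is only an approximation: the published estimates presumably come from statistical models that this simple division does not reproduce, and the dictionary labels and function name are illustrative, not taken from the source.

```python
# (semaglutide rate, placebo rate, ratio quoted in the release),
# all per 100 patient years, copied from the paragraph above.
reported = {
    "admissions, all indications": (18.3, 20.4, 0.90),
    "admissions, serious adverse events": (15.2, 17.1, 0.89),
    "days in hospital, all hospitalizations": (157.2, 176.2, 0.89),
    "days in hospital, serious adverse events": (137.6, 153.9, 0.89),
}


def crude_ratio(treated_rate: float, control_rate: float) -> float:
    """Unadjusted ratio of two rates; not a model-based estimate."""
    return treated_rate / control_rate


crude = {
    name: crude_ratio(treated, control)
    for name, (treated, control, _) in reported.items()
}

for name, (_, _, quoted) in reported.items():
    print(f"{name}: crude ratio {crude[name]:.3f}, quoted {quoted:.2f}")
```

Agreement between the crude and quoted ratios shows only that the quoted rates and ratios are internally consistent; it says nothing about statistical significance or about the multiplicity caveat stated in the release.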

Safety data collection in the SELECT trial was limited to serious adverse events (including death), adverse events leading to discontinuation, and adverse events of special interest.[4,7] In the SELECT trial, the proportion of patients for whom serious adverse events were reported was 33.4% in patients randomized to semaglutide 2.4 mg and 36.4% of patients receiving placebo.[4] Sixteen percent (16%) of semaglutide 2.4 mg-treated patients and 8% of placebo-treated patients, respectively, discontinued study drug due to an adverse event.[7] The most common adverse event leading to discontinuation was gastrointestinal disorders, occurring in 10% of patients in the semaglutide 2.4 mg group and 2% in the placebo group.[4]

"We are pleased to continue building on the strong foundation of SELECT trial data that demonstrated the effectiveness of semaglutide 2.4 mg in lowering CV risk in patients with obesity and established cardiovascular disease, and to continue our ongoing commitment to improve the lives of people facing serious chronic diseases," said Michelle Skinner, PharmD, Vice President, Medical Affairs at Novo Nordisk. "This new SELECT analysis represents another step forward, exploring how semaglutide 2.4 mg impacted repeat hospitalizations and prolonged hospital stays, which are two pressing issues in terms of healthcare cost and quality."

About the SELECT trial

SELECT (Semaglutide Effects on Cardiovascular Outcomes in People with Overweight or Obesity) was a multicenter, randomized, double-blind, placebo-controlled, event-driven superiority trial designed to evaluate the efficacy of semaglutide 2.4 mg versus placebo as an adjunct to cardiovascular standard of care for reducing the risk of major adverse cardiovascular events in people with established CVD with overweight or obesity with no prior history of diabetes.[4]

The trial, initiated in 2018, enrolled 17,604 adults and was conducted in 41 countries at more than 800 investigator sites.[4]

About Novo Nordisk

Novo Nordisk is a leading global healthcare company that's been making innovative medicines to help people with diabetes lead longer, healthier lives for more than 100 years. This heritage has given us experience and capabilities that also enable us to drive change to help people defeat other serious chronic diseases such as obesity, rare blood, and endocrine disorders. We remain steadfast in our conviction that the formula for lasting success is to stay focused, think long-term, and do business in a financially, socially, and environmentally responsible way. With U.S. headquarters in New Jersey and commercial, production, and research facilities in seven states plus Washington DC, Novo Nordisk employs approximately 8,000 people throughout the country. For more information, visit novonordisk-us.com, Facebook, Instagram, X, LinkedIn, and YouTube.

References:

1. Nicholls SJ, Ryan D, Deanfield J, et al. Semaglutide Reduces Hospital Admissions in Patients with Obesity or Overweight and Established CVD. Presented at ObesityWeek® 2024, Nov 3, 2024.

2. World Health Organization. Cardiovascular diseases (CVDs). Accessed October 31, 2024.

3. Powell-Wiley TM, Poirier P, Burke LE, et al. Obesity and cardiovascular disease: a scientific statement from the American Heart Association. Circulation. 2021;143(21):e984-e1010. doi:10.1161/CIR.0000000000000973.

4. Lincoff AM, Brown-Frandsen K, Colhoun HM, et al. Semaglutide and cardiovascular outcomes in obesity without diabetes. N Engl J Med. 2023;389:2221-2232.

5. Reiter-Brennan C, Dzaye O, Davis D, et al. Comprehensive care models for cardiometabolic disease. Curr Cardiol Rep. 2021;23(3):22. Published 2021 Feb 24. doi:10.1007/s11886-021-01450-1.

6. The GBD 2015 Obesity Collaborators. Health effects of overweight and obesity in 195 countries over 25 years. N Engl J Med. 2017;377(1):13-27. doi:10.1056/nejmoa1614362.

7. Wegovy® (semaglutide) injection 2.4 mg Prescribing Information. Plainsboro, NJ: Novo Nordisk Inc.

© 2024 Novo Nordisk All rights reserved. US24SEMO01281 November 2024

SOURCE NOVO NORDISK INC.

Clinical Result · Phase 3 · AHA

100 Deals associated with Thammasat University Hospital

Login to view more data

100 Translational Medicine associated with Thammasat University Hospital

Login to view more data

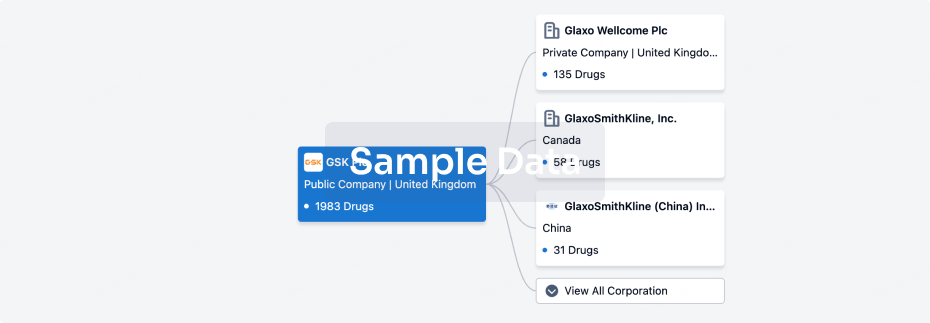

Corporation Tree

Boost your research with our corporation tree data.

login

or

Pipeline

Pipeline Snapshot as of 07 Jan 2026

No data posted

Login to keep update

Deal

Boost your decision using our deal data.

login

or

Translational Medicine

Boost your research with our translational medicine data.

login

or

Profit

Explore the financial positions of over 360K organizations with Synapse.

login

or

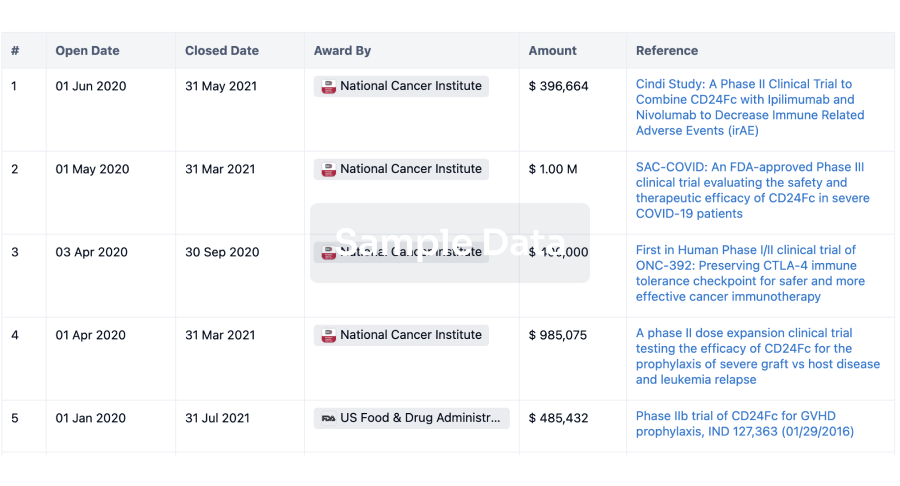

Grant & Funding(NIH)

Access more than 2 million grant and funding information to elevate your research journey.

login

or

Investment

Gain insights on the latest company investments from start-ups to established corporations.

login

or

Financing

Unearth financing trends to validate and advance investment opportunities.

login

or

AI Agents Built for Biopharma Breakthroughs

Accelerate discovery. Empower decisions. Transform outcomes.

Get started for free today!

Accelerate Strategic R&D decision making with Synapse, PatSnap’s AI-powered Connected Innovation Intelligence Platform Built for Life Sciences Professionals.

Start your data trial now!

Synapse data is also accessible to external entities via APIs or data packages. Empower better decisions with the latest in pharmaceutical intelligence.

Bio

Bio Sequences Search & Analysis

Sign up for free

Chemical

Chemical Structures Search & Analysis

Sign up for free