Last update 09 Sep 2025

Georgetown University in Qatar

Overview

Tags

Nervous System Diseases

Neoplasms

Small molecule drug

Diagnostic radiopharmaceuticals

Disease domain score

A glimpse into the focused therapeutic areas

Technology Platform

Most used technologies in drug development

Targets

Most frequently developed targets

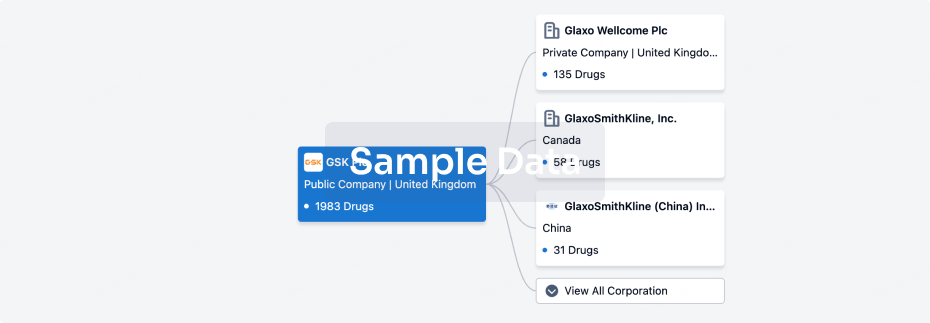

Pipeline

Pipeline Snapshot as of 27 Jan 2026

Pipeline drug statistics count the current organization together with its subsidiaries. Early Phase 1 is included in Phase 1, Phase 1/2 in Phase 2, and Phase 2/3 in Phase 3.

Preclinical: 1

Phase 1: 1

Other: 3

Deal

Boost your decision using our deal data.

login

or

Translational Medicine

Boost your research with our translational medicine data.

login

or

Profit

Explore the financial positions of over 360K organizations with Synapse.

login

or

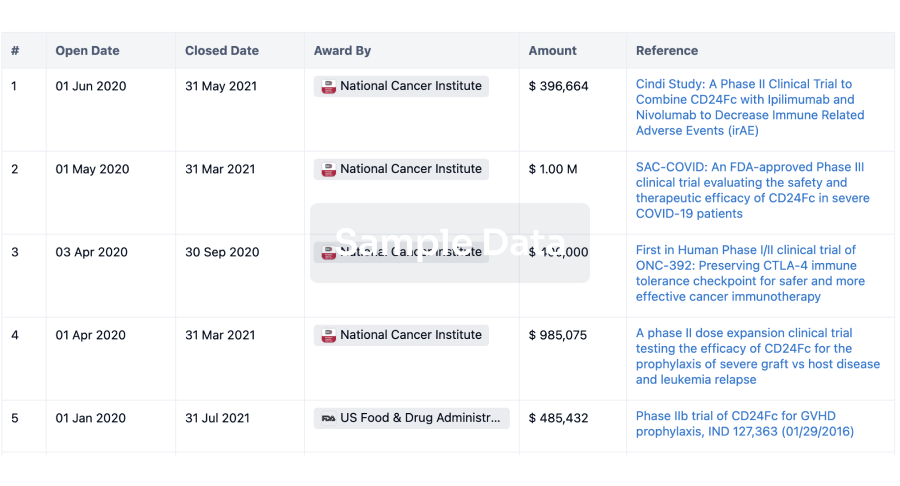

Grant & Funding(NIH)

Access more than 2 million grant and funding information to elevate your research journey.

login

or

Investment

Gain insights on the latest company investments from start-ups to established corporations.

login

or

Financing

Unearth financing trends to validate and advance investment opportunities.

login

or
