
Last update 30 Oct 2025

Gilead Sciences, Inc.


Overview

Tags

Neoplasms

Respiratory Diseases

Immune System Diseases

Small molecule drug

Antibody drug conjugate (ADC)

Monoclonal antibody


| Top 5 Drug Type | Count |
|---|---|
| Small molecule drug | 101 |
| Monoclonal antibody | 13 |
| Autologous CAR-T | 10 |
| Chemical drugs | 7 |
| Unknown | 4 |

Related

170 Drugs associated with Gilead Sciences, Inc.

| Mechanism | Highest Phase | First Approval Ctry. / Loc. | First Approval Date |
|---|---|---|---|
| PPARδ agonists | Approved | United States | 14 Aug 2024 |
| HIV-1 capsid inhibitors | Approved | European Union [+3] | 17 Aug 2022 |
| PD-1 inhibitors | Approved | China | 25 Aug 2021 |

1,379 Clinical Trials associated with Gilead Sciences, Inc.

NCT07178730

NeoAdjuvant Dynamic Marker - Adjusted Personalized Therapy Comparing Sacituzumab Govitecan+Pembrolizumab vs. SoC Chemotherapy in Clinical Stage II-III, Triple-negative Early Breast Cancer

TNBC is a heterogeneous disease with distinct pathological, genetic, and clinical features among subtypes. Treatment outcomes for high-risk primary TNBC remain poor compared with other breast cancer subtypes. Preoperative (neoadjuvant) chemotherapy (NACT) is the standard of care for patients with stage II or III primary TNBC. Multiple lines of clinical evidence demonstrate that TNBC patients who achieve a pathological complete response (pCR; ypT0/is ypN0) to NACT have an excellent long-term prognosis. A meta-analysis of individual patient data confirmed a strong association between pCR after NACT and improved long-term event-free survival (EFS; hazard ratio [HR] 0.24) and overall survival (OS; HR 0.16). Taxane- and anthracycline-based neoadjuvant regimens generally result in pCR rates of 25% to 50% [REFs], whereas the addition of platinum increases pCR rates to approximately 50% to 55%.

The KEYNOTE-522 trial demonstrated that adding the immune-checkpoint inhibitor pembrolizumab (PEM) to anthracycline- (AC), taxane-, and platinum-based NACT significantly increased pCR rates to nearly 65%, with a significant reduction in recurrences (EFS HR 0.65 at 5 years) and improved OS (HR 0.66). Based on these results, the KEYNOTE-522 regimen has been approved by the FDA and EMA and has become the standard of care for patients with stage II or III TNBC.

Despite this significant progress, two major questions remain unresolved; the ADAPT-TN-IV trial will investigate both:

1. Do all patients require the full 6 months of NACT as per KEYNOTE-522 or is there a subgroup of patients who are sufficiently treated with 12 weeks of NACT plus PEM?

2. Can incorporating antibody-drug conjugates (ADCs) into the KEYNOTE-522 regimen improve response and outcomes in patients without an optimal early response? Outcomes for patients with residual disease after 24 weeks of NACT and PEM remain suboptimal, and more effective strategies are urgently needed. ADCs such as sacituzumab govitecan (SG) have demonstrated superior efficacy compared with standard chemotherapy in metastatic TNBC, with substantially higher response rates and improved progression-free survival (PFS) and OS. Combination studies of ADCs and immunotherapy in metastatic TNBC have shown significant activity, suggesting possible synergy. It is therefore a logical next step to investigate whether incorporating SG into the NACT regimen can improve pCR rates and EFS in patients who have residual clinical disease after 12 weeks of NACT with carboplatin/paclitaxel (CARBO/PAC) + PEM.


Start Date: 31 Jan 2026

NCT07218211

INCLUSION - Enhancing PrEP Uptake and Retention Among Latine Transgender Women and Gay, Bisexual, and Other Men Who Have Sex With Men in the South Using Long-Acting Injectable PrEP

The purpose of this project is to test a culturally tailored, community-delivered long-acting injectable PrEP (lenacapavir) program for Latine gay and bisexual men (GBM) and transgender women (TGW). The objective is to evaluate whether this intervention achieves greater persistence on lenacapavir among Latine GBM and TGW than has been observed historically at the Duke PrEP Clinic.

Start Date: 05 Jan 2026

Sponsor / Collaborator: Duke University [+1]

NCT07031219

Syndemic Triple HIV/HBV/HCV Virus Screening Via EMR Automation at Primary Care Clinics

Design:

This will be a randomized controlled cross-over trial testing different order panels for screening blood tests for human immunodeficiency virus (HIV), hepatitis B virus (HBV), and hepatitis C virus (HCV). Study participants will be primary care providers. Participating providers will be randomized to either a control arm (no changes to currently available orders) or a triple-testing order panel intervention arm, in which screening tests for all three bloodborne viruses (BBVs) are selected by default whenever a provider attempts to order a screening test for at least one BBV through the study order panels. These order panels will be triggered only if the provider orders a BBV screening test based on their normal practice and the standard of care for their patient. Providers will see which orders are selected before signing (finalizing) them; therefore, this study will be unblinded. To mitigate the effect of unblinding, providers will switch arms halfway through the study, defined as 6 months or the point at which a total of 1000 patients have BBV test orders, whichever comes first.
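The arm-switching rule in this design (cross over at 6 months or once 1000 patients have BBV test orders, whichever comes first) can be expressed as a small decision function. This is a hypothetical sketch, not study software: the arm labels, the function names, and the 182-day stand-in for "6 months" are all assumptions made for illustration.

```python
SIX_MONTHS_DAYS = 182  # assumption: "6 months" approximated as 182 days
PATIENT_CAP = 1000  # total patients with BBV test orders, per the design text
ARMS = ("control", "triple_testing")  # hypothetical arm labels


def crossover_reached(days_since_start: int, patients_with_bbv_orders: int) -> bool:
    """True once either halfway criterion is met, whichever comes first."""
    return days_since_start >= SIX_MONTHS_DAYS or patients_with_bbv_orders >= PATIENT_CAP


def current_arm(initial_arm: str, days_since_start: int, patients_with_bbv_orders: int) -> str:
    """Arm a provider is in now: the randomized arm, swapped after crossover."""
    if initial_arm not in ARMS:
        raise ValueError(f"unknown arm: {initial_arm}")
    if crossover_reached(days_since_start, patients_with_bbv_orders):
        # Each provider moves to the other arm for the second half of the study
        return ARMS[1] if initial_arm == ARMS[0] else ARMS[0]
    return initial_arm


print(current_arm("control", 120, 1000))  # patient cap reached before 6 months
```

Here the last line prints `triple_testing`, because the patient cap is reached before the time limit; because both criteria are checked on every call, whichever threshold is hit first triggers the switch.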

Outcomes/endpoints:

The investigators will compare the incidence of HIV, HBV, and HCV diagnoses between the two arms, estimate the number of cases missed by not triple-testing, estimate laboratory costs per arm, and measure patient encounters per arm.


Start Date: 01 Jan 2026

100 Clinical Results associated with Gilead Sciences, Inc.


0 Patents (Medical) associated with Gilead Sciences, Inc.


2,048 Literature (Medical) associated with Gilead Sciences, Inc.

01 Jan 2026 · JOURNAL OF PHARMACEUTICAL AND BIOMEDICAL ANALYSIS

Unraveling the bridging region of Lenacapavir atropisomers by two-dimensional liquid chromatography

Article

Author: Carr, Gavin ; Pereira, Alban R ; Adhikari, Sarju ; Foti, Chris ; Shen, Yi

Atropisomers are stereoisomers that arise from restricted bond rotation and undergo dynamic conformational change depending on time and temperature. The interconversion of atropisomers can produce a non-baseline "plateau" or "bridging area" between the isomer peaks in liquid chromatography (LC), which diminishes chromatographic resolution and reduces assurance of the analyte's purity. Conventional one-dimensional LC (1D-LC) separation relies on one or two major interactions among the analyte, mobile phase, and stationary phase in an analytical column, which may not be sufficient for deciphering the bridging area. In contrast, two-dimensional LC (2D-LC) leverages orthogonal separation mechanisms in two dimensions, significantly enhancing peak capacity to resolve co-eluting impurities and ensure peak purity. Herein, we present a comprehensive 2D-LC study of the on-column interconversion of Lenacapavir (LEN), which exists as a mixture of two atropisomers. First, different columns, temperatures, mobile phase pH values, and cation additives were investigated using 1D-LC to assess their potential effects on the on-column interconversion of LEN atropisomers. The bridging area of LEN atropisomers was then unraveled using the heart-cutting mode in 2D-LC, revealing varying ratios of the two atropisomers across the retention time window of LEN. Furthermore, orthogonal conditions for the second dimension were developed to disentangle possible impurities in the bridging area, using a regioisomer as a surrogate. Lastly, and most importantly, by implementing the developed second-dimension methods, a co-eluting regioisomer of LEN could be separated using 2D-LC. With the aid of high-resolution mass spectrometry (HRMS), we were able to distinguish the co-eluting regioisomer down to 0.05% (w/w).

31 Dec 2025 · HIV Research & Clinical Practice

Effects of antiretroviral resistance on outcomes and health care resource utilisation among people with HIV in the United States and Europe: a real-world survey

Article

Author: Chen, Megan ; Ambler, Will ; Park, Seojin ; Trom, Cassidy ; Jones, Hannah ; Holbrook, Tim ; Zachry, Woodie ; Christoph, Mary J. ; Hennessy, Fritha

Background: Despite advances in antiretroviral therapy (ART), resistance remains a barrier to effective HIV treatment. Objective: This study evaluated associations between ART drug resistance and treatment adherence, health care resource utilisation (HCRU), and quality of life (QoL) among people with HIV. Methods: A retrospective, observational study was conducted using the Adelphi HIV Disease Specific Programme™ (DSP) between 2021 and 2023 across the United States and Europe. Data were collected via physician surveys, patient record forms, and patient self-completion forms. Results: Data for 2,006 people with HIV and resistance testing were contributed by 290 physicians, and 586 people with HIV provided patient data. Overall, 286 people with HIV (14%) had documented resistance. People with HIV with resistance had received more ART regimens than those without resistance (p < 0.0001) and had lower viral suppression rates (p = 0.004) and lower CD4 counts (p = 0.032). People with HIV with resistance reported lower treatment adherence (p = 0.017) but similar QoL compared with those without resistance. People with HIV with resistance also had significantly more HIV-related hospitalisations than those without resistance (p = 0.022). Conclusions: ART resistance was associated with higher HCRU and poorer health outcomes in people with HIV, underscoring the need for continued focus on adherence and resistance management.

31 Dec 2025 · JOURNAL OF MEDICAL ECONOMICS

Treatment persistence among treatment-experienced people with HIV switching to integrase strand transfer inhibitor–based antiretroviral regimens

Article

Author: Chen, Megan ; de Boer, Melanie ; Trom, Cassidy ; Chuo, Ching-Yi ; Christoph, Mary J. ; Zachry, Woodie

AIMS:

Low rates of persistence are associated with poor outcomes in people with HIV (PWH). This retrospective cohort study assessed treatment persistence as measured by time to treatment switch in treatment-experienced (TE) PWH initiating integrase strand transfer inhibitor (INSTI)-based regimens.

METHODS:

United States prescription claims and medical history data from the IQVIA Longitudinal Access and Adjudication Dataset between January 1, 2018, and August 31, 2023, were analyzed. TE PWH with ≥1 prescription claim for initiation of an INSTI-based antiretroviral regimen during the index period (January 1, 2020, to December 31, 2022) were included. Descriptive analyses of demographic and comorbidity variables were performed, stratified by regimen. Kaplan-Meier analysis was used to evaluate time to subsequent treatment switch in the overall population and among PWH aged ≥50 years, those receiving Medicare, and those with mental health conditions or substance use disorders.

RESULTS:

Overall, 29,348 TE PWH were included. The majority of INSTI-based regimen initiations were for bictegravir/emtricitabine/tenofovir alafenamide (B/F/TAF) (61%; n = 17,917), followed by dolutegravir/lamivudine (27.4%; n = 8034) and cabotegravir + rilpivirine (7.4%; n = 2186). A subsequent switch occurred in 3341 (11.4%) PWH. Risk factors for switch included female sex, Medicare/Medicaid coverage, and higher Charlson Comorbidity Index scores. B/F/TAF was associated with the fewest subsequent switches (9.2%; n = 1656) and was significantly less likely than any other regimen to lead to a subsequent switch, either in the overall population or in the three at-risk subgroups.

LIMITATIONS:

No data are available to determine the underlying reasons for initial treatment choice or subsequent treatment switch.

CONCLUSIONS:

This study provides evidence for greater treatment persistence with B/F/TAF versus other INSTI-based regimens. Unlike prior studies that focused on treatment-naïve individuals, this analysis uniquely evaluates persistence among treatment-experienced PWH initiating newer INSTI-based regimens.
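The METHODS of this study evaluate time to subsequent treatment switch with Kaplan-Meier analysis. The following is a minimal, hypothetical sketch of the product-limit estimator, not the authors' code: it assumes each person contributes one follow-up duration plus a flag marking whether a switch was observed (1) or the record was censored (0), and the function name and toy inputs are illustrative only.

```python
from collections import Counter


def kaplan_meier(durations, events):
    """Kaplan-Meier (product-limit) estimate of the probability of no switch yet.

    durations: follow-up time per person (e.g., days since regimen start)
    events: 1 if a treatment switch was observed at that time, 0 if censored
    Returns a list of (time, survival) pairs at each time with at least one switch.
    """
    if len(durations) != len(events):
        raise ValueError("durations and events must have the same length")
    switches = Counter(t for t, e in zip(durations, events) if e)  # switches per time
    exits = Counter(durations)  # everyone leaving the risk set per time
    at_risk = len(durations)
    survival = 1.0
    curve = []
    for t in sorted(exits):
        d = switches[t]  # Counter returns 0 for missing keys
        if d:
            # Multiply by the conditional probability of not switching at t
            survival *= 1.0 - d / at_risk
            curve.append((t, survival))
        # People censored at t still count as at risk at t, then leave the set
        at_risk -= exits[t]
    return curve


# Toy data (hypothetical): five people, three observed switches
print(kaplan_meier([1, 2, 2, 3, 4], [1, 1, 0, 1, 0]))
```

Keeping censored records in the risk set at their censoring time follows the usual convention; a real claims analysis would more likely rely on an established survival-analysis library and add confidence intervals and group comparisons.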

4,834 News (Medical) associated with Gilead Sciences, Inc.

29 Oct 2025

RIYADH, SAUDI ARABIA, October 29, 2025 /EINPresswire.com/ -- On day three of the Global Health Exhibition 2025, King Faisal Specialist Hospital and Research Centre (KFSHRC) signed a series of agreements at its pavilion, underscoring its commitment to high-impact partnerships that drive innovation, medical education, and biotherapeutics, aligned with its vision to be the provider of choice in specialized care.

To strengthen its role as a national reference center for biotherapies, KFSHRC signed an agreement with Roche to improve spending efficiency and stabilize supply chains for high-value biologic medicines, giving patients local access to advanced treatments that meet the highest quality standards. A framework agreement with Kite will expand the availability of advanced immunotherapies within KFSHRC facilities, reducing referrals abroad and improving cost efficiency.

KFSHRC also signed an MoU with US-based CellCo to accelerate AI- and bioengineering-enabled therapies, including a clinical study involving heparin, the first biologic anticoagulant, alongside collaboration on novel treatments for cancer, immune conditions, and chronic neurological disorders. An MoU with US-based Kopra Bio aims to speed the development and localization of manufacturing for innovative therapies and highlights the intent to explore a value-based partnership model that includes in-kind contributions from KFSHRC in exchange for equity participation at Kopra Bio.

In genomics, KFSHRC entered a framework agreement with Al-Jeel Medical to deploy advanced gene-sequencing in diagnostics and research. The work includes specialized panels in oncology, rare diseases, and pharmacogenomics to pinpoint disease-causing genetic factors and support personalized treatment plans that improve outcomes and quality of life.

KFSHRC also signed an MoU with Novartis covering clinical studies, patient support programs, continuing medical education, and innovative therapies. The agreement includes establishing a Center of Excellence for radioligand therapy and advanced nuclear medicine to transfer know-how and localize cutting-edge technologies for difficult-to-treat cancers, expanding access to the latest global treatments within the Kingdom.

To build local talent, an MoU with LEORON will deliver innovative training and professional development programs that embed global best practices and strengthen the competitiveness of Saudi healthcare professionals.

KFSHRC has been ranked first in the Middle East and North Africa and fifteenth globally among the world’s top 250 academic medical centers for 2025 and recognized by Brand Finance as the region’s most valuable healthcare brand. It is also listed among Newsweek’s World’s Best Hospitals 2025, Best Smart Hospitals 2026, and Best Specialized Hospitals 2026, reaffirming its leadership in innovation-driven care.

Riyadh

King Faisal Specialist Hospital and Research Centre


Legal Disclaimer:

EIN Presswire provides this news content "as is" without warranty of any kind. We do not accept any responsibility or liability for the accuracy, content, images, videos, licenses, completeness, legality, or reliability of the information contained in this article. If you have any complaints or copyright issues related to this article, kindly contact the author above.

Immunotherapy

28 Oct 2025

The AI-focused startup Genesis Therapeutics has a new name — and a new AI model it believes has best-in-class performance.

In an interview with Endpoints News, CEO Evan Feinberg said the new model, called Pearl (short for Placing Every Atom in the Right Location), outperformed Isomorphic Labs’ AlphaFold 3, Chai Discovery’s Chai-1, and other top AI models across several protein-ligand benchmarks.

Feinberg called predicting these structures “one of the most notoriously challenging problems to solve.” AlphaFold 3 has been seen as a leader in this space since its May 2024 debut.

“For the problem of modeling protein-ligand interactions, we’re confident that Pearl is the best model that exists today,” Feinberg said. (To be sure, the AlphaFold 3 release is now over a year old. Isomorphic is a “significant generation or two ahead internally in terms of what our models can do, so think like 4, 4.5, 5,” Isomorphic’s chief AI officer Max Jaderberg told Endpoints earlier this month.)

The Bay Area biotech has also changed its name to Genesis Molecular AI. Founded in 2019, Genesis has raised over $300 million, including a $200 million Series B in 2023. Feinberg was formerly a graduate student in Vijay Pande’s lab at Stanford, where he developed the physics-based machine learning models that became the basis for Genesis. The biotech now has roughly 130 employees and is advancing its own preclinical pipeline, with no disclosed timeline for entering the clinic, as well as partnerships with Gilead and Incyte.

Genesis detailed the claims about its model in a preprint posted Tuesday by more than 30 authors, including some Nvidia engineers. The paper used three metrics, including “Runs N’ Poses,” an academic benchmark whose creators found in February that models like AlphaFold 3 appear to largely memorize their training data. That raised the question of whether these models have seen enough data to actually learn and generalize.

Genesis’ Pearl achieved an 85% success rate on the Runs N’ Poses test, compared to 74% for AlphaFold 3, 74% for Boltz-1x, and 70% for Chai-1. The team also tested how these models fared against a higher bar of accuracy, defining success as predictions less than an angstrom away from the actual structure. (The standard test, on which Pearl scored 85%, looks for predictions within two angstroms.) Against that tougher metric, Pearl had a 70% success rate, compared to 62% with AlphaFold 3, 57% with Boltz-1x, and 56% with Chai-1.
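Both success rates quoted here are threshold metrics: the share of benchmark cases in which the predicted structure lies within a cutoff distance of the experimental one. A minimal sketch, assuming the per-case distance is a ligand RMSD in angstroms (the article does not name the exact measure) and using made-up values rather than benchmark data:

```python
def success_rate(errors_angstrom, threshold_angstrom):
    """Share of cases whose pose error (assumed: ligand RMSD in Å) is below threshold."""
    if not errors_angstrom:
        raise ValueError("need at least one prediction")
    hits = sum(1 for err in errors_angstrom if err < threshold_angstrom)
    return hits / len(errors_angstrom)


# Hypothetical per-case errors for five benchmark complexes (not real data)
errors = [0.4, 0.9, 1.5, 1.8, 3.2]
for cutoff in (2.0, 1.0):
    print(f"< {cutoff} Å: {success_rate(errors, cutoff):.0%}")
# prints "< 2.0 Å: 80%" then "< 1.0 Å: 40%"
```

Because every case under 1 Å is also under 2 Å, the stricter success rate can never exceed the standard one, which matches each model's pair of scores in the article (for example, Pearl's 70% versus 85%).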

The release of the preprint and model coincides with Nvidia’s AI conference in Washington, DC.

Feinberg credited Pearl’s outperformance to Genesis’ long-running focus on integrating more physics knowledge into its models.

That included taking a page from how Waymo developed its autonomous cars using synthetic data. Synthetic data are derived from experiments using physics and allowed Genesis’ models to see more examples, particularly in data-scarce areas, like small molecules in the Protein Data Bank.

“We are the first group to show any evidence of scaling law phenomena here, where we can generate more synthetic data with physics, pretrain the model with this data, get better performance and repeat,” Feinberg said, describing this idea as still being in the “early innings.”

The MIT research team behind Boltz has already extended its modeling to other protein design tasks, like predicting binding affinity and, most recently, generating new protein binders. Genesis’ preprint does not include Pearl’s performance in predicting binding affinity or potency, and Feinberg said that was beyond the scope of the paper. Having high-accuracy structures is a crucial first step toward tackling predictions like potency, Feinberg said.

“A lot of these co-folding models hallucinate, just like any other diffusion model or LLM,” Feinberg said. “They will make something that prima facie looks OK, but any expert looks at it, and is like, ‘That’s not quite right.’”

Editor’s note: A previous version of this story stated Pearl was compared to Chai-2. The story has been corrected to reflect Genesis compared its model to Chai-1.

Clinical Result

28 Oct 2025

Plus, news about Cybin, Incyte, Roche, Merck, Eisai, Neuphoria Therapeutics, Aldeyra Therapeutics and X4 Pharmaceuticals:

🏭 FDA issues CRL to Regeneron:

The drugmaker has officially been handed a complete response letter for its pre-filled syringe for Eylea HD. The company had warned in August that it expected regulatory delays after issues were found at a Catalent site in Bloomington, IN, that’s now owned by Novo Nordisk. The plant has since been issued an “official action indicated” classification. Another customer of the Indiana site, Scholar Rock, also received a CRL related to the plant.

— Anna Brown

🧪 AbbVie scraps asset licensed from Dragonfly Therapeutics:

The Chicago-area pharma giant terminated a Phase 1 test of the first asset that it licensed from Dragonfly. AbbVie started testing ABBV-303/DF4101 in 2024 in solid tumors. Dragonfly CEO Bill Haney told Endpoints News that the company’s other programs with AbbVie are ongoing. Dragonfly has multiple internal candidates in clinical testing as well as Phase 1 programs with Bristol Myers Squibb and Merck. It also has a preclinical pact with Gilead.

— Kyle LaHucik

💵 Cybin’s $175M direct offering:

The company is selling about 22.3 million shares at $6.51 apiece to several blue-chip investors, including Venrock Healthcare Capital Partners, OrbiMed and Point72. It’s developing a psychedelic called CYB003 as a treatment for major depressive disorder.

— Jaimy Lee

🛠️ Incyte halts work on two drugs:

Under new CEO Bill Meury, the drugmaker has paused development of its anti-CD122 candidate, called INCA034460, and its BET inhibitor, called INCB57643. The biotech also ended povorcitinib’s development in chronic spontaneous urticaria and hinted that more pipeline adjustments are in the works. “We are taking a deliberate approach to pipeline prioritization. We are actively reviewing our R&D efforts and focusing on high-value programs that are scientifically differentiated, address unmet medical needs, and have the potential to significantly drive Incyte’s next phase of growth,” Meury said in a release. Incyte also raised its revenue guidance for the year. It’s now in the range of $4.23 billion to $4.32 billion.

— Kyle LaHucik

🔬 Roche’s Gazyva succeeds in kidney disorder:

The drug met its primary endpoint in a Phase 3 study for idiopathic nephrotic syndrome, a rare autoimmune disease that affects the kidneys. Roche will head to the FDA to discuss next steps for the drug, but the timing is not yet clear. Gazyva was approved earlier this month for the treatment of active lupus nephritis.

— Max Gelman

💊 Merck, Eisai tout kidney cancer data:

The combination of Merck’s Welireg and Eisai’s Lenvima achieved a statistically significant improvement in progression-free survival over Exelixis’ Cabometyx in advanced renal cell carcinoma. Overall survival did not reach statistical significance, though Merck and Eisai noted this was an interim analysis. There was also a “trend toward improvement” on OS.

— Max Gelman

📈 Neuphoria Therapeutics adopts poison pill after Phase 3 fail:

The biotech’s board adopted the shareholder rights plan to “protect the interest of the company and its stockholders.” The move lowers the chances that a person or group will accumulate enough shares to control Neuphoria. The biotech’s social anxiety drug failed a Phase 3 trial last week, and the company decided to drop the asset, named BNC210. The biotech’s stock ($NEUP) was up almost 6% in premarket trading on Tuesday.

— Kyle LaHucik

💼 Aldeyra Therapeutics updates its pipeline:

The biotech discontinued work on ADX-629 in patients with Sjögren-Larsson Syndrome. It also said it expects to file INDs for a pair of RASP modulators sometime next year.

— Jaimy Lee

💰 X4 Pharmaceuticals ended up raising $155.3 million in its public offering, up from the $135 million it set out to raise.

— Jaimy Lee

Phase 3, Clinical Result, Phase 1, Drug Approval

100 Deals associated with Gilead Sciences, Inc.


100 Translational Medicine associated with Gilead Sciences, Inc.

Login to view more data

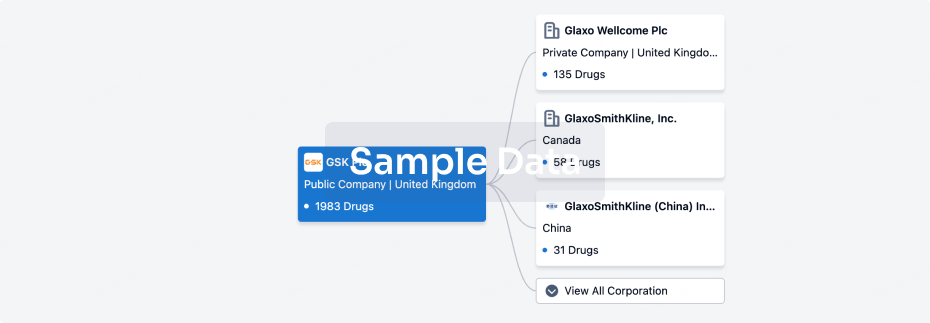

Corporation Tree

Boost your research with our corporation tree data.

login

or

Pipeline

Pipeline Snapshot as of 23 Feb 2026

Pipeline statistics count drugs of the current organization and its subsidiaries. Early Phase 1 is counted as Phase 1, Phase 1/2 as Phase 2, and Phase 2/3 as Phase 3.

| Phase | Drugs |
|---|---|
| Discovery | 13 |
| Preclinical | 66 |
| Phase 1 | 32 |
| Phase 2 | 16 |
| Phase 3 | 12 |
| Approved | 31 |
| Other | 208 |


Current Projects

| Drug (Targets) | Indications | Global Highest Phase |
|---|---|---|
| Sacituzumab govitecan-hziy (Top I x Trop-2) | Triple Negative Breast Cancer (and others) | Approved |
| Sofosbuvir (NS5B polymerase) | Hepatitis C, Chronic (and others) | Approved |
| Sofosbuvir/Velpatasvir (NS5A x NS5B polymerase) | Hepatitis C, Chronic (and others) | Approved |
| Ledipasvir/Sofosbuvir (NS5A x NS5B polymerase) | Chronic hepatitis C genotype 1 (and others) | Approved |
| Seladelpar (PPARδ) | Primary Biliary Cholangitis (and others) | Approved |

Login to view more data

Deal

Boost your decision using our deal data.

login

or

Translational Medicine

Boost your research with our translational medicine data.

login

or

Profit

Explore the financial positions of over 360K organizations with Synapse.

login

or

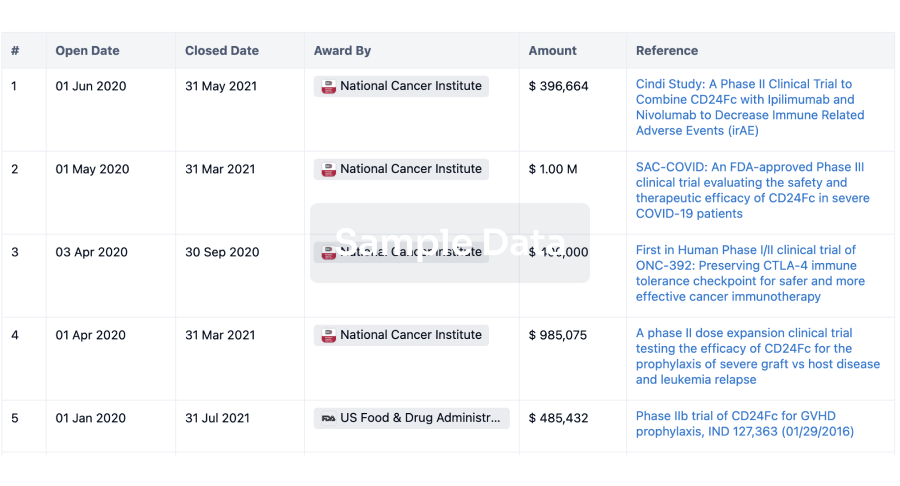

Grant & Funding(NIH)

Access more than 2 million grant and funding information to elevate your research journey.

login

or

Investment

Gain insights on the latest company investments from start-ups to established corporations.

login

or

Financing

Unearth financing trends to validate and advance investment opportunities.

login

or

AI Agents Built for Biopharma Breakthroughs

Accelerate discovery. Empower decisions. Transform outcomes.

Get started for free today!

Accelerate Strategic R&D decision making with Synapse, PatSnap’s AI-powered Connected Innovation Intelligence Platform Built for Life Sciences Professionals.

Start your data trial now!

Synapse data is also accessible to external entities via APIs or data packages. Empower better decisions with the latest in pharmaceutical intelligence.

Bio

Bio Sequences Search & Analysis

Sign up for free

Chemical

Chemical Structures Search & Analysis

Sign up for free